Abstract

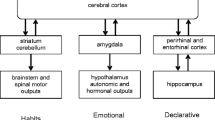

The computational resources of a neuromorphic network model introduced earlier were investigated in the first paper of this series. It was argued that a form of ubiquitous spontaneous local convolution enabled logical gate-like neural motifs to form into hierarchical feed-forward structures of the Hubel-Wiesel type. Here we investigate concomitant data-like structures and their dynamic rôle in memory formation, retrieval, and replay. The mechanisms give rise to the need for general inhibitory sculpting, and the simulation of the replay of episodic memories, well known in humans and recently observed in rats. Other consequences include explanations of such findings as the directional flows of neural waves in memory formation and retrieval, visual anomalies and memory deficits in schizophrenia, and the operation of GABA agonist drugs in suppressing episodic memories. We put forward the hypothesis that all neural logical operations and feature extractions are of the convolutional hierarchical type described here and in the earlier paper, and exemplified by the Hubel-Wiesel model of the visual cortex, but that in more general cases the precise geometric layering might be obscured and so far undetected.

Data Availability

No datasets were generated or analysed during the current study.

References

Ahmed, T. (2023). Bio-inspired artificial synapses: Neuromorphic computing chip engineering with soft biomaterials. Memories–Materials Devices Circuits and Systems. https://doi.org/10.1016/j.memori.2023.100088

Anderson, N., & Piccinini, G. (2024). The Physical Signature of Computation: A Robust Mapping Account. Oxford: Oxford University Press.

Bohm, D. (1951). Quantum Theory. Englewood Cliffs, N. J., USA: Prentice-Hall.

Craver, C. F. (2007). Explaining the Brain. Oxford: Oxford University Press.

Dempsey, W. P., Zhuowei, Du., Natcochiy, A., Smith, D. K., Czajkowski, A. A., Robson, D. N., Li, J. M., Applebaum, S., Truong, T. V., Kesselman, C., Fraser, S. E., & Arnold, D. B. (2022). Regional synapse gain and loss accompany memory formation in larval Zebrafish. PNAS, 3, e2107661119. https://doi.org/10.1073/pnas2107661119

Eisen, A. J., Kozachkov, L., Bastos, A. M., Donoghue, J. A., Mahnke, M. K., Brincat, S. L., Chandra, S., Tauber, J., Brown, E. N., Fiete, I. R., & Miller, E. K. (2024). Propofol anesthesia destabilizes neural dynamics across cortex. Neuron. https://doi.org/10.1016/j.neuron.2024.06.011

Fernandez-Ruiz, A., Sirota, A., Lopes-dos-Santos, V., & Dupret, D. (2023). Over and above frequency: Gamma oscillations as units of neural circuit operations. Neuron. https://doi.org/10.1016/j.neuron.2023.02.026

Fornito, A., Zalesky, A., & Bullmore, E. T. (2016). Fundamentals of Brain Network Analysis. Amsterdam: Academic Press.

Guo, J. Y., Ragland, J. D., & Carter, C. S. (2019). Memory and cognition in schizophrenia. Molecular Psychiatry, 24(5), 633–642. https://doi.org/10.1038/s41380-018-0231-1

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778. https://doi.org/10.1109/CVPR.2016.90

Johansen, J. P., Diaz-Mataix, L., Hamanaka, H., Ozawa, T., Ycu, E., Koivumaa, J., Kumar, A., Hou, M., Deisseroth, K., Boyden, E. S., & LeDoux, J. E. (2014). Hebbian and modularity mechanisms interact to trigger associative memory formation. PNAS. www.pnas.org/cgi/doi/10.1073/pnas.1421304111

Josselyn, S. A., & Tonegawa, S. (2020). Memory engrams: Recalling the past and imagining the future. Science, 367(6473). https://doi.org/10.1126/science.aaw4325

King, D. J., Hodgekins, J., Chouinard, P. A., Chouinard, V.-A., & Sperandio, I. (2017). A review of abnormalities in the perception of visual illusions in schizophrenia. Psychonomic Bulletin & Review, 24, 734–751. https://doi.org/10.3758/s13423-016-1168-5

Li, M., Liu, J., & Tsien, J. Z. (2016). Theory of connectivity: Nature and nurture of cell assemblies and cognitive computation. Frontiers in Neural Circuits, 10, 34. https://doi.org/10.3389/fncir.2016.00034

Lundqvist, M., Brincat, S. L., Rose, J., Warden, M. R., Buschman, T. J., Miller, E. K., & Herman, P. (2023). Working memory control dynamics follow principles of spatial computing. Nature Communications, 14, 1429. https://doi.org/10.1038/s41467-023-36555-4

Lundqvist, M., Miller, E. K., Nordmark, J., Liljefors, J., & Herman, P. (2024). Beta: bursts of cognition. Trends in Cognitive Sciences. In press. https://doi.org/10.1016/j.tics.2024.03.010

Mohan, U. R., Zhang, H., Ermentrout, B., & Jacobs, J. (2024). The direction of theta and alpha travelling waves modulates human memory processing. Nature Human Behaviour. https://doi.org/10.1038/s41562-024-01838-3. Epub ahead of print. PMID: 38459263.

Panoz-Brown, D., Iyer, V., Carey, L. M., Sluka, C. M., Rajio, G., Kestenman, J., Gentry, M., Brotheridge, S., Somekh, I., Corbin, H. E., Tucker, K. G., Almeida, B., Hex, S. B., Garcia, K. D., Hohmann, A. G., & Crystal, J. D. (2018). Replay of episodic memories in the rat. Current Biology, 28, 1628–1634. https://doi.org/10.1016/j.cub.2018.04.006

Piccinini, G. (2020). Neurocognitive Mechanisms: Explaining Biological Cognition. Oxford: Oxford University Press.

Piccinini, G., & Bahar, S. (2013). Neural Computation and the Theory of Computational Cognition. Cognitive Science, 37(3), 453–88. https://doi.org/10.1111/cogs.12012

Selesnick, S. (2024). Neural waves and computation in a neural net model I: Convolutional hierarchies. Journal of Computational Neuroscience. https://doi.org/10.1007/s10827-024-00866-2

Selesnick, S. A. (2019). Tsien’s power-of-two law in a neuromorphic network model suitable for artificial intelligence. IfCoLog Journal of Logics and their Applications, 6(7), 1223–1251.

Selesnick, S. A. (2022). Quantum-like Networks: An approach to neural behavior through their mathematics and logic. World Scientific.

Selesnick, S. (2023). Neural waves and short term memory in a neural network model. Journal of Biological Physics, 49, 159–194. https://doi.org/10.1007/s10867-023-09627-1

Selesnick, S. A., & Owen, G. S. (2012). Quantum-like logics and schizophrenia. Journal of Applied Logic, 10(1), 115–126. https://doi.org/10.1016/j.jal.2011.12.001

Selesnick, S. A., & Piccinini, G. (2018). Quantum-like Behavior without Quantum Physics II. A quantum-like model of neural network dynamics. Journal of Biological Physics, 44, 501–538. https://doi.org/10.1007/s10867-018-9504-9

Selesnick, S. A., & Piccinini, G. (2019). Quantum-like Behavior without Quantum Physics III. Logic and memory. Journal of Biological Physics, 45, 335–366. https://doi.org/10.1007/s10867-019-09532-6

Selesnick, S. A., Rawling, J. P., & Piccinini, G. (2017). Quantum-like Behavior without Quantum Physics I. Kinematics of Neural-like systems. Journal of Biological Physics, 43, 415–444. https://doi.org/10.1007/s10867-017-9460-9

Sung, C., Hwang, H., & Yoo, K. (2018). Perspective: A review on memristive hardware for neuromorphic computation. Journal of Applied Physics, 124, 151903. https://doi.org/10.1063/1.5037835

Tomé, D. F., Zhang, Ying, Aida, T., Mosto, O., Yifeng, Lu., Chen, M., Sadeh, S., Roy, D. S., & Clopath, C. (2024). Dynamic and selective engrams emerge with memory consolidation. Nature Neuroscience. https://doi.org/10.1038/s41593-023-01551-w

Tsien, J. Z. (2016). Principles of Intelligence: On Evolutionary Logic of the Brain. Frontiers in Systems Neuroscience, 9, 186. https://doi.org/10.3389/fnsys.2015.00186

Tsien, J. Z. (2015). A Postulate on the Brain’s Basic Wiring Logic. Trends in Neurosciences, 38(11), 669–671. https://doi.org/10.1016/j.tins.2015.09.002

Van Hooser, S. D., Escobar, G. M., Maffei, A., & Miller, P. (2014). Emerging feed-forward inhibition allows the robust formation of direction selectivity in the developing ferret visual cortex. Journal of Neurophysiology, 111, 2355–2373. https://doi.org/10.1152/jn.00891.2013

Xie, K., Fox, G. E., Liu, J., Lyu, C., Lee, J. C., Kuang, H., Jacobs, S., Li, M., Liu, T., Song, S., & Tsien, J. Z. (2016). Brain Computation Is Organized via Power-of-Two-Based Permutation Logic. Frontiers in Systems Neuroscience, 10, 95. https://doi.org/10.3389/fnsys.2016.00095

Acknowledgements

For valuable input on various relevant topics many thanks are owed to: Wallace Arthur, Felicitas Ehlen, Bryan Emmerson, the late James Hartle, Alex Kim, Ronald Munson, Gualtiero Piccinini, Piers Rawling and Ivan Selesnick. Grateful thanks also to referees for careful readings and cogent remarks which much improved an earlier version.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

SS is the sole author.

Ethics declarations

Informed Consent

The author declares that there were no human or animal participants involved in this purely theoretical study.

Conflict of interest

The author declares no conflict of interest.

Additional information

Action Editor: Pulin Gong.

Appendices

Appendix A: brief outline of the model

The model has two facets: a physical one, describing the internal structure of a network and its dynamics, and a structural one, describing how these networks may be combined and the structures so formed. The structural facet is expressed through a logical scheme known as the Gentzen sequent calculus. The two facets interact and the model requires them both. The use of the sequent calculus is rudimentary and no deep theorems from logic are needed or invoked.

1.1 The network structure

Our model quantum-like networks have nodes comprising a bipartite system which is supposed to model “standard” biological neurons in general morphology. That is to say, we assume two chamber-like nodes, one corresponding to the input part of the cell’s somatic membrane receiving fanned in dendritic signals, and the other to the output part of the cell’s somatic membrane including the hillock/trigger zone/axon initial segment (or possibly the whole axon). A single axon/output chamber fans out to the input chambers of other such bicameral neurons or b-neurons.

The real Hilbert space of states of a single b-neuron is thus of the form

$$\begin{aligned} \mathbb {R}e_0 \oplus \mathbb {R}e_1 \end{aligned} \tag{A.1}$$

where \(\mathbb {R}\) denotes the field of real numbers, \(e_0\) denotes the state representing the input node, \(e_1\) the state representing the output node, and we have explicitly written in the generators of the two subspaces. In terms of the qubit of quantum computing, \(e_0 = |0 \rangle\) and \(e_1= | 1 \rangle\). In addition we argued that this space is in fact the exterior algebra (aka the Fermi-Dirac-Fock space) of the space \(\mathbb {R}e_1\), the state space of the output node, so that \(e_1\wedge e_1=0\). This latter equation reflects the fact that a biological neuron cannot enter a state of firing while it is firing. It is this exterior product structure that gives b-neurons their fermionic structure, although all vector spaces are over the field of real numbers. Consequently, collections of them obey Fermi-Dirac statistics.

Networks of such b-neurons are then defined and give rise to the following structures.

1. A directed finite graph, denoted \(\mathscr {N}_A^b\) say, whose vertices are assigned b-neurons, denoted by \(n_i^A\), for \(i=1,\ldots , N\) where N is the number of vertices, with links/edges fanning in and out;

2. A real finite dimensional Hilbert space, denoted \(\mathfrak {H}_A\), of dimension N, with an orthonormal basis \(\{e^A_i\}\), \(i=1,\ldots , N\), where \(e_k^A\) denotes the state corresponding to the output node of the b-neuron \(n_k^A\). Thus, an element of \(\mathfrak {H}_A\), such as

$$\begin{aligned} \mathbf {\text {v}}^A = \sum _i v_i^A e_i^A \end{aligned} \tag{A.2}$$

represents a (superpositional) state in which the b-network may be found to have the b-neuron \(n^A_{i_0}\) in its firing state, or ON, with probability

$$\begin{aligned} \frac{(v_{i_0}^A)^2}{\sum _i (v_{i}^A)^2} \end{aligned} \tag{A.3}$$

(but please see the proviso in item 4 below). This follows from Born’s Law, which we may adopt for reasons given earlier. Our model b-neurons have no internal structure, or rather such structure has been hidden by the logic of our approach, which is aimed at considering only the relevant structural aspects. Thus the value \(v^A_i\) in the output node of \(n^A_i\) is not causally connected to whatever firing mechanism is involved until a dynamics is imposed to drive this mechanism. Only then does a recognizable form of action potential emerge from our model b-neuron. Such dynamics is discussed in Sect. 2 above.

3. A real \(N\times N\) matrix \((J_{ij})\) whose entries are the product of a factor representing a synaptic weight or scaling factor associated with a single synaptic connection from \(n^A_j\) to \(n^A_i\), and the corresponding entry of the adjacency matrix for the network, namely the number of edges or links from \(n^A_j\) to \(n^A_i\). We shall sometimes refer to the J functions (generally of time) as the exchange terms for the network at hand.

4. The (real) space of states of the ensemble of fermionic b-neurons of a b-network \(\mathscr {N}_A^b\) is the usual (but real) Fermi-Dirac-Fock space of the occupied states of the individual b-neurons, namely the exterior algebra of the space \(\mathfrak {H}_A\) which we denote by \(E(\mathfrak {H}_A)\). Thus

$$\begin{aligned} E(\mathfrak {H}_A):= \mathbb {R} \oplus \mathfrak {H}_A \oplus \bigwedge ^2 \mathfrak {H}_A\oplus \dots \oplus \bigwedge ^N \mathfrak {H}_A. \end{aligned} \tag{A.4}$$

This is a graded Hopf algebra and also a real Hilbert space of dimension \(2^N\). Its structure is well known and described at length in the references cited. Its properties are fundamental to our model. The singleton state \(e_j^A\) may be regarded as the ON state of the respective b-neuron, which we also identify with the firing state of that b-neuron with the proviso, laid out in Selesnick (2024), that being in the firing state does not necessarily mean that the b-neuron is firing but merely that it is in a state of preparation for firing and may be below the firing threshold for that b-neuron. These thresholds are not part of the model. Thus the basic states \(e_{i_0} \wedge e_{i_1} \wedge \ldots \wedge e_{i_p}\) are states of coacting groups of b-neurons and we have dubbed the general elements of \(E(\mathfrak {H}_A)\) firing patterns though they are generally superpositions of the basic states.

There is a dynamics controlling the inner structure of a network as it moves from one firing pattern to another and another dynamics controlling the network’s interaction with external inputs. These are described in Sect. 2.
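The static structures listed above can be illustrated numerically. The following is a minimal sketch, assuming a hypothetical three-neuron b-network with made-up amplitudes, weights and edge counts; the function names are illustrative and are not taken from the paper. It evaluates the Born-rule probabilities of (A.3), forms the exchange terms of item 3 as weight times edge count, and enumerates the index sets labelling the basic states of \(E(\mathfrak {H}_A)\) in (A.4).

```python
from itertools import combinations

# Hypothetical 3-neuron b-network; every number below is illustrative only.
N = 3
v = [1.0, 2.0, 2.0]  # amplitudes v_i of a state v = sum_i v_i e_i, as in (A.2)


def firing_probabilities(amplitudes):
    """Born-rule probability of finding each b-neuron ON, as in (A.3)."""
    total = sum(x * x for x in amplitudes)
    return [x * x / total for x in amplitudes]


def exchange_matrix(weights, edge_counts):
    """J_ij = (weight of one connection j -> i) * (number of edges j -> i)."""
    return [[w * k for w, k in zip(w_row, k_row)]
            for w_row, k_row in zip(weights, edge_counts)]


def basic_firing_patterns(n):
    """Index sets labelling the basis e_{i0} ^ ... ^ e_{ip} of the exterior
    algebra; the empty tuple stands for the vacuum (unoccupied) state."""
    return [c for p in range(n + 1) for c in combinations(range(n), p)]


# Assumed synaptic weights and adjacency (edge-count) matrix, row i, column j.
weights = [[0.0, 0.5, 0.0],
           [0.2, 0.0, 0.0],
           [0.0, 1.5, 0.0]]
edge_counts = [[0, 2, 0],
               [1, 0, 0],
               [0, 1, 0]]

probs = firing_probabilities(v)
J = exchange_matrix(weights, edge_counts)
patterns = basic_firing_patterns(N)

print(probs)          # probabilities sum to 1
print(J)
print(len(patterns))  # 2**N basic states, here 8
```

The sketch ignores the proviso of item 4 (being in the firing state is not the same as firing) and imposes no dynamics; it only makes the bookkeeping of items 2 to 4 concrete.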

1.2 A note on the logic

As noted, an integral facet of the model is the explicit use of a scheme familiar to computational logicians, namely a so-called Gentzen sequent calculus. While the physics-like attributes of the model described above deal with the internal structure of an individual network, the sequent calculus is supposed to encapsulate the possible outer structures of many networks and their combinations. The specific rules of our calculus render it a fragment of a well-known calculus of this kind, introduced by J.-Y. Girard in the 1980s and known as Intuitionistic Linear Logic (ILL). It may be considered to be a short-hand used to express relationships within the category of vector spaces, specifically the finite dimensional real Hilbert spaces that are the state spaces of our networks. The basic object is the sequent, which is of the form \(A \vdash B\) and which may, for our purposes, be thought of as an (un-named) linear map between the Hilbert spaces A and B. Such a map between state spaces of networks may be pictured (at a particular moment) as a wiring diagram, showing how one network is connected to another. In the cases of interest to us, this “wiring” will also include the disposition of certain non-synaptic connectivities. The inference that one such collection may be replaced by, or reduced to, a second such collection, via the rules set out in a list of basic inferences regarded as axiomatic, is signified by a fraction-like construct, with the hypothesis (a collection of sequents) as numerator and consequent (usually another collection of sequents) as denominator. Details and full references may be found in Selesnick and Piccinini (2019); Selesnick (2022). Here we use only a few of the multiplicative rules of GN which are reproduced below.

GN

Structural Rules: Exchange, Weakening, Contraction

The Identity Group: Axiom, Cut

Multiplicative Logical Rules: Conjunctive (Multiplicative) Connective; Of Course operator !

Capital Greeks stand for finite sequences of formulas including possibly the empty one, and D stands for either a single formula or no formula, i.e. the empty sequence, and when it appears in the form \(\otimes D\), the \(\otimes\) symbol is presumed to be absent when D is empty. If \(\varGamma\) denotes the sequence \(A_1,A_2, \ldots , A_n\) then \(!\varGamma\) will denote the sequence \(!A_1,!A_2, \ldots , !A_n\).
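For orientation, the rules named above have the following standard textbook forms in intuitionistic linear logic. This is a sketch under that assumption and not a transcription of GN: GN is described only as a fragment of ILL, so its exact rules, including the way D and \(\otimes D\) appear, may differ. Here C is an arbitrary formula, and weakening and contraction are shown in their usual ILL form, restricted to formulas of type !A.

$$\begin{aligned} &\text{Exchange:} && \frac{\varGamma , A, B, \varDelta \vdash C}{\varGamma , B, A, \varDelta \vdash C} \\ &\text{Weakening:} && \frac{\varGamma \vdash C}{\varGamma , !A \vdash C} \\ &\text{Contraction:} && \frac{\varGamma , !A, !A \vdash C}{\varGamma , !A \vdash C} \\ &\text{Axiom:} && A \vdash A \\ &\text{Cut:} && \frac{\varGamma \vdash A \qquad \varDelta , A \vdash C}{\varGamma , \varDelta \vdash C} \\ &\otimes \text{ (left):} && \frac{\varGamma , A, B \vdash C}{\varGamma , A\otimes B \vdash C} \\ &\otimes \text{ (right):} && \frac{\varGamma \vdash A \qquad \varDelta \vdash B}{\varGamma , \varDelta \vdash A\otimes B} \\ &! \text{ (dereliction):} && \frac{\varGamma , A \vdash C}{\varGamma , !A \vdash C} \\ &! \text{ (promotion):} && \frac{!\varGamma \vdash A}{!\varGamma \vdash !A} \end{aligned}$$

Read as statements about linear maps, the contraction rule, for instance, says that a map out of two copies of !A can be traded for a map out of a single copy, which is where a copying operation on !A is needed.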

We shall ride roughshod over logical niceties, and simply identify the types in our sequent formulas with the spaces of states of corresponding b-networks, and take the combinator \(\otimes\) to have its usual connotation. Thus A will now stand for the Hilbert space we had previously denoted by \(\mathfrak {H}_A\), et sim. A blank space, i.e. the absence of a formula, is interpreted as \(\mathbb {R}\), the unoccupied state or vacuum.

Nota bene

The crucial fact that drives the efficacy of the model is the remarkable coincidence (if it is one) that the exterior algebra (aka the Fermi-Dirac-Fock space) perfectly interprets the of course operator, thereby forming a nexus between the physics-like paradigm and the structural logic paradigm.

Accordingly we uniformly denote \(E(\mathfrak {H}_A)\) by !A.

Appendix B: The coproduct on \(E(\mathbb {R}^3)\)

For a basis \(\{e_1,e_2,e_3\}\) for \(\mathbb {R}^3\) and 1 the vacuum or unoccupied state or algebra unit, we have
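As a hedged sketch, assume the standard graded Hopf algebra conventions: the coproduct, written \(\varDelta\) here (a symbol chosen for this illustration), is an algebra map into the graded tensor product, so that \((a\otimes b)(c\otimes d) = (-1)^{|b||c|}(a\wedge c)\otimes (b\wedge d)\), and it is determined by \(\varDelta (e_i) = e_i\otimes 1 + 1\otimes e_i\). Sign conventions in the cited references may differ. Under these assumptions, for \(i<j\),

$$\begin{aligned} \varDelta (1) &= 1\otimes 1, \\ \varDelta (e_i) &= e_i\otimes 1 + 1\otimes e_i, \\ \varDelta (e_i\wedge e_j) &= (e_i\wedge e_j)\otimes 1 + e_i\otimes e_j - e_j\otimes e_i + 1\otimes (e_i\wedge e_j), \\ \varDelta (e_1\wedge e_2\wedge e_3) &= (e_1\wedge e_2\wedge e_3)\otimes 1 + (e_1\wedge e_2)\otimes e_3 - (e_1\wedge e_3)\otimes e_2 + (e_2\wedge e_3)\otimes e_1 \\ &\quad + e_1\otimes (e_2\wedge e_3) - e_2\otimes (e_1\wedge e_3) + e_3\otimes (e_1\wedge e_2) + 1\otimes (e_1\wedge e_2\wedge e_3). \end{aligned}$$

The minus signs arise from moving an odd element past another odd element; for example, \((1\otimes e_1)(e_2\otimes 1) = -\,e_2\otimes e_1\).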

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Selesnick, S. Neural waves and computation in a neural net model II: Data-like structures and the dynamics of episodic memory. J Comput Neurosci 52, 223–243 (2024). https://doi.org/10.1007/s10827-024-00876-0

DOI: https://doi.org/10.1007/s10827-024-00876-0