Abstract

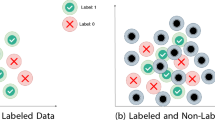

In many real-life problems, obtaining labeled data is expensive and laborious, while unlabeled data are often abundant. The limited availability of labeled data can seriously restrict the performance of supervised learning methods. Here, we propose a semi-supervised classification tree induction algorithm that exploits both labeled and unlabeled data while preserving the appealing characteristics of standard supervised decision trees: they are non-parametric and efficient, have good predictive performance, and produce readily interpretable models. We further improve predictive performance by using the semi-supervised trees as base predictive models in random forests. We performed an extensive empirical evaluation on 12 binary and 12 multi-class classification datasets. The results show that the proposed methods improve on the predictive performance of their supervised counterparts. Moreover, when labeled data are scarce, the semi-supervised decision trees often yield models that are smaller and easier to interpret than supervised decision trees.
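The abstract does not state how the split criterion uses unlabeled examples. As a minimal sketch, assuming that a split is scored by a weighted sum of class impurity (computed on labeled examples only) and feature variance (computed on all examples), the idea can be illustrated as follows. The function names, the weight `w`, and the convention of marking unlabeled examples with `-1` are hypothetical choices for this illustration, not details taken from the text above.

```python
import numpy as np

def gini(labels):
    """Gini impurity of a 1-D array of class labels (0.0 for an empty array)."""
    if len(labels) == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))

def ssl_impurity(X, y, w, root_gini, root_var):
    """Assumed impurity: w * class impurity + (1 - w) * feature spread.

    y uses -1 for unlabeled examples (an assumed convention).
    """
    labeled = y != -1
    class_term = gini(y[labeled]) / root_gini if root_gini > 0 else 0.0
    # Per-feature variance, each normalised by its variance at the root.
    safe = np.where(root_var > 0, root_var, 1.0)
    feature_term = float(np.mean(X.var(axis=0) / safe))
    return w * class_term + (1.0 - w) * feature_term

def best_threshold(X, y, feature, w=0.5):
    """Return (threshold, score) minimising the size-weighted impurity of both children."""
    root_gini = gini(y[y != -1])
    root_var = X.var(axis=0)
    values = np.unique(X[:, feature])
    best = (None, np.inf)
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(values[:-1], values[1:]):
        t = (lo + hi) / 2.0
        left = X[:, feature] <= t
        score = 0.0
        for mask in (left, ~left):
            score += mask.mean() * ssl_impurity(X[mask], y[mask], w, root_gini, root_var)
        if score < best[1]:
            best = (t, score)
    return best

# Two labeled examples at the extremes, four unlabeled ones forming two clusters.
X = np.array([[0.0], [0.1], [0.2], [1.0], [1.1], [1.2]])
y = np.array([0, -1, -1, -1, -1, 1])
print(best_threshold(X, y, feature=0, w=0.5))
```

In this toy example every candidate threshold separates the two labeled examples equally well, so a purely supervised score (`w=1.0`) cannot distinguish between them. With `w=0.5`, the feature-variance term favours the threshold that falls in the gap between the two clusters of points, which is the kind of benefit unlabeled data can bring under these assumptions.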

Acknowledgements

We acknowledge the financial support of the Slovenian Research Agency, via the grant P2-0103 and a young researcher grant to the first author, as well as the European Commission, via the grants ICT-2013-612944 MAESTRA and ICT-2013-604102 HBP.

Cite this article

Levatić, J., Ceci, M., Kocev, D. et al. Semi-supervised classification trees. J Intell Inf Syst 49, 461–486 (2017). https://doi.org/10.1007/s10844-017-0457-4

DOI: https://doi.org/10.1007/s10844-017-0457-4