Abstract

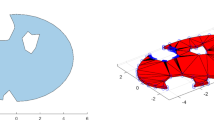

Parts classification can improve the efficacy of the manufacturing process in a computer-aided process planning system. In this study, we investigate several deep-learning methods for parts classification: a two-dimensional convolutional neural network (2D-CNN) trained on either picture data or CSV files, and a three-dimensional convolutional neural network (3D-CNN) trained on voxel data. Two novel methods are proposed: (1) feature recognition for the parts being processed, based on syntactic patterns, in which feature quantities are computed and saved to comma-separated values (CSV) files that are then used to train the 2D-CNN model; and (2) voxelization of parts, in which voxel data of the parts are obtained for training the 3D-CNN model. Both methods are compared with a 2D-CNN model trained on images of the parts to be classified. The results indicate that the 2D-CNN model trained with CSV data achieved the highest accuracy, followed by the 3D-CNN model, which was simpler and easier to implement and learned the details of the parts better. The 2D-CNN model trained with picture files had the lowest accuracy and required a complex training network.
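To make the voxelization step concrete, the following is a minimal sketch of turning a set of 3D surface points into a binary occupancy grid of the kind a 3D-CNN takes as input. It is not the procedure used in the study, which the abstract does not specify. The function name `voxelize`, the `resolution` parameter, and the cube-corner sample data are all hypothetical and chosen only for illustration.

```python
def voxelize(points, resolution=32):
    """Map (x, y, z) points to a resolution^3 binary occupancy grid.

    Illustrative sketch only: the grid is a nested list, and a single
    scale factor is applied to all axes so the part keeps its proportions.
    """
    mins = [min(p[a] for p in points) for a in range(3)]
    maxs = [max(p[a] for p in points) for a in range(3)]
    # Largest side of the bounding box; fall back to 1.0 for a single point.
    extent = max(maxs[a] - mins[a] for a in range(3)) or 1.0

    grid = [[[0] * resolution for _ in range(resolution)]
            for _ in range(resolution)]
    for p in points:
        # Normalize to [0, 1], then scale to an index in [0, resolution - 1].
        i, j, k = (int((p[a] - mins[a]) / extent * (resolution - 1))
                   for a in range(3))
        grid[i][j][k] = 1
    return grid


# Hypothetical input: the eight corners of a unit cube.
corners = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
grid = voxelize(corners, resolution=4)
occupied = sum(v for plane in grid for row in plane for v in row)
```

In practice the nested list would be converted to an array or tensor before being passed to a network, and the resolution would be chosen to balance detail against memory, since the grid size grows cubically with it.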



Cite this article

Ning, F., Shi, Y., Cai, M. et al. Various realization methods of machine-part classification based on deep learning. J Intell Manuf 31, 2019–2032 (2020). https://doi.org/10.1007/s10845-020-01550-9

DOI: https://doi.org/10.1007/s10845-020-01550-9