Abstract

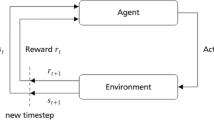

Fabrication areas in the semiconductor industry are considered among the most complex production systems. This complexity stems from the industry's high product mix and its end-user, market-driven demand. Its dynamic and demanding processing requirements challenge the capabilities of traditional production management paradigms. In this paper, we propose an application for dispatching and resource allocation based on reinforcement learning. The application is built on a discrete-event simulation model of a real semiconductor manufacturing system used as a case study. The model combines data-driven and agent-based approaches and simulates the processing aspects normally present in such complex systems. The model's agents are responsible for dispatching tasks and allocating the system's various resources. They use Deep Q-Network (DQN) reinforcement learning and learn simultaneously as the model executes, with an independent DQN trained for each agent. The model provides the training environment in which the agents' decisions are applied and assessed for their adequacy. Our formulation of the environment's state and of the reward function for the learning algorithms leads the agents to cooperative decision-making policies. This improves both the global performance of the whole system and the performance of each agent's resources. We compare our approach with the heuristics-based strategies applied in our case study; it achieved better production performance than the currently applied strategy.
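The abstract describes each dispatching agent training its own Deep Q-Network while all agents learn at the same time inside the simulation, with a state and reward formulation that makes their policies cooperative. A minimal Python sketch of that independent-learner pattern follows, assuming a toy setting; it is not the authors' implementation, since their state, reward, network architecture and simulation model are not given here. Everything named in it is hypothetical: the class `LinearDQNAgent`, the helpers `observe` and `run_toy_fab`, the two-tool, two-buffer environment, the WIP-based shared reward and every hyperparameter. To run on the standard library alone, a linear Q-function replaces the deep network, so the agents are DQN-style learners (experience replay, a periodically synced target network, epsilon-greedy exploration) rather than deep ones.

```python
import random
from collections import deque


class LinearDQNAgent:
    """Hypothetical DQN-style learner: experience replay, a periodically synced
    target network and epsilon-greedy exploration. A linear Q-function stands in
    for the deep network so the sketch runs on the standard library alone."""

    def __init__(self, n_features, n_actions, lr=0.005, gamma=0.9, epsilon=0.1,
                 buffer_size=1000, batch_size=16, sync_every=50, seed=0):
        self.rng = random.Random(seed)
        self.n_actions = n_actions
        # One weight vector per action: Q(s, a) = w[a] . s
        self.w = [[0.0] * n_features for _ in range(n_actions)]
        self.w_target = [row[:] for row in self.w]
        self.lr, self.gamma, self.epsilon = lr, gamma, epsilon
        self.buffer = deque(maxlen=buffer_size)
        self.batch_size, self.sync_every, self.updates = batch_size, sync_every, 0

    @staticmethod
    def q_values(weights, state):
        return [sum(w * s for w, s in zip(row, state)) for row in weights]

    @staticmethod
    def td_target(reward, q_next, gamma, done):
        # r at the end of an episode, otherwise r + gamma * max_a' Q_target(s', a').
        return reward if done else reward + gamma * max(q_next)

    def act(self, state):
        # Epsilon-greedy choice among this agent's dispatching options.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        q = self.q_values(self.w, state)
        return max(range(self.n_actions), key=q.__getitem__)

    def remember(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def learn(self):
        if len(self.buffer) < self.batch_size:
            return  # wait until the replay buffer holds one minibatch
        for s, a, r, s_next, done in self.rng.sample(list(self.buffer), self.batch_size):
            y = self.td_target(r, self.q_values(self.w_target, s_next), self.gamma, done)
            td_error = y - self.q_values(self.w, s)[a]
            # Per-sample semi-gradient step on the squared TD error, taken action only.
            self.w[a] = [w + self.lr * td_error * x for w, x in zip(self.w[a], s)]
        self.updates += 1
        if self.updates % self.sync_every == 0:
            self.w_target = [row[:] for row in self.w]  # sync the target network


def observe(queues):
    # Shared state: bias term plus both buffer levels, scaled and capped at 1.
    return [1.0] + [min(q / 20, 1.0) for q in queues]


def run_toy_fab(episodes=100, steps=30, seed=1):
    """Hypothetical two-tool, two-buffer toy, not the paper's simulation model."""
    rng = random.Random(seed)
    agents = [LinearDQNAgent(3, 2, seed=seed + i) for i in range(2)]  # one network per agent
    avg_wip = []
    for _ in range(episodes):
        queues, wip_sum = [0, 0], 0
        for t in range(steps):
            queues[rng.randrange(2)] += 1          # one lot always arrives
            if rng.random() < 0.5:
                queues[rng.randrange(2)] += 1      # and a second one half the time
            state = observe(queues)
            actions = [agent.act(state) for agent in agents]
            for a in actions:                      # each tool serves its chosen buffer
                if queues[a] > 0:
                    queues[a] -= 1
            # Same global reward for every agent: penalise total WIP (capped).
            reward = -min(sum(queues), 40) / 20
            next_state, done = observe(queues), t == steps - 1  # time limit treated as terminal
            for agent, a in zip(agents, actions):
                agent.remember(state, a, reward, next_state, done)
                agent.learn()
            wip_sum += sum(queues)
        avg_wip.append(wip_sum / steps)
    return agents, avg_wip
```

For example, `agents, wip = run_toy_fab()` trains both agents together and returns the average WIP of each episode. Giving every agent the same global reward is one simple way to push independent learners toward cooperation: when both tools crowd onto the same buffer, the other buffer goes unserved and both agents are penalised. The paper's actual reward and state formulation may differ.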

Acknowledgements

This work is funded through the Mitacs Accelerate program, Grant No. IT01632, in partnership with Teledyne DALSA Semiconductor, a business unit of Teledyne Digital Imaging, Inc. The authors would like to thank the industrial and information technology teams at Teledyne DALSA Semiconductor for their great support and collaboration.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Sakr, A.H., Aboelhassan, A., Yacout, S. et al. Simulation and deep reinforcement learning for adaptive dispatching in semiconductor manufacturing systems. J Intell Manuf 34, 1311–1324 (2023). https://doi.org/10.1007/s10845-021-01851-7

DOI: https://doi.org/10.1007/s10845-021-01851-7