Abstract

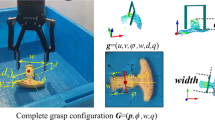

Learning to grasp novel objects has recently attracted considerable research attention; it is an important but still unsolved problem, especially for service robots. While some approaches perform well in certain cases, they require human labeling and can hardly achieve high precision in clutter. In this paper, we apply a deep learning approach to the problem of grasping novel objects in clutter. We focus on two-fingered parallel-jawed grasping with an RGB-D camera. First, we propose a ‘grasp circle’ method, parameterized by the size of the gripper, that finds more potential grasps at each sampling point at lower cost. To address the challenge of collecting large amounts of training data, we collect training data directly from cluttered scenes with no manual labeling. To extract effective features from RGB and depth data, we propose a bimodal representation and use two-stream convolutional neural networks (CNNs) to process the inputs. Finally, experiments show that, compared with some existing popular methods, our method achieves a higher grasp success rate on the original RGB-D cluttered scenes.
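
The abstract does not spell out how the ‘grasp circle’ is constructed, so the following is only a minimal sketch of one possible reading, not the paper's algorithm. It assumes that each candidate grasp places the two fingertips at opposite ends of a diameter of a circle centred on the sampling point, with the diameter equal to the gripper opening width. The function name `grasp_circle_candidates` and the parameters `gripper_width` and `num_angles` are hypothetical and chosen for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Grasp = Tuple[Point, Point, float]  # (fingertip A, fingertip B, angle in radians)


def grasp_circle_candidates(center: Point,
                            gripper_width: float,
                            num_angles: int = 8) -> List[Grasp]:
    """Enumerate candidate two-finger grasps around one sampling point.

    Assumption (not taken from the paper): fingertips sit at opposite ends
    of a diameter of a circle centred on `center`, and the diameter equals
    the gripper opening width.
    """
    if gripper_width <= 0 or num_angles <= 0:
        raise ValueError("gripper_width and num_angles must be positive")

    radius = gripper_width / 2.0
    cx, cy = center
    candidates: List[Grasp] = []
    for k in range(num_angles):
        # Angles cover [0, pi) only: rotating a parallel-jaw grasp by pi
        # swaps the two fingers and yields the same grasp.
        theta = math.pi * k / num_angles
        dx = radius * math.cos(theta)
        dy = radius * math.sin(theta)
        candidates.append(((cx + dx, cy + dy), (cx - dx, cy - dy), theta))
    return candidates


if __name__ == "__main__":
    # Example: an 8 cm gripper opening, four orientations per sampling point.
    for a, b, theta in grasp_circle_candidates((0.0, 0.0), 0.08, num_angles=4):
        print(f"theta={theta:.3f} rad  A={a}  B={b}")
```

Under this reading, a single sampling point yields `num_angles` candidates at the cost of a few trigonometric evaluations, which is one way the gripper size could parameterize the search. Each candidate would still need to be scored, for example by a learned classifier.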

Acknowledgments

Our research has been supported in part by the National Natural Science Foundation of China under Grant 61673261. We gratefully acknowledge YASKAWA Electric Corporation for funding the project “Research and Development of Key Technologies for Smart Picking System”. Moreover, this work has also been supported by the Shanghai Kangqiao Robot Industry Development Joint Research Centre.

About this article

Cite this article

Ni, P., Zhang, W., Bai, W. et al. A New Approach Based on Two-stream CNNs for Novel Objects Grasping in Clutter. J Intell Robot Syst 94, 161–177 (2019). https://doi.org/10.1007/s10846-018-0788-6

DOI: https://doi.org/10.1007/s10846-018-0788-6