Abstract

A method is proposed for measuring two performance metrics, repeatability and directional accuracy, of an under-actuated cable-driven robot using a monocular camera. Experiments were conducted on a cable-driven robot used for construction, and the results were compared with those from a laser tracker. The lowest value, about 18 mm, was observed at the middle of the workspace and agreed with the laser tracker measurement. The proposed method also made it possible to measure orientation repeatability with the end-effector coordinate system as the reference, which is an advantage over a laser tracker. Because the robot workspace is very large and a camera has a restricted feasible measurement volume, a method is also proposed to determine suitable camera locations that enable measurement throughout the workspace. This analysis shows that the camera may be positioned in four feasible regions to cover the whole workspace. Finally, a method for measuring the cable profile is proposed. The position and slope of the cable at two locations were measured using a camera and used to fit a catenary model, from which the profile of the cable between these points can be determined. Experiments in different regions of the workspace showed comparatively greater sagging at the periphery of the workspace, which explains the higher positioning errors in that region.

References

Williams II, R., Gallina, P.: Translational planar cable-direct-driven robots. J. Intell. Robot Syst. 37, 69–96 (2003)

Cone, L.L.: Skycam - an aerial robotic camera system. Byte 10, 122–132 (1985)

Albus, J., Bostelman, R., Dagalakis, N.: The nist robocrane. J. of Field Robot. 709–724 (1993)

Pinto, A.M., Moreira, E., Lima, J.: A cable-driven robot for architectural constructions: a visual-guided approach for motion control and path-planning. Auton. Robot. 41, 1487–1499 (2017)

Barnett, E., Gosselin, C.: Large-scale 3d printing with a cable-suspended robot. Autonomous Robots (2015)

ISO: Manipulating industrial robots – performance criteria and related test methods (1998)

Maloletov, A.V., Fadeev, M.Y., Klimchik, A.S.: Error analysis in solving the inverse problem of the cable-driven parallel underactuated robot kinematics and methods for their elimination. In: IFAC-PapersOnLine, pp. 1156–1161. 9th IFAC Conference on Manufacturing Modelling, Management and Control MIM 2019 (2019)

Boby, R.A.: Vision based techniques for kinematic identification of an industrial robot and other devices. PhD thesis, Indian Institute of Technology Delhi (2018)

Schmidt, V.L.: Modeling techniques and reliable real-time implementation of kinematics for cable-driven parallel robots using polymer fiber cables. PhD thesis, University of Stuttgart (2016)

Vicon: Motion Capture. https://www.vicon.com/. Accessed on 12/11/20

Boby, R.A., Klimchik, A.: Combination of geometric and parametric approaches for kinematic identification of an industrial robot. Robot. Comput. Integr. Manuf. 71, 102142 (2021)

Trujillo, J.C., Ruiz-Velázquez, E., Castillo-Toledo, B.: A cooperative aerial robotic approach for tracking and estimating the 3d position of a moving object by using pseudo-stereo vision. J. Intell. Robot. Syst. 96, 297–313 (2019)

Kaczmarek, A.L.: 3d vision system for a robotic arm based on equal baseline camera array. J. Intell. Robot. Syst. 99, 13–28 (2020)

Boby, R.A., Saha, S.K.: Single image based camera calibration and pose estimation of the end-effector of a robot. In: 2016 IEEE International Conference on Robotics and Automation (ICRA), pp. 2435–2440 (2016)

Boby, R.A.: Identification and classification of coating defects using machine vision. Master’s thesis, Indian Institute of Technology Madras (2010)

Boby, R.A., Sonakar, P.S., Singaperumal, M., Ramamoorthy, B.: Identification of defects on highly reflective ring components and analysis using machine vision. Int. J. Adv. Manuf. Technol. 52(1-4), 217–233 (2011)

Boby, R., Hanmandlu, M., Sharma, A., Bindal, M.: Extraction of fractal dimension for iris texture. In: Biometrics (ICB), 2012 5th IAPR International Conference on, pp. 330–335. IEEE (2012)

Roy, M., Boby, R.A., Chaudhary, S., Chaudhury, S., Roy, S. D., Saha, S.: Pose estimation of texture-less cylindrical objects in bin picking using sensor fusion. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2279–2284. IEEE (2016)

Patruno, C., Nitti, M., Petitti, A., Stella, E., D’Orazio, T.: A vision-based approach for unmanned aerial vehicle landing. J. Intell. Robot. Syst. 95, 645–664 (2019)

Boutteau, R., Rossi, R., Qin, L., Merriaux, P., Savatier, X.: A vision-based system for robot localization in large industrial environments. J. Intell. Robot. Syst. 99, 359–370 (2020)

ISO: ISO 13309: Manipulating industrial robots-informative guide on test equipment and metrology methods of operation for robot performance evaluation in accordance with ISO 9283 (1995)

Davidson, M.W.: Resolution. https://www.microscopyu.com/microscopy-basics/resolution. Accessed on 8/9/21 (2021)

Dallej, T., Gouttefarde, M., Andreff, N., Hervé, P.E., Martinet, P.: Modeling and vision-based control of large-dimension cable-driven parallel robots using a multiple-camera setup. Mechatronics 61, 20–36 (2019)

Nan, R., Li, D., Jin, C., Wang, Q., Zhu, L., Zhu, W., Zhang, H., Yue, Y., Qian, L.: The five-hundred-meter aperture spherical radio telescope (FAST) project. Int. J. Modern Phys. D 20(06), 989–1024 (2011)

Kozak, K., Zhou, Q., Wang, J.: Static analysis of cable-driven manipulators with non-negligible cable mass. IEEE Trans. Robot. 22(3), 425–433 (2006)

Merkin, D.R.: Vvedenie v mekhaniku gibkoi niti. Nauka. In Russian (1980)

Innopolis University: Cable robot. https://www.youtube.com/watch?v=dMq9EQMONM4. Accessed on 8/9/21 (2020)

Salinas, R.M.: Mapping and localization from planar markers. http://www.uco.es/investiga/grupos/ava/node/57. Accessed on 8/9/21 (2018)

Wang, J., Olson, E.: AprilTag 2: Efficient and robust fiducial detection. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2016)

Zake, Z., Caro, S., Roos, A.S., Chaumette, F., Pedemonte, N.: Stability analysis of pose-based visual servoing control of cable-driven parallel robots. In: Pott, A., Bruckmann, T. (eds.) Cable-Driven Parallel Robots, pp 73–84. Springer International Publishing (2019)

Hayat, A.A., Boby, R.A., Saha, S.K.: A geometric approach for kinematic identification of an industrial robot using a monocular camera. Robot. Comput. Integr. Manuf. 57, 329–346 (2019)

Basler: aca1920-155um - basler ace. https://www.baslerweb.com. Accessed on 12/11/20 (2020)

Faro: Faro vantage laser tracker. www.faro.com/en/Products/Hardware/Vantage-Laser-Trackers. Accessed on 8/9/21 (2021)

Boby, R.A., Klimchik, A.: Identification of elasto-static parameters of an industrial robot using monocular camera. Robotics and Computer-Integrated Manufacturing, Forthcoming (2021)

Boby, R.A.: Kinematic identification of industrial robot using end-effector mounted monocular camera bypassing measurement of 3d pose. IEEE/ASME Transactions on Mechatronics, 1–1. https://doi.org/10.1109/TMECH.2021.3064916 (2021)

Fadeev, M., Boby, R.A., Maloletov, A.: Vision based method for improving position accuracy of four-cable-driven platform. In: 2021 International Conference Nonlinearity, Information and Robotics (NIR), pp. 1–6 (2021)

Garrido-Jurado, S., Munoz-Salinas, R., Madrid-Cuevas, F., Marin-Jimenez, M.: ArUco: a minimal library for augmented reality applications based on opencv. https://www.uco.es/investiga/grupos/ava/node/26. Accessed on 8/9/21 (2020)

Muñoz-Salinas, R., Marín-Jiménez, M.J., Yeguas-Bolivar, E., Medina-Carnicer, R.: Mapping and localization from planar markers. Pattern Recognit. 73, 158–171 (2018)

Acknowledgements

Mikhail Fadeev wrote code for controlling the robot. Roman Fedorenko prepared an analysis of motion capture systems.

Funding

This work was funded by RFBR fund No. 19-08-01234.

Author information

Contributions

Riby Abraham Boby conceptualized and designed the work, acquired, analyzed and interpreted the data, wrote the code and drafted the manuscript. Alexander Maloletov advised on the conception of the work. Alexander Maloletov and Alexandr Klimchik reviewed the manuscript and suggested improvements. All the authors approved the manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the Russian Foundation for Basic Research (RFBR No. 19-08-01234).

Appendices

Appendix A: Performance Criteria of Industrial Robots

Repeatability and accuracy are two important metrics used to characterize the performance of industrial robots. Measurement of repeatability and distance accuracy is explained in this Appendix. Repeatability of the robot [6, 21] is measured after defining a cube as shown in Fig. 14. The robot is then made to move within the shaded region at a given velocity. The movement is made in the order P1, P2, P3, P4 and P5, as shown in Fig. 15.

Let \(x_{j}\), \(y_{j}\) and \(z_{j}\) be the coordinates of the jth attained pose. The position repeatability of the robot, \(RP_{l}\), is then computed as

\[RP_{l}=\bar{l}+3S_{l},\]

where \(\bar{l}=\frac{1}{n}\sum_{j=1}^{n}l_{j}\) and \(l_{j}=\sqrt{(x_{j}-\bar{x})^{2}+(y_{j}-\bar{y})^{2}+(z_{j}-\bar{z})^{2}}\). Here, \(\bar{x}=\frac{1}{n}\sum_{j=1}^{n}x_{j}\), \(\bar{y}=\frac{1}{n}\sum_{j=1}^{n}y_{j}\) and \(\bar{z}=\frac{1}{n}\sum_{j=1}^{n}z_{j}\) are the coordinates of the barycenter obtained after approaching the same pose n times. Moreover, \(S_{l}=\sqrt{\frac{\sum_{j=1}^{n}(l_{j}-\bar{l})^{2}}{n-1}}\).
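As an illustration, the quantities defined above can be computed with a few lines of Python. This is a minimal sketch, not the code used in the reported work: it assumes the ISO 9283 form \(RP_{l}=\bar{l}+3S_{l}\), and the function name `position_repeatability` and the input layout (a list of measured `(x, y, z)` tuples for one commanded pose) are hypothetical.

```python
import math
from statistics import mean, stdev


def position_repeatability(points):
    """Return (l_bar, S_l, RP_l) for a list of attained (x, y, z) positions.

    Assumes RP_l = l_bar + 3 * S_l; the name and input layout are illustrative.
    """
    if len(points) < 2:
        raise ValueError("at least two attained poses are needed")
    # Barycenter of the attained positions.
    center = (
        mean(p[0] for p in points),
        mean(p[1] for p in points),
        mean(p[2] for p in points),
    )
    # Distance l_j of each attained position from the barycenter.
    distances = [math.dist(p, center) for p in points]
    l_bar = mean(distances)
    s_l = stdev(distances)  # sample standard deviation (divisor n - 1)
    return l_bar, s_l, l_bar + 3.0 * s_l
```

The units of the result follow the units of the input coordinates, so positions measured in millimetres give a repeatability in millimetres.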

Now, if \(A_{j}\), \(B_{j}\) and \(C_{j}\) are the roll, pitch and yaw angles of the end-effector of the robot at the jth pose, respectively, the orientation repeatability of the robot corresponding to each rotation is given by

\[RP_{A}=\pm 3\sqrt{\frac{\sum_{j=1}^{n}(A_{j}-\bar{A})^{2}}{n-1}},\quad RP_{B}=\pm 3\sqrt{\frac{\sum_{j=1}^{n}(B_{j}-\bar{B})^{2}}{n-1}},\quad RP_{C}=\pm 3\sqrt{\frac{\sum_{j=1}^{n}(C_{j}-\bar{C})^{2}}{n-1}},\]

where \(\bar{A}=\frac{1}{n}\sum_{j=1}^{n}A_{j}\), \(\bar{B}=\frac{1}{n}\sum_{j=1}^{n}B_{j}\) and \(\bar{C}=\frac{1}{n}\sum_{j=1}^{n}C_{j}\) are the mean values of the angles obtained after reaching the orientation n times. Distance accuracy represents the deviation of the measured distance moved by the robot from the distance commanded to the robot. It is given by the difference between the mean measured distance and the commanded distance. Distance accuracy \(AD_{P}\) is calculated as follows:

\[AD_{P}=\bar{D}-D_{c},\]

where \(\bar{D}=\frac{1}{n}\sum_{j=1}^{n}D_{j}\), \(D_{j}=\|\mathbf{p}_{1j}-\mathbf{p}_{2j}\|_{2}\) and \(D_{c}=\|\mathbf{p}_{c1}-\mathbf{p}_{c2}\|_{2}\). The 3-D coordinates of the commanded poses for the two points are represented by \(\mathbf{p}_{c1}\) and \(\mathbf{p}_{c2}\). The corresponding measured coordinates at the jth measurement are \(\mathbf{p}_{1j}\) and \(\mathbf{p}_{2j}\), respectively.
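The orientation repeatability and the distance accuracy can be sketched in the same way. The snippet below assumes the ISO 9283 forms \(RP_{A}=\pm 3S_{A}\) (with \(S_{A}\) the sample standard deviation of the angle) and \(AD_{P}=\bar{D}-D_{c}\). The function names are hypothetical, and the angles are assumed not to wrap around (for example across ±180°), which this sketch does not handle.

```python
import math
from statistics import mean, stdev


def orientation_repeatability(angles):
    """Return 3 * S for one angle (roll, pitch or yaw) measured over n visits.

    The result is to be read as a +/- band around the mean angle.
    """
    return 3.0 * stdev(angles)  # sample standard deviation (divisor n - 1)


def distance_accuracy(commanded_1, commanded_2, measured_pairs):
    """Return AD_P: mean measured distance minus commanded distance.

    measured_pairs is a list of (p1j, p2j) tuples of measured 3-D points.
    """
    d_c = math.dist(commanded_1, commanded_2)
    d_bar = mean(math.dist(p1, p2) for p1, p2 in measured_pairs)
    return d_bar - d_c
```

A positive value of `distance_accuracy` means the robot moved, on average, farther than commanded; a negative value means it fell short.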

Appendix B: Fiducial Markers

Methods of pose detection using fiducial markers are explained in this Appendix. A Software Development Kit (SDK) called ArUco [37] was used; its details are explained next. ArUco is an SDK for generating fiducial markers (Fig. 16a) that are used in augmented reality applications. The markers can be easily detected in an image taken by a camera. If the camera is calibrated beforehand, the pose of the marker with respect to the camera, consisting of its 3-D position coordinates and 3-D orientation, can also be estimated. Either a single marker or an array of markers, called a board of markers, may be used. If an array of markers is used, the pose of the board can still be detected when some of the markers are occluded, provided the configuration of the markers is known. Detection of markers in an image is shown in Fig. 16b.

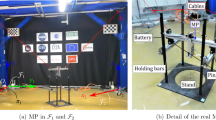

In this work, the camera was mounted on a tripod so that the robot’s end-effector was visible. The camera was then calibrated to obtain its internal parameters. A board of ArUco markers was created using the ArUco SDK, and its configuration was saved in a separate file. Given the configuration of the markers and the physical dimension of each marker, the pose of the board of markers can be obtained from any image taken by the camera. The robot was moved along a particular trajectory. At each pose of the robot, an image of the system of markers was grabbed. Later, the images were processed using the ArUco SDK to obtain the pose of the camera. After multiple measurements, the values of repeatability (as defined in Appendix A) were calculated. As discussed earlier in this Appendix, the ArUco SDK can create markers that are easily identified within a given image. They are designed such that each marker has a unique id and can be uniquely detected. A board of markers has multiple such markers arranged on a planar board, which is then printed on paper. Given the sequence in which the markers are arranged on the board and the marker size, the 2-D pose of each marker with respect to the others can be obtained; this is termed the marker configuration. Therefore, given the marker configuration and an input image from a calibrated camera in which any part of the board is visible, the pose of the camera can be estimated.
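The pose of the board with respect to the camera and the pose of the camera with respect to the board are related by a rigid-transform inversion. The following sketch illustrates that relation only; it does not call the ArUco SDK. It assumes a detector has already returned the board pose in the camera frame as a 3×3 rotation matrix and a translation vector, and the function name `invert_pose` is hypothetical.

```python
def invert_pose(rotation, translation):
    """Invert a rigid transform given as a 3x3 rotation and a 3-vector.

    If (rotation, translation) is the board pose in the camera frame,
    the result is the camera pose in the board frame.
    """
    # The inverse of an orthonormal rotation matrix is its transpose.
    r_inv = [[rotation[j][i] for j in range(3)] for i in range(3)]
    # The inverse translation is -R^T t.
    t_inv = [
        -sum(r_inv[i][k] * translation[k] for k in range(3))
        for i in range(3)
    ]
    return r_inv, t_inv


# Example: board rotated 90 degrees about the camera z-axis, offset by (1, 2, 3).
board_rotation = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
board_translation = [1, 2, 3]
camera_rotation, camera_translation = invert_pose(board_rotation, board_translation)
```

Whether the inversion is needed depends on which of the two conventions the pose-estimation routine in use reports.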

An alternative way of utilizing the fiducial markers is to use a Marker map [38]. In a Marker map, the markers need not be pasted on the same planar surface; they can be pasted at arbitrary orientations. An image containing any two given markers provides the pose of one with respect to the other. Assume there are multiple markers, e.g., m1, m2, ..., mn. If multiple images are available containing markers m1 and m2, then m2 and m3, and so on, it is possible to compute the pose of marker m2 with respect to the other ones. Similarly, if the whole grid of markers is imaged in this fashion, the mutual pose of each marker with respect to the others is also determined. An application of pose measurement of industrial robots using fiducial markers can be found in [11, 34].
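The chaining of pairwise marker poses can be illustrated with homogeneous transforms. The sketch below is a simplified illustration and not the Marker map algorithm of [38], which also has to deal with measurement noise. It assumes each pairwise pose is available as a 4×4 homogeneous matrix; the names `compose`, `t_m1_m2` and `t_m2_m3` are hypothetical.

```python
def compose(t_ab, t_bc):
    """Return T_ac = T_ab * T_bc for 4x4 homogeneous transforms (nested lists)."""
    return [
        [sum(t_ab[i][k] * t_bc[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


# Pose of m2 in the frame of m1: rotated 90 degrees about z, shifted 1 unit along x.
t_m1_m2 = [
    [0, -1, 0, 1],
    [1, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
# Pose of m3 in the frame of m2: shifted 2 units along the x-axis of m2.
t_m2_m3 = [
    [1, 0, 0, 2],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
# Pose of m3 in the frame of m1, although m1 and m3 never share an image.
t_m1_m3 = compose(t_m1_m2, t_m2_m3)
```

Repeating the composition along a chain of overlapping image pairs expresses every marker in the frame of a single reference marker.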

Cite this article

Boby, R.A., Maloletov, A. & Klimchik, A. Measurement of End-effector Pose Errors and the Cable Profile of Cable-Driven Robot using Monocular Camera. J Intell Robot Syst 103, 32 (2021). https://doi.org/10.1007/s10846-021-01486-z

DOI: https://doi.org/10.1007/s10846-021-01486-z