Abstract

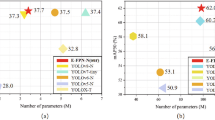

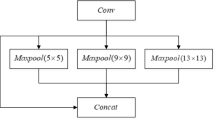

Object detection based on visual images provides significant technical support for the autonomous environment perception of unmanned surface vehicles (USVs), yet object detection on the sea surface remains difficult because of constantly changing weather conditions, considerable changes in the scale of objects, and severe object shaking. In this paper, a USV object detection algorithm is proposed based on an optimized feature fusion network. YOLOv4, currently one of the object detection models with the best trade-off between speed and accuracy, was selected as the baseline. In the proposed weighted Cross Stage Partial Path Aggregation Network (wCSPPAN) structure, the concept of the Cross Stage Partial Network (CSPNet) was applied to the feature fusion network so as to significantly reduce the amount of computation. In addition, learnable weights were introduced so that input features of different resolutions are fused in a more reasonable way. Finally, to obtain cross-space and cross-scale feature interactions, an attempt was made to integrate the Feature Pyramid Transformer (FPT), a universal visual component based on the transformer mechanism, into the YOLOv4 model. The FPT was used to transform the feature pyramid into another feature pyramid of the same size with richer contextual information at each level of the feature map. The performance of the proposed method was compared with that of other advanced object detection algorithms. Experiments conducted on a dataset of sea surface buoys, which was collected and produced independently for the present study, and on the open source Singapore Maritime Dataset verify that the proposed method achieved good results in the detection of sea objects of different scales under various weather conditions. In particular, the detection of long-distance small objects under low-visibility foggy conditions and other extreme conditions was improved, and real-time sea surface object detection was achieved with an inference speed of up to 36 FPS on a single RTX 2080Ti. Further, the detection results on the COCO dataset and the KITTI dataset verify the excellent generalization ability of the proposed object detection model.
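The learnable fusion weights mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state how the weights are normalized, so the code below assumes a ReLU-clipped, sum-normalized weighting in the style of EfficientDet's fast normalized fusion; the function name `fuse_features`, the `eps` value, and the toy feature maps are hypothetical and are not taken from the authors' implementation. The inputs are assumed to have already been resized to a common resolution.

```python
def fuse_features(feature_maps, raw_weights, eps=1e-4):
    """Blend same-shaped 2D feature maps with non-negative, normalized weights.

    Assumption: ReLU-clipped weights divided by their sum (plus eps), as in
    fast normalized fusion. The paper's exact weighting may differ.
    """
    if len(feature_maps) != len(raw_weights):
        raise ValueError("need one weight per feature map")
    # ReLU keeps each weight non-negative; in training these would be learned.
    clipped = [max(0.0, w) for w in raw_weights]
    total = sum(clipped) + eps  # eps avoids division by zero
    norm = [w / total for w in clipped]
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    # Weighted sum of the inputs at every spatial position.
    fused = [
        [sum(n * fm[r][c] for n, fm in zip(norm, feature_maps)) for c in range(cols)]
        for r in range(rows)
    ]
    return fused, norm


# Two hypothetical 2x2 maps, already resized to a common resolution.
shallow = [[1.0, 2.0], [3.0, 4.0]]
deep = [[10.0, 20.0], [30.0, 40.0]]
fused, weights = fuse_features([shallow, deep], raw_weights=[3.0, 1.0])
```

With raw weights of 3 and 1, the shallow map contributes roughly three quarters of each fused value. A negative raw weight is clipped to zero, so that input is ignored rather than subtracted.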

Availability of Data and Materials

The open source Singapore Maritime Dataset is available for download at: https://sites.google.com/site/dilipprasad/home/singapore-maritime-dataset.

Code Availability

Our code is currently not open source.

Funding

This work was supported by the Equipment Pre-research Key Laboratory Fund (6142215190207). The authors would like to express their gratitude to all their colleagues at the National Key Laboratory of Science and Technology on Autonomous Underwater Vehicle, Harbin Engineering University.

Author information

Contributions

Xiaoqiang Sun: implementation and execution of the deep learning experiments; writing of the manuscript.

Tao Liu: development of the dataset; assistance in writing the manuscript.

Xiuping Yu: theoretical support on the idea; revising the manuscript.

Bo Pang: theoretical support on the idea; revising the manuscript.

Ethics declarations

Ethics Approval

All procedures performed in this study were in accordance with the ethical standards of the institution and the National Research Council. Approval was obtained from the ethics committee of Harbin Engineering University.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

The authors affirm that research participants provided informed consent for publication of their data and photographs.

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Sun, X., Liu, T., Yu, X. et al. Unmanned Surface Vessel Visual Object Detection Under All-Weather Conditions with Optimized Feature Fusion Network in YOLOv4. J Intell Robot Syst 103, 55 (2021). https://doi.org/10.1007/s10846-021-01499-8

DOI: https://doi.org/10.1007/s10846-021-01499-8