Abstract

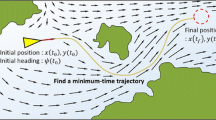

Path tracking has a significant impact on the safety, energy consumption, and efficiency of long-term autonomous underwater vehicle (AUV) missions. However, it is a challenging problem because of model uncertainty and ocean current disturbances. Moreover, the widely used line-of-sight (LOS) algorithm with a fixed lookahead distance does not perform well, which creates an urgent need for automatic adjustment of this parameter. Considering the above, this study proposes an adaptive line-of-sight (ALOS) guidance method with reinforcement learning (RL) based on a dynamic data-driven AUV model (DDDAM). First, we introduce a detailed AUV dynamic model, covering the cases with and without current influence. Next, based on this model, we analyze in detail the path tracking error dynamics and the factors that influence tracking performance. We then use the DDDAM, built on a long short-term memory (LSTM) neural network, to pre-train the RL framework, generating additional samples for online learning in order to speed up the learning process. Finally, a deterministic policy gradient (DPG) based RL agent is designed to optimize the continuously varying lookahead distance, taking the previously analyzed factors into account. Simulation cases and an evaluation of the algorithm are presented. Our results indicate that the proposed method significantly improves path tracking performance and is both effective and robust.
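
To make the role of the lookahead distance concrete, the following is a minimal sketch of the textbook lookahead-based LOS law for a straight path segment, not the ALOS method of this paper. The function names, the straight-line path, and the sign convention for the cross-track error are assumptions chosen for illustration; the RL-based adaptation of the lookahead distance is not shown.

```python
import math


def cross_track_error(x, y, x_k, y_k, gamma_p):
    """Signed cross-track error of the vehicle at (x, y) relative to a
    straight path through (x_k, y_k) with path angle gamma_p (radians).
    Assumed convention: e = -(x - x_k) sin(gamma_p) + (y - y_k) cos(gamma_p)."""
    return -(x - x_k) * math.sin(gamma_p) + (y - y_k) * math.cos(gamma_p)


def los_heading(e, gamma_p, lookahead):
    """Desired heading from the lookahead-based LOS law:
    psi_d = gamma_p + atan(-e / lookahead), with lookahead > 0."""
    if lookahead <= 0:
        raise ValueError("lookahead distance must be positive")
    return gamma_p + math.atan2(-e, lookahead)


if __name__ == "__main__":
    # Hypothetical scenario: path along the x-axis, vehicle offset by 5 m.
    e = cross_track_error(0.0, 5.0, 0.0, 0.0, 0.0)
    for delta in (5.0, 50.0):
        psi_d = los_heading(e, 0.0, delta)
        print(f"lookahead = {delta:5.1f} m -> psi_d = {math.degrees(psi_d):7.2f} deg")
```

For the same cross-track error, a short lookahead distance commands a sharp turn toward the path, while a long one commands a gentle correction. A fixed value therefore trades convergence speed against overshoot, which is the parameter the abstract proposes to adjust automatically.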

Data Availability

The data sets supporting the results of this article are included in the article.

Acknowledgements

The work is partially supported by the National Key Research and Development Program of China (Project No. 2016YFC0301400), the National Natural Science Foundation of China (Project No. 51379198), the National Natural Science Foundation of China (under grant No. 51809246), the National Natural Science Foundation of Shandong Province (under grant No. ZR2018QF003) and the Fundamental Research Funds for the Central Universities (Project No. 201961005).

Author information

Contributions

Dianrui Wang: Methodology, Resources, Software, Writing - Original Draft, Writing - Review & Editing; Bo He: Conceptualization, Investigation, Supervision, Project Administration, Funding Acquisition; Yue Shen: Project Administration; Guangliang Li: Writing - Review & Editing; Guanzhong Chen: Data Curation.

Ethics declarations

Consent for Publication

Consent for publication was obtained from all participants.

Competing interests

The authors declare that they have no competing financial interests.

Additional information

Consent to Participate

We confirm that the manuscript has been read and approved by all named authors. We further confirm that the order of authors listed in the manuscript has been approved by all of us.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wang, D., He, B., Shen, Y. et al. A Modified ALOS Method of Path Tracking for AUVs with Reinforcement Learning Accelerated by Dynamic Data-Driven AUV Model. J Intell Robot Syst 104, 49 (2022). https://doi.org/10.1007/s10846-021-01504-0
