Abstract

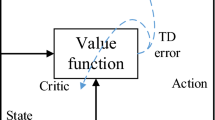

In reinforcement learning navigation, agent exploration driven by intrinsic rewards is subject to three kinds of uncertainty in partially observable environments: observation uncertainty, action uncertainty, and neural network prediction uncertainty. These uncertainties cause exploration to stagnate, make strategies random, and produce unreasonable intrinsic rewards. This study proposes a spatial consciousness model of intrinsic rewards (SC-modelX) for agent navigation in partially observable environments. In this method, the spatial consciousness state generator both remembers the agent's historical observations and regresses the agent's current spatial position. In similar scenes that are difficult to tell apart, these two characteristics suppress observation uncertainty and action uncertainty, respectively. A suppress-uncertainty model is proposed to strengthen the input–output relationship of the intrinsic rewards and thereby suppress the influence of neural network prediction uncertainty. The experimental results demonstrate that the spatial consciousness model of intrinsic rewards can effectively evaluate the novelty of observations and improve exploration efficiency, strategy performance, and convergence speed. The three uncertainties identified in this paper are complementary in agent exploration in partially observable environments, and SC-modelX can effectively improve the agent's exploration ability.
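The effect of scene aliasing on a novelty-based intrinsic reward can be illustrated with a small sketch. This is not SC-modelX: it uses a generic count-based bonus, 1/sqrt(N), and the class name `CountNovelty`, the toy trajectory, and the use of a known grid position in place of a regressed one are all assumptions made for illustration only.

```python
from collections import Counter
from math import sqrt


class CountNovelty:
    """Generic count-based novelty bonus: r_int = 1 / sqrt(N(key)).

    Hypothetical helper for illustration; not the paper's model.
    """

    def __init__(self):
        self.counts = Counter()

    def reward(self, key):
        # Increment the visit count for this key, then return the bonus.
        self.counts[key] += 1
        return 1.0 / sqrt(self.counts[key])


# Hypothetical trajectory of (local observation, grid position) pairs.
# Two different corridor cells look identical to the agent.
trajectory = [
    ("corridor", (0, 0)),
    ("corridor", (0, 5)),
    ("door", (0, 6)),
    ("corridor", (0, 0)),
]

obs_only = CountNovelty()      # novelty keyed on the observation alone
obs_and_pos = CountNovelty()   # novelty keyed on observation plus position

rewards_obs = [obs_only.reward(obs) for obs, _ in trajectory]
rewards_pos = [obs_and_pos.reward((obs, pos)) for obs, pos in trajectory]

for step, (r_o, r_p) in enumerate(zip(rewards_obs, rewards_pos)):
    print(f"step {step}: obs-only={r_o:.3f}  obs+pos={r_p:.3f}")
```

At step 1 the agent reaches a corridor cell it has never visited. The observation-only bonus already decays there because the cell looks like the first one, whereas the bonus keyed on observation plus position still treats it as new and decays only on the genuine revisit at step 3.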

Data availability

Not applicable.

Funding

This work was supported by the National Natural Science Foundation of China (Key Program) (Grant number 51935005). Author Peng Liu received research support from this grant.

This work was supported by the Basic Scientific Research Projects of China (Grant number JCKY20200603C010). Author Peng Liu received research support from this grant.

This work was supported by the Science and Technology Program Projects of Heilongjiang Province, China (Grant number GA21C031). Author Ye Jin received research support from this grant.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Zhenghongyuan Ni, Ye Jin, Peng Liu, and Wei Zhao. The first draft of the manuscript was written by Zhenghongyuan Ni, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing Interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ni, Z., Jin, Y., Liu, P. et al. Spatial Consciousness Model of Intrinsic Reward in Partially Observable Environments. J Intell Robot Syst 106, 71 (2022). https://doi.org/10.1007/s10846-022-01771-5

DOI: https://doi.org/10.1007/s10846-022-01771-5