Abstract

We present a closed-form solution to the registration of fully overlapping 3D point clouds related by unknown rigid transformations, as well as to the detection and registration of sub-parts undergoing unknown rigid transformations. The solution is obtained by adapting the general framework of the universal manifold embedding (UME) to the special case where it is known a priori that the transformations the object may undergo are rigid. The UME nonlinearly maps functions related by certain types of geometric transformations of coordinates to the same linear subspace of some Euclidean space, while retaining the information required to recover the transformation. Therefore, registration, matching and classification can be solved as linear problems in a low-dimensional linear space. While a variety of methods exist for point cloud registration, the proposed method is notably different in that registration is achieved by a closed-form solution that employs the low-dimensional UME representation of the shapes to be registered.
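To make the idea of a representation that changes linearly under rigid motion concrete, here is a toy NumPy sketch. It is not the paper's UME construction: it assumes a rigid-invariant function (the distance of each point to the cloud centroid), uses indicator functions of its level sets (with hypothetical bin edges) in place of the nonlinear functions, and records the zeroth and first moments of each level set. For a fully overlapping cloud moved by \(\mathbf{x}\mapsto\mathbf{R}\mathbf{x}+\mathbf{t}\), the counts are unchanged and the first moments satisfy \(\mathbf{s}'_k=\mathbf{R}\mathbf{s}_k+m_k\mathbf{t}\). The helper names `random_rotation` and `level_set_moments` are illustrative only.

```python
import numpy as np

def random_rotation(rng):
    """Random 3x3 rotation from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))      # make the decomposition unique
    if np.linalg.det(q) < 0:         # flip one axis to exclude reflections
        q[:, 0] = -q[:, 0]
    return q

def level_set_moments(points, edges):
    """Toy moment representation (hypothetical, not the paper's definition).

    h(x) = distance to the cloud centroid is invariant to rigid motions;
    the indicator of each bin of h plays the role of a nonlinear function w_k.
    Returns zeroth moments m_k (counts) and first moments s_k (coordinate sums).
    """
    h = np.linalg.norm(points - points.mean(axis=0), axis=1)
    labels = np.digitize(h, edges)           # level-set index of each point
    k = len(edges) + 1
    m = np.bincount(labels, minlength=k).astype(float)
    s = np.zeros((k, 3))
    np.add.at(s, labels, points)             # per-level-set sum of coordinates
    return m, s

rng = np.random.default_rng(0)
X = rng.standard_normal((2000, 3))           # source cloud
R = random_rotation(rng)
t = np.array([1.0, -2.0, 0.5])
Y = X @ R.T + t                              # fully overlapping, rigidly moved copy
edges = np.linspace(0.5, 2.5, 9)             # hypothetical level-set boundaries

m_x, s_x = level_set_moments(X, edges)
m_y, s_y = level_set_moments(Y, edges)
print(np.array_equal(m_x, m_y))                           # counts are invariant
print(np.allclose(s_y, s_x @ R.T + m_x[:, None] * t))     # s' = R s + m t
```

Dividing each \(\mathbf{s}_k\) by \(m_k\) (for nonempty level sets) yields points that move rigidly with the cloud, so in this toy setting a closed-form weighted least-squares fit of those points recovers \(\mathbf{R}\) and \(\mathbf{t}\).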

Acknowledgements

This work is based on data services provided by the OpenTopography Facility with support from the National Science Foundation under NSF Award Numbers 1557484, 1557319, and 1557330.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research is supported by NSF-BSF Computing and Communication Foundations (CCF) grants, CCF-2016667 and BSF-2016667.

Appendices

Proof of Proposition 1

Proof

First, notice that

Adding \(\int \limits _{X_{miss}^{(i)}}\mathbf{x}' d\mathbf{x}\) to, and subtracting it from, (56), we obtain

Hence,

\( \square \)

Mean UME Error Matrix Detailed Derivation

Following Definition 1, we note that

and similarly

Therefore,

Similarly, it is found that

Substituting (62) and (63) into (32) and taking the expectation, we obtain

Proof of Proposition 2

Proof

The proof is a two-step procedure. In the first step, the rotation between the two sets of points is found by minimizing the sum of squared errors independently of the translation, showing that \(E({\hat{\mathbf{R}}}) = {\bar{\mathbf{R}}}\). Next, using the result for the rotation, it is shown that \(E({\hat{\mathbf{t}}}) ={\bar{\mathbf{t}}}\). Define \(\widetilde{\mathbf{h}}^c_i\) and \(\mathbf{g}^c_i\) as in (19). From (21) and (22), the solutions for \({\hat{\mathbf{R}}}\) and \({\bar{\mathbf{R}}}\) in (41) and (42) can be written as

Thus, the estimated rotation is determined by the centered UME matrices and hence can be obtained independently of the estimated translation. In addition, (22) implies that the translation is selected such that the estimation errors of (41) and (42) are completely determined by (65) and (66). Define the estimation errors

Taking the expectation of both sides of (67) and substituting (67) into (68), we have

Taking the \(m_i^2\) weighted average of (69), we have

Since the solution is obtained using the weighted-and-centered versions \(\widetilde{\mathbf{h}}_{i}^c, {\mathbf{g}^c}_{i}\), it is easily shown from the definition of the centering process in (19) that \(\frac{\sum _{i=1}^P m_i^2\mathbf{e}_{res,i}}{\sum _{i=1}^P m_i^2}=0\) and \(\frac{\sum _{i=1}^P m_i^2{\bar{\mathbf{e}}}_{res,i}}{\sum _{i=1}^P m_i^2}=0\). Thus, (70) becomes

Assuming that the \(\mathbf{g}_i^c\) are not all zero, we obtain

As for the translation, let us evaluate the mean translation from (41) by substituting (22):

where the last equality follows from the definition in (42). \(\square \)
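The two-step structure of the proof (rotation from centered vectors first, then translation from the rotation) mirrors the classical SVD-based (Kabsch-style) weighted least-squares fit of two point sets. The NumPy sketch below illustrates that structure under stated assumptions: the paired columns are treated as 3D vectors \(\mathbf{h}_i,\mathbf{g}_i\) with weights standing in for \(m_i^2\), the centering of (19) is assumed to subtract the weighted mean, and \(\mathbf{e}_{res,i}\) is assumed to be the centered residual \(\mathbf{g}_i^c-\hat{\mathbf{R}}\widetilde{\mathbf{h}}_i^c\). The function names `weighted_center` and `weighted_rigid_fit` are hypothetical; this is an illustration, not the paper's estimator (41).

```python
import numpy as np

def weighted_center(v, w):
    """Subtract the w-weighted mean from each row of v (assumed form of the centering)."""
    return v - (w @ v) / w.sum()

def weighted_rigid_fit(h, g, w):
    """Closed-form weighted least-squares fit g_i ~ R h_i + t via SVD (Kabsch-style).

    h, g: (P, 3) paired vectors; w: (P,) positive weights (stand-ins for m_i^2).
    """
    hc, gc = weighted_center(h, w), weighted_center(g, w)
    # Step 1: the rotation depends only on the centered vectors
    C = (w[:, None] * gc).T @ hc                              # sum_i w_i gc_i hc_i^T
    U, _, Vt = np.linalg.svd(C)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])  # enforce det(R) = +1
    R = U @ D @ Vt
    # Step 2: the translation follows from the rotation and the weighted means
    t = (w @ g) / w.sum() - R @ ((w @ h) / w.sum())
    return R, t

rng = np.random.default_rng(0)
P = 8
w = rng.uniform(0.5, 3.0, size=P)
h = rng.standard_normal((P, 3))
q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
R_true = q if np.linalg.det(q) > 0 else -q
t_true = np.array([1.0, -2.0, 0.5])

# Noise-free pairs: the closed form recovers the exact transformation
g = h @ R_true.T + t_true
R_est, t_est = weighted_rigid_fit(h, g, w)
print(np.allclose(R_est, R_true), np.allclose(t_est, t_true))

# Shifting g changes only the translation estimate, not the rotation
R_shift, _ = weighted_rigid_fit(h, g + 5.0, w)
print(np.allclose(R_shift, R_est))

# With noise, the w-weighted mean of the centered residuals is numerically zero
g_noisy = g + 0.05 * rng.standard_normal((P, 3))
R_n, _ = weighted_rigid_fit(h, g_noisy, w)
e_res = weighted_center(g_noisy, w) - weighted_center(h, w) @ R_n.T
print(np.abs(w @ e_res / w.sum()).max())
```

The last check holds for any rotation, not only the fitted one: because the centered vectors have zero weighted mean, the weighted mean of any linear residual built from them vanishes, which is the property used to simplify (70).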

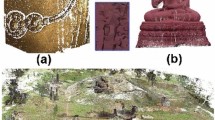

Real Data Registration Results

About this article

Cite this article

Efraim, A., Francos, J.M. Estimating Rigid Transformations of Noisy Point Clouds Using the Universal Manifold Embedding. J Math Imaging Vis 64, 343–363 (2022). https://doi.org/10.1007/s10851-022-01070-6
