Abstract

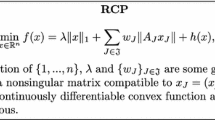

We consider a class of nonsmooth convex optimization problems in which the objective function is the composition of a strongly convex differentiable function with a linear mapping, regularized by the group reproducing kernel norm. This class of problems arises naturally in applications of the group Lasso, a popular technique for variable selection. An effective approach to solving such problems is the proximal gradient method. In this paper, we derive efficient algorithms for this class of convex problems, study them theoretically, and analyze the convergence of the algorithm and its subalgorithm.
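
As an illustration of the proximal gradient approach, here is a minimal sketch for the simplest member of this class. It assumes the regularizer has the form \(\sum_J w_J\Vert B_Jx_J\Vert\) suggested by Appendix A, takes \(B_J=I\) for every group, uses the least-squares loss \(h(y)=\frac{1}{2}\Vert y-b\Vert^2\), and uses a fixed step \(1/L\) with \(L=\Vert A\Vert_2^2\). Under these assumptions the proximal step reduces to group soft-thresholding. This is not the authors' algorithm or subalgorithm, and the function names are hypothetical.

```python
import numpy as np


def group_soft_threshold(v, tau):
    """Prox of tau * ||.||_2 for one group: shrink v toward 0 by tau in norm."""
    nrm = np.linalg.norm(v)
    if nrm <= tau:
        return np.zeros_like(v)
    return (1.0 - tau / nrm) * v


def prox_group_norm(v, groups, weights, t):
    """Prox of t * sum_J w_J * ||v_J||_2 (B_J = I assumed; groups must partition the indices)."""
    out = np.empty_like(v)
    for J, w in zip(groups, weights):
        out[J] = group_soft_threshold(v[J], t * w)
    return out


def proximal_gradient(A, b, groups, weights, iters=500):
    """Minimize 0.5*||Ax - b||^2 + sum_J w_J ||x_J|| by fixed-step proximal gradient."""
    L = np.linalg.norm(A, 2) ** 2        # Lipschitz constant of x -> A^T(Ax - b)
    t = 1.0 / L                          # fixed step size 1/L
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        grad = A.T @ (A @ x - b)         # gradient of the smooth part
        x = prox_group_norm(x - t * grad, groups, weights, t)
    return x
```

For example, `proximal_gradient(A, b, groups, weights)` with `groups` a list of disjoint index arrays returns an approximate minimizer in which some groups may be exactly zero, because the group soft-threshold returns an exact zero vector below the threshold.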

References

Bach, F.: Consistency of the group Lasso and multiple kernel learning. J. Mach. Learn. Res. 9, 1179–1225 (2008)

Bakin, S.: Adaptive Regression and Model Selection in Data Mining Problems. PhD Thesis. Australian National University, Canberra (1999)

Boikanyo, O.A., Morosanu, G.: Four parameter proximal point algorithms. Nonlinear Anal. 74, 544–555 (2011)

Boikanyo, O.A., Morosanu, G.: Inexact Halpern-type proximal point algorithm. J. Glob. Optim. 51, 11–26 (2011). doi:10.1007/s10898-010-9616-7

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. Ser. A 127, 245–295 (2011)

Combettes, P.L., Pesquet, J.-C.: Proximal Splitting Methods in Signal Processing. arXiv:0912.3522v4 [math.OC] 18 May (2010)

Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 4, 1168–1200 (2005)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293–318 (1992)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1359 (2001)

Friedman, J., Hastie, T., Tibshirani, R.: A Note on the Group Lasso and a Sparse Group Lasso. arXiv:1001.0736v1 [math.ST] 5 Jan (2010)

Kelley, C.T.: Iterative Methods for Linear and Nonlinear Equations. Society for Industrial and Applied Mathematics, Philadelphia (1995)

Kim, D., Sra, S., Dhillon, I.: A scalable trust-region algorithm with application to mixed-norm regression. In: International Conference on Machine Learning (ICML), p. 1 (2010)

Liu, J., Ji, S., Ye, J.: SLEP: Sparse Learning with Efficient Projections. Arizona State University (2009)

Luo, Z.Q., Tseng, P.: On the linear convergence of descent methods for convex essentially smooth minimization. SIAM J. Control Optim. 30(2), 408–425 (1992)

Ma, S., Song, X., Huang, J.: Supervised group Lasso with applications to microarray data analysis. BMC Bioinform. 8(1), 60 (2007)

Meier, L., van de Geer, S., Bühlmann, P.: The group Lasso for logistic regression. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 70(1), 53–71 (2008)

Nesterov, Y.: Introductory Lectures on Convex Optimization. Kluwer, Boston (2004)

Calamai, P.H., Moré, J.J.: Projected gradient methods for linearly constrained problems. Math. Program. 39, 93–116 (1987)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Rockafellar, R.T., Wets, R.J.B.: Variational Analysis. Springer, New York (1998)

Roth, V., Fischer, B.: The group-Lasso for generalized linear models: uniqueness of solutions and efficient algorithms. In: Proceedings of the 25th International Conference on Machine Learning, pp. 848–855, ACM (2008)

Tibshirani, R.: Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B 58, 267–288 (1996)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117, 387–423 (2009)

Tseng, P.: Approximation accuracy, gradient methods, and error bound for structured convex optimization. Math. Program. 125(2), 263–295 (2010)

Van den Berg, E., Schmidt, M., Friedlander, M., Murphy, K.: Group Sparsity Via Linear-Time Projection. Technical Report TR-2008-09, Department of Computer Science, University of British Columbia (2008)

Wright, S., Nowak, R., Figueiredo, M.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

Yang, H., Xu, Z., King, I., Lyu, M.: Online learning for group Lasso. In: 27th International Conference on Machine Learning (2010)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 68(1), 49–67 (2006)

Zheng, H., Chen, S., Mo, Z., Huang, X.: Numerical Computation (in Chinese). Wuhan University Press, Wuhan (2004)

Zou, H., Hastie, T.: Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 67(2), 301–320 (2005)

Zou, H.: The adaptive Lasso and its oracle properties. J. Am. Stat. Assoc. 101(476), 1418–1429 (2006)

Acknowledgments

This work was supported by the National Science Foundation of China, Grant No. 61179033, and by the National Science Foundation, Grant No. DMS-1015346. Part of this work was performed during a research visit by the first author to the University of Minnesota, and we would like to thank Professor Zhi-Quan Luo. We also thank the reviewer for his valuable suggestions and the editor for his helpful assistance.

Appendices

Appendix A: Proof of Theorem 2

By Lemma 2, since \(f_2\) is given by (11), it follows that

so that \(\bar{X}\) is bounded (since \(w_{J}>0\) for all \(J\in \mathcal{J}\)), as well as closed and convex. Since \(F\) is convex and \(\bar{X}\) is bounded, it follows from [19, Theorem 8.7] that (36) holds. Next, we prove the error bound (EB) condition (32).

We argue by contradiction. Suppose there exists \(\zeta \ge \min F\) such that (32) fails to hold for every \(\kappa >0\) and \(\epsilon >0\). Then there exists a sequence \(x^{1},x^{2},\ldots\) in \(\mathfrak{R}^{n}\setminus \bar{X}\) satisfying

where for simplicity we let \(r^{k}:=r(x^k), \delta _{k}:=\Vert Bx^k-B\bar{x}^k\Vert \), and \(\bar{x}^k:=\arg \min _{s\in \bar{X}}\Vert x^{k}-s\Vert \). Let

By (35) and (38), \(A\bar{x}^k=\bar{y}\) and \(\nabla f_2(\bar{x}^k)=\bar{g}\) for all \(k\).

By (36) and (37), \(\{x^k\}\) is bounded. By further passing to a subsequence if necessary, we can assume that \(\{x^k\}\rightarrow \bar{x}\) for some \(\bar{x}\). Since \(\{r(x^k)\}=\{r^k\}\rightarrow 0\) and \(r\) is continuous by Lemma 1, we have \(r(\bar{x})=0\), so \(\bar{x}\in \bar{X}\). Hence \(\Vert x^k-\bar{x}^k\Vert \le \Vert x^k-\bar{x}\Vert \rightarrow 0\) (since \(\bar{x}^k\) is the point of \(\bar{X}\) nearest to \(x^k\) and \(\bar{x}\in \bar{X}\)), and \(\delta _k\le \Vert B\Vert \Vert x^k-\bar{x}^k\Vert \rightarrow 0\) as \(k\rightarrow \infty\). Moreover, \(\Vert \bar{x}^k-\bar{x}\Vert \le \Vert \bar{x}^k-x^k\Vert +\Vert x^k-\bar{x}\Vert \rightarrow 0\), so \(\{\bar{x}^k\}\rightarrow \bar{x}\). Also, by (35) and (38), \(\{g^k\}\rightarrow \nabla f_2(\bar{x})= \bar{g}\).

Since \(f_1(x^k)\ge 0\), (11) implies \(h(Ax^k)=F(x^k)-f_1(x^k)\le F(x^k)\le \zeta\) for all \(k\). Since \(h(y)\rightarrow \infty\) whenever \(\Vert y\Vert \rightarrow \infty\), the sequence \(\{Ax^k\}\) is bounded, so \(\{Ax^k\}\) and \(\bar{y}\) lie in some compact convex subset \(Y\) of \(\mathfrak{R}^{m}\). By our assumption on \(h\), \(h\) is strongly convex and \(\nabla h\) is Lipschitz continuous on \(Y\), so, in particular,

for some \(0<\mu \le L\).
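
The matrix \(B\) introduced next is assembled from the nonsingular blocks \(B_J\). To make that construction concrete, the sketch below places each block \(B_J\) directly on the rows and columns indexed by \(J\), which amounts to applying one common permutation to the rows and columns of \(\bar{B}\). It assumes the groups partition \(\{0,\ldots,N-1\}\) (0-based indices, as in Python); `assemble_B` is a hypothetical helper, not taken from the paper.

```python
import numpy as np


def assemble_B(blocks, groups, n):
    """Place each block B_J on rows and columns J of an n x n zero matrix.

    Assumes the index arrays in `groups` partition {0, ..., n-1}. The result is
    the block diagonal matrix with blocks B_J after one common permutation of
    its rows and columns, so that (B @ x)[J] == B_J @ x[J] for every group J.
    """
    B = np.zeros((n, n))
    for BJ, J in zip(blocks, groups):
        B[np.ix_(J, J)] = BJ
    return B
```

Because the same permutation acts on rows and columns, \(\det B=\prod_J\det B_J\), and \(B^{-1}\) can be assembled in the same way from the blocks \(B_J^{-1}\); both facts give a quick check of the nonsingularity claim.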

Let \(\bar{B}\) denote the \(N\times N\) block diagonal matrix whose diagonal blocks are \(B_J\), \(J\in \mathcal{J}\), and let \(B\) be the matrix obtained from \(\bar{B}\) by permuting its rows and columns so that \((Bx)_J=B_Jx_J\) for all \(J\in \mathcal{J}\). Since every \(B_J\) (\(J\in \mathcal{J}\)) is nonsingular, \(\bar{B}\) and \(B\) are both nonsingular. We claim that there exists \(\kappa > 0\) such that

We prove this by contradiction. Suppose the claim is false. Then, by passing to a subsequence if necessary, we can assume that

Since \(\bar{y}=A\bar{x}^k\), this is equivalent to \(\{Au^k\}\rightarrow 0\), where we let

Then \(\Vert Bu^k\Vert = 1\) for all \(k\). By further passing to a subsequence if necessary, we assume that \(\{Bu^k\}\rightarrow B\bar{u}\) for some \(\bar{u}\); since \(B\) is nonsingular, this also gives \(\{u^k\}\rightarrow \bar{u}\). Then \(A\bar{u}=0\) and \(\Vert B\bar{u}\Vert =1\). Moreover,

Since \(Ax^k\) and \(\bar{y}\) are in \(Y\), the Lipschitz continuity of \(\nabla h\) on \(Y\) [see (39) and (38)] yields

By further passing to a subsequence if necessary, we can assume that, for each \(J\in \mathcal{J}\), one of the following holds: (a) \(\Vert B_J^{-T}(x^k_J-g^k_J)\Vert \le w_{J}\) for all \(k\); (b) \(\Vert B_J^{-T}(x^k_J-g^k_J)\Vert >w_{J}\) and \(\bar{x}^k_J\ne 0\) for all \(k\); or (c) \(\Vert B_J^{-T}(x^k_J-g^k_J)\Vert >w_{J}\) and \(\bar{x}^k_J=0\) for all \(k\).
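
The threshold test separating case (a) from cases (b) and (c) has a simple interpretation: \(\Vert B_J^{-T}v\Vert \le w_J\) holds exactly when \(z=0\) minimizes \(\frac{1}{2}\Vert z-v\Vert^2+w_J\Vert B_Jz\Vert\), because \(0\in -v+w_JB_J^T\partial\Vert 0\Vert\) means \(v=w_JB_J^Ts\) for some \(\Vert s\Vert\le 1\). Reading Lemma 1 as saying that \(r\) is the residual of such a unit-step proximal map evaluated at \(v=x_J-g_J\) is our assumption, suggested by the conclusion \(r^k_J=-x^k_J\) in case (a). The helpers below are hypothetical and only illustrate the test.

```python
import numpy as np


def prox_is_zero(v, BJ, w):
    """True iff z = 0 minimizes 0.5*||z - v||^2 + w*||BJ z||, i.e. ||BJ^{-T} v|| <= w."""
    s = np.linalg.solve(BJ.T, v)          # s = BJ^{-T} v (BJ assumed nonsingular)
    return bool(np.linalg.norm(s) <= w)


def group_objective(z, v, BJ, w):
    """The group-J proximal objective 0.5*||z - v||^2 + w*||BJ z|| (unit step)."""
    return 0.5 * np.dot(z - v, z - v) + w * np.linalg.norm(BJ @ z)
```

With `v = x_J - g_J`, a result of `True` from `prox_is_zero(v, B_J, w_J)` corresponds to case (a). When the test fails, moving from \(0\) along \(d=B_J^{-1}B_J^{-T}v\) strictly decreases the objective, so the minimizer is nonzero.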

Case (a). In this case, Lemma 1 implies that \(r^k_J= -x^k_J\) for all \(k\). Since \(\{r^k\}\rightarrow 0\) and \(\{x^k\}\rightarrow \bar{x}\), this implies \(\bar{x}_J= 0\). Also, by (41) and (37), we have

Thus \(\bar{u}_J=-\lim_{k\rightarrow \infty}\frac{\bar{x}^k_J}{\delta _k}\). Suppose \(\bar{u}_J \ne 0\). Then \(\bar{x}^k_J\ne 0\), i.e. \(B_J\bar{x}^k_J \ne 0\), for all \(k\) sufficiently large. Since \(\bar{x}^k\in \bar{X}\), the optimality condition for (11), (12) together with \(\nabla f_2(\bar{x}^k)= \bar{g}\) implies

Since \(\bar{u}_J=-\lim_{k\rightarrow \infty}\frac{\bar{x}^k_J}{\delta _k}\) and \(\bar{u}_J\ne 0\), we have \(\lim_{k\rightarrow \infty}\frac{B^T_JB_J\bar{x}^k_J}{\Vert B_J\bar{x}^k_J\Vert }=\lim_{k\rightarrow \infty} \frac{B^T_JB_J\bar{x}^k_J}{\delta _k}\cdot \frac{\delta _k}{\Vert B_J\bar{x}^k_J\Vert }=-B^T_JB_J\bar{u}_J\cdot \lim_{k\rightarrow \infty}\frac{1}{\Vert B_J\bar{x}^k_J/\delta _k\Vert }=-\frac{B^T_JB_J\bar{u}_J}{\Vert B_J\bar{u}_J\Vert }\), so, passing to the limit in (44), we get

Case (b). Since \(\bar{x}^k\in \bar{X}\) and \(\bar{x}^k_J \ne 0\), the optimality condition for (11), (12) together with \(\nabla f_2(\bar{x}^k)= \bar{g}\) implies that (44) holds for all \(k\). Then Lemma 1 implies

where the third equality uses (41) and (42); the fifth equality uses (44); the sixth equality uses the definition of \(\theta \). Dividing both sides by \(\delta _{k}\) and using (37) yields in the limit

where \(\bar{\theta }:=\lim_{k\rightarrow \infty}\frac{\Vert B_J(x^k_J+r^k_J)\Vert -\Vert B_J\bar{x}^k_J\Vert }{\delta _k}\). Thus \(B^T_JB_J\bar{u}_J\) is a nonzero multiple of \(\bar{g}_J\).

Case (c). In this case, it follows from \(\{\bar{x}^k\}\rightarrow \bar{x}\) that \(\bar{x}_J= 0\). Since \(\Vert B_J^{-T}(x^k_J - g^k_J)\Vert >w_{J}\) for all \(k\), passing to the limit gives \(\Vert B_J^{-T}\bar{g}_J\Vert \ge w_{J}\). Since \(\bar{x}\in \bar{X}\), the optimality condition \(0\in \bar{g}_{J}+ w_{J}B^T_J\partial \Vert 0\Vert\) implies \(\Vert B_J^{-T}\bar{g}_J\Vert \le w_{J}\). Hence \(\Vert B_J^{-T}\bar{g}_J\Vert =w_{J}\). Then, arguing as in (46) and using \(\bar{x}^k_J= 0\), we obtain

Dividing both sides by \(\delta _{k}\), one has

Using (37), (41) and \(\bar{x}^k_J= 0\), we obtain in the limit

Suppose \(\bar{u}_J\ne 0\). Then \(\Vert B_J\bar{u}_J\Vert >0\) since \(B_J\) is nonsingular, so (49) implies that \(B^T_JB_J\bar{u}_J\) is a negative multiple of \(\bar{g}_J\).

Since \(u^k=\frac{x^k-\bar{x}^k}{\delta _{k}}\rightarrow \bar{u}\ne 0\), we have \(\langle u^k,\bar{u}\rangle \rightarrow \Vert \bar{u}\Vert ^2>0\), and hence, as \(\delta _k>0\), \(\langle x^k-\bar{x}^k,\bar{u}\rangle > 0\) for all \(k\) sufficiently large. Fix any such \(k\) and let

with \(\epsilon > 0\). Since \(A\bar{u}= 0\), we have \(\nabla f_2(\hat{x})=A^T\nabla h(A\hat{x})=A^T\nabla h(A\bar{x}^k)= \nabla f_2(\bar{x}^k)=\bar{g}\). We show below that, for \(\epsilon > 0\) sufficiently small, \(\hat{x}\) satisfies

for all \(J\in \mathcal{J }\), and hence \(\hat{x}\in \bar{X}\). Then \(\langle x^k-\bar{x}^k,\bar{u}\rangle > 0\) and \(\Vert B\bar{u}\Vert = 1\) yield

for all \(\epsilon > 0\) sufficiently small, which contradicts \(\bar{x}^k\) being the point in \(\bar{X}\) nearest to \(x^k\), and thus proves (40). For each \(J\in \mathcal{J}\), if \(\bar{u}_J= 0\), then \(\hat{x}_J= \bar{x}^k_J\) and (50) holds automatically (since \(\bar{x}^k\in \bar{X}\)). Suppose that \(\bar{u}_J\ne 0\). We prove (50) below by considering the three cases (a), (b) and (c) introduced above.

Case (a). Since \(\bar{u}_J\ne 0\), (45) shows that \(B^T_JB_J\bar{u}_J\) is a positive multiple of \(\bar{g}_{J}\). Also, by (44), \(B^T_JB_J\bar{x}^k_J\) is a negative multiple of \(\bar{g}_J\); hence \(B^T_JB_J\hat{x}^k_J\) is a negative multiple of \(\bar{g}_J\) for all \(\epsilon > 0\) sufficiently small. Write \(\bar{g}_J+ aB^T_JB_J\hat{x}^k_J =0\) with \(a>0\). Combining this with (44), i.e. \(\bar{g}_J + w_J\frac{B^T_JB_J\bar{x}^k_J}{\Vert B_J\bar{x}^k_J\Vert } = 0\), and cancelling the nonsingular factor \(B^T_J\), we obtain \(aB_J\hat{x}^k_J =w_J\frac{B_J\bar{x}^k_J}{\Vert B_J\bar{x}^k_J\Vert }\), and therefore \(\frac{B_J\hat{x}^k_J}{\Vert B_J\hat{x}^k_J\Vert }= \frac{B_J\bar{x}^k_J}{\Vert B_J\bar{x}^k_J\Vert }\). Thus \(\hat{x}^k_J\) satisfies

Case (b). Since (44) holds, \(B^T_JB_J\bar{x}^k_J\) is a negative multiple of \(\bar{g}_J\). Also, \(B^T_JB_J\bar{u}_J\) is a nonzero multiple of \(\bar{g}_J\). An argument similar to that in case (a) shows that \(\hat{x}^k_J\) satisfies (50) for all \(\epsilon > 0\) sufficiently small.
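
The sign argument of case (a) can be made explicit. The display defining \(\hat{x}\) is missing from this copy; the derivation below assumes \(\hat{x}=\bar{x}^k+\epsilon\bar{u}\), which is consistent with \(\hat{x}_J=\bar{x}^k_J\) whenever \(\bar{u}_J=0\), and the constants \(c_1,c_2\) are introduced for illustration only. It shows how small \(\epsilon\) must be.

```latex
% Assumption: \hat{x} = \bar{x}^k + \epsilon\,\bar{u}; c_1, c_2 are illustrative names.
% From (44): B_J^T B_J \bar{x}^k_J = -c_1\,\bar{g}_J with c_1 = \|B_J\bar{x}^k_J\| / w_J > 0.
% From (45): B_J^T B_J \bar{u}_J = c_2\,\bar{g}_J for some c_2 > 0.
\begin{aligned}
B_J^T B_J \hat{x}^k_J
  &= B_J^T B_J \bar{x}^k_J + \epsilon\, B_J^T B_J \bar{u}_J
   = -(c_1 - \epsilon c_2)\,\bar{g}_J, \\
\bar{g}_J + a\,B_J^T B_J \hat{x}^k_J &= 0
  \quad\text{with}\quad a = \frac{1}{c_1 - \epsilon c_2} > 0
  \quad\text{whenever } 0 < \epsilon < c_1 / c_2 .
\end{aligned}
```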

Case (c). We have \(\bar{x}^k_J= 0\), and \(B^T_JB_J\bar{u}_J\) is a negative multiple of \(\bar{g}_J\). Hence \(B^T_JB_J\hat{x}^k_J\) is a negative multiple of \(\bar{g}_J\) for all \(\epsilon > 0\), and, since \(\Vert B_J^{-T}\bar{g}_J\Vert =w_{J}\) in this case, \(\hat{x}^k_J\) satisfies (50).
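
The last step of case (c) can be checked directly. This assumes, as in the case (a) derivation, that \(\hat{x}=\bar{x}^k+\epsilon\bar{u}\), and further that (50), which is not displayed in this copy, is the group-\(J\) optimality condition of the same form as (44); the constant \(c\) is illustrative.

```latex
% Assumptions: \hat{x} = \bar{x}^k + \epsilon\,\bar{u}; (50) has the form of (44); c > 0 is illustrative.
% Case (c): \bar{x}^k_J = 0, so \hat{x}^k_J = \epsilon\,\bar{u}_J, and B_J^T B_J \bar{u}_J = -c\,\bar{g}_J.
\begin{aligned}
B_J \hat{x}^k_J &= -\epsilon c\, B_J^{-T}\bar{g}_J,
\qquad \|B_J \hat{x}^k_J\| = \epsilon c\, \|B_J^{-T}\bar{g}_J\| = \epsilon c\, w_J, \\
\bar{g}_J + w_J \frac{B_J^T B_J \hat{x}^k_J}{\|B_J \hat{x}^k_J\|}
  &= \bar{g}_J + w_J \frac{-\epsilon c\,\bar{g}_J}{\epsilon c\, w_J} = 0 .
\end{aligned}
```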

Obviously,

is equivalent to (by the corresponding optimality conditions)

Hence

Since \(\bar{x}^k\in \bar{X}\) and \(\nabla f_2(\bar{x}^k)=\bar{g}\), we have similarly that

i.e.

Adding the above two inequalities and simplifying yields

Since \(Ax^k\) and \(A\bar{x}^k=\bar{y}\) are in \(Y\), we also have from (38), (39) and (40) that

Combining these two inequalities and using (39) yields

Thus

Dividing both sides by \(\Vert B(x^k-\bar{x}^k)\Vert \) yields a contradiction to (37).

Appendix B: Proof of Lemma 3

We first recall the following resolvent identity (see Lemma 3 in [3] or Lemma 2 in [4]).

For any \(\beta ,\gamma >0\) and \(x\in \mathfrak{R}^n\), since the operator \(\partial \varphi\) is maximal monotone, the following resolvent identity holds:

Using the resolvent identity (55) and the fact that the resolvent is nonexpansive, we get

So, we have

Removing the absolute value signs, one obtains

Note that when \(0<\alpha \le 1\), \(1+|1-\frac{1}{\alpha }|=\frac{1}{\alpha }\), and when \(\alpha >1\), \(1+|1-\frac{1}{\alpha }|=2-\frac{1}{\alpha }<2\).
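
The case analysis can be checked numerically, together with the bound \(1+|1-\frac{1}{\alpha}|\le \frac{1}{\min\{\alpha,1/2\}}\) that it leads to. Taking this as the content of the next display, which is missing from this copy, is our reading; the function names are hypothetical.

```python
import numpy as np


def growth_factor(alpha):
    """The factor 1 + |1 - 1/alpha| from the proof of Lemma 3."""
    return 1.0 + abs(1.0 - 1.0 / alpha)


def growth_factor_piecewise(alpha):
    """Case analysis: 1/alpha on (0, 1], and 2 - 1/alpha (< 2) on (1, inf)."""
    return 1.0 / alpha if alpha <= 1.0 else 2.0 - 1.0 / alpha


def growth_bound(alpha):
    """Candidate bound 1/alpha_tilde with alpha_tilde = min(alpha, 1/2)."""
    return 1.0 / min(alpha, 0.5)


alphas = np.concatenate([np.linspace(1e-3, 1.0, 500), np.linspace(1.0, 100.0, 500)])
worst_ratio = max(growth_factor(a) / growth_bound(a) for a in alphas)
print(f"max of (1 + |1 - 1/alpha|) * min(alpha, 1/2) on the grid: {worst_ratio:.6f}")
```

The bound is tight for \(\alpha\le\frac{1}{2}\), where both sides equal \(\frac{1}{\alpha}\), and loose for \(\alpha>\frac{1}{2}\), where the right-hand side is \(2\).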

Thus, we have

where \(\tilde{\alpha }=\min \{\alpha , \frac{1}{2}\}\), for all \(\alpha >0\).

This completes the proof of Lemma 3.
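
As a sanity check, the resolvent identity in its standard form \(J_\beta x=J_\gamma\big(\frac{\gamma}{\beta}x+(1-\frac{\gamma}{\beta})J_\beta x\big)\) can be tested numerically, where \(J_\beta:=(I+\beta\,\partial\varphi)^{-1}\) is our notation (the precise parametrization used in (55) is not reproduced in this copy). The identity holds because \(y:=J_\beta x\) satisfies \(x-y\in\beta\,\partial\varphi(y)\), so \(z:=\frac{\gamma}{\beta}x+(1-\frac{\gamma}{\beta})y\) satisfies \(z-y=\frac{\gamma}{\beta}(x-y)\in\gamma\,\partial\varphi(y)\), i.e. \(y=J_\gamma z\). Nonexpansiveness of \(J_\gamma\) then gives \(\Vert J_\beta x-J_\gamma x\Vert\le|1-\frac{\gamma}{\beta}|\,\Vert x-J_\beta x\Vert\). The sketch takes \(\varphi=\Vert\cdot\Vert_1\) purely for illustration, because its resolvent is componentwise soft-thresholding; the function names are hypothetical.

```python
import numpy as np


def resolvent_l1(x, beta):
    """J_beta = (I + beta * d||.||_1)^{-1}: componentwise soft-thresholding at level beta."""
    return np.sign(x) * np.maximum(np.abs(x) - beta, 0.0)


def resolvent_identity_sides(x, beta, gamma):
    """Both sides of J_beta x = J_gamma((gamma/beta) x + (1 - gamma/beta) J_beta x)."""
    jb = resolvent_l1(x, beta)
    z = (gamma / beta) * x + (1.0 - gamma / beta) * jb
    return jb, resolvent_l1(z, gamma)


def resolvent_gap_and_bound(x, beta, gamma):
    """(||J_beta x - J_gamma x||, |1 - gamma/beta| * ||x - J_beta x||); the first should not exceed the second."""
    jb, jg = resolvent_l1(x, beta), resolvent_l1(x, gamma)
    return np.linalg.norm(jb - jg), abs(1.0 - gamma / beta) * np.linalg.norm(x - jb)


rng = np.random.default_rng(0)
x = 3.0 * rng.standard_normal(6)
lhs, rhs = resolvent_identity_sides(x, beta=1.0, gamma=0.4)
gap, bound = resolvent_gap_and_bound(x, beta=1.0, gamma=0.4)
print(np.max(np.abs(lhs - rhs)), gap, bound)
```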

Cite this article

Zhang, H., Wei, J., Li, M. et al. On proximal gradient method for the convex problems regularized with the group reproducing kernel norm. J Glob Optim 58, 169–188 (2014). https://doi.org/10.1007/s10898-013-0034-5

DOI: https://doi.org/10.1007/s10898-013-0034-5