Abstract

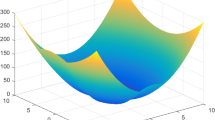

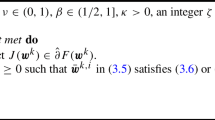

We propose an indefinite proximal subgradient-based algorithm (IPSB) for solving nonsmooth composite optimization problems. IPSB generalizes Nesterov’s dual algorithm by adding an indefinite proximal term to the subproblems; when the proximal operator is chosen judiciously, the subproblems become easier to solve and the algorithm more efficient. Under mild assumptions, we establish sublinear convergence of IPSB to a region of the optimal value. We also report numerical results demonstrating the efficiency of IPSB in comparison with classical dual averaging-type algorithms.

Data availability statements

The authors confirm that all data generated or analysed during this study are included in the paper.

Acknowledgements

The authors thank the editor and the referees for their valuable comments and suggestions, which helped us improve the paper greatly. The research of the second author was partially supported by NSFC under Grant Nos. 12131004 and 12126603, and the research of the third author was partially supported by NSFC under Grant No. 12171021 and by Beijing NSF under Grant No. Z180005.

About this article

Cite this article

Liu, R., Han, D. & Xia, Y. An indefinite proximal subgradient-based algorithm for nonsmooth composite optimization. J Glob Optim 87, 533–550 (2023). https://doi.org/10.1007/s10898-022-01173-9

DOI: https://doi.org/10.1007/s10898-022-01173-9