Abstract

In this paper, we propose an interior stochastic gradient method for bound constrained optimization problems where the objective function is the sum of a smooth expectation of random integrands and a non-Lipshitz \(\ell _p (0<p<1)\) regularizer. We first define an L-stationary point of the problem and show that an L-stationary point is a first-order stationary point and a global minimizer is an L-stationary point. Next we propose a stochastic gradient method to reduce the computational cost for the exact gradients of the smooth term in the objective function and to keep the feasibility of iterates. We show that the proposed stochastic gradient method is convergent to an \(\epsilon \)-approximate L-stationary point in \({\mathcal {O}}(\epsilon ^{-4})\) computational complexity, with optimality measure in \(\ell _2\) norm. Finally, we conduct preliminary numerical experiments to test the proposed method and compare it with some existing method. Preliminary numerical results indicate that the proposed method is promising.

Similar content being viewed by others

Data Availability

All used data sets in Figs. 5-7 were downloaded from the website https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/.

References

Beck, A., Eldar, Y.C.: Sparsity constrained nonlinear optimization: optimality conditions and algorithms. SIAM J. Optim. 23, 1480–1509 (2013)

Bian, W., Chen, X.: Optimality and complexity for constrained optimization problems with nonconvex regularization. Math. Oper. Res. 42, 1063–1084 (2017)

Bian, W., Chen, X., Ye, Y.Y.: Complexity analysis of interior point algorithms for non-Lipschitz and nonconvex minimization. Math. Program. 149, 301–327 (2015)

Candès, E., Wakin, M., Boyd, S.: Enhancing sparsity by reweighted \(\ell _1\) minimization. J. Fourier Anal. Appl. 14, 877–905 (2008)

Chen, X., Ge, D., Wang, Z., Ye, Y.: Complexity of unconstrained \(\ell _2\)-\(\ell _p\) minimization. Math. Program. 143, 371–383 (2014)

Chen, X., Ng, M., Zhang, C.: Nonconvex \(\ell _p \) regularization and box constrained model for image restoration. IEEE Trans. Image Process. 21, 4709–4721 (2012)

Chen, X., Niu, L.F., Yuan, Y.X.: Optimality conditions and a smoothing trust region Newton method for nonLipschitz optimization. SIAM J. Optim. 23, 1528–1552 (2013)

Chen, X., Toint, Ph.L., Wang, H.: Complexity of partially-separable convexly-constrained optimization with non-Lipschitzian singularities. SIAM J. Optim. 29, 874–903 (2019)

Chen, X., Toint, Ph.L.: High-order evaluation complexity for convexly-constrained optimization with non-Lipschitzian group sparsity terms. Math. Program. 187, 47–78 (2020)

Chen, X., Xu, F., Ye, Y.: Lower bound theory of nonzero entries in solutions of \(\ell _2\)-\(\ell _p\) minimization. SIAM J. Imaging Sci. 32, 2832–2852 (2010)

Daubechies, I., Devore, R., Fornasier, M., Güntürk, C.S.: Iteratively reweighted least squares minimization for sparse recovery. Commun. Pure Appl. Math. 63, 1–38 (2010)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fan, J., Xue, L., Zou, H.: Strong oracle optimality of folded concave penalized estimation. Ann. Stat. 42, 819–849 (2014)

Ge, D., Jiang, X., Ye, Y.: A note on the complexity of \(l_p\) minimization. Math. Program. 21, 1721–1739 (2011)

Ge, D.D., He, R.H., He, S.M.: An improved algorithm for the \({L}_2\)-\({L}_p\) minimization problem. Math. Program. 166, 131–158 (2017)

Ghadimi, S., Lan, G., Zhang, H.: Mini-batch stochastic approximation methods for nonconvex stochastic composite optimization. Math. Program. 155, 267–305 (2016)

Lai, M.J., Wang, Y.: An unconstrained \(\ell _q\) minimization with \(0<q<1\) for sparse solution of under-determined linear systems. SIAM J. Optim. 21, 82–101 (2011)

Lai, M.J., Xu, Y., Yin, W.: Improved iteratively reweighted least squares for unconstrained smoothed \(\ell _q\) minimization. SIAM J. Numer. Anal. 51, 927–957 (2013)

Li, D.H., Sun, Z., Zhang, X.J.: A constrained optimization reformulation and a feasible descent direction method for \({L}_{{1}/{2}}\) regularization. Comput. Optim. Appl. 59, 263–284 (2014)

Liu, Y.F., Ma, S.Q., Dai, Y.H., Zhang, S.Z.: A smoothing SQP framework for a class of composite \({L}_q\) minimization over polyhedron. Math. Program. 158, 467–500 (2016)

Lu, Z.S.: Iterative reweighted minimization methods for \(\ell _p\) regularized unconstrained nonlinear programming. Math. Program. 147, 277–307 (2014)

Metel, M.R., Takeda, A.: Simple stochastic gradient methods for non-smooth non-convex regularized optimization. ICML, pages 4537–4545, (2019)

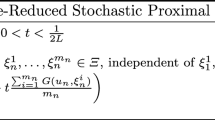

Pham, N.H., Nguyen, L.M., Phan, D.T., Tran-Dinh, Q.: Proxsarah: An efficient algorithmic framework for stochastic composite nonconvex optimization. J. Mach. Learn. Res. 21, 1–48 (2020)

Reddi, S.J., Sra, S., Póczos, B., Smola, A.: Proximal stochastic methods for nonsmooth non-convex finite-sum optimization. In: NIPS, (2016)

Rockafellar, R.T., Wets, R.J.B.: Variational Analysis, 3rd printing Springer Verlag, Heidelberg, Berlin, New York (2009)

Wang, X., Wang, S.X., Zhang, H.: Inexact proximal stochastic gradient method for convex composite optimization. Comput. Optim. and Appl. 68, 579–618 (2017)

Wang, X., Zhang, H.: Inexact proximal stochastic second-order methods for nonconvex composite optimization. Optim. Method Softw. 35, 808–835 (2020)

Wang, X.Y., Wang, X., Yuan, Y.: Stochastic proximal quasi-Newton methods for non-convex composite optimization. Optim. Method Softw. 34, 922–948 (2019)

Wen, Z.W., Yin, W.T., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage, subspace optimization, and continuation. SIAM J. Sci. Comput. 32, 1832–1857 (2010)

Wright, J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57, 2479–2493 (2019)

Xiao, L., Zhang, T.: A proximal stochastic gradient method with progressive variance reduction. SIAM J. Optim. 24, 2057–2075 (2014)

Xu, Y., Jin, R., Yang, T.: Stochastic proximal gradient methods for non-smooth non-convex regularized problems. arXiv:1902.07672v3 [math.OC], 30 Mar (2019)

Xu, Y., Qi, Q., Lin, Q., Jin, R., Yang, T.: Stochastic optimization for DC functions and non-smooth non-convex regularizers with non-asymptotic convergence. ICML, pages 6942–6951, (2019)

Xu, Z.B., Chang, X.Y., Xu, F.M., Zhang, H.: \({L}_{{1}/{2}}\) regularization: A thresholding representation theory and a fast solver. IEEE T. Neur. Net. Lear. 23, 1013–1027 (2012)

Yuan, G.X., Ho, C.H., Lin, C.J.: Recent advances of large-scale linear classification. Proceedings of the IEEE 100, 2584–2603 (2012)

Zhang, C., Chen, X.: A smoothing active set method for linearly constrained non-Lipschitz nonconvex optimizaiton. SIAM J. Optim 30, 1–30 (2020)

Zhang, S., Xin, J.: Minimization of transformed \({L}_1\) penalty: theory, difference of convex function algorithm, and robust application in compressed sensing. Math. Program. 169, 307–336 (2018)

Acknowledgements

We would like to thank two anonymous reviewers for their valuable comments and suggestions which helped to improve the paper.

Funding

This work was supported by the Chinese NSF Grant (nos.11771157, 11731013, 11871453, 11961011 and 11971106), the Ministry of Education, Humanities and Social Sciences project (no.17JYJAZH011), Young Elite Scientists Sponsorship Program by CAST (2018QNRC001), Youth Innovation Promotion Association, CAS, by special projects in key fields of Guangdong Universities (2021ZDZX1054), the Natural Science Foundation of Guangdong Province (2022A1515010567), the Major Key Project of PCL (No. PCL2022A05) and PolyU153001/18P, and the University Research Facility in Big Data Analytics of the Hong Kong Polytechnic University.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proof of Lemma 2.2

Proof of Lemma 2.2

Notice that h is level bounded. The solution set of (2.2) is nonempty and bounded.

Firstly, we assume \(\alpha < {\bar{\omega }}\). For any \(t^*\in {\mathcal {T}}^*_\alpha \), suppose that \(t^*\ne 0\), then \(t^*>0\). By the optimality condition for (2.2), we have

Then it derives

which yields \(t^* \ge (\frac{2\lambda (1-p)}{L})^{\frac{1}{2-p}}\). Then it implies from Lemma 2.1 that

However, it contradict the assumption \(\alpha <{\bar{\omega }}\). Hence, \({\mathcal {T}}^*_\alpha =\{0\}\).

Secondly, we assume \(\alpha ={\bar{\omega }}\). By easy computations we have

And for any \(t>0\), the following equalities hold:

Recall that by Lemma 2.1, \(h'(t)\) is monotonically decreasing in \((0,\varrho \,]\) and monotonically increasing in \([\,\varrho ,+\infty )\) with \(\varrho =(\frac{\lambda p(1-p)}{L})^{\frac{1}{2-p}}\). Then we obtain

Thus \(h'(t)\) has two roots:

\(h'(t)>0\) in \((0,t_1) \cup (t_2,+\infty )\) and \(h'(t)<0\) in \((t_1,t_2)\). Thus h(t) is monotonically increasing in \((0,t_1]\) and \([t_2,+\infty )\) and is monotonically decreasing in \([t_1,t_2]\). Then by (A.1) we obtain

It thus follows that

which together with (A.1) indicates that \({\mathcal {T}}^*_\alpha =\{0, t_2\}\) when \(\alpha ={\bar{\omega }}\).

At last we assume \(\alpha > {\bar{\omega }}\). Let \(\bar{t}=\left( \frac{2\lambda (1-p)}{L}\right) ^{\frac{1}{2-p}}\). Then for any \(t^*\in {\mathcal {T}}^*_\alpha \), it yields from \(h(t^*)\le h(\bar{t})\) that

Then \(t^*\ne 0\) thus \(t^*>0\). Then the optimality of \(t^*\) indicates

which together with \(h(t^*)< h(0)\) implies

Recall that by Lemma 2.1, \(h_1\) is monotonically increasing on \([(\frac{\lambda p(1-p)}{L})^{\frac{1}{2-p}}, +\infty )\). Hence, \(t^*\) satisfying (A.2) and (A.3) is unique. The proof is completed.

Rights and permissions

About this article

Cite this article

Cheng, W., Wang, X. & Chen, X. An Interior Stochastic Gradient Method for a Class of Non-Lipschitz Optimization Problems. J Sci Comput 92, 42 (2022). https://doi.org/10.1007/s10915-022-01897-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-022-01897-6

Keywords

- Sparse optimization

- \(\ell _p\) regularizer

- Bound constraints

- Stochastic gradient

- Computational complexity