Abstract

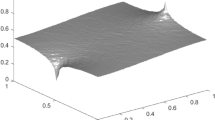

Graph total variation methods have proved to be powerful tools for classifying unstructured data. Existing algorithms, such as the MBO (Merriman, Bence, and Osher) algorithm, solve such problems very efficiently with the help of the Nyström approximation. However, because of this approximation, their rigorous theoretical convergence remains unclear. In this paper, we aim to design a fast operator-splitting algorithm with a low memory footprint and a rigorous convergence guarantee for two-phase unsupervised classification. We first present a general smooth graph total variation model consisting of four terms: a Lipschitz-differentiable regularization term, a general double-well potential term, a balanced term, and a boundedness constraint. We then design proximal gradient methods, with and without acceleration, whose computational cost is low because the proximal operators admit closed-form solutions. The convergence analysis is carried out under quite mild conditions. We conduct numerical experiments on two data sets, the synthetic two-moons and MNIST, to evaluate the performance and convergence of the proposed algorithms. The results demonstrate the convergence and robustness of the proposed algorithms.
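To make the structure described in the abstract concrete, the following is a minimal sketch of a proximal gradient iteration for a two-phase graph model. It is not the authors' implementation: the specific energy (graph Dirichlet term, quartic double well, squared-mean balance penalty), the parameters `eps` and `mu`, the toy two-clique graph, and all function names are assumptions chosen for illustration. The only feature taken from the abstract is the overall shape: a smooth objective plus a boundedness constraint whose proximal operator has a closed form (here, clipping to [-1, 1]). Acceleration and the Nyström approximation are not shown.

```python
import numpy as np

def graph_laplacian(W):
    """Unnormalized graph Laplacian L = D - W for a symmetric weight matrix W."""
    return np.diag(W.sum(axis=1)) - W

def energy(u, L, eps, mu):
    """Assumed smooth objective: Dirichlet term + double well + balance penalty."""
    dirichlet = 0.5 * u @ L @ u
    double_well = np.sum((u**2 - 1.0) ** 2) / (4.0 * eps)
    balance = 0.5 * mu * np.mean(u) ** 2
    return dirichlet + double_well + balance

def gradient(u, L, eps, mu):
    """Gradient of the assumed objective above."""
    return L @ u + (u**3 - u) / eps + mu * np.mean(u) / u.size

def proximal_gradient(u0, L, eps=1.0, mu=1.0, n_iter=500):
    """Plain proximal gradient with the box constraint u in [-1, 1]^n.

    The prox of the box indicator is a projection, i.e. np.clip.
    The step size is 1 / lip, where lip bounds the gradient's Lipschitz
    constant on the box: lambda_max(L) + 2 / eps + mu / n.
    """
    lip = np.linalg.eigvalsh(L).max() + 2.0 / eps + mu / u0.size
    tau = 1.0 / lip
    u = np.clip(u0, -1.0, 1.0)
    history = [energy(u, L, eps, mu)]
    for _ in range(n_iter):
        # Forward (gradient) step followed by backward (projection) step.
        u = np.clip(u - tau * gradient(u, L, eps, mu), -1.0, 1.0)
        history.append(energy(u, L, eps, mu))
    return u, np.array(history)

# Hypothetical toy graph: two 5-node cliques joined by one weak edge.
n = 10
W = np.zeros((n, n))
W[:5, :5] = 1.0
W[5:, 5:] = 1.0
np.fill_diagonal(W, 0.0)
W[4, 5] = W[5, 4] = 0.05

u0 = np.linspace(-0.1, 0.1, n)          # small, sign-separated initial guess
u, history = proximal_gradient(u0, graph_laplacian(W))
labels = (u > 0).astype(int)            # threshold the phase field into two classes
```

With a step size of `1 / lip`, the recorded energy values should be non-increasing, which is the kind of descent behaviour a convergence analysis of such schemes typically builds on. In this sketch the two classes are read off by thresholding `u` at zero.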

Data availability

Enquiries about data availability should be directed to the authors.

Notes

More details on the KL (Kurdyka–Łojasiewicz) function, including properties and examples, can be found in Sect. 2.4 and the appendix of [1].

The MNIST data set can be obtained from http://yann.lecun.com/exdb/mnist/.

References

Attouch, H., Bolte, J., Svaiter, B.F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss-Seidel methods. Math. Program. 137(1–2), 91–129 (2013)

Balashov, M.V.: The gradient projection algorithm for smooth sets and functions in nonconvex case. Set-Valued Var. Anal. 29, 341–360 (2021)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Bertozzi, A.L., Flenner, A.: Diffuse interface models on graphs for classification of high dimensional data. Multiscale Model. Simul. 10(3), 1090–1118 (2012)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146, 459–494 (2014)

Bosch, J., Klamt, S., Stoll, M.: Generalizing diffuse interface methods on graphs: nonsmooth potentials and hypergraphs. SIAM J. Appl. Math. 78(3), 1350–1377 (2018)

Boyd, Z.M., Bae, E., Tai, X., Bertozzi, A.L.: Simplified energy landscape for modularity using total variation. SIAM J. Appl. Math. 78(5), 2439–2464 (2018)

Brandes, U., Delling, D., Gaertler, M., Gorke, R., Hoefer, M., Nikoloski, Z., Wagner, D.: On modularity clustering. IEEE Trans. Knowl. Data Eng. 20(2), 172–188 (2008)

Bühler, T., Hein, M.: Spectral clustering based on the graph p-Laplacian. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 81–88. Association for Computing Machinery, New York, NY, USA (2009)

Chang, H., Glowinski, R., Marchesini, S., Tai, X.C., Wang, Y., Zeng, T.: Overlapping domain decomposition methods for ptychographic imaging. SIAM J. Sci. Comput. 43(3), B570–B597 (2021)

Chang, H., Marchesini, S.: A general framework for denoising phaseless diffraction measurements. CoRR arXiv:1611.01417 (2016)

Chung, F.R.K.: Spectral graph theory. In: CBMS Regional Conference Series in Mathematics (1997)

Dong, B.: Sparse representation on graphs by tight wavelet frames and applications. Appl. Comput. Harmon. Anal. 42(3), 452–479 (2017)

Elmoataz, A., Lezoray, O., Bougleux, S.: Nonlocal discrete regularization on weighted graphs: A framework for image and manifold processing. IEEE Trans. Image Process. 17(7), 1047–1060 (2008)

Feng, S., Huang, W., Song, L., Ying, S., Zeng, T.: Proximal gradient method for nonconvex and nonsmooth optimization on Hadamard manifolds. Optim. Lett. 6, 1862–4480 (2021)

Gennip, Y., Bertozzi, A.L.: \(\Gamma \)-convergence of graph Ginzburg-Landau functionals. Adv. Differ. Equ. 17(11), 1115–1180 (2012)

Glowinski, R., Osher, S.J., Yin, W.: Splitting Methods in Communication, Imaging, Science, and Engineering. Springer, Cham (2016)

Glowinski, R., Pan, T.W., Tai, X.C.: Some Facts About Operator-Splitting and Alternating Direction Methods, pp. 19–94 (2016)

Goldstein, T., Studer, C., Baraniuk, R.G.: A field guide to forward-backward splitting with a FASTA implementation. CoRR arXiv:1411.3406 (2014)

Hu, H., Laurent, T., Porter, M.A., Bertozzi, A.L.: A method based on total variation for network modularity optimization using the MBO scheme. SIAM J. Appl. Math. 73(6), 2224–2246 (2013)

Huang, Y., Shen, Z., Cai, F., Li, T., Lv, F.: Adaptive graph-based generalized regression model for unsupervised feature selection. Knowl.-Based Syst. 227, 107156 (2021)

Jia, F., Tai, X.C., Liu, J.: Nonlocal regularized CNN for image segmentation. Inverse Probl. Imaging 14(5), 891–911 (2020)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2323 (1998)

Li, F., Ng, M.K.: Image colorization by using graph bi-Laplacian. Adv. Comput. Math. 45(3), 1521–1549 (2019)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. In: C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, R. Garnett (eds.) Advances in Neural Information Processing Systems, vol. 28, pp. 379–387. Curran Associates, Inc. (2015)

Li, J., Zhao, J., Wang, Q.: Energy and entropy preserving numerical approximations of thermodynamically consistent crystal growth models. J. Comput. Phys. 382, 202–220 (2019)

Liu, J., Zheng, X.: A block nonlocal TV method for image restoration. SIAM J. Imaging Sci. 10(2), 920–941 (2017)

Luxburg, U.: A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007)

Merkurjev, E., Kosti, T., Bertozzi, A.L.: An MBO scheme on graphs for classification and image processing. SIAM J. Imaging Sci. 6(4), 1903–1930 (2013)

Merriman, B., Bence, J.K., Osher, S.J.: Diffusion-generated motion by mean curvature for filaments. In: J. Taylor (ed.) Proceedings of the Computational Crystal Growers Workshop, pp. 73–83. AMS (1992)

Muehlebach, M., Jordan, M.: A dynamical systems perspective on Nesterov acceleration. In: K. Chaudhuri, R. Salakhutdinov (eds.) Proceedings of the 36th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 97, pp. 4656–4662. PMLR (2019)

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(1/k^2)\). Proc. USSR Academy Sci. 269, 543–547 (1983)

Ng, A.Y., Jordan, M.I., Weiss, Y.: On spectral clustering: Analysis and an algorithm. In: Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic, NIPS’01, pp. 849–856. MIT Press, Cambridge (2001)

O’Donoghue, B., Candès, E.J.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15(3), 715–732 (2015)

Peressini, A.L., Sullivan, F.E., Uhl, J.J.: The Mathematics of Nonlinear Programming. Springer, New York (1988)

Qin, J., Lee, H., Chi, J.T., Drumetz, L., Chanussot, J., Lou, Y., Bertozzi, A.L.: Blind hyperspectral unmixing based on graph total variation regularization. IEEE Trans. Geosci. Remote Sensing 59(4), 3338–3351 (2021)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1–4), 259–268 (1992)

Shang, R., Wang, L., Shang, F., Jiao, L., Li, Y.: Dual space latent representation learning for unsupervised feature selection. Pattern Recognit. 114, 107873 (2021)

Shang, R., Zhang, X., Feng, J., Li, Y., Jiao, L.: Sparse and low-dimensional representation with maximum entropy adaptive graph for feature selection. Neurocomputing 485, 57–73 (2022)

Shen, J., Xu, J., Yang, J.: A new class of efficient and robust energy stable schemes for gradient flows. SIAM Rev. 61(3), 474–506 (2019)

Szlam, A., Bresson, X.: Total variation, Cheeger cuts. In: Proceedings of the 27th International Conference on Machine Learning, pp. 1039–1046 (2010)

Tang, C., Bian, M., Liu, X., Li, M., Zhou, H., Wang, P., Yin, H.: Unsupervised feature selection via latent representation learning and manifold regularization. Neural Netw. 117, 163–178 (2019)

Wen, B., Chen, X., Pong, T.K.: Linear convergence of proximal gradient algorithm with extrapolation for a class of nonconvex nonsmooth minimization problems. SIAM J. Optim. 27(1), 124–145 (2017)

Wu, T., Li, W., Jia, S., Dong, Y., Zeng, T.: Deep multi-level wavelet-CNN denoiser prior for restoring blurred image with Cauchy noise. IEEE Signal Process. Lett. 27, 1635–1639 (2020). https://doi.org/10.1109/LSP.2020.3023299

Yang, X.F., Zhao, J., Wang, Q.: Numerical approximations for the molecular beam epitaxial growth model based on the invariant energy quadratization method. J. Comput. Phys. 333, 104–127 (2017)

Yao, Q., Kwok, J.T., Gao, F., Chen, W., Liu, T.: Efficient inexact proximal gradient algorithm for nonconvex problems. In: Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, IJCAI-17, pp. 3308–3314 (2017)

Yin, K., Tai, X.C.: An effective region force for some variational models for learning and clustering. J. Sci. Comput. 74, 1–22 (2018)

Zelnik-Manor, L., Perona, P.: Self-tuning spectral clustering, pp. 1601–1608 (2004)

Zhou, D., Schölkopf, B.: Regularization on discrete spaces. In: Kropatsch, W.G., Sablatnig, R., Hanbury, A. (eds.) Pattern Recognition, pp. 361–368. Springer, Berlin (2005)

Zhu, W., Chayes, V., Tiard, A., Sanchez, S., Dahlberg, D., Bertozzi, A.L., Osher, S., Zosso, D., Kuang, D.: Unsupervised classification in hyperspectral imagery with nonlocal total variation and primal-dual hybrid gradient algorithm. IEEE Trans. Geosci. Remote Sensing 55(5), 2786–2798 (2017)

Funding

This work was partially supported by the National Natural Science Foundation of China under Awards 11871372 and 11501413, and by the Natural Science Foundation of Tianjin under Award 18JCYBJC16600. BS acknowledges support from the Postgraduate Innovation Research Project of Tianjin under Award 2020YJSS141.

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Sun, B., Chang, H. Proximal Gradient Methods for General Smooth Graph Total Variation Model in Unsupervised Learning. J Sci Comput 93, 2 (2022). https://doi.org/10.1007/s10915-022-01954-0

DOI: https://doi.org/10.1007/s10915-022-01954-0