Abstract

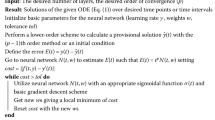

In this paper, we investigate the residual neural network (ResNet) method for solving ordinary differential equations. We verify that the accuracy order of the ResNet ODE solver matches the accuracy order of the data. The forward Euler, Runge–Kutta2 and Runge–Kutta4 finite difference schemes are adopted to generate three learning data sets, which are used to train three ResNet ODE solvers independently. The well-trained ResNet solvers attain one-step errors of 2nd, 3rd and 5th order, respectively, and behave just like their finite difference counterparts for linear and nonlinear ODEs with regular solutions. In particular, we carry out (1) an architecture study, in terms of the number of hidden layers and the number of neurons per layer, to obtain the optimal network structure; (2) a target study to verify that the ResNet solver is as accurate as its finite difference counterpart; and (3) solution trajectory simulations. A sequence of numerical examples is presented to demonstrate the accuracy and capability of the ResNet solver.
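The training pipeline described above (one-step targets produced by a finite difference scheme, then a residual network fitted to those targets) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the scalar test ODE \(dx/dt=-x\), the sampling interval \([-2,2]\), the single tanh hidden layer of width 16, full-batch gradient descent, and the names `make_dataset` and `ResNetStep` are all hypothetical choices. Only the forward Euler data set is built here; the Runge–Kutta2 and Runge–Kutta4 data sets would come from passing a different `step` function.

```python
import math
import random


def forward_euler(F, x, t, dt):
    """One-step target y_j^2 = x_j(t0) + dt * F(x_j(t0), t0)."""
    return x + dt * F(x, t)


def make_dataset(F, step, n, dt, t0=0.0, lo=-2.0, hi=2.0, seed=0):
    """Sample n initial states x_j(t0) and pair each with its one-step target y_j^2."""
    rng = random.Random(seed)
    xs = [rng.uniform(lo, hi) for _ in range(n)]
    ys = [step(F, x, t0, dt) for x in xs]
    return xs, ys


class ResNetStep:
    """Hypothetical scalar ResNet: y = x + sum_i w2_i * tanh(w1_i * x + b1_i) + b2."""

    def __init__(self, width=16, seed=1):
        rng = random.Random(seed)
        self.w1 = [rng.gauss(0.0, 1.0) for _ in range(width)]
        self.b1 = [rng.gauss(0.0, 1.0) for _ in range(width)]
        self.w2 = [rng.gauss(0.0, 0.1) for _ in range(width)]  # small output weights
        self.b2 = 0.0

    def hidden(self, x):
        return [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]

    def __call__(self, x):
        # Identity skip connection plus a learned increment, like x + dt * Phi(x).
        return x + sum(w * h for w, h in zip(self.w2, self.hidden(x))) + self.b2

    def loss(self, xs, ys):
        """Mean squared error between network outputs and the one-step targets."""
        return sum((self(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

    def train(self, xs, ys, lr=0.01, epochs=300):
        """Full-batch gradient descent with hand-derived gradients of the MSE."""
        n, width = len(xs), len(self.w1)
        for _ in range(epochs):
            g_w1, g_b1, g_w2 = [0.0] * width, [0.0] * width, [0.0] * width
            g_b2 = 0.0
            for x, y in zip(xs, ys):
                h = self.hidden(x)
                out = x + sum(w * hk for w, hk in zip(self.w2, h)) + self.b2
                c = 2.0 * (out - y) / n  # d(loss)/d(output) for this sample
                g_b2 += c
                for i in range(width):
                    g_w2[i] += c * h[i]
                    d = c * self.w2[i] * (1.0 - h[i] ** 2)  # back through tanh
                    g_b1[i] += d
                    g_w1[i] += d * x
            for i in range(width):
                self.w1[i] -= lr * g_w1[i]
                self.b1[i] -= lr * g_b1[i]
                self.w2[i] -= lr * g_w2[i]
            self.b2 -= lr * g_b2


def decay(x, t):
    return -x  # illustrative linear ODE dx/dt = -x


xs, ys = make_dataset(decay, forward_euler, n=64, dt=0.1)
model = ResNetStep(width=16)
before = model.loss(xs, ys)
model.train(xs, ys)
after = model.loss(xs, ys)
print(f"training MSE: {before:.3e} -> {after:.3e}")
```

The skip connection `x + ...` is what makes the network a residual map: the hidden layer only has to learn the increment \({\textbf {y}}_j^2-{\textbf {x}}_j(t_0)\), which mirrors the \(\Delta \Phi \) term of a one-step method.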

Data Availability

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Funding

Changxin Qiu: Research work of this author is supported by the National Natural Science Foundation of China under Grant No. 12201327 and the Ningbo Natural Science Foundation under Grant No. 2022J087. A. Bendickson and Joshua Kalyanapu: Research work of these authors is partially supported by National Science Foundation grant DMS-1457443. Jue Yan: Research work of this author is supported by National Science Foundation grant DMS-1620335 and Simons Foundation grant 637716.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

In this appendix, we revisit the one-step error between the target and the exact solution by means of interpolation polynomial approximation. For the one-step error, orders of \(O(\Delta ^2)\), \(O(\Delta ^3)\) and \(O(\Delta ^5)\) are obtained for the first-order forward Euler method (3.2), the second-order Runge–Kutta2 method (3.3) and the fourth-order Runge–Kutta4 method (3.4), respectively, with \(\Delta \) denoting the step size.
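These orders can be checked numerically on a problem with a closed-form solution. The sketch below is illustrative and rests on assumptions: (3.2) is taken to be \({\textbf {y}}_j^2={\textbf {y}}_j^1+\Delta {\textbf {F}}({\textbf {y}}_j^1,t_0)\); (3.3) is taken to combine \(k_1\) and \(k_2={\textbf {F}}({\textbf {y}}_j^1+\Delta k_1,t_0+\Delta )\) by the trapezoidal rule; and (3.4) is assumed to be Kutta's three-eighths Runge–Kutta method, inferred from the stage times \(t_0\), \(t_0+\Delta /3\), \(t_0+2\Delta /3\), \(t_0+\Delta \) used in Case III. The test equation \(dx/dt=-x\) and the function names are hypothetical choices.

```python
import math


def forward_euler(F, y, t, dt):
    """Constant (left-endpoint) quadrature: y^2 = y^1 + dt * F(y^1, t0)."""
    return y + dt * F(y, t)


def rk2_trapezoidal(F, y, t, dt):
    """Two-stage RK with k2 = F(y + dt*k1, t + dt), combined by the trapezoidal rule."""
    k1 = F(y, t)
    k2 = F(y + dt * k1, t + dt)
    return y + dt / 2 * (k1 + k2)


def rk4_three_eighths(F, y, t, dt):
    """Kutta's 3/8-rule RK4 with stages at t, t + dt/3, t + 2dt/3 and t + dt."""
    k1 = F(y, t)
    k2 = F(y + dt * k1 / 3, t + dt / 3)
    k3 = F(y + dt * (k2 - k1 / 3), t + 2 * dt / 3)
    k4 = F(y + dt * (k1 - k2 + k3), t + dt)
    return y + dt / 8 * (k1 + 3 * k2 + 3 * k3 + k4)


def decay(x, t):
    return -x  # dx/dt = -x, exact one-step solution x0 * exp(-dt)


def one_step_error(step, dt, x0=1.0, t0=0.0):
    """|y^2 - x(t0 + dt)| starting from the exact value y^1 = x(t0) = x0."""
    return abs(step(decay, x0, t0, dt) - x0 * math.exp(-dt))


def observed_orders(step, dts):
    """log2 of successive error ratios as dt is halved."""
    errs = [one_step_error(step, dt) for dt in dts]
    return [math.log2(e_coarse / e_fine) for e_coarse, e_fine in zip(errs, errs[1:])]


dts = [0.1, 0.05, 0.025, 0.0125]
for name, step in [("forward Euler", forward_euler),
                   ("RK2", rk2_trapezoidal),
                   ("RK4 (3/8 rule)", rk4_three_eighths)]:
    print(name, [round(p, 3) for p in observed_orders(step, dts)])
```

Halving \(\Delta \) should divide a one-step error of order \(p+1\) by about \(2^{p+1}\), so the printed estimates should approach 2, 3 and 5. For this linear problem the leading error coefficients are \(1/2\), \(1/6\) and \(1/120\), so none of them vanishes and the asymptotic orders are visible.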

Case I: \({\textbf {y}}_j^2\) obtained from the forward Euler method (3.2)

Given \({\textbf {y}}_j^1={\textbf {x}}_j(t_0)\) and subtracting the exact solution \({\textbf {x}}_j(t_0+\Delta )\) of (3.1) from \({\textbf {y}}_j^2\) of the forward Euler method (3.2), we have

Here \(\frac{d}{dt}{\textbf {F}}({\textbf {x}}(t),t)=\frac{\partial {\textbf {F}}}{\partial {\textbf {x}}}{\textbf {F}}+ \frac{\partial {\textbf {F}}}{\partial t}\) denotes the total derivative with respect to t, where \(\frac{\partial {\textbf {F}}}{\partial {\textbf {x}}}\) is the Jacobian matrix of the vector function \({\textbf {F}}\) with respect to \({\textbf {x}}(t)\) and \(\frac{d {\textbf {x}}}{dt}={\textbf {F}}\). The forward Euler method can be viewed as a constant (left-endpoint) quadrature rule approximating the integral form of the ODE system (3.1), and the weighted mean value theorem is applied to estimate the error term.
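For reference, the forward Euler error expansion can be written out as follows. This is a sketch that assumes (3.1) is \(\frac{d{\textbf {x}}}{dt}={\textbf {F}}({\textbf {x}},t)\) and (3.2) is \({\textbf {y}}_j^2={\textbf {y}}_j^1+\Delta {\textbf {F}}({\textbf {y}}_j^1,t_0)\). The mean value theorems are applied componentwise, so the intermediate points \(\eta _t\) and \(\xi \in (t_0,t_0+\Delta )\) may differ from one component to another.

```latex
\begin{aligned}
{\textbf {y}}_j^2-{\textbf {x}}_j(t_0+\Delta )
&=\Delta \,{\textbf {F}}({\textbf {x}}_j(t_0),t_0)-\int _{t_0}^{t_0+\Delta }{\textbf {F}}({\textbf {x}}_j(t),t)\,dt\\
&=-\int _{t_0}^{t_0+\Delta }\big [{\textbf {F}}({\textbf {x}}_j(t),t)-{\textbf {F}}({\textbf {x}}_j(t_0),t_0)\big ]\,dt\\
&=-\int _{t_0}^{t_0+\Delta }(t-t_0)\,\frac{d}{dt}{\textbf {F}}({\textbf {x}}_j(\eta _t),\eta _t)\,dt\\
&=-\frac{\Delta ^2}{2}\,\frac{d}{dt}{\textbf {F}}({\textbf {x}}_j(\xi ),\xi )=O(\Delta ^2).
\end{aligned}
```

The last step uses the nonnegative weight \(t-t_0\), whose integral over \([t_0,t_0+\Delta ]\) is \(\Delta ^2/2\).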

Case II: \({\textbf {y}}_j^2\) obtained from the second-order Runge–Kutta method (3.3)

Again we have \({\textbf {y}}_j^1={\textbf {x}}_j(t_0)\). Subtracting \({\textbf {x}}_j(t_0+\Delta )\) of (3.1) from \({\textbf {y}}_j^2\) of the second-order Runge–Kutta method (3.3), we have

where \(k_1={\textbf {F}}({\textbf {y}}_j^1,t_0)\), \(k_2={\textbf {F}}({\textbf {y}}_j^1+\Delta k_1, t_0+\Delta )\) and \(\widetilde{k_2}={\textbf {F}}({\textbf {x}}_j(t_0+\Delta ), t_0+\Delta )\). Using the \(O(\Delta )\) local truncation error of the forward Euler method approximating \({\textbf {x}}_j(t_0+\Delta )\) together with the Lipschitz continuity of \({\textbf {F}}\) in the dynamical system, we have

Here C represents a generic constant. The error from the two-point quadrature rule can be estimated as

Combining the above arguments, we have

Here \({\textbf {G}}_1(t)\) denotes the linear interpolation polynomial that interpolates \({\textbf {F}}({\textbf {x}}(t),t)\) at \(t_0\) and \(t_0+\Delta \), and \(\frac{d^2}{dt^2}{\textbf {F}}({\textbf {x}}(\cdot ),\cdot )\) denotes the total second derivative of \({\textbf {F}}({\textbf {x}}(t),t)\) with respect to t. This two-stage Runge–Kutta method can be viewed as the trapezoidal quadrature rule approximating the integral.
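The decomposition behind the Case II estimate can be sketched as follows, assuming (3.3) reads \({\textbf {y}}_j^2={\textbf {y}}_j^1+\frac{\Delta }{2}(k_1+k_2)\), which is consistent with the trapezoidal interpretation above. The symbol \(L\) is introduced here for a Lipschitz constant of \({\textbf {F}}\) in \({\textbf {x}}\), and the trapezoidal remainder is taken componentwise.

```latex
\begin{aligned}
{\textbf {y}}_j^2-{\textbf {x}}_j(t_0+\Delta )
&=\frac{\Delta }{2}\big (k_2-\widetilde{k_2}\big )
+\Big [\frac{\Delta }{2}\big (k_1+\widetilde{k_2}\big )-\int _{t_0}^{t_0+\Delta }{\textbf {F}}({\textbf {x}}_j(t),t)\,dt\Big ],\\
\big \Vert k_2-\widetilde{k_2}\big \Vert
&\le L\,\big \Vert {\textbf {y}}_j^1+\Delta k_1-{\textbf {x}}_j(t_0+\Delta )\big \Vert \le C\Delta ^2,\\
\frac{\Delta }{2}\big (k_1+\widetilde{k_2}\big )-\int _{t_0}^{t_0+\Delta }{\textbf {F}}({\textbf {x}}_j(t),t)\,dt
&=\int _{t_0}^{t_0+\Delta }\big ({\textbf {G}}_1(t)-{\textbf {F}}({\textbf {x}}_j(t),t)\big )\,dt
=\frac{\Delta ^3}{12}\,\frac{d^2}{dt^2}{\textbf {F}}({\textbf {x}}_j(\xi ),\xi ),\\
\Longrightarrow \quad \big \Vert {\textbf {y}}_j^2-{\textbf {x}}_j(t_0+\Delta )\big \Vert &\le C\Delta ^3 .
\end{aligned}
```

The first term is \(O(\Delta ^3)\) because of the \(\Delta /2\) prefactor multiplying an \(O(\Delta ^2)\) stage error, and the second is the classical trapezoidal remainder.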

Case III: \({\textbf {y}}_j^2\) obtained from the fourth-order Runge–Kutta method (3.4)

With \({\textbf {y}}_j^1={\textbf {x}}_j(t_0)\), subtracting \({\textbf {x}}_j(t_0+\Delta )\) of (3.1) from \({\textbf {y}}_j^2\) of the fourth-order Runge–Kutta method (3.4), we have

The terms \(k_2, k_3\) and \(k_4\) come from the Runge–Kutta4 method (3.4), with \(k_1={\textbf {F}}({\textbf {y}}_j^1,t_0)={\textbf {F}}({\textbf {x}}_j(t_0),t_0)\). We introduce \(\widetilde{k_2}={\textbf {F}}({\textbf {x}}_j(t_0+\frac{\Delta }{3}),t_0+\frac{\Delta }{3})\), \(\widetilde{k_3}={\textbf {F}}({\textbf {x}}_j(t_0+\frac{2\Delta }{3}),t_0+\frac{2\Delta }{3})\) and \(\widetilde{k_4}={\textbf {F}}({\textbf {x}}_j(t_0+\Delta ),t_0+\Delta )\), the exact-solution values that \(k_2, k_3\) and \(k_4\) approximate, respectively. Rewriting the Runge–Kutta4 method (3.4) as a one-step method, \({\textbf {y}}_j^2={\textbf {y}}_j^1+\Delta \Phi \left( t_0,{\textbf {y}}_j^1,{\textbf {F}}({\textbf {y}}_j^1),\Delta \right) \), we have

Here C represents a generic constant. The error from the four-point quadrature rule can be estimated as

Again, C represents a generic constant. Summarizing the above arguments, we have

Here \({\textbf {G}}_3(t)\) denotes the cubic interpolation polynomial that interpolates \({\textbf {F}}({\textbf {x}}(t),t)\) at \(t_0\), \(t_0+\Delta /3\), \(t_0+2\Delta /3\) and \(t_0+\Delta \), and \(\frac{d^4{\textbf {F}}}{dt^4}\) denotes the total fourth derivative of \({\textbf {F}}({\textbf {x}}(t),t)\) with respect to t, evaluated at some intermediate point. This version of the four-stage Runge–Kutta method can be viewed as Simpson's three-eighths quadrature rule approximating the integral.
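The four-point estimate rests on the three-eighths rule integrating cubic polynomials exactly; with \(h=\Delta /3\), its classical remainder is \(-\frac{3}{80}h^5 f^{(4)}(\xi )=-\frac{\Delta ^5}{6480}f^{(4)}(\xi )\). The sketch below checks this in exact rational arithmetic on monomials. It covers only the quadrature part of the Case III argument (the stage errors \(k_i-\widetilde{k_i}\) are bounded separately above), and the helper names `three_eighths` and `exact_monomial_integral` are hypothetical.

```python
from fractions import Fraction


def three_eighths(f, a, dt):
    """Simpson's 3/8 rule on [a, a + dt] with nodes a, a + dt/3, a + 2dt/3, a + dt."""
    h = dt / 3
    return dt / 8 * (f(a) + 3 * f(a + h) + 3 * f(a + 2 * h) + f(a + dt))


def exact_monomial_integral(k, a, dt):
    """Exact integral of t**k over [a, a + dt]."""
    return ((a + dt) ** (k + 1) - a ** (k + 1)) / (k + 1)


a = Fraction(0)
for dt in (Fraction(1), Fraction(1, 2), Fraction(1, 4)):
    for k in range(5):
        # Quadrature error = exact integral minus 3/8-rule value, in exact arithmetic.
        err = exact_monomial_integral(k, a, dt) - three_eighths(lambda t: t ** k, a, dt)
        print(f"dt={dt}, t^{k}: error = {err}")
```

For \(t^4\), whose fourth derivative is 24, the remainder formula predicts \(-24\Delta ^5/6480=-\Delta ^5/270\), which is the value the loop reports, while the monomials \(1, t, t^2, t^3\) give zero error.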

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qiu, C., Bendickson, A., Kalyanapu, J. et al. Accuracy and Architecture Studies of Residual Neural Network Method for Ordinary Differential Equations. J Sci Comput 95, 50 (2023). https://doi.org/10.1007/s10915-023-02173-x

DOI: https://doi.org/10.1007/s10915-023-02173-x