Abstract

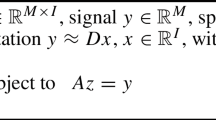

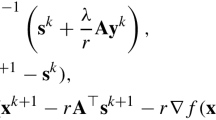

In this paper, we propose a nonconvex and nonsmooth image prior based on the hyperbolic tangent function and apply it as a regularization term for image restoration and reconstruction problems. Theoretically, we analyze the properties of the function and the minimizers of its associated proximal problem. Since the proximal problem has no closed-form solution, we propose a derivative-free Nelder-Mead simplex based selection algorithm to find the global minimizer. To reduce the computational cost, we only solve a small 1D problem and then use the 1D solution template as a look-up table to interpolate high-dimension data. Moreover, we consider a variational model based on the proposed image prior. Then we use the alternating direction method of multipliers algorithm on the nonconvex model to derive efficient numerical algorithms. Various experiments on image denoising, image deblurring, and image reconstruction demonstrate that the proposed nonconvex prior is competitive with the existing priors. In particular, it outperforms others in recovering piecewise constant images.

Similar content being viewed by others

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author upon request.

Notes

https://brainweb.bic.mni.mcgill.ca/

References

Bian, W., Chen, X.: Linearly constrained non-lipschitz optimization for image restoration. SIAM J. Imag. Sci. 8(4), 2294–2322 (2015)

Boyd, S., Parikh, N., Chu, E.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Now Publishers Inc (2011)

Chan, T.F., Esedoglu, S.: Aspects of total variation regularized L1 function approximation. SIAM J. Appl. Math. 65(5), 1817–1837 (2005)

Chen, X., Guo, L., Zhaosong, L., Ye, J.J.: An augmented lagrangian method for non-lipschitz nonconvex programming. SIAM J. Numer. Anal. 55(1), 168–193 (2017)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Frank, L.L.E., Friedman, J.H.: A statistical view of some chemometrics regression tools. Technometrics 35(2), 109–135 (1993)

Friedman, J.H.: Fast sparse regression and classification. Int. J. Forecast. 28(3), 722–738 (2012)

Geman, D., Yang, C.: Nonlinear image recovery with half-quadratic regularization. IEEE Trans. Image Process. 4(7), 932–946 (1995)

Goldstein, T., Osher, S.: The split Bregman method for L1-regularized problems. SIAM J. Imag. Sci. 2(2), 323–343 (2009)

Guoyong, G., Jiang, S., Yang, J.: A TVSCAD approach for image deblurring with impulsive noise. Inverse Prob. 33(12), 125008 (2017)

Gu, S., Xie, Q., Meng, D., Zuo, W., Feng, X., Zhang, L.: Weighted nuclear norm minimization and its applications to low level vision. Int. J. Comput. Vision 121(2), 183–208 (2017)

Guo, W., Lou, Y., Qin, J., Yan, M.: A novel regularization based on the error function for sparse recovery. J. Sci. Comput. 87(1), 1–22 (2021)

Huang, C., Li, Z., Liu, Y., Tingting, W., Zeng, T.: Quaternion-based weighted nuclear norm minimization for color image restoration. Pattern Recogn. 128, 108665 (2022)

Jorge, N., Stephen, J. W.: Numerical optimization (2006)

Karakuş, O., Mayo, P., Achim, A.: Convergence guarantees for non-convex optimisation with cauchy-based penalties. IEEE Trans. Signal Process. 68, 6159–6170 (2020)

Krishnan, D., Fergus, R.: Fast image deconvolution using hyper-Laplacian priors. Adv. Neural. Inf. Process. Syst. 22, 1033–1041 (2009)

Lagarias, J.C., Reeds, J.A., Wright, M.H., Wright, P.E.: Convergence behavior of the Nelder-Mead simplex algorithm in low dimensions. SIAM J. Optim. 9, 112–147 (1999)

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

Li, F., Shen, C., Fan, J., Shen, C.: Image restoration combining a total variational filter and a fourth-order filter. J. Vis. Commun. Image Represent. 18(4), 322–330 (2007)

Li, Z., Yan, M., Zeng, T., Zhang, G.: Phase retrieval from incomplete data via weighted nuclear norm minimization. Pattern Recognition, p. 108537 (2022)

Lou, Y., Yan, M.: Fast L1–L2 minimization via a proximal operator. J. Sci. Comput. 74(2), 767–785 (2018)

Lou, Y., Zeng, T., Osher, S., Xin, J.: A weighted difference of anisotropic and isotropic total variation model for image processing. SIAM J. Imag. Sci. 8(3), 1798–1823 (2015)

Nelder, J.A., Mead, R.: A simplex method for function minimization. Comput. J. 7(4), 308–313 (1965)

Nikolova, M.: Analytical bounds on the minimizers of (nonconvex) regularized least-squares. Inverse Probl. Imaging 1(4), 1–677 (2007)

Nikolova, M., Ng, M.K., Tam, C.-P.: Fast nonconvex nonsmooth minimization methods for image restoration and reconstruction. IEEE Trans. Image Process. 19(12), 3073–3088 (2010)

Nikolova, M., Ng, M.K., Zhang, S., Ching, W.-K.: Efficient reconstruction of piecewise constant images using nonsmooth nonconvex minimization. SIAM J. Imag. Sci. 1(1), 2–25 (2008)

Osher, S., Burger, M., Goldfarb, D., Jinjun, X., Yin, W.: An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 4(2), 460–489 (2005)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Selesnick, I., Lanza, A., Morigi, S., Sgallari, F.: Non-convex total variation regularization for convex denoising of signals. J. Math. Imaging Vis. 62(6), 825–841 (2020)

Tao, T., Vidakovic, B.: Almost everywhere behavior of general wavelet shrinkage operators. Appl. Comput. Harmon. Anal. 9(1), 72–82 (2000)

Wang, Y., Yang, J., Yin, W., Zhang, Y.: A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imag. Sci. 1(3), 248–272 (2008)

Woodworth, J., Chartrand, R.: Compressed sensing recovery via nonconvex shrinkage penalties. Inverse Prob. 32(7), 075004 (2016)

Tingting, W., Xiaoyu, G., Li, Z., Li, Z., Niu, J., Zeng, T.: Efficient boosted DC algorithm for nonconvex image restoration with rician noise. SIAM J. Imag. Sci. 15(2), 424–454 (2022)

Xu, L., Lu, C., Xu, Y., Jia, J.: Image smoothing via L0 gradient minimization. In: Proceedings of the 2011 SIGGRAPH Asia Conference, pp. 1–12 (2011)

You, J., Jiao, Y., Xiliang, L., Zeng, T.: A nonconvex model with minimax concave penalty for image restoration. J. Sci. Comput. 78(2), 1063–1086 (2019)

Zhang, C.-H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010)

Zhang, C.-H., Huang, J.: The sparsity and bias of the lasso selection in high-dimensional linear regression. Ann. Stat. 36(4), 1567–1594 (2008)

Zhang, S., Xin, J.: Minimization of transformed \(l_1\) penalty: theory, difference of convex function algorithm, and robust application in compressed sensing. Math. Program. 169(1), 307–336 (2018)

Zhang, T.: Analysis of multi-stage convex relaxation for sparse regularization. J. Mach. Learn. Res. , 11(3), (2010)

Zuo, W., Meng, D., Zhang, L., Feng, X., Zhang, D.: A generalized iterated shrinkage algorithm for non-convex sparse coding. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 217–224 (2013)

Funding

This work was supported by the following funds. – 1) Fang Li was supported by Natural Science Foundation of Shanghai (No. 22ZR1419500). – 2) Fang Li was supported by Science and Technology Commission of Shanghai Municipality (No. 22DZ2229014) – 3) Fang Li was supported by Natural Science Foundation of Chongqing, China (No. CSTB2023NSCQ-MSX0276). – 4) Xiao-Guang Lv was supported by National Natural Science Foundation of China (NSFC) (No. 12171205). – 5) Xiao-Guang Lv was supported by Qing Lan project of Jiangsu Province.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by F. Li and X-G. Lv. The first draft of the manuscript was written by F. Li and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendices

A. proof of Theorem 1

Proof

-

(a)

For \(z=0\), the conclusion holds since \(x_{opt}^{(z=0,\lambda ,a)} =0.\) For \(z\ne 0\), without loss of generality, we only consider the case of \(z>0\). If \(x>z>0\), since \(\phi '(x;a)>0\), we have

$$\begin{aligned} H'(x;z,\lambda ,a) =\lambda \phi '(x;a)+x-z>0. \end{aligned}$$(27)Then \(H(x;z,\lambda ,a)\) is monotonically increasing for \(x\in [z,+\infty ]\). If \(x<0\), we have \(\phi '(x;a)<0\) and \(H'(x;z,\lambda ,a)<0\). Then \(H(x;z,\lambda ,a)\) is monotonically decreasing for \(x\in [-\infty ,0]\). Note that \(H(x;z,\lambda ,a)\) is a continuous function when \(x\in [0,z]\). Therefore, the global minimizer of \(H(x;z,\lambda ,a)\) must be in [0, z].

-

(b)

By definition of H, we get \(H(x;z,\lambda ,a)=H(-x;-z,\lambda ,a)\). Since \(x_{opt}^{(z,\lambda ,a)}\) is the minimizer of \(H(x;z,\lambda ,a)\), we have

$$\begin{aligned} H(x_{opt}^{(z,\lambda ,a)};z,\lambda ,a)\le H(x;z,\lambda ,a), \forall x\in R. \end{aligned}$$Equivalently,

$$\begin{aligned} H(-x_{opt}^{(z,\lambda ,a)};-z,\lambda ,a)\le H(-x;-z,\lambda ,a), \forall -x\in R. \end{aligned}$$Hence

$$\begin{aligned} H(-x_{opt}^{(z,\lambda ,a)};-z,\lambda ,a)\le H(x;-z,\lambda ,a), \forall x\in R, \end{aligned}$$i.e., \(x_{opt}^{(-z,\lambda ,a)} = -x_{opt}^{(z,\lambda ,a)} \).

-

(c)

Denote

$$\begin{aligned} x_i\triangleq x_{opt}^{(z_i,\lambda ,a)} \in \arg \min _x H(x;z_i,\lambda ,a), i = 1,2. \end{aligned}$$Then we get

$$\begin{aligned} \frac{1}{2}(x_1-z_1)^2+\lambda \phi (x_1;a)\le & {} \frac{1}{2}(x_2-z_1)^2+\lambda \phi (x_2;a),\\ \frac{1}{2}(x_2-z_2)^2+\lambda \phi (x_2;a)\le & {} \frac{1}{2}(x_1-z_2)^2+\lambda \phi (x_1;a). \end{aligned}$$

Adding the two inequalities together yields

It is equivalent to

Equation (28) can be simplified as

Since \(z_1<z_2\), we get \(x_1\le x_2\), that means, \(x_{opt}^{(z_1,\lambda ,a)}\le x_{opt}^{(z_2,\lambda ,a)}\). \(\square \)

B. proof of Theorem 2

Proof

By Eqs. (7) and (10), we can rewrite \(H''(x;z,\lambda ,a)\) as a function of \(\theta =\tanh \left( \frac{|x|}{a}\right) \):

Note that when \(x\in (0,+\infty )\), \(\theta (x)\in (0,1)\) is monotonically increasing. Since

we have \(F'<0\) for \(\theta \in (0,\frac{1}{\sqrt{3}})\), while \(F'>0\) for \(\theta \in (\frac{1}{\sqrt{3}},+\infty )\). It implies that F is monotonically decreasing for \(\theta \in (0,\frac{1}{\sqrt{3}})\) and monotonically increasing for \(\theta \in (\frac{1}{\sqrt{3}},+\infty )\). Hence F attains its minima at \(\theta = \frac{1}{\sqrt{3}}\) with function value

-

(a)

If \(\lambda <\frac{3\sqrt{3}a^2}{4}\), by Eq. (31), we have \(H''(x;z,\lambda ,a)>0\) when \(x>0\). Since \(H(x;z,\lambda ,a)\) is continuous at \(x=0\) and \(H(x;z,\lambda ,a)\) is strictly convex in \((0,+\infty )\), together with Theorem 1(a), we deduce that \(H(x;z,\lambda ,a)\) has a unique (global) minimizer in [0, z]. Since \(H'(x=z;z,\lambda ,a)=\lambda \phi '(z;\lambda ,a)>0\), H is monotonically increasing in a local neighbourhood of \(x=z\). Therefore the global minimizer of H is in [0, z).

-

(b)

If \(\lambda =\frac{3\sqrt{3}a^2}{4}\), by Eq. (31), \(H''(x;z,\lambda ,a)\ge 0\) and

$$\begin{aligned} H''(x;z,\lambda ,a)=0\ \text{ iff } \ x=a\pm \text{ atanh }\left( \frac{1}{\sqrt{3}}\right) , \end{aligned}$$where \(\text{ atanh }\) is the inverse hyperbolic tangent function. It implies that \(H'(x;z,\lambda ,a)\) is strictly increasing in \((0,+\infty )\). Note that \(H'(x\rightarrow 0^+;z,\lambda ,a)=\frac{\lambda }{a}-z\) and \(H'(x=z;z,\lambda ,a)>0\). If \(\frac{\lambda }{a}-z\ge 0\), then H is monotonically increasing and such that the global minimizer is \(x=0\). Otherwise, \(H'(x\rightarrow 0^+;z,\lambda ,a)<0\). By the zero point theorem of continuous function H, we get that \(H'(x;z,\lambda ,a)=0\) has a unique solution in (0, z), which is the unique (global) minimizer.

-

(c)

Assume \(\lambda >\frac{3\sqrt{3}a^2}{4}\). By Eq. (29), the equation

$$\begin{aligned} F(\theta ) = 0 \end{aligned}$$(32)

is equivalent to the standard cubic equation

where \(p=-1, q=\frac{a^2}{2\lambda }\). This equation can be solved by using Cardano’s formula. Denote the discriminant as

Since \(\lambda >\frac{3\sqrt{3}a^2}{4}\), we have \(\Delta <0\) such that the equation Eq. (33) has three real roots:

where

Since \(\alpha _0 = \arccos \left( -\frac{3\sqrt{3}a^2}{4\lambda }\right) \in [\frac{\pi }{2},\pi ]\), then \(\alpha =\frac{1}{3}\alpha _0 \in [\frac{\pi }{6},\frac{\pi }{3}]\). It is straightforward to verify that \(\theta _1>0, \theta _2>0, \theta _3<0\) and \(\theta _1<\theta _2\). By definition, \(\theta = \tanh \left( \frac{|x|}{a}\right) >0\). So we need only consider the roots \(\theta _i,i=1,2\). Moreover, since

we deduce that \(\theta _1\in \left( 0,\frac{1}{\sqrt{3}}\right) \) and \(\theta _2\in \left( \frac{1}{\sqrt{3}},1\right) \). Using the monotonicity of \(\theta (x)\), we get that \(H''(x;z,\lambda ,a)=0\) has two different solutions

which are inflection points of \(H(x;z,\lambda ,a)\).

Note that finding the zero points of \(H'\) can be regarded as finding the intersection points of two curves:

It is obvious that when \(x>0\),

Hence the curve \(y_1(x)\) is above \(y=x\), and its asymptote is \(y=x\).

Assume \(y_1(x)\) and \(y_2(x)\) are tangent, then the tangent point satisfies the following two equations:

which are equivalent to

According to the second equation in Eq. (36), the tangent points are just the inflection points \({\tilde{x}}_i\) of H. Define two thresholding values:

Since \(y_1'(x)=H''(x;z,\lambda ,a)\) is positive in \((0,{\tilde{x}}_1)\cup ({\tilde{x}}_2,+\infty )\), and negative in \(({\tilde{x}}_1,{\tilde{x}}_2)\), it implies that \(y_1(x)\) is strictly increasing in \((0,{\tilde{x}}_1)\) and \(({\tilde{x}}_2,+\infty )\), and strictly decreasing in \(({\tilde{x}}_1,{\tilde{x}}_2)\). Hence we deduce that \(y_1(x)\) attains its local maximum at \({\tilde{x}}_1\) and its local minimum at \({\tilde{x}}_2\). Meanwhile, we have \(z_1> z_2\). Since \(y_1(x)\) is increasing in \((0,{\tilde{x}}_1)\), \(y_1(x\rightarrow 0^+)=\frac{\lambda }{a}<y_1({\tilde{x}}_1)=z_1\). Therefore, there are only two cases that can be occurred, i.e., \(\lambda /a\le z_2\) or \(\lambda /a> z_2\), as displayed in Fig. 23a and b, respectively. The horizontal lines in Fig. 23 denote \(y_2(x)=z\) with different z values, and the marked points with magenta boundaries are local minimizers. In the following, we analyze the critical and extreme points of \(H(x;z,\lambda ,a)\) in detail.

Case 1: Assume \(\lambda /a\le z_2\), then

-

\(z\le \lambda /a\): \(y_1(x)\) and \(y_2(x)\) have no intersection point, i.e., \(H'\) is always positive. Hence H attains its global minimum at \(x=0\). See the line \(y_2=\lambda /a\) and the gray dotted line below it in Fig. 23a, for instance.

-

\(\lambda /a<z<z_2\): \(y_1(x)\) and \(y_2(x)\) have one positive intersection point such that \(H'=0\), which is the unique local minimizer. See the gray dotted line between \(y_2=\lambda /a\) and \(y_2=z_2\) in Fig. 23a, for instance.

-

\(z=z_2\): \(y_1(x)\) and \(y_2(x)\) have two positive intersection points, and the smaller one is a local minimizer. See the line \(y_2=z_2\) in Fig. 23a.

-

\(z_2<z<z_1\): \(y_1(x)\) and \(y_2(x)\) have three positive intersection points. Using the monotonicity of \(y_1(x)\), we deduce that the middle one is a local maximizer and the other two are local minimizers. See the gray dotted line between \(y_2=z_2 \) and \(y_2=z_1\) in Fig. 23a, for example.

-

\(z=z_1\): \(y_1(x)\) and \(y_2(x)\) have two positive intersection points, and the bigger one is a local minimizer. See the line \(y_2=z_1\) in Fig. 23a.

-

\(z>z_1\): \(y_1(x)\) and \(y_2(x)\) have one positive intersection point, which is a local minimizer. Since \(y_1(x)\rightarrow x\) as \(x\rightarrow \infty \), the local minimizer of \(H(x;z,\lambda ,a)\)approximates z as x goes to infinity. See the gray dotted line above \(y_2=z_1\) in Fig. 23a, for example.

Case 2: Assume \(\lambda /a> z_2\), then

-

\(z\le z_2\): \(y_1(x)\) and \(y_2(x)\) have no intersection point, i.e., \(H'\) is always positive. Hence H attains its global minimum at \(x=0\). See the line \(y_2=z_2\) and the gray dotted line below it in Fig. 23b, for example.

-

\(z=z_2\): \(y_1(x)\) and \(y_2(x)\) have one positive intersection point, which is not an extreme point. Hence H attains its global minimum at \(x=0\). See the line \(y_2=z_2\) in Fig. 23b.

-

\(z_2<z\le \lambda /a\): \(y_1(x)\) and \(y_2(x)\) have two positive intersection point such that \(H'=0\). The smaller one is a local maximizer, and the smaller one is a local minimizer. \(x=0\) is also a candidate of extreme point. See the gray dotted line between \(y_2=z_2\) and \(y_2 = \lambda /a\) in Fig. 23b, for instance.

-

\(\lambda /a<z<z_1\): \(y_1(x)\) and \(y_2(x)\) have three positive intersection points. Using the monotonicity of \(y_1(x)\), we deduce that the middle one is a local maximizer and the other two are local minimizers. As an example, see the gray dotted line between \(y_2=z_1\) and \(y_2=\lambda /a\) in Fig. 23b.

-

\(z=z_1\): \(y_1(x)\) and \(y_2(x)\) have two positive intersection points, and the bigger one is a local minimizer. See the line \(y_2=z_1\) in Fig. 23b.

-

\(z>z_1\): \(y_1(x)\) and \(y_2(x)\) have one positive intersection point, which is a local minimizer. Since the asymptote of \(y_1(x)\) is \(y=x\), the local minimizer of \(H(x;z,\lambda ,a)\) approximates z as x goes to infinity. For instance, see the gray dotted line above \(y_2=z_1\) in Fig. 23b.

Based on the above analysis, we get that there are one or two local minimizers of H, which are in \([0,{\tilde{x}}_1)\) or \([{\tilde{x}}_2,z]\). Moreover, if there exist two local minimizers, then one is in \([0,{\tilde{x}}_1)\), and the other is in \([{\tilde{x}}_2,z]\). This completes the proof. \(\square \)

C. proof of Theorem 3

Proof

Assume \({\varvec{x}}^{*}= t{\varvec{z}}^0\) for some \(t>0\). For any \({\varvec{x}}\) satisfies \(\Vert {\varvec{x}}\Vert _{2}=\left\| {\varvec{x}}^{*}\right\| _{2}=t\), we have

Hence, the solution \({\varvec{x}}^{*}\) of Eq. (15) must be parallel with \({\varvec{z}}\), i.e., \({\varvec{x}}^{*} = t^*{\varvec{z}}^0, t^*\ge 0\).

Let \({\varvec{x}}=t{\varvec{z}}^0\). Then the data fitting term can be rewritten as

Hence problem Eq. (15) can be transformed into problem Eq. (16). This completes the proof. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, F., Lv, XG. A Nonconvex Nonsmooth Image Prior Based on the Hyperbolic Tangent Function. J Sci Comput 97, 55 (2023). https://doi.org/10.1007/s10915-023-02366-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-023-02366-4

Keywords

- Nonconvex nonsmooth function

- Hyperbolic tangent prior

- Regularization

- Image restoration

- Image reconstruction