Abstract

In this paper, we explore quadratures for the evaluation of \(B^T \phi (A) B\) where A is a symmetric positive-definite (s.p.d.) matrix in \(\mathbb {R}^{n \times n}\), B is a tall matrix in \(\mathbb {R}^{n \times p}\), and \(\phi (\cdot )\) represents a matrix function that is regular enough in the neighborhood of A’s spectrum, e.g., a Stieltjes or exponential function. These formulations, for example, commonly arise in the computation of multiple-input multiple-output (MIMO) transfer functions for diffusion PDEs. We propose an approximation scheme for \(B^T \phi (A) B\) leveraging the block Lanczos algorithm and its equivalent representation through Stieltjes matrix continued fractions. We extend the notion of Gauss–Radau quadrature to the block case, facilitating the derivation of easily computable error bounds. For problems stemming from the discretization of self-adjoint operators with a continuous spectrum, we obtain sharp estimates grounded in potential theory for Padé approximations and justify averaging algorithms at no added computational cost. The obtained results are illustrated on large-scale examples of 2D diffusion and 3D Maxwell’s equations and a graph from the SNAP repository. We also present promising experimental results on convergence acceleration via random enrichment of the initial block B.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Problem Statement

Let \(A=A^T\in {\mathbb {R}}^{n\times n}\) be a symmetric positive definite matrix and let B be a tall matrix with \(B\in {\mathbb {R}}^{n\times p}\), with \(p\ll n\). We refer to p as the block size. We want to approximate

via approximations of \(\phi (A,s)B\) on a block Krylov subspace

where blkspan means that the whole space range\(([B, AB, A^2 B, \dots A^{m-1} B])\) is generated. In the literature various functions \(\phi \) are considered to derive bounds for the scalar case, for instance strictly completely monotonic functions [3, 5] or functions with sufficient derivatives on the spectral interval of A [36]. Here, we mainly consider the simpler problem \(\phi (A,s)=(A+sI)^{-1}\) with \(s\in {\mathbb {R}}_+\), which can be generalized to Stieltjes functions. Our numerical experiments will also illustrate the applicability of our results for exponentials and resolvents with purely imaginary \(s\in \imath {\mathbb {R}}\), which is an important class of problems in the computation of transfer functions of linear time-invariant (LTI) systems with imaginary Laplace frequencies and time domain responses. To simplify notation, without loss of generality, we assume that the matrix B has orthonormal columns.

Gaussian-quadrature rules via Krylov subspace methods [24] have been extended to the block case [18, 40]. Gauss–Radau quadrature rules have also been extended to the block case via the block Krylov subspace [35], where at each block Krylov iteration, p Ritz values of A projected onto the block Krylov subspace are prescribed by a low-rank modification of this projection. These p Ritz values are distinct and larger than the maximum eigenvalue of A, which did not lead to an acceleration in convergence of the block Krylov method in [35].

In this paper, we take a different approach and extend Gauss–Radau quadrature to the block case by prescribing p identical Ritz values that are smaller than the minimum eigenvalue of A. When considering the quadratic form \(B^T \phi (A) B\), this allows us to obtain two-sided estimates of the solution at the cost of the standard block Lanczos algorithm in the Krylov block subspace \(\mathcal {K}_m(A,B)\). We further show that this choice leads to a (convergent) monotonically decreasing approximation and allows for computable and tight error bounds. This definition is consistent with the Gauss–Radau bound for the A-norm of the error in CG, which to date only has been developed for the scalar case [37]. The matrices we consider in this work arise as discretizations of PDE operators in unbounded domains whose smallest eigenvalue tends to zero, therefore, the p prescribed Ritz values are zero.

We focus on applications stemming from diffusive electromagnetic problems in an unbounded domain where we show that averaging Gauss–Radau and Gauss quadratures leads to a cost-free reduction in approximation error. This significantly improves the accuracy of the standard Krylov projection approximation, even though matrix-function approximation by Krylov projection is near optimal in global norms [1].

A vast body of literature exists for approximating \(\phi (A)B\) via block Krylov subspace methods for symmetric and non-symmetric matrices A [21]. Ideas based on Gauss–Radau quadratures have motivated the Radau–Lanczos method for the scalar (non-block) case [20], and the Radau–Arnoldi method for the non-symmetric block case [22]. Similarly to the Gauss–Radau definition in [35], the Radau–Arnoldi method in [22] modifies p Ritz values at each iteration to be larger than the largest eigenvalue of A. The considered functions \(\phi (\cdot )\) in these works are Riemann–Stieltjes integrals, and we note that the results obtained for the resolvent in this work carry over straightforwardly to such functions.

Monotonicity results for the ordinary Lanczos algorithm were obtained for the exponential function in [8]; see also similar results in [19]. Two-sided monotonic bounds for scalar Gauss and Gauss–Radau quadratures were first obtained in [36]. In this work we generalize these results to matrix-valued quadratures calculated via the block Lanczos algorithm. For instance, using pairs of Gauss and Gauss–Radau quadratures has been explored for functions of adjacency matrices in [3]. For the related conjugate gradient algorithm, Gauss–Radau based upper bounds for the scalar case have been developed in [37, 38]. For rational functions of matrices, error bounds for the classical Lanczos algorithms have been obtained in [23]. Recently, error bounds for approximating \(\phi (A)B\) with the Lanczos method have been extended to the block Lanczos method [42]. A connection of the block Lanczos algorithm and matrix continued fraction similar to the one used in this work was described in the context of quantum physics for MIMO transfer function approximations in [30].

The remainder of the article is organized as follows: we review the basic properties of the block Lanczos algorithm in Sect. 2, and we show that the resolvent of the Lanczos block tridiagonal matrix leads to a Stieltjes-matrix continued fraction in Sect. 3. Using these continued fractions we define the notion of Gauss–Radau quadrature for the block case in Sect. 4. This is used in Sect. 5 to derive a two-sided error bound. Convergence acceleration based on averaged Gauss–Radau and Gauss quadrature is presented in the same section with a more detailed treatment in “Appendix A”. Last, numerical examples are presented in Sect. 6.

1.2 Notation

Given two square symmetric matrices \(G_1, G_2\) we use the notation \(G_1<G_2\) to mean that the matrix \(G_2-G_1\) is positive definite. A sequence of matrices \(\{G_m\}_{m\ge 0}\) is said to be monotonically increasing (resp. decreasing) if \(G_m < G_{m+1}\) (resp. \(G_{m+1}<G_m\)) for all m. Positive-definite \(p \times p\) matrices are denoted by Greek letters \(\varvec{\alpha },\varvec{\beta },\varvec{\gamma }\) and matrix-valued functions by calligraphic capital letters such as \(\mathcal{C}(s)\) or \( \mathcal{F}(s)\). Last, for \(\varvec{\alpha }, \varvec{\beta }\in \mathbb {R}^{p\times p}\) and \(\varvec{\beta }\) is nonsingular, we use the notation \(\frac{\varvec{\alpha }}{\varvec{\beta }}:= \varvec{\alpha }\varvec{\beta }^{-1}\) (right inversion). The matrix \(E_k \in \mathbb {R}^{mp\times p}\) has zero elements except for the \(p\times p\) identity matrix in the k-th block, \(E_k =[0,\ldots , 0,I, \ldots , 0]^T\).

2 Block Gauss Quadratures Via the Block Lanczos Algorithm

For scalar \( \mathcal{F}(s)\), i.e. \(p=1\), the approximation of \( \mathcal{F}(s)=B^T (A+ sI)^{-1}B\) by means of \( \mathcal{F}_m(s)=E_1^T (T_m+ sI)^{-1}E_1\), where \(T_m\) is a specifically designed s.p.d. tridiagonal matrix, can be classically interpreted as a quadrature formula for integrals [3, 36]. The block setting with \(p>1\) and \(T_m\) a specifically designed s.p.d. block tridiagonal matrix allows for an analogous block quadrature interpretation.

Consider the eigendecompositions \(A=Z\Lambda Z^T\) with eigenpairs \((\lambda _i,z_i)\) and \(T_m=\tilde{Z}_m \tilde{\Lambda }_m \tilde{Z}_m^T\), \(T_m \in \mathbb {R}^{mp\times mp}\) with eigenpairs \((\tilde{\lambda }_i,\tilde{z}_i)\). Then we can rewrite \( \mathcal{F}(s)\) as an integral

with symmetric, matrix valued spectral measure

with \(H(\cdot )\) the Heaviside step function. The approximation \( \mathcal{F}_m(s)\) of this integral can be written as

which can be interpreted as a quadrature rule \(\sum _i w_i \frac{1}{x_i+s}\) with weights \(w_i=(\tilde{z}_i^T E_1)^T(\tilde{z}_i^T E_1)\) and nodes \(x_i=\tilde{\lambda }_i\). Such a quadrature rule is said to be a Gauss quadrature rule if it exactly integrates polynomials of degree (strictly) less than 2m. A quadrature rule is called Gauss–Radau quadrature rule if additionally, one quadrature node is fixed at either end of the integration interval. In this paper we consider matrices A stemming from the discretization of a differential operator with continuous spectrum approaching 0, hence we will fix a quadrature node at \(x_0/\tilde{\lambda }_0 = 0\) [24].

Gauss–Radau quadrature has been extended to the block case in Section 4.3 of [35] where p quadrature nodes are prescribed that are distinct and larger than the maximum eigenvalue of A. In this extension of the Gauss–Radau quadrature to the block case, we propose to prescribe the first quadrature point at 0 with quadrature weight \(w_i\) of rank p.

To obtain a (block)-symmetric \(T_m\) and a quadrature approximation the block Lanczos algorithm (Algoritm 1) is used. Let us assume that we can perform m steps, with \(mp \le n\), of the block Lanczos iteration without breakdowns or deflationFootnote 1 [26, 39]. As a result, the orthonormal block vectors \(Q_i\in \mathbb {R}^{n \times p}\) form the matrix \({\varvec{Q}}_m=[Q_1, \ldots , Q_m]\in \mathbb {R}^{n\times mp}\), whose columns contain an orthonormal basis for the block Krylov subspace

The Lanczos iteration can then be compactly written as

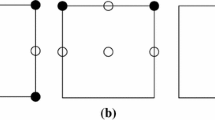

where \(T_m\) is the symmetric positive definite block tridiagonal matrix

and \(\varvec{\alpha }_i, \varvec{\beta }_i \in \mathbb {R}^{p \times p}\) are the block coefficients in Algorithm 1.

Using the Lanczos decomposition, \( \mathcal{F}(s)\) can be approximated as

This approximation is known as a block Gauss quadrature rule. In the following, we show that \( \mathcal{F}_m\) is monotonically increasing with m, i.e. \( \mathcal{F}_m< \mathcal{F}_{m+1}< \mathcal{F}(s)\) and thereby converges monotonically. Analogous results for the non-block case (i.e., \(p=1\)) for a larger class of functions were obtained in [8, 19, 36]. We also introduce a computable upper-bound matrix \( {\tilde{\mathcal{F}}}_m\) that monotonically decays as iterations increase, that is \( {\tilde{\mathcal{F}}}_m> {\tilde{\mathcal{F}}}_{m+1}> \mathcal{F}> \mathcal{F}_m\). These two-sided bounds will then provide an error measure and allows us to reduce the approximation error using an averaging formula. The quantity \( {\tilde{\mathcal{F}}}_m\) may be interpreted as an extension to \(p>1\) of the approximation via a Gauss–Radau quadrature rule introduced in [36].

Remark 1

The simple Lanczos algorithm using only three-term recursions without re-orthogonalization is known to be unstable due to computer round-offs. The instability is manifested by the loss of orthogonality of the Lanczos vectors and the appearance of spurious copies of the Lanczos eigenvalues. However, this instability only dampens the spectral adaptation of the approximation of actions of regular enough matrix functions and does not affect the converged approximation [9, 13, 27]. Likewise, even though the spectral nodes and weights of the Gaussian quadratures computed via the simple Lanczos algorithm can be affected by round-off, the converged quadrature rule is not [25, 32]. Moreover, compared to the actions of matrix functions, the quadrature convergence is less affected by the loss of orthogonality of Lanczos vectors, because these vectors are not explicitly used in the computation of the Gaussian quadratures. We assume that the same reasoning applies to the block extensions as well to the Gauss–Radau quadrature, which we observe in our numerical experiments. Specifically, we assume that \(T_m\) obtained from three-term block recursions is s.p.d. and (thereby) that its block Cholesky factorization exists.

3 Gauss Quadrature and the Matrix S-fraction

We represent the block tridiagonal Lanczos matrix \(T_m\) (see Eq. (4)) in a block \(LDL^T\) decomposition.

Lemma 1

Let \(T_m\) be as defined in Eq. (4). Then there exist \(\hat{\varvec{\kappa }}_{i}\in {\mathbb {R}}^{p\times p}\), \(\hat{\varvec{\kappa }}_1=I_p\) and \(\varvec{\gamma }_{i}\in {\mathbb {R}}^{p\times p}\) all full rank such that

where

and \(\varvec{\alpha }_1=(\hat{\varvec{\kappa }}_{1}^{-1})^T\varvec{\gamma }_1^{-1}\hat{\varvec{\kappa }}_{1}^{-1}=\varvec{\gamma }_1^{-1}\), \(\varvec{\alpha }_i=(\hat{\varvec{\kappa }}_{i}^{-1})^T(\varvec{\gamma }_{i-1}^{-1}+\varvec{\gamma }_{i}^{-1})\hat{\varvec{\kappa }}_{i}^{-1}\) and \(\varvec{\beta }_i= {-(\hat{\varvec{\kappa }}_{i}^{-1})^T \varvec{\gamma }_{i-1}^{-1} \hat{\varvec{\kappa }}_{i-1}^{-1}}\) for \(i=2,\ldots ,m\).

Proof

The block \(LDL^T\) decomposition for positive definite, tridiagonal matrices consists of a block bidiagonal L with identity as its diagonal block and the block diagonal s.p.d. D. The parametrization in Eq. (6) is a general parameterization of such a \(LDL^T\) decomposition with \(L=(\widehat{\varvec{K}}_{m}^{-1})^T {J}_{m} (\widehat{\varvec{K}}_{m})^T\) and \(D=(\widehat{\varvec{K}}_{m}^{-1})^T \varvec{\Gamma }^{-1}_m (\widehat{\varvec{K}}_{m}^{-1})\).

The \(p\times p\) matrices must be of full rank, since they are Cholesky factors of the s.p.d. Lanczos matrix (6), which is of full rank. Further by the same reasoning, the (block)-diagonal factor \({\varvec{\Gamma }}_m\) is s.p.d. since D is s.p.d. . \(\square \)

We can extract the matrices \(\varvec{\gamma }_i\)’s and \(\hat{\varvec{\kappa }}_{i}\)’s directly during the block Lanczos recurrences using the coefficients \(\varvec{\alpha }_i\)’s and \(\varvec{\beta }_i>0\)’s. This is reported in Algorithm 2, which in a different parameterization was derived in Appendix C of [14]. From their construction, we obtain that \(\hat{\varvec{\kappa }}_i\), \(\varvec{\gamma }_{i}\) are full rank, as long as no deflation occurs in the block Lanczos recurrence, as \(\varvec{\beta }_i^T\varvec{\beta }_i>0\) for \(i=2,\ldots ,m\), i.e. nonsingularity of all coefficients \(\varvec{\alpha }_i,\varvec{\beta }_i\) ensures that Algorithm 2 runs without breakdowns. This algorithm is also related to the matrix continued fraction construction via block Lanczos algorithm used for computation of quantum scattering problems [30].

To write \( \mathcal{F}_m\) as a matricial continued-S-fractions we first convert \(T_m\) to pencil form using the factors introduced in (6). To this end, we introduce the matrices \(\varvec{\hat{\gamma }}_j\)

The matrices \(\varvec{\gamma }_j\) and \(\varvec{\hat{\gamma }}_j\) are known as the Stieltjes parameters [16] and they are both s.p.d. if block Lanczos runs without breakdown.

Let \( Z_m:= {J}_m \varvec{\Gamma }_m^{-1} {J}_{m}^T\) and \(\varvec{\widehat{\Gamma }}_m=\textrm{blkdiag}(\varvec{\hat{\gamma }}_{1},\dots ,\varvec{\hat{\gamma }}_{m})\). Then, due to the initial condition \(\varvec{\hat{\gamma }}_1=I_p\), the function \( \mathcal{F}_m\) can be rewritten using the pencil form \((Z_m,\varvec{\widehat{\Gamma }}_m)\), that is

Next, we show that \( \mathcal{F}_m\) is a matricial Stieltjes continued fraction (S-fraction) that can be written as

where the basic building block of these S-fractions is given by the recursion

Proposition 1

Let the recursion \(\mathcal{C}_i(s)\), \(i=m-1, \ldots , 1\) as in (10) be given. Then for \(s\in {\mathbb {R}}_+\) and \(\mathcal{C}_{m+1}(s) = 0\), the function \( \mathcal{F}_m\) is a continued matrix S-fraction defined as

This result can be formally related to earlier works by Duykarev et al. [16, 17] in matricial continued S-fraction. The proof used here is related to the derivation by Ingerman (Section 2.4 [28]).

Proof

We recall that \( \mathcal{F}_m(s)\) corresponds to the first \(p\times p\) block of the solution \({ U}_m\) of the linear system

Let \({ U}_m = [\mathcal{U}_1; \ldots ; \mathcal{U}_m]\), with \(\mathcal{U}_i\) a \(p\times p\) block. We notice that \( U_m\) is full column rank. Let us write down the relevant block equations of this system, that is

As a preliminary observation, we note that we can assume that all \(\mathcal{U}_i\) are full rank. Indeed, since the coefficient matrix is block tridiagonal and unreducible, if there exists a nonzero vector v such that \(\mathcal{U}_{i}v=0\) for some i, the components of \(\mathcal{U}_1v\) can be obtained by solving the \((i-1)\times (i-1)\) system with the top-left portion of the coefficient matrixFootnote 2.

For \(1<i \le m\), let us formally define the operation

Note that this allows us to write

Rearranging terms for \(i=m\) in the Eq. (14), it holds

so that since \(\mathcal{U}_m\) is non-singular, we obtain \(\mathcal{C}_m^{-1}= s \hat{\varvec{\gamma }}_m + \frac{1}{\varvec{\gamma }_m}\). Reordering terms similarly in the ith Eq. (13), with \(1<i < m\), we obtain

Using (15) with index \(i+1\) in place of i and substituting, we rewrite the equation above as

yielding the following recurrence for \(\mathcal{C}_i\) (for \(\mathcal{U}_i\) nonsingular)

Rearranging also the first Eq. (12), we have

so that \(s\hat{\varvec{\gamma }}_1 \mathcal{U}_1 + \mathcal{C}_2^{-1} \mathcal{U}_2 = I_p\). Using (15), we obtain \(s\hat{\varvec{\gamma }}_1 \mathcal{U}_1 + (\mathcal{C}_2+\varvec{\gamma }_1)^{-1} \mathcal{U}_1 = I_p\), from which we get the final relation,

\(\square \)

4 Block Gauss–Radau Quadrature

Let us modify the lowest floor of (9) by replacing \(\varvec{\gamma }_m\) with \(\frac{\varvec{\gamma }_m}{\epsilon }\), \(\epsilon >0\) and keeping the remaining Stieltjes parameters unchanged. In the limit of \(\epsilon \rightarrow 0\) such a modification yields

Equivalently, such a limiting transition can be written using the resolvent of a block tridiagonal matrix as

where

and \(\varvec{\alpha }_i\), \(i=1,\ldots , m-1\) and \(\varvec{\beta }_i\), \(i=2,\ldots , m\) are the same block coefficients as in Algorithm 1, with

using \(\varvec{\gamma }_m\), \(\hat{\varvec{\kappa }}_{m}\) computed via Algorithm 2. The following proposition is fundamental for understanding the distinct properties of \( \mathcal{F}_m\) and \( {\tilde{\mathcal{F}}}_m\) and the relation of \( {\tilde{\mathcal{F}}}_m\) to the Gauss–Radau quadrature.

Proposition 2

The matrix function \( \mathcal{F}_m(s)\) is analytical and of full rank at \(s=0\) as

Further, \( {\tilde{\mathcal{F}}}_m(s)\) has a pole of order one at \(s=0\) with a residue matrix of rank p, specifically

Proof

The basic building block of the considered S-fractions is given in (10), which allows us to succinctly define the truncated matrix continued S-fraction (16) recursively as

in the same fashion we defined \( \mathcal{F}_m(s)\) in equation (16) via (11).

We will start with (19) and use the recursions (10) and (11) to compute \( \mathcal{F}_m(0)\). First, we notice that \(\mathcal{C}_m(0)=\varvec{\gamma }_m\). Then for \(i\le m\) we get \(\mathcal{C}_{i-1}=\mathcal{C}_{i}+\varvec{\gamma }_{i-1}\) which proves (19) by induction.

Likewise, (20) can be obtained from the recursion (10) and (21). We will start with computing \((\lim _{s\rightarrow 0} s\mathcal{C}_{m})^{-1}=\varvec{\hat{\gamma }}_{m}\) and then get the recursion

Then (20) will be computed by substituting \(\mathcal{C}_1\) into the last equality of (21). The inverse of the sum of s.p.d. matrices is of full rank, which concludes the proof. \(\square \)

The poles of \( {\tilde{\mathcal{F}}}_m\) (as of any matrix S-fraction) are real non-positive and the pole at \(s=0\) is of first order with residue matrix of rank p, which follows from the second result of Proposition 2. Therefore, \(\tilde{T}_m\) has eigenvalue zero with multiplicity p and we arrive at the following corollary.

Corollary 1

The symmetric block tridiagonal matrix \(\tilde{T}_m\) is semi-definite with p-dimensional null space.

Because it is obtained from \(T_m\) by modifying only the last diagonal block element \(\tilde{\varvec{\alpha }}\), \(\tilde{ \mathcal{F}}_m\) can be viewed as a natural extension of the Gauss–Radau quadrature introduced in [36] for \(p=1\), thus we will call it block Gauss–Radau quadrature.

Remark 2

Other choices of \(\tilde{\varvec{\alpha }}_m\) that lead to block Gauss–Radau rules are discussed in [35], where p distinct eigenvalues of the block tridiagonal matrix \(\tilde{T}_m\) are prescribed by solving a block tridiagonal system. Here we consider discretizations of differential operators with a contentious spectrum where the lowest eigenvalue tends to zero such that we choose p eigenvalues at zero.

Remark 3

The theory presented in this manuscript was developed for s.p.d. matrices. In the case A is a nonnegatvive definite matrix and the intersection of the column space of B with the null space of A is empty (\(\textrm{col}(B) \cap \textrm{null}(A) = \{\varnothing \}\)) we can still use block Lanczos algorithms to approximate the considered quadratic forms. In exact arithmetic \(T_m\) would be positive definite for this case, a property that is lost in computer arithmetic. However, a spectral correction can be applied to the eigenvalues of \(T_m\) that are not positive by expanding the spectral projectors introduced for the non-block case in [12] to the block case. Essentially, eigenvalues below a spectral threshold are shifted to the threshold and block Lanczos is rerun on the spectrally corrected matrix to restore the block tridiagonal structure. In the numerical experiments presented in Sects. 6.1.2 and 6.2 the considered matrix A is nonnegative definite, yet no spectral corrections were needed.

5 Two-Sided Error Bounds and Convergence Acceleration Via Averaging of Gauss and Gauss–Radau Rules

5.1 Monotonically Convergent Lower and Upper Bounds

In the absence of deflation, Gaussian quadrature \( \mathcal{F}_m(s)\), as all Krylov approximations eventually do, converges to \( \mathcal{F}(s)\). Gauss–Radau quadrature \( {\tilde{\mathcal{F}}}_m(s)\) differs from \( \mathcal{F}_m(s)\) only by the absence of the lower floor in the S-fraction representation, thus it also converges to the same limit. We next prove that the two sequences give monotonic two-sided bounds and that their difference defines an upper bound.

Theorem 1

For a given real positive s, the following holds,

-

(i)

The matrix sequence \(\{ \mathcal{F}_m(s)\}_{m\ge 1}\) is increasing, i.e. \( \mathcal{F}_m(s)< \mathcal{F}_{m+1}(s)\);

-

(ii)

The matrix sequence \(\{ {\tilde{\mathcal{F}}}_m(s)\}_{m\ge 1}\) is decreasing, i.e. \( {\tilde{\mathcal{F}}}_m(s)> {\tilde{\mathcal{F}}}_{m+1}(s)\).

Proof

From the recursion (10) with final condition given by (11) \(\forall s>0\) and \(i<m+1\) we get \(\mathcal{C}_i(s)>0\). From (10) it follows that we get \( \mathcal{F}_{m-1}\) if we impose \(\mathcal{C}_{m}=0\). From (10) it also follows that \(\mathcal{C}_i\) is monotonic with respect to \(\mathcal{C}_{i+1}\) (\(\mathcal{C}_{i+1}\) in the denominator of \(\mathcal{C}_{i}\)’s denominator), so the replacement of the final condition in (11) by \(\mathcal{C}_{m}=0\) decreases \(\mathcal{C}_{m-1}\), that consequentially decreases \(\mathcal{C}_{m-2}\), etc., and by induction we obtain \( \mathcal{F}_{m-1}< \mathcal{F}_m\).

For proving (ii), we recall that one can get \( {\tilde{\mathcal{F}}}_{m-1}\) by terminating the S-fraction with \(\mathcal{C}_{m}=+\infty \). Then with the same monotonicity argument as for \( \mathcal{F}_m\), it follows that such a substitution recursively increases \(\mathcal{C}_i\), \(i=m-1,m-2,\ldots , 1 \). \(\square \)

Now we are ready to formulate a two-sided Gauss–Radau-type estimate.

Proposition 3

Let Algorithm 1 produce \(\varvec{\beta }_j^T\varvec{\beta }_j>0\) for \(j\le m\). Then

Proof

We apply Theorem 1 to \( \mathcal{F}_m\) and \( {\tilde{\mathcal{F}}}_m\), yielding \(0< \mathcal{F}_{m-1}(s)< \mathcal{F}_{m}(s)\) and \( {\tilde{\mathcal{F}}}_{m}(s)< {\tilde{\mathcal{F}}}_{m-1}(s)\). To conclude the proof, we notice that for s satisfying the condition of the proposition \(\lim _{m\rightarrow \infty } \mathcal{F}_m(s)= \mathcal{F}(s)\), i.e., \( \mathcal{F}_m(s)< \mathcal{F}(s)\). From \(T_m- \tilde{T}_m\ge 0\) we obtain

with \(V(r)=[T_m+ r(\tilde{T}_m-T_m)+sI]^{-1}E_1\), that concludes the proof. \(\square \)

The ordering above provides us with a computable error estimate of the approximation of \( \mathcal{F}\), that is

The two-sided bounds of Proposition 3 indicate the possibility of using their averaging to compute an error correction. Such an approach was considered in the works of Reichel and his co-authors for Gauss–Radau quadrature with \(p=1\) [36] and for \(p>1\) for anti-Gauss quadratures [18]. Here we identify an important class of PDEs operators for which the averaging is particularly efficient.

5.2 Acceleration Via Averaged Gauss–Radau and Gauss Rules

We propose two averaging formulas for the developed block Gauss–Radau and block Gauss rules to accelerate convergence. We motivate and analyze these averaging formulas in “Appendix A”.

The first averaging formula, which we refer to as “Averaging 1" in the numerical experiments, is the arithmetic average of the Gauss and Gauss–Radau approximation

We stress that the computation of \(\tilde{ \mathcal{F}}_{m+1}(s)\) requires the same m steps of the block Lanczos algorithm as \( \mathcal{F}_{m}(s)\).

In “Appendix A” (for \(p=1\)) we show that for the cases with linear convergence (corresponding to the problems arising from discretzation of operators with continuous spectrum, e.g., PDEs in unbounded domains) such an averaging leads to approximate error reduction by a factor equal to the linear convergence rate of Gauss or Gauss–Radau quadratures, so the proposed averaging will be beneficial for problems with slow linear convergence.

The second averaging formula, which we refer to as “Averaging 2" in the numerical experiments is the geometric mean

of the arithmetic mean \(\widehat{\mathcal{F}}_m(s)\) and harmonic mean

This average is invariant if we substitute \( \mathcal{F}^{-1}\) for \( \mathcal{F}\) and reverse their order, i.e. the averaging formula for \( \mathcal{F}\) and \( \mathcal{F}^{-1}\) are the same. This is a significant property of relevance for matrices A stemming from discretizations of 2D PDEs as explained in “Appendix A”. The simple averaging formula (23) lacks this property.

6 Numerical Examples

In this section, we consider the application of the developed error bounds and show that the averaging formulas accelerate convergence for MIMO transfer function computations for PDE operators with continuous or very dense spectrum when Krylov subspace approximations lose their spectral adaptivity. This phenomenon can only be observed for large-scale problems, thus dimensions of our experiments are of order \(10^5-10^6\). Though the theory in this manuscript is limited to the resolvent with \(s\in \mathbb {R}^+\), we stress that the error bound, monotonicity and error correction via averaging seem to hold for imaginary s and other functions such as \(\phi (A)=\exp (-A t)\). We also explore possible accelerations stemming from adding random vectors to the initial block. All errors reported are measured in the 2-norm.

6.1 Transfer Functions of PDE Operators on Unbounded Domains

The computation of transfer functions of self-adjoint PDE operators on unbounded domains is the main target of the proposed approach. For accurate PDE discretization of such domains, we adopted the optimal grid approach [29] allowing spectral convergence in the exterior part of the computational domain, e.g., see [10] with a more detailed explanation of optimal grid implementation. A trade-off of the spectral approximation of the exterior problem is that A’s spectrum approximates the continuum spectrum of the exact problem well, and thus the Krylov subspace approximations lose their adaptability. The proposed averaging attempts to alleviate this problem by adapting to continuous spectra.

6.1.1 2D Diffusion

We discretize the operator

on an unbounded domain using second-order finite differences on a \(300\times 300\) grid. In the grid center where the variations of \(\sigma (\textbf{x})\) are supported, we use a grid size of \(\delta _x=1\). This formulation can be associated with both heat transfer and the scalar diffusion of electromagnetic fields. To approximate this, we consider a Dirichlet problem on a bounded domain with \(N_{opt}=10\) exponentially increasing grid steps in the exterior and a uniform grid in the interior part. Utilizing the optimal geometric factor of \(\exp {(\pi /\sqrt{10})}\) as indicated by [29], we achieve spectral accuracy in the exterior region of the domain, thereby effectively approximating the boundary condition at infinity (exponential decay). The resulting grid and function \(\sigma (\textbf{x})\) are shown in Fig. 1. Only the first two steps of the geometrically increasing grid are shown in the figure since they increase rapidly. In this case, B is a vector of discrete delta functions with entries at grid nodes corresponding to the transducer locations shown in the same figure as Trx.

The convergence for \(s=10^{-3}\) is shown in Fig. 2 alongside the convergence curve for purely imaginary shifts \(s=\imath \cdot 10^{-3}\) in Fig. 2a. For imaginary shifts s, block Lanczos converges slower. The monotonicity result from Theorem 1 and the error bound from Eq. (22) are only proven for \(s\in \mathbb {R}^+\). Despite this the convergence for purely imaginary s is monotone in this example, the upper bound holds and the averaged quadrature rules reduce the approximation error by an order of magnitude. For the chosen shifts both averaging formulas perform equally well.

In Fig. 2b we compare the introduced methods with the averaged anti-Gauss rules developed in [18]. An anti-Gauss rule can be obtained by modifying the last off block diagonal entry of \(T_{m+1}\), which we call \(\varvec{\beta }_{m+1}\), by multiplication with the factor \(\sqrt{2}\). Let this matrix be called \(T_{m+1}^\mathcal{H}\). There is no guarantee that this matrix is positive definite, and in about 38% of the iterations for the 2D diffusion problem it is not. In this experiment the averaged block anti-Gauss rule

has the same error order as the block Gauss rule but is less stable due the presence of negative eigenvalues.

In Fig. 2c the convergence for the matrix exponential \(\phi (A)=\exp {(-At)}\) for \(t=10^{5}\) is shown. The convergence is superlinear, the error bound becomes tight with increased iterations and the averaged quadrature rule from formula (24) performs better for low iteration counts. Numerical results for accelerating convergence further through subspace enrichment by adding random starting vectors to B in \(\mathcal {K}_m(A,B)\) are presented in “Appendix B”.

6.1.2 3D Electromagnetic Diffusion

In this example we solve the 3D Maxwell equations in the quasi-stationary (diffusive) approximation. Krylov subspace approximation of action of matrix functions for the solution of that problem was introduced in [11] and remains the method of choice for many important geophysical applications. The diffusive Maxwell equations in \({\mathbb {R}}^{3}\) can be transformed to the form

where \(\sigma \) is the electrical conductivity, \(\mu _0\) the vaccum permeability, \(\mathcal {J}^{\textrm{ext}}_{(r)}\) is an external current density indexed by transmitter index (r) causing the electrical field strength \(\mathcal {E}_{(r)}(\textbf{x},s)\). For \(s\notin {\mathbb {R}}_-\) the solution of (27) converges to 0 at infinity, i.e.

We approximate (27)–(28) by the conservative Yee grid similarly to [11]. Following the same framework as in the diffusion problem in \({\mathbb {R}}^2\) described earlier, we truncate the computational domain with Dirichlet boundary conditions for the tangential components of \(\mathcal {E}_{(r)}\) on the domain boundary with a optimally geometrically progressing grid, which gives spectral convergence of the condition (28) at infinity. In our particular realization, we used \(N_{opt}=6\) gridsteps geometrically increasing with geometric scaling factor \(\exp {(\pi /\sqrt{6})}\) according to [29]. The grid is \(N_x=80\), \(N_y=100\) and \(N_z=120\) gridsteps large leading to a matrix A of size \(N=2\, 821\, 100\). The configuration has a homogeneous conductivity of \(\sigma =1\) in the entire domain except for two inclusions shown in Fig. 3a where \(\sigma =0.01\). As current densities, we use 6 magnetic dipoles (approximated by electric loops), with 3 dipoles located above the inclusion and 3 dipoles located in the middle of the inclusions as highlighted by the arrows in Fig. 3a. Such configuration corresponds to the tri-axial borehole tools used in electromagnetic hydrocarbon exploration [41] and the matrix B thus has 6 columns.

The operator and its discretization are nonnegative definite; however, the vectors B corresponding to divergence-free magnetic sources are intrinsically orthogonal to the operator’s null space, consisting of potential solutions. Thus, it satisfies the conditions in Remark 3.

In Fig. 3b the convergence is shown for various real values of s after \(m=400\) block iterations. The error bound is tight across all values for s and there is no difference in averaging formulas. Further, in Fig. 3c the convergence for this test case is shown for a real shift of \(s=0.05\) and in Fig. 3d for an imaginary shift.

6.2 Graph Laplacian

Efficient computation of SISO and MIMO transfer functions of large graph-Laplacians is important for efficient clustering of big data sets, computation of centrality measures and other applications [4, 15, 36]. For our numerical experiments we take the web-BerkStan graph from the SNAP (Standford network analysis project) [33] and turn it into an undirected graph by symmetrization. The original graph has 685230 nodes and 7600595 edges and we construct the normalized graph Laplacian A as our test matrix. The nodes of the graph represent pages from berkeley/stanford.edu domains and directed edges (here converted to undirected edges) represent hyperlinks between them. The data were collected in 2002.

As B matrix we take \(p=3\) (graph) delta functions chosen at random nodes.Footnote 3 The graph Laplacian is nonnegative definite, where the null space of the graph Laplacian consists of constant vectors on every connected graph component. The columns of B are graph delta functions in our experiments and thus not orthogonal to the null space; however, excluding the case of connected graph components of dimension 1 (single disconnected nodes), the intersection of the column space of B with the null space of A is empty, and as such, satisfies the condition of Remark 3.

In Fig. 4a we evaluate the resolvent \(\phi (A,s)=(A+s I)^{-1}\) for \(s=10^{-4}\) showing that the error bound is tight and the averaging formulas give similar results. After \(m=50\) block iterations the error for various values of s is displayed in Fig. 4.

In contrast to PDE operators on unbounded domains, data-related graphs may exhibit spectral distributions with non-vanishing gaps even at very large dimensions. Consequently, they cannot be regarded as approximations of the problems discussed in Proposition 5. Nevertheless, as illustrated in Fig. 4a, quadrature approximations for such graphs can demonstrate linear convergence, in which case they satisfy tight block Gauss–Radau bounds and their averaging will be efficient.

7 Conclusions

In this paper, we derived a block extension of Gauss–Radau quadrature for the computation of MIMO transfer functions by leveraging the connection between the block Lanczos algorithm and matrix Stieltjes continued fractions. We proved that the block Gauss and Gauss–Radau quadrature rules provide monotonically convergent two-sided bounds for the resolvent, important for MIMO transfer functions of LTI dynamical systems with real Laplace frequencies. For problems involving the approximation of operators with a continuous spectrum, we also prove (in the SISO case) that these bounds are tight, thereby justifying the averaging of Gauss and Gauss–Radau approximations.

Our numerical experiments with large-scale graph-Laplacian, 2D diffusion, and 3D Maxwell systems confirmed the tightness of the derived bounds and the efficiency of the averaging formula, not only for the cases analyzed but also for transfer functions of LTI systems with imaginary Laplace frequency and time domain responses. Additionally, we explored the potential of using random enrichment of the initial Lanczos block within the developed algorithm framework. These findings highlight the robustness and broad applicability of our approach targeted for large-scale problems.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Notes

Deflation in the block recurrence is required if for some m, the generated space has dimension strictly less than mp. In that case, the redundant linearly dependent columns generated by the block Lanczos iteration need to be purged, and the block size reduced accordingly, see, e.g., [7]. In the numerical experiment presented in Sect. 6 breakdown did not occur.

This is related to having solved the associated linear system. Indeed, let \({\varvec{Q}}_i\) be the block Lanczos basis, and \(R_i=(A+sI) {\varvec{Q}}_i {U}_i - B\) be the residual matrix associated with the approximate solution \(X_i={\varvec{Q}}_i {U}_i\) to the linear system \((A+sI)X=B\). Using the Lanczos relation (3), it holds that \(R_i={\varvec{Q}}_i (T_i+sI) {U}_i - {\varvec{Q}}_iE_1 + Q_{i+1} {\varvec{\beta }}_{i+1} E_i^T {U}_i = Q_{i+1} {\varvec{\beta }}_{i+1} E_i^T {U}_i\), with \({U}_i=[\mathcal{U}_1;\ldots ; \mathcal{U}_i]\), that is \(R_i=Q_{i+1} {\varvec{\beta }}_{i+1} \mathcal{U}_i\).

With random seed 1 we obtain delta functions at nodes 207168, 100562, and 63274.

References

Amsel, N., Chen, T., Greenbaum, A., Musco, C., Musco, C.: Near-optimal approximation of matrix functions by the Lanczos method. In: Proceedings of the Thirty-Eighth Annual Conference on Neural Information Processing (2024)

Baker, G.A., Graves-Morris, P.: Padé Approximants, Encyclopedia of Mathematics and its Applications, 2nd edn. Cambridge University Press, Cambridge (1996)

Benzi, M., Boito, P.: Quadrature rule-based bounds for functions of adjacency matrices. Linear Algebra Appl. 433(3), 637–652 (2010)

Benzi, M., Boito, P.: Matrix functions in network analysis. GAMM-Mitteilungen 43 (2020)

Benzi, M., Golub, G.H.: Bounds for the entries of matrix functions with applications to preconditioning. BIT Numer. Math. 39, 417–438 (1999)

Berryman, J.G., Kohn, R.V.: Variational constraints for electrical-impedance tomography. Phys. Rev. Lett. 65, 325–328 (1990)

Cullum, J., Willoughby, R.A.: Lanczos algorithms for large symmetric eigenvalue computations. Birkhäuser, Basel (1985). Vol. 1, Theory, Vol. 2. Program

Druskin, V.: On monotonicity of the Lanczos approximation to the matrix exponential. Linear Algebra Appl. 429(7), 1679–1683 (2008)

Druskin, V., Greenbaum, A., Knizhnerman, L.: Using nonorthogonal Lanczos vectors in the computation of matrix functions. SIAM J. Sci. Comput. 19(1), 38–54 (1998)

Druskin, V., Güttel, S., Knizhnerman, L.: Near-optimal perfectly matched layers for indefinite Helmholtz problems. SIAM Rev. 58(1), 90–116 (2016)

Druskin, V., Knizhnerman, L.: A spectral semi-discrete method for numerical solution of 3D non-stationary problems in electrical prospecting. Phys. Sol. Earth 24, 641–648 (1988)

Druskin, V., Knizhnerman, L.: Spectral approach to solving three-dimensional Maxwell’s diffusion equations in the time and frequency domains. Radio Sci. 29(4), 937–953 (1994)

Druskin, V., Knizhnerman, L.: Krylov subspace approximation of eigenpairs and matrix functions in exact and computer arithmetic. Numer. Linear Algebra Appl. 2(3), 205–217 (1995)

Druskin, V., Mamonov, A.V., Zaslavsky, M.: Multiscale S-fraction reduced-order models for massive wavefield simulations. Multiscale Model. Simul. 15(1), 445–475 (2017)

Druskin, V., Mamonov, A.V., Zaslavsky, M.: Distance preserving model order reduction of graph-Laplacians and cluster analysis. J. Sci. Comput. 90(1), 32 (2022)

Dyukarev, Y.M.: Indeterminacy criteria for the Stieltjes matrix moment problem. Math. Notes 75, 66–82 (2004)

Dyukarev, Y.M., Choque Rivero, A.E.: The matrix version of Hamburger’s theorem. Math. Notes 91, 493–499 (2012)

Fenu, C., Martin, D., Reichel, L., Rodriguez, G.: Block Gauss and anti-Gauss quadrature with application to networks. SIAM J. Matrix Anal. Appl. 34(4), 1655–1684 (2013)

Frommer, A.: Monotone convergence of the Lanczos approximations to matrix functions of Hermitian matrices. Electron. Trans. Numer. Anal. 35, 118–128 (2009)

Frommer, A., Lund, K., Schweitzer, M., Szyld, D.B.: The Radau–Lanczos method for matrix functions. SIAM J. Matrix Anal. Appl. 38(3), 710–732 (2017)

Frommer, A., Lund, K., Szyld, D.B.: Block Krylov subspace methods for functions of matrices. Electron. Trans. Numer. Anal. 47, 100–126 (2017)

Frommer, A., Lund, K., Szyld, D.B.: Block Krylov subspace methods for functions of matrices II: Modified block FOM. SIAM J. Matrix Anal. Appl. 41(2), 804–837 (2020)

Frommer, A., Simoncini, V.: Error Bounds for Lanczos Approximations of Rational Functions of Matrices, pp. 203–216. Springer, Berlin (2009)

Golub, G.H., Meurant, G.: Matrices, Moments and Quadrature with Applications. Princeton University Press, Cambridge (2010)

Golub, G.H., Strakoš, Z.: Estimates in quadratic formulas. Numer. Algorith. 8(2), 241–268 (1994)

Golub, G.H., Underwood, R.: The block Lanczos method for computing eigenvalues. In: J.R. Rice (ed.) Mathematical Software, pp. 361–377. Academic Press (1977)

Greenbaum, A.: Behavior of slightly perturbed Lanczos and conjugate-gradient recurrences. Linear Algebra Appl. 113, 7–63 (1989)

Ingerman, D.: Discrete and continuous Dirichlet-to-Neumann maps in the layered case. SIAM J. Math. Anal. 31(6), 1214–1234 (2000)

Ingerman, D., Druskin, V., Knizhnerman, L.: Optimal finite difference grids and rational approximations of the square root I. Elliptic problems. Commun. Pure Appl. Math. 53(8), 1039–1066 (2000)

Jones, R.: The recursion method with a non-orthogonal basis. In: Pettifor, D.G., Weaire, D.L. (eds.) The Recursion Method and Its Applications, pp. 132–137. Springer, Berlin (1987)

Knizhnerman, L.: Adaptation of the Lanczos and Arnoldi methods to the spectrum, or why the two Krylov subspace methods are powerful. Chebyshev Digest 3(2), 141–164 (2002)

Knizhnerman, L.A.: The simple Lanczos procedure: estimates of the error of the Gauss quadrature formula and their applications. Comput. Math. Math. Phys. 36, 1481–1492 (1996)

Leskovec, J., Krevl, A.: SNAP Datasets: Stanford large network dataset collection. http://snap.stanford.edu/data (2014)

Liesen, J., Nasser, M.M.S., Sète, O.: Computing the logarithmic capacity of compact sets having (infinitely) many components with the charge simulation method. Numer. Algorith.93, 581–614 (2022). https://api.semanticscholar.org/CorpusID:246276213

Lund, K.: A new block Krylov subspace framework with applications to functions of matrices acting on multiple vectors. Phd thesis, Department of Mathematics, Temple University and Fakultät Mathematik und Naturwissenschaften der Bergischen Universität Wuppertal, Philadelphia, Pennsylvania, USA (2018)

López Lagomasino, G., Reichel, L., Wunderlich, L.: Matrices, moments, and rational quadrature. Linear Algebra Appl. 429(10), 2540–2554 (2008). (Special Issue in honor of Richard S. Varga)

Meurant, G., Tichý, P.: The behavior of the Gauss–Radau upper bound of the error norm in CG. Numer. Algorith. 94(2), 847–876 (2023)

Meurant, G., Tichý, P.: Error Norm Estimation in the Conjugate Gradient Algorithm. Society for Industrial and Applied Mathematics, Philadelphia, PA (2024)

O’Leary, D.P.: The block conjugate gradient algorithm and related methods. Linear Algebra and its Applications 29, 293–322 (1980). Special Volume Dedicated to Alson S. Householder

Reichel, L., Rodriguez, G., Tang, T.: New block quadrature rules for the approximation of matrix functions. Linear Algebra Appl.502, 299–326 (2016). Structured Matrices: Theory and Applications

Saputra, W., Ambia, J., Torres-Verdín, C., Davydycheva, S., Druskin, V., Zimmerling, J.: Adaptive multidimensional inversion for borehole ultra-deep azimuthal resistivity. In: SPWLA Annual Logging Symposium, p. D041S013R005. SPWLA (2024)

Xu, Q., Chen, T.: Xu, Q., Chen, T.: A posteriori error bounds for the block-Lanczos method for matrix function approximation. Numer. Algorith. 98(2), 903–927 (2025)

Acknowledgements

The authors like to thank Leonid Knizhnerman for helpful discussions related to the material in Sect. A and Michele Benzi for discussions related to the spectra occurring in large graphs. The authors would like to thank the anonymous reviewers for their comments and for pointing out relevant literature missed in a previous version of this manuscript.

Funding

Open access funding provided by Uppsala University. Part of this work was performed while Valeria Simoncini (VS) was in residence at the Institute for Computational and Experimental Research in Mathematics in Providence, RI, during the Numerical PDEs: Analysis, Algorithms, and Data Challenges semester program. The work of VS was also funded by the European Union—NextGenerationEU under the National Recovery and Resilience Plan (PNRR)—Mission 4 Education and research—Component 2 From research to business—Investment 1.1 Notice Prin 2022—DD N. 104 of 2/2/2022, entitled “Low-rank Structures and Numerical Methods in Matrix and Tensor Computations and their Application”, code 20227PCCKZ—CUP J53D23003620006. VS is a member of the INdAM Research Group GNCS; its continuous support is gladly acknowledged. The work of Vladimir Druskin was partially supported by AFOSR grants FA 955020-1-0079, FA9550-23-1-0220, and NSF grant DMS-2110773.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Posterior Quadrature Error Correction for Problems with Continuous Spectrum

In this work we mainly target matrices A obtained via (accurate) discretization of operators with continuous spectra corresponding to PDE problems on unbounded domains as detailed in the numerical results. For simplicity, instead of symmetric, matrix valued \( \mathcal{F}\) obtained from MIMO problems, in this appendix we analyze scalar, single-input single-output (SISO) transfer functions corresponding to \(p=1\). For the continuous problems we denote the transfer function as \( {f}\) and denote \( {f}_m\) and \(\tilde{ {f}}_m\) as the SISO counterparts of, respectively, \( \mathcal{F}_m\) and \(\tilde{ \mathcal{F}}_m\).

Specifically, we assume that the support of the continuous part of the spectral measure of \( {f}(z)\) has positive logarithmic capacity [2, 31, 34], e.g., consists of the union of intervals of the real positive semi axis.

That allows us to define a positive potential solution on the complex plane corresponding to the Green function of the unit charge located at \(z=\infty \) with Dirichlet boundary conditions on the support of the continuous components of the spectral measure of \( {f}(z)\). This solution plays a fundamental role in the convergence analysis of the Krylov subspace methods, e.g., g(0) is the linear convergence rate of the Conjugate Gradient Method [31].

First, we recall that the Gauss and Gauss–Radau quadratures match m and \(m-1\) matrix moments of the spectral measure respectively [36], thus

Next, we notice, that \( {f}_m(s)\) is a \([m-1/m]\) rational function, as follows from (9). Also, it follows from (16), that \(s\tilde{f}_m(s)\) is a \([m-1/m-1]\) rational function. Thus \( {f}_m(s)\) and \(s\tilde{f}_m(s)\) are near-diagonal Padé approximants of respectively \( {f}_m(s)\) and \(s {f}_m(s)\) at \(s=\infty \). Both of these functions are Stieltjes, and their Padé approximations converge uniformly in any compact set not including the support of the spectral measure. This together with Proposition 3 allows an adoption of Stahl’s Padé convergence Theorem [2, Theorem 6.6.9] based on potential theory, to this specific case and it can be stated as follows.

Proposition 4

\(\forall [a,b]\), with \(0<a<b\) and \(\forall \epsilon >0\) there exists an m such that

and

Proposition 4 guides us to the error correction formula \(\hat{f}_m=\frac{1}{2} (f_m+\tilde{f}_{m+1})\), which in the \(p>1\) setting is stated in Eq. (23).

Subtracting (31) from (30) we obtain the following estimate:

Proposition 5

For \(p=1\), small g(s) and thus \( 1- e^{-g(s)}\approx g(s)\) the error of the averaged \(\hat{f}_m(s)\) behaves as

for large m.

The potential solution g(s) is small when s is close to the boundary of continuous components of the spectra, i.e., in the case of the spectral interval \([0, \theta _{max}]\), for small positive s. Since 2g(s) is the linear rate of convergence of \( {f}_m\), slower convergence of \( {f}_m\) leads to better convergence of the averaged \(\widehat{f}_m\).

Remark 4

In the context of inverse problem theory, certain PDEs exhibit a significant symmetry structure known as duality, as illustrated in [6]. This duality implies that the Neumann-to-Dirichlet map for the primary problem can be linearly scaled to correspond to the Dirichlet-to-Neumann map for the dual problem. The transfer function \( \mathcal{F}(s)\), when B is localized at a (partial) boundary of the computational domain, can be interpreted as a discretization of the partial Neumann-to-Dirichlet map and, similarly, can be scaled to represent the Dirichlet-to-Neumann map of the dual problem, denoted as \( \mathcal{F}^{-1}(s)\). Following this logic, it can be demonstrated that \(\tilde{\mathcal{F}}_m(s)\) can be transformed into \( \mathcal{F}_{m+1}(s)\) for the dual problem. While a rigorous analysis of this phenomenon within the framework of error correction via averaging is warranted, we leverage this duality intuitively to refine the averaging formula. To adhere to the duality structure, the formula should remain invariant under the substitution of \( \mathcal{F}^{-1}\) for \( \mathcal{F}\), a property that (23) does not satisfy. Hence, we propose a similar, but dual-invariant, formula that satisfies Proposition 5, (see Eq. (24)),

with

Intuitive reasoning in this remark is limited to 2D problems, and interestingly enough our numerical experiments show advantage of such an averaging compared to the simple arithmetic one only for 2D models as shown in Sect. 6.1.1. We hope to study this phenomenon more rigorously in our future work.

Random Enrichment of Initial Block

Adding columns to the initial block B can reduce the number of block iterations only if the Krylov subspaces generated by the columns have a significant intersection. This is not the case for the problems with localized inputs/outputs considered in Sect. 6.1. Such problems however are important in remote sensing applications. To improve convergence for such a class of problems we experiment with adding random column vectors to B for the problem considered in Sect. 6.1.1. We used an enriched block \(B'=[B,R]\), where \(R\in {\mathbb {R}}^{n\times p'}\) is a block of random vectors. The Gauss and Gauss–Radau quadratures using \(\mathcal {K}_i(A,B')\) are computed and the leading \(p\times p\) block of the computed approximation to \({B'}^T\phi (A,s)B'\) is used to approximate \({B}^T\phi (A,s)B\).

In Fig. 5 we illustrate the effect of adding random vectors to B on the convergence of the method for the shift \(s=10^{-2}\) for the 2D diffusion experiments from Sect. 6.1.1. The vectors added have independent, identically distributed entries chosen from the normal distribution \(\mathcal {N}(0,1)\). Since the block Lanczos method is a memory-bandwidth limited algorithm on most modern CPU and GPU architectures, adding extra vectors to B comes at a small increase in computational cost per block Lanczos iteration, yet it greatly affects convergence rates. In the solid black curve only the 3 transducers are used in B, adding one random vector to B increases the convergence rate as shown by the dashed line, and adding two additional random vectors to B accelerated convergence further if expressed in terms of block iterations; see, e.g., [39] for a theoretical analysis. To reach an error of \(10^{-6}\) the block Lanczos iteration with no added vectors needed \(m=202\) iterations (solid black line), with one added random vector in needed \(m=169\) iterations (dashed black line), with 3 added vectors it required \(m=145\) iterations (dotted black line) and with 12 added vectors \(m=86\) iterations (dash-dotted black line). With averaging only \(m=129\) iterations were needed for the case of 3 added random vectors.

The acceleration of averaged quadrature rules is even greater at higher error levels, which can be qualitatively explained as follows. For problems with an (approximately) continuous spectrum, adding vectors to the subspace does not improve the linear convergence rate, resulting in sub-linear acceleration. This effect is amplified by the averaging, which, according to Proposition 5 is more significant at the low linear convergence rates observed in the final stage of sub-linear convergence. This phenomenon can be practically important for applications to inverse problems that tolerate low-precision computations due to measurement errors.

It is important to note that adding random starting vectors leads to an increase in the overall size of the matrix \(T_m\) due to the enlargement of the block size, despite the acceleration in convergence. Thus any reduction in computation speed comes from sparse matrix-matrix multiplications AB which are memory-bandwidth limited for small p.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zimmerling, J., Druskin, V. & Simoncini, V. Monotonicity, Bounds and Acceleration of Block Gauss and Gauss–Radau Quadrature for Computing \(B^T \phi (A) B\). J Sci Comput 103, 5 (2025). https://doi.org/10.1007/s10915-025-02799-z

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-025-02799-z