Abstract

Singly-TASE operators for the numerical solution of stiff differential equations were proposed by Calvo et al. in J.Sci. Comput. 2023 to reduce the computational cost of Runge–Kutta-TASE (RKTASE) methods when the involved linear systems are solved by some LU factorization. In this paper we propose a modification of these methods to improve the efficiency by considering different TASE operators for each stage of the Runge–Kutta. We prove that the resulting RKTASE methods are equivalent to W-methods (Steihaug and Wolfbrandt, Mathematics of Computation,1979) and this allows us to obtain the order conditions of the proposed Modified Singly-RKTASE (MSRKTASE) methods through the theory developed for the W-methods. We construct new MSRKTASE methods of order two and three and demonstrate their effectiveness through numerical experiments on both linear and nonlinear stiff systems. The results show that the MSRKTASE schemes significantly enhance efficiency and accuracy compared to previous Singly-RKTASE (SRKTASE) schemes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Solving stiff initial value problems (IVPs) efficiently and accurately remains a significant challenge in numerical analysis. Explicit Runge–Kutta (RK) method, face limitations when applied to stiff systems due to their stability restrictions. To address these limitations, a new class of time-advancing schemes for the numerical solution of stiff IVPs was proposed by Bassenne, Fu, and Mani in [1]. They introduced the concept of TASE (Time-Accurate and Stable Explicit) operators, designed to enhance the stability of explicit RK methods. In this approach, instead of solving the differential system

they proposed to solve another (stabilized) IVP

where \( T = T( \varDelta t)\in \mathbb {R}^{d\times d}\) is a linear operator that may depend on the time step size \(\varDelta t >0\) and on F, so that if \(U_{RK}(t_n)\) is the numerical approximation at \(t_n\) of the solution of Eq. (2) obtained by integrating it with an explicit RK method of order p, then it satisfies \( U_{RK}(t_0 + \varDelta t) - Y(t_0 + \varDelta t) = \mathcal {O}(\varDelta t ^{p+1}), \) i.e., approximates the local solution of Eq. (1) with order p, and also satisfies some stability requirements that are necessary for solving stiff systems such as A- or L- stability. In this way, the introduction of the TASE operator into the original governing Eq. (1) allows us to overcome the numerical stability restrictions of explicit RK time-advancing methods for solving stiff systems.

In terms of the accuracy order of the numerical solution of Eq. (2), if the explicit RK scheme has order p and if the TASE operator satisfies

it can be easily proved that the numerical solution of the stabilized system Eq. (2), \(U_{RK}(t_n)\), provided by an explicit RK method of order p has at least order \(\min (p,q)\). Some specific schemes combining explicit p–stage RK methods with orders \( p \le 4\) and TASE operators with the same order were derived in [1].

A more general family of TASE operators was derived in [2] by taking

where W is an approximation to the Jacobian matrix of the vector field F at the current integration step \((t_n, U_{RK}(t_n))\), \(\alpha _j >0\), \( j=1, \ldots ,p\), are free real parameters, and \(\beta _j\) are uniquely determined by the condition \( T_p ( \varDelta t ) = I + \mathcal {O} ( \varDelta t^p) \). The free parameters \(\alpha _j\) were selected to improve the linear stability properties of an explicit RK method with order p for the scaled equation Eq. (2). Further generalization of TASE operators were given in [3], where complex coefficients were allowed in the operator, yet working in real arithmetic.

In the TASE operators described by Eq. (4), each evaluation of T involves the solution of p linear systems with matrices \( ( I - \varDelta t\;\alpha _j W)\), \( j=1, \ldots ,p\). If these systems are to be solved by some LU matrix factorization, it would be much more efficient if the \(\alpha _j\) coefficients were all equal, but this is not compatible with order p for the TASE operator.

To make the methods more efficient, Calvo et al. [4] developed a different family of TASE operators, termed Singly-TASE operators, defined by

These operators lead to significant improvements in computational efficiency while maintaining the stability and accuracy necessary for solving stiff problems.

For an s–stage explicit RK method defined by the Butcher tableau

the numerical solution of Eq. (2) by a Singly-RKTASE method is advanced from \( (t_0, U_0) \rightarrow (t_1= t_0 + \varDelta t, U_1)\) by the formula

where \( K_j \), \( j=1, \ldots ,s\) are computed recursively from the formulas

In this paper, we propose a further modification of the Singly-RKTASE methods to enhance efficiency, so that for the ith stage of the RK method we use a TASE operator defined by

The coefficients \(\alpha >0\), r and \( \beta _{ij}\), \( j=1, \ldots ,r\) must be determined so that the resulting modified singly-RKTASE method

has order p. Compared to Singly-RKTASE methods, instead of s coefficients \(\beta _i\) we have sr coefficients \(\beta _{ij}\), providing more freedom to improve accuracy and stability properties. Although we could take different \(r_i\) for each stage, we have taken the same r for simplicity. In order to get order p for the resulting MSRKTASE method, we can impose, as with Singly-RKTASE methods, that all operators \(T_i\) in (7) have order p. However, this is only achieved if \(r \ge p\). For \(r=p\) the coefficients \(\beta _{ij}\) are uniquely determined, resulting in a Singly-RKTASE and if \(r>p\) we get more freedom in the coefficients, but at the price of increasing the computational cost. Then, we will consider operators \(T_i\) with order \(q < p\). In this case, only order \(\min (q,p)<p\) is guaranteed. Fortunately, we will see that if \(r=p\) it is possible to get final order p with operators \(T_i\) with orders \(q<p\). This will be done by studying the conditions that the methods’s coefficients must satisfy to achieve order p, using the order theory of W-methods [5], following the ideas in [6].

The rest of the paper is organized as follows. In Section 2, we prove that modified Singly-RKTASE methods are a particular case of W-methods. In Section 3, we derive the conditions that the coefficients \(\beta _{ij}\) and \(\alpha \) of the MSRKTASE must satisfy to have order p. In section 4 we develop MSRKTASE methods of orders 2 and 3 with optimal stability and accuracy properties. Finally, in section 5, through numerical experiments, we show the effectiveness of the newly developed methods, highlighting their potential for solving both linear and nonlinear stiff systems with improved performance.

2 Connection Between Modified Singly-RKTASE and W-Methods

Given a matrix W (usually some approximation to the Jacobian matrix of the vector field F(t, Y) at the current integration point \((t_n, Y_n)\)) a singly W-method [5] with \(\hat{s}\) stages provides an approximation to the solution of Eq. (1) at \(t_{n+1}=t_n + h\) by the formulas

The coefficients of the method can be arranged in a Butcher tableau as

On the other side, the product of the operator \(T_i\) in (7) by a vector v can be written as

Then, the Eqs. (7)(8) defining a Modified Singly-RKTASE scheme can be written as

Taking into account that \( {\widehat{K}}_j = {\widehat{K}}_{j-1} + h \alpha W {\widehat{K}}_j, \) we have

and this leads to

This is just an W-method with \({\hat{s}}=sr\) stages whose vector \({\widehat{b}}\) and matrices \({\widehat{A}}\), L are given by

with

This equivalence allows us to analyze the order of Modified Singly-RKTASE methods through the order conditions of W-methods. Also, the absolute stability can be studied through the W-methods. The stability function of a W-method is given by [5, 7]

and the limit of this function when z goes to infinity is given by

We will use this to develop Modified Singly-RKTASE methods. Recall that

-

A method is called A-stable if \(\vert {\hat{R}}( z)\vert \le 1\) for all Re \(z\le 0\);

-

A method is said to be L-stable if it is A-stable and \(\hat{R}(\infty )=0\);

-

A method is called \(A(\theta )\)-stable if \(\vert {\hat{R}}(z)\vert <1\) for all z such that \(\arg (-z) \le \theta \), that is, its stability region contains the left hand region of the complex plane with angle \(\theta \). If in addition \({\hat{R}}(\infty )=0\), it is called \(L(\theta )\)-stable.

3 Order Conditions

Denoting \(\varGamma = \alpha (I+L)\in \mathbb {R}^{{\hat{s}}\times {\hat{s}}}\), and \(\textbf{1}=(1,\ldots , 1)^T\in \mathbb {R}^{{\hat{s}}}\), the order conditions for a W-method are given in Table 1 (see e.g. [5, 7]).

In the case the operator W is exactly the Jacobian matrix, \(W=\partial f/\partial y (t_n, Y_n)\) (Rosenbrock-Wanner methods [8]), many elementary differentials become the same and the order conditions reduce to those in Table 2.

Let us analyze the order conditions for a Modified Singly-RKTASE scheme expressed as its equivalent W-method. We can take without losing generality

because this is equivalent to re-scale the coefficients b, A of the underlying RK method.

First, the order conditions that do not involve the matrix \(\varGamma \) reduce to the order conditions associated to the undelying RK method. For example, \({\hat{b}}^T \textbf{1}=b_1+ \ldots +b_s\), or \(\hat{b}^T {\hat{A}} {\hat{c}} = b^T A c\) which lead to the corresponding equations of the RK method.

On the other hand, the form of the matrix L introduces some incompatibility between some of the order conditions leading to the next theorem.

Theorem 1

A Modified Singly-RKTASE method can not have order \(r+1\).

Proof

Since the matrix \(\varGamma \) is a block diagonal matrix with blocks \(\alpha (I_r+L_r)\), it is clear that \((\varGamma - \alpha I)^r =(\alpha L)^r=0\) because \(L_r\) is strictly lower triangular with dimension r. Then, \((\varGamma - \alpha I)^r \textbf{1}=0\). If the method had order \(r+1\), by the order conditions it should be \(\widehat{b}^T(\varGamma - \alpha I)^r \textbf{1}=\alpha ^r\) which is not zero if \(\alpha \ne 0\). \(\square \)

With this result, a Modified Singly-RKTASE method with order p must have necessarily \(r \ge p\).

4 Modified Singly-RKTASE Methods with Orders 2 and 3

4.1 Methods with \(s=2\), \(r=2\) and order 2

There exists a family of Runge–Kutta schemes with two stages and order two that depend on one coefficient \(c_2\) given by

Since \(b^T A c=0\) for any \(c_2\), the associated order condition of order three does not depend on \(c_2\) and the other one vanishes for \(c_2= 2/3\). So, we will take this value of \(c_2\).

The only remaining condition for a Modified Singly-RKTASE with \(s=r=2\) to achieve order 2 is \({\hat{b}}^T \varGamma \textbf{1} =0\) which is satisfied for

and \(\beta _{11}=1-\beta _{12}, \beta _{21}=1-\beta _{22}\) according to (10). The limit of the stability function when z approaches infinity for the case of our Modified Singly-RKTASE method of order two is given by

There are two values of \(\beta _{12}\) that make \({\hat{R}}(\infty )=0\). The one that yields better results in terms of stability and accuracy is

There is only one free parameter \(\alpha \) left, which we will use to maximize the stability region and minimize the error coefficients. The 2-norm of the error coefficients of order 3 is given by

The corresponding norm when \(W= F'\) is

The method is A-stable (and therefore L-stable) if \(\alpha \in [ 0.3117, 3.257]\) and \(C_3\) and \(D_3\) increase monotonically when \(\alpha \) increases, so the smallest value of \(\alpha \) is more convenient. A good value of the parameter is \(\alpha =32/100\). With this value, we obtain an L-stable method of order 2, which we will name MSRKTASE2. The error coefficients are given in Table 3. For comparison, we also include in this Table the error coefficients of the Singly-RKTASE of order 2 obtained in [4], named SRKTASE2. As we can see, the new method achieves L-stability (SRKTASE2 has \({\hat{R}}(\infty )=1/2\)) and error coefficients that are about 20 times smaller (40 times smaller in the case where \(W=f'\)).

We could have used the parameter \(\beta _{12}\) to obtain smaller error coefficients without imposing the condition \(R(\infty )=0\). For example we could nullify the coefficient \(D_3\) (order three for the case \(W=f'\)) but in this case, we only achieve reasonable stability regions for large values of \(\alpha \) and \(C_3\) becomes very large (greater than one hundred).

The coefficients of the obtained method, MSRKTASE2, are given in the Appendix.

4.2 Methods with \(s=3\), \(r=3\) and Order 3

There exist a family of Runge–Kutta schemes with three stages and order three, defined by the coefficients

depending on two free parameters \(c_2\) and \(c_3\) with \(c_2\ne c_3\) and \(c_2 \ne 2/3\).

The remaining conditions for a Modified Singly-RKTASE with \(s=r=3\) to achieve order 3 form the set of four equations \({\hat{b}}^T \varGamma \textbf{1} =0\), \({\hat{b}}^T \varGamma ^2 \textbf{1} =0\), \({\hat{b}}^T {\hat{A}} \varGamma \textbf{1} =0 \), \({\hat{b}}^T \varGamma {\hat{A}} \textbf{1} =0\) which can be solved for the parameters \(\beta _{12}, \beta _{13}, \beta _{23}\) and \(\beta _{33}\) (\(\beta _{11}, \beta _{21}\) and \(\beta _{31}\) are obtained from (10)), resulting in:

depending on the parameters \(c_2, c_3, \beta _{22}\) and \(\beta _{32}\). Note that in this family of methods of order three, \(\alpha \) is also a free parameter. Imposing \(\beta _{32}=\beta _{22}=\beta _{12}\) we obtain the third order Singly-RKTASE methods obtained in [4].

The free parameters can be selected to maximize the stability region and to minimize the coefficients of the leading term of the local error. For these methods of order three, it is also satisfied that

and the other error coefficients of the term of order four are

If we solve \({\hat{b}}^T \varGamma ^2 {\hat{c}}=0\) and \({\hat{b}}^T{\hat{A}} \varGamma ^2 \textbf{1}=0\) for \(\beta _{22}\) and \(\beta _{32}\) we obtain the method in [4], independent of the values of \(c_2\) and \(c_3\).

The first four equations in (11) depend only on \(c_2\) and \(c_3\) and can not be satisfied simultaneously. The 2-norm of these four equations reaches its minimum value at \(c_2= 0.496188\), \(c_3=0.764887\), very close to \(c_2=1/2\), \(c_3=3/4\) for which

From now on, we will take \(c_2=1/2\) and \(c_3=3/4\). Thus, we have three coefficients \(\alpha , \beta _{22}, \beta _{32}\) to minimize the error coefficients and maximize the stability region.

The limit of the stability function of this method as z approaches infinity is given by

with

There are two values of \(\beta _{32}\) (as functions of \(\alpha \) and \(\beta _{22}\)) that make \({\widehat{R}}(\infty )=0\). We will select one of these values and use the coefficients \(\alpha \) and \(\beta _{22}\) to minimize the error coefficients and maximize the \(L(\theta )\)-stability angle.

The 2-norm of the error coefficients is given by

Minimizing this norm is equivalent to minimizing (the other coefficients are constant) \( C_{45}^2 + C_{46}^2 + C_{47}^2. \) A good compromise between large \(\theta \) and small \(C_4\) is obtained for \(\alpha = 0.54\), which yields \(\beta _{22} = -6.1\) and \(\beta _{32} = -2.75034\), resulting in a method, that we will name MSRKTASE3a, with stability angle and error coefficients given in Table 3. The coefficients of this method are given, with 32 digits, in the Appendix.

Alternatively, we can minimize the 2-norm of the coefficients of the leading term in the case that W is exactly the Jacobian matrix,

This is equivalent to minimize \(|D_{41}|= {\hat{b}}^T ( \varGamma +{\hat{A}}) ^3 \textbf{1} -1/24\) (the other coefficients are constant). This error coefficient can be vanished when

Now, the remaining coefficient \(\alpha \) can be used to minimizing \(\theta \) and \(C_4\). Unfortunately, when \(\alpha \) decreases \(C_4\) decreases but \(\theta \) increases. A good compromise between both quantities is obtained when \(\alpha = 0.56\), which yields \(\beta _{22} = 0.417075\) and \(\beta _{32} = -8.03347\), resulting in a method, that we will name MSRKTASE3b, with stability angle and error coefficients given in Table 3. The coefficients of this method, with 32 digits, are given in the Appendix.

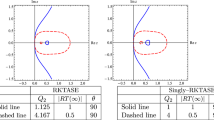

In Fig. 1 we plot the boundary of the stability regions of the proposed methods. The method with minimal \(D_4\) is shown in red-dashed, the method with minimal \(C_4\) in red-solid, and the method from [4] in blue.

We can see from these results that the new method MSRKTASE3a, has similar stability properties to the method of order 3 in [4], but has about 35 times smaller error coefficients. The new method is expected to provide more accurate approximations than the one in [4]. The other method, MSRKTASE3b, also has much smaller error coefficients, although the stability angle is smaller. Nonetheless, its stability region is not significantly worse.

5 Numerical Experiments

To evaluate the performance of the new methods, we considered two test problems and integrated them using the two new methods, MSRKTASE3a and MSRKTASE3b. For comparison, we also used the method proposed in [4] (SRKTASE3) to demonstrate the improvements of these new modified singly-RKTASE methods over the previous singly-RKTASE methods. Additionally, to benchmark the performance of the new methods against other known Runge–Kutta methods for stiff problems, we integrated the problems with a Singly-Diagonally Implicit RK method of order 3 (SDIRK3), proposed in [9, 10], that uses s=4 stages, and also with the W-method ROW3b with s=3, order 2 but order 3 when \(W= F'\), proposed by Gonzalez-Pinto and coworkers in [11]. The norm of the coefficients of the leading term of the local error of these methods is given in Table 3. Since the SDIRK3 method is implicit, we used a simplified Newton method to solve the stage equations, approximating the Jacobian matrix with the matrix W used in the TASE operators.

For each method and problem, we computed the CPU time required for solving it and the 2-norm of the global error at the end of the integration interval. These data points allowed us to plot the global error (in logarithmic scale) against the CPU time, resulting in an efficiency plot.

Problem 1: (taken from [1] and [2]) The 1D diffusion of a scalar function \(y = y(x, t)\), with a time dependent source term

The solution \(y = y(x, t)\) is assumed to be \(2\pi \)-periodic in x. For the spatial discretization, we used fourth-order centered difference schemes with a grid resolution of \(N = 512\), assuming periodic boundary conditions. The real part of the eigenvalues of the Jacobian matrix of the semi-discrete problem ranges from \(-3.5 \times 10^4\) to \(2.79 \times 10^{-5}\).

The efficiency plot of the methods for this problem is depicted in Fig. 2.

Since for this problem the operator \(I-\varDelta t\;\alpha W\) is constant, just one LU factorization is needed and the number of triangular linear systems solved is relevant for the total computational cost. The method MSRKTASE3b is as efficient as SDIRK3. MSRKTASE3b has higher computational cost per step (9 linear systems) than SDIRK3 (4 linear systems, since the problem is linear), but MSRKTASE3b has an error coefficient about ten times smaller than SDIRK3, resulting globally in similar efficiency. MSRKTASE3a is less efficient due to is larger error coefficient, and is clearly more efficient than SRKTASE3. On the other hand, ROW3b is the most efficient. This is due to its computational cost. ROW3b has an error coefficient larger than the ones of SDIRK3 and MSRKTASE3b but it requires only the solution of 3 linear systems per step.

Problem 2: The 1D Burgers’ equation written in the conservative form

The solution \(y=y(x,t)\) is assumed to be \(2\pi \)-periodic in x. For the spatial discretization, a fourth-order centered difference scheme with grid resolution of \(N=512\) has been taken.

The real part of the eigenvalues of the Jacobian matrix of the semi-discrete problem ranges from \(-3.5\times 10^{3}\) to \(2.6\times 10^{-6}\).

We integrated Burger’s problem using the four methods with three different options for the matrix W. Firstly, the Jacobian matrix is evaluated at every time step and \(W= \partial f(t_n, y_n)/\partial y \). With this option, the LU matrix factorization had to be computed at every step, increasing the computational cost. Secondly, the Jacobian matrix was evaluated only at the initial time step and \(W= \partial f(t_0, y_0)/\partial y \), requiring only one LU factorization and considerably reducing the CPU time. However, this could affect the accuracy in the case of TASE or W-methods, or the number of iterations required to solve the non linear system in the case of the SDIRK method. Finally, W was taken as the linear part of the semi-discrete differential equation (the matrix of the diffusion term). The computational cost is similar to the second option, but the accuracy could be lower. This option provided insight into the methods behaviour when W poorly approximates the Jacobian matrix.

Figures 3 and 4 show the efficiency plots for Burger’s problem integrated with these three options. As shown in the Figures, evaluating the Jacobian matrix only at the initial step reduces the CPU time but increases the global error for the TASE-based and W-methods, where the local error depends on W and is smaller when it coincides with the Jacobian matrix. For the SDIRK3 method, the error does no vary significantly with different W, but solving the nonlinear system requires more iterations when the Jacobian matrix is less accurately approximated, thereby increasing the computational cost.

MSRKTASE3a exhibited stability issues with the two largest time steps when W is not the Jacobian matrix at every step, likely due to a high sensitivity of the stability function to parameter changes. MSRKTASE3b also showed stability problems with the largest time step size and the poorest approximation of the Jacobian matrix. Further research is being carried out in this regard.

Concerning the efficiency of the methods, when the Jacobian matrix is evaluated at every step, SDIRK3 is the most efficient, followed by ROW3b, MSRKTASE3b and then by MSRKTASE3a. SRKTASE3 is the least efficient due to its larger error coefficients. When the Jacobian matrix is evaluated only at the initial point, ROW3b is the most efficient, MSRKTASE3a and MSRKTASE3b are more efficient than the other two while SRKTASE3 remains the least efficient. Finally, when the matrix W is the matrix of the diffusion term, ROW3b is the most efficient for large time steps, MSRKTASE3a is more efficient for small time steps, where it has no stability problems, due to the relevance of the error coefficient C4. MSRKTASE3b remains more efficient than SDIRK3 and SRKTASE3 is also more efficient than SDIRK3 except for the smallest time step. The solution of the nonlinear equations makes SDIRK3 less efficient.

6 Conclusions

We presented a modification of the Singly-RKTASE methods for the numerical solution of stiff differential equations by taking different TASE operators for each stage of the RK method. We proved that these methods are equivalent to W-methods which enabled us to derive the order conditions when the TASE operators have order smaller than the order p of the Runge–Kutta scheme. For the case where \(p=3\) we obtained Modified Singly-RKTASE methods that significantly reduce the error coefficients compared to Singly-RKTASE methods, while maintaining stability.

Numerical experiments on both linear and nonlinear stiff systems demonstrate that the modified Singly-RKTASE methods provide significant improvements in accuracy and computational efficiency over the original Singly-RKTASE schemes, making them competitive with other methods such as Diagonally Implicit RK methods.

Data Availibility

No data has been used in the research of this paper.

References

Bassenne, M., Fu, L., Mani, A.: Time-accurate and highly-stable explicit operators for stiff differential equations. J. Comput. Phys. 424, 109847 (2021)

Calvo, M., Montijano, J.I., Rández, L.: A note on the stability of time-accurate and highly-stable explicit operators for stiff differential equations. J. Comput. Phys. 436, 110316 (2021)

Aceto, L., Conte, D., Pagano, G.: On a generalization of time-accurate and highly-stable explicit operators for stiff problems. Appl. Numer. Math. 200, 2–17 (2024)

Calvo, M., Fu, L., Montijano, J.I., Rández, L.: Singly TASE operators for the numerical solution of stiff differential equations by explicit Runge-Kutta schemes. J. Sci. Comput. 96, 17 (2023)

Steihaug, T., Wolfbrandt, A.: An attempt to avoid exact Jacobian and nonlinear equations in the numerical solution of stiff differential equations. Math. Comput. 33, 521–534 (1979)

Conte, D., Gonzalez-Pinto, S., Hernandez-Abreu, D., Pagano, G.: On approximate matrix factorization and TASE \(W\)-methods for the time integration of parabolic partial differential equations. J. Sci. Comput. 100, 1–28 (2024)

Wanner, G., Hairer, E.: Solving Ordinary Differential Equations II. Springer, Berlin (1996)

Rosenbrock, H.H.: Some general implicit processes for the numerical solution of differential equations. Comput. J. 5, 329–330 (1963)

Kennedy, C.A., Carpenter, M.H.: Diagonally implicit Runge-Kutta methods for ordinary differential equations: a review. NASA/TM-2016-219173 NASA Langley Research Center, 1–162 (2016)

Kennedy, C.A., Carpenter, M.H.: Diagonally implicit Runge-Kutta methods for stiff ODEs. Appl. Numerical Math. 146, 221–244 (2019)

González-Pinto, S., Hernández-Abreu, D., Pérez-Rodríguez, S., Weiner, R.: A family of three-stage third order AMF-Wmethods for the time integration of advection diffusion reaction PDEs. Appl. Math. Comput. 274, 56–584 (2016)

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This work was supported by project PID2022-141385NB-I00 of Ministerio de Ciencia e Innovación of Spain.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 Coefficients of MSRKTASE2

1.2 Coefficients of MSRKTASE3a

1.3 Coefficients of MSRKTASE3b

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Calvo, M., Montijano, J.I. & Rández, L. Modified Singly-Runge–Kutta-TASE Methods for the Numerical Solution of Stiff Differential Equations. J Sci Comput 103, 3 (2025). https://doi.org/10.1007/s10915-025-02813-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-025-02813-4