Abstract

In this paper we consider proper orthogonal decomposition (POD) methods that do not include difference quotients (DQs) of snapshots in the data set. The inclusion of DQs has been shown in the literature to be a key element in obtaining error bounds that do not degrade with the number of snapshots. More recently, the inclusion of DQs has made it possible to obtain pointwise (as opposed to averaged) error bounds that decay with the same convergence rate (in terms of the POD singular values) as averaged ones. In the present paper, for POD methods not including DQs in their data set, we obtain error bounds that do not degrade with the number of snapshots if the function from which the snapshots are taken has a certain degree of smoothness. Moreover, the rate of convergence is as close to that of methods including DQs as the smoothness of the function providing the snapshots allows. We do this by obtaining discrete counterparts of Agmon and interpolation inequalities in Sobolev spaces. Numerical experiments validating these estimates are also presented.


1 Introduction

There seems to be an open debate on whether it is necessary or advisable to include the difference quotients (DQs) of the snapshots (or function values) in the data set in proper orthogonal decomposition (POD) methods. POD methods allow for a remarkable dimension reduction in large-scale dynamical systems by projecting the equations onto smaller spaces spanned by the first elements of the POD basis. This basis is extracted from the data set, originally the set of snapshots, although, as mentioned, some authors include their DQs. Inclusion of these has been essential to obtain optimal error bounds [8, 12, 15, 19] for POD methods. More recently, pointwise (in time) error bounds have been proved when DQs are added to the set of snapshots [12], or when the DQs are complemented with just one snapshot [4]. In fact, counterexamples are presented in [12] showing that if DQs are not included, pointwise projection errors degrade with the number of snapshots. However, this degradation is hard to find in many practical instances, and, at the same time, while some authors report improvement in their numerical simulations if DQs are included in the data set [8], others find just the opposite [10, 11].

The present paper does not claim to settle the argument, but hopes to shed some light on why, in agreement with the numerical experience of some authors, it may be possible to obtain good results in practice without including DQs. In particular, we show that if a function has first derivatives (with respect to time) square-integrable in time, POD projection errors do not degrade with the number of snapshots. Furthermore, if second or higher-order derivatives are square-integrable in time, POD methods are convergent and, the higher the order of the derivatives that are square-integrable, the closer to optimal the rate of convergence becomes. We do this by obtaining discrete versions of Agmon and interpolation inequalities in Sobolev spaces, which allow one to bound the \(L^\infty \) norm of a function in terms of the \(L^2\) norm and higher-order Sobolev norms.

We remark that our results do not contradict previous results in the literature. On the one hand, the error bounds we prove are as close to optimal as the smoothness of the solution allows, but not optimal. On the other hand, concerning the counterexamples in [12], we notice that they require the snapshots to be multiples of the POD basis and, hence, an orthogonal set. This property is lost if a function is smooth and the snapshots are function values taken at increasingly closer times as the number of snapshots increases. Indeed, the distance between two orthogonal vectors is not smaller than their norms (for orthogonal a and b one has \(\Vert a-b\Vert ^2=\Vert a\Vert ^2+\Vert b\Vert ^2\)), whereas, for a continuous function, the distance between two consecutive function values on finer and finer partitions tends to zero. In conclusion, the snapshots of the counterexamples in [12] cannot be function values of the same function at denser and denser partitions of the same time interval.

The reference [15] is the first one showing convergence results for POD methods for parabolic problems. Starting with this paper, one can find several references in the literature developing the error analysis of POD methods. We mention some references that are not intended to be a complete list. In [16] the authors of [15] provide error estimates for POD methods for nonlinear parabolic systems arising in fluid dynamics. The authors of [3] show that, when the POD approximation is compared with the \(L^2\) projection onto the reduced order space instead of the \(H_0^1(\Omega )\)-projection (as in [15]), DQs are not required in the set of snapshots. However, in that case, the norm of the gradient of the \(L^2\)-projection has to be bounded. This leads to non-optimal estimates for the truncation errors, see [12]. POD approximation errors in different norms and using different projections can also be found in [19].

The rest of the paper is organized as follows. In Sect. 2 we present the main results of this paper and their application to obtain pointwise error bounds in POD methods based only on snapshots. Section 3 presents some numerical experiments. In Sect. 4, the auxiliary results needed to prove the main results in Sect. 2 are stated and proved, and the final section contains the conclusions.

2 Main Results

Let \(\Omega \) be a bounded region in \({\mathbb {R}}^d\) with a smooth boundary or a Lipschitz polygonal boundary. We use standard notation, so that \(H^s(\Omega )\) denotes the standard Sobolev space of index s. We also consider the space \(H^1_0(\Omega )\) of functions vanishing on the boundary and with square-integrable first derivatives. Let \(T>0\) and let \(u: \Omega \times [0,T] \rightarrow {\mathbb {R}}\) be a function which, as a function of its second argument (denoted by t henceforth), satisfies \(u\in H^m(0,T,X)\), where X denotes either \(L^2(\Omega )\) or \(H^1_0(\Omega )\), and

and \(\left\| \cdot \right\| _X\) denotes the norm in X, that is \(\left\| f\right\| _X=(f,f)^{1/2}\) if \(X=L^2(\Omega )\) or \(\left\| f\right\| _X=(\nabla f,\nabla f)^{1/2}\) if \(X=H^1_0(\Omega )\). Typically, in many practical instances, u is a finite element approximation to the solution of some time-dependent partial differential equation. The factors \(1/T^{2(m-j)}\) in the definition of \(\left\| \cdot \right\| _{H^m(0,T,X)}\) are introduced so that the constants in the estimates that follow are scale-invariant (see e.g., [2, Chapter 4]). In what follows, if a constant is denoted with a lower case letter, this means that it is scale-invariant.

Given a positive integer \(M>0\) and the corresponding time-step \(\Delta t=T/M\), we consider the time levels \(t_n=n\Delta t\), \(n=0,\ldots ,M\), and the function values or snapshots of u

We denote by \(\sigma _1\ge \ldots \ge \sigma _{J}\) the singular values and by \(\varphi ^1,\ldots ,\varphi ^{J}\) the left singular vectors of the operator from \({\mathbb {R}}^{M+1}\) (endowed with the standard Euclidean norm) which maps every vector \(x=[x_1,\ldots ,x_{M+1}]^T\) to

operator which, being of finite rank, is compact. The squared singular values \(\lambda _k=\sigma _k^2\), \(k=1,\ldots ,J\), are the positive eigenvalues of the correlation matrix \(M=\frac{1}{M+1}((u^i,u^j)_X)_{0\le i,j\le M}\). The left singular vectors, known as the POD basis, are mutually orthogonal and with unit norm in X. For \(1\le r\le d\) let us denote by

and by \(P^r_X:X\rightarrow V_r\) the orthogonal projection of X onto \(V_r\). It is well-known that

In the present paper, the rate of decay given by the square root of the right-hand side above will be referred to as optimal (see [8]).
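As a minimal numerical sketch of the averaged quantity discussed here, the snippet below computes a POD basis from a snapshot matrix and compares the average squared projection error with the tail of the squared singular values. It assumes the Euclidean inner product as a stand-in for \((\cdot ,\cdot )_X\) and the \(1/(M+1)\) normalization of the correlation matrix given above; the function name `pod_tail_identity` and the test data are illustrative only and are not taken from the paper.

```python
import numpy as np

def pod_tail_identity(S, r):
    """Return (average squared projection error, tail sum of sigma_k^2).

    S is an N x (M+1) array whose columns are the snapshots; the Euclidean
    inner product is used here as a stand-in for the inner product of X.
    """
    n_snap = S.shape[1]
    # Singular values of S / sqrt(M+1): their squares are the eigenvalues
    # of the correlation matrix (1/(M+1)) * S^T S.
    U, sigma, _ = np.linalg.svd(S / np.sqrt(n_snap), full_matrices=False)
    V = U[:, :r]                          # first r POD modes
    residual = S - V @ (V.T @ S)          # u^n - P^r u^n, column by column
    avg_sq_err = np.sum(residual**2) / n_snap
    tail = np.sum(sigma[r:]**2)
    return avg_sq_err, tail

# Illustrative data (an assumption): snapshots of a smooth function of (x, t).
x = np.linspace(0.0, 1.0, 200)
t = np.linspace(0.0, 2.0 * np.pi, 65)     # M + 1 = 65 time levels
S = sum(np.exp(-k) * np.outer(np.sin(k * np.pi * x), np.cos(k * t))
        for k in range(1, 9))
avg_sq_err, tail = pod_tail_identity(S, r=3)
print(avg_sq_err, tail)                   # the two numbers agree up to rounding
```

In this sketch the agreement of the two printed numbers reflects the Eckart-Young property of the truncated singular value decomposition; it says nothing yet about the individual (pointwise in time) errors.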

In many instances of interest in practice, at least when u is a finite element approximation to the solution of a dissipative partial differential equation (PDE), the singular values decay exponentially fast, so that for very small values of r (compared with the dimension of the finite element space) the right-hand side of (3) is smaller than the error of u with respect to the solution of the PDE it approximates.
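The following sketch illustrates how, under such a fast decay, a small r can be selected: it returns the smallest r for which the square root of the tail \(\sum _{k>r}\sigma _k^2\) falls below a given tolerance. The helper `smallest_r`, the geometric sequence of singular values and the tolerance are hypothetical choices made for illustration.

```python
import math

def smallest_r(sigma, tol):
    """Smallest r with sqrt(sum_{k>r} sigma_k^2) < tol.

    sigma is a list of singular values sorted in decreasing order.
    """
    # tails[r] = sum of sigma_k^2 over the modes discarded when r are kept.
    tails = [0.0] * (len(sigma) + 1)
    for k in range(len(sigma) - 1, -1, -1):
        tails[k] = tails[k + 1] + sigma[k] ** 2
    for r, tail_sq in enumerate(tails):
        if math.sqrt(tail_sq) < tol:
            return r
    return len(sigma)

# Hypothetical exponentially decaying singular values 1, 1/2, 1/4, ...
sigma = [2.0 ** (-k) for k in range(20)]
r = smallest_r(sigma, tol=1e-3)
print(r)   # 11 modes suffice for this tolerance
```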

POD methods are Galerkin methods where the approximating spaces are the spaces \(V_r\). In POD methods, one obtains values \(u_r^n\in V_r\) intended to be approximations \(u_r^n\approx u^n\), \(n=0,\ldots , M\), and (3) is key in obtaining estimates for the errors \(u_r^n-u^n\). Yet, the fact that the left-hand side of (3) is an average allows only for averaged estimates of the errors \(u_r^n-u^n\), and one would be interested in pointwise estimates. In order to obtain them, some authors assume (without a result to support it) that the individual errors \(\bigl \Vert u^n-P_X^r u^n\bigr \Vert _X\) decay with r as the square root of the right-hand side of (3) (see e.g., [9, (2.9)]).

Yet, in principle, the only rigorous pointwise estimate that one can deduce from (3) is

This estimate is sharp, as shown in examples provided in [12], where, for at least one value of n, equality is reached. Estimate (4) degrades with the number \(M+1\) of snapshots. One would then expect in practice that, if M is increased while maintaining the value of r, the individual errors \( \bigl \Vert u^n - P_X^r u^n\bigr \Vert _X\) should increase accordingly. Yet this is not necessarily the case, as the results in Table 1 show, which correspond to the snapshots of the quadratic FE approximation to the periodic solution of the Brusselator problem described in [7] (see also Sect. 3 below) in the case where \(X=L^2(\Omega )\), \(r=25\) and T is one period.
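The contrast between the worst case and smooth data can be explored with a small experiment. The sketch below is only in the spirit of the examples of [12] (it does not reproduce them): it uses mutually orthogonal snapshots, for which the largest individual error equals \(\sqrt{M+1}\) times the square root of the tail of squared singular values, and compares this with snapshots of a smooth function. The Euclidean inner product, the helper name `max_error_over_tail` and both data sets are assumptions made for illustration.

```python
import numpy as np

def max_error_over_tail(S, r):
    """Ratio max_n ||u^n - P^r u^n|| / (sum_{k>r} sigma_k^2)^(1/2)."""
    n_snap = S.shape[1]
    U, sigma, _ = np.linalg.svd(S / np.sqrt(n_snap), full_matrices=False)
    V = U[:, :r]
    errors = np.linalg.norm(S - V @ (V.T @ S), axis=0)   # one error per snapshot
    return errors.max() / np.sqrt(np.sum(sigma[r:]**2))

M = 15
# Mutually orthogonal snapshots with norms 16, 15, ..., 1.
S_orth = np.diag(np.arange(M + 1, 0, -1, dtype=float))
ratio_orth = max_error_over_tail(S_orth, r=M)

# Snapshots of a smooth function on a time grid of the same size.
x = np.linspace(0.0, 1.0, 200)
t = np.linspace(0.0, 2.0 * np.pi, M + 1)
S_smooth = sum(np.exp(-k) * np.outer(np.sin(k * np.pi * x), np.cos(k * t))
               for k in range(1, 9))
ratio_smooth = max_error_over_tail(S_smooth, r=3)

print(ratio_orth, np.sqrt(M + 1), ratio_smooth)
```

For the orthogonal data the ratio coincides with \(\sqrt{M+1}\); for the smooth data it can only be smaller or equal, and rerunning the sketch with larger M gives a quick impression of how it behaves as the time grid is refined.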

The results in Table 1 are in agreement with the following result.

Theorem 2.1

Let u be bounded in \(H^1(0,T,X)\). Then, for the constant \(c_A\) defined in (40) below, the following bound holds:

If \(u_0+\cdots +u_M=0\), then the second term on the right-hand side above can be omitted.

Proof

The result is a direct consequence of the case \(m=1\) in estimate (73) in Theorem 4.12 below when applied to \(f=u-P_X^r u\) and the identity (3) above, as we now explain. The norm \(\left\| \cdot \right\| _0\) in (73) is defined in (38–39). With this norm and applying (3), we have

and the proof is finished by noticing that \((M+1)/M\le 2\), for \(M\ge 1\). \(\square \)

Observe that in the pointwise estimate in Theorem 2.1 no factor depending on M appears on the right-hand side, except, perhaps, the singular values, but if \(u\in H^1(0,T,X)\) then, \(\sigma _k\rightarrow \sigma _{c,k}\) as \(M\rightarrow \infty \), where \(\sigma _{c,1}\ge \sigma _{c,2} \ge \ldots \,\), are the singular values of the operator \(K_c:L^2(0,T)\rightarrow X\) given by [16, Section 3.2]

Observe also that, at worst,

Thus, Theorem 2.1 guarantees that pointwise projection errors do not degrade as the number of snapshots increases. Furthermore, they decay with r, although like \(\left( \Sigma _{k>r} \sigma _k^2\right) ^{1/4}\), which is the square root of the optimal rate. Yet, the following result shows that pointwise projection errors decay with a rate as close to optimal as the smoothness of u allows. In the sequel we define

Theorem 2.2

Let u be bounded in \(H^m(0,T,X)\). Then, for the constants \(c_A\) defined in (48) and \(c_m\) in Lemma 4.10 below, the following bound holds:

If \(u_0+\cdots +u_M=0\), then the second term on the right-hand side above can be omitted.

Proof

Similarly to Theorem 2.1, the result is a direct consequence of estimate (73) in Theorem 4.12 below applied to \(f=u-P_X^r u\), the identity (3) above and (5). \(\square \)

The above results account for projection errors, but in the analysis of POD methods the error between the POD approximation and the snapshots, \(u_r^n - u^n\) depends also on the difference quotients

for which we have the following result.

Theorem 2.3

In the conditions of Theorem 2.1 the following bound holds:

Proof

The proof is a direct consequence of estimate (72) in Theorem 4.12 below applied to \(f=u-P_X^r u\), the identity (3) and (5). \(\square \)

Remark 2.4

An important case in practice is that of periodic orbits, since they are key elements in bifurcation diagrams of many dynamical systems associated to PDEs. In this case, the snapshot \(u_0\) can be omitted in (2) and (3), and \(M+1\) can be replaced by M in previous formulae (see also Sect. 4.1) and, consequently, the ratio \(r_M\) in (6) can be replaced by 1. Also, in the estimates in Theorems 2.1 and 2.2 one can replace the constant \(c_m\) by 1, which gives much more favourable estimates (see the values of the constants \(c_m\) in Table 7), and \(\bigl \Vert (I-P_X^r)\partial _t u\bigr \Vert _{H^{m-1}(0,T,X)}\) can be replaced by \(\bigl \Vert (I-P_X^r)\partial _t^m u\bigr \Vert _{L^2(0,T,X)}\), that is

As in Theorem 2.1, the second term on the right-hand side of (8) can be omitted if the snapshots have zero mean. The proof of these estimates follows by applying Theorem 4.11 to \((I-P_X^r) u\).

Some reduction in the size of the constants \(c_m\) can also be obtained when computing quasi-periodic orbits on invariant tori if one uses the techniques described in [17] to compute them, since although \(u(T)\ne u(0)\), one still has \(\left\| u(T)-u(0)\right\| \ll 1\), and one can modify the technique so that this is also the case with first derivatives or higher-order ones.

Remark 2.5

In the analysis of POD methods, as will be the case in the next section, one usually has to estimate \((I-P_X^r)\) in a norm \(\Vert \cdot \Vert \) other than that of X. If \(\left\| \cdot \right\| \) is the norm of a Hilbert space W containing the snapshots \(u^0,\ldots , u^M\), then one can use [12, Lemma 2.2] to get

Applying this estimate instead of the identity (3) in the proofs of Theorems 2.2 and 2.3 one gets the following estimates

As in Remark 2.4, when u is periodic in t with period T, then, \(c_m\) can be replaced by 1, \(\bigl \Vert (I-P_X^r)\partial _t u\bigr \Vert _{H^{m-1}(0,T,W)}\) by \(\bigl \Vert (I-P_X^r)\partial _t^m u\Vert _{L^2(0,T,W)}\) and \(r_M\) can also be replaced by 1.

2.1 Application to a POD Method

We now apply the previous results to obtain pointwise error bounds for a POD method applied to the heat equation where no DQs were included in the data set to obtain the POD basis \(\{\varphi _1,\ldots ,\varphi _J\}\). To discretize the time variable we consider two methods, the implicit Euler method and the second-order backward differentiation formula (BDF2). An analysis similar to part of the computations in the present section has been done before (see e.g., [6, Lemma 3]), but it is carried out here because better error constants are obtained.
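To fix ideas, the following is a rough sketch of a POD method of the type considered in this section, under several simplifying assumptions that are not taken from the paper: one space dimension, a finite-difference Laplacian standing in for the FE stiffness matrix (with identity mass matrix), zero forcing term, a hypothetical initial condition \(x(1-x)\), and the implicit Euler method only. The POD basis is computed from snapshots alone (no DQs) with respect to the discrete \(H^1_0\) inner product, so that the projection onto the POD space plays the role of the Ritz projection.

```python
import numpy as np

def pod_euler_heat(N=60, M=64, r=4, nu=0.1, T=1.0):
    """Max-in-time discrete L2 error of a POD / implicit Euler sketch (f = 0)."""
    h = 1.0 / (N + 1)
    x = np.linspace(h, 1.0 - h, N)
    # Finite-difference Laplacian with homogeneous Dirichlet conditions,
    # used as a stand-in for the FE stiffness matrix.
    A = (2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)) / h**2
    # "Semi-discrete" solution of u' = -nu * A u via the eigen-decomposition of A.
    lam, Q = np.linalg.eigh(A)
    c0 = Q.T @ (x * (1.0 - x))
    dt = T / M
    t = dt * np.arange(M + 1)
    S = Q @ (np.exp(-nu * np.outer(lam, t)) * c0[:, None])   # snapshots u^0..u^M
    # POD basis w.r.t. the A-inner product (discrete H^1_0), snapshots only.
    L = np.linalg.cholesky(A)                                # A = L L^T
    U, _, _ = np.linalg.svd(L.T @ S / np.sqrt(M + 1), full_matrices=False)
    Phi = np.linalg.solve(L.T, U[:, :r])                     # Phi^T A Phi = I
    # Reduced implicit Euler: (Mr + dt*nu*I) a^n = Mr a^{n-1},
    # started from the Ritz projection of u^0.
    Mr = Phi.T @ Phi
    a = Phi.T @ (A @ S[:, 0])
    lhs = Mr + dt * nu * np.eye(r)
    max_err = 0.0
    for n in range(1, M + 1):
        a = np.linalg.solve(lhs, Mr @ a)
        max_err = max(max_err, np.sqrt(h) * np.linalg.norm(Phi @ a - S[:, n]))
    return max_err, Phi, A

max_err, Phi, A = pod_euler_heat()
print(max_err)
```

The printed number is the maximum over the time levels of the discrete \(L^2\) error between the reduced solution and the snapshots, which is the kind of pointwise-in-time quantity bounded in this section; varying `M` and `r` in the sketch gives a feeling for the separate contributions of the time discretization and of the POD truncation.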

Let us assume that u is a semi-discrete FE approximation to the solution of the heat equation with a forcing term f so that \(u(t)\in V\) for some FE space V of piecewise polynomials over a triangulation and satisfies

where \(\nu >0\) is the thermal diffusivity and, in this section, \((\cdot ,\cdot )\) denotes the standard inner product of \(L^2(\Omega )\). We consider the POD method

with

where \(R_r\) denotes the Ritz projection,

Instead of using the first-order convergent Euler method considered above we may use the BDF2, which gives the following method

where

and, for simplicity,

We now prove the following result.

Theorem 2.6

Let X be \(H^1_0\) and let \(p=1\) in the case of method (14–15) and \(p=2\) otherwise. Assume that \(u\in H^{p+1}(0,T,L^2)\cap H^{m}(0,T,H^1_0)\) for some \(m\ge 2\). Then, the following bound holds for \(0\le n\le M\):

Proof

Since the POD basis \(\{\varphi _1,\ldots ,\varphi _J\}\) has been computed with respect to the inner product in \(H^1_0\), we have \(P_X^r=R_r\). We first analyze method (14–15). We notice that

where

Subtracting (20) from (14), for the error

we have the following relation,

Taking \(\varphi =\Delta t e_r^n\) and using that \((e_r^n - e_r^{n-1},e_r^n) = (\bigl \Vert e_r^n \bigr \Vert ^2 - \bigl \Vert e_r^{n-1} \bigr \Vert ^2 + \bigl \Vert e_r^n-e_r^{n-1} \bigr \Vert ^2)/2\), one obtains

If \(\bigl \Vert e_r^n\bigr \Vert > \bigl \Vert e_r^{n-1}\bigr \Vert \), then \(-1< -\bigl \Vert e_r^{n-1}\bigr \Vert /\bigl \Vert e_r^n\bigr \Vert \). Hence, dividing both sides of (22) by \(\bigl \Vert e_r^n\bigr \Vert \) we get

If, on the contrary, \(\bigl \Vert e_r^n\bigr \Vert \le \bigl \Vert e_r^{n-1}\bigr \Vert \), for the right-hand side in (22) we write \(\bigl \Vert \tau ^n\bigr \Vert \bigl \Vert e_r^n\bigr \Vert \le \bigl \Vert \tau ^n\bigr \Vert ( \bigl \Vert e_r^n\bigr \Vert + \bigl \Vert e_r^{n-1}\bigr \Vert )/2\) so that dividing both sides of (22) by \(\bigl \Vert e_r^n\bigr \Vert + \bigl \Vert e_r^{n-1}\bigr \Vert \), we also obtain (23). Summing from \(n=1\) to \(n=m\) and using (15) we obtain

where, in the last step we have applied Hölder’s inequality.

With respect to \(\tau ^n\), by adding \(\pm Du^n\) we have

If \(u\in H^2(0,T,L^2)\), Taylor expansion with integral remainder allows us to write

Thus, from (24) we get

Using the Poincaré inequality

and applying Theorem 2.3 we have

Finally, to estimate the errors \(u^n-u_r^n\) we write \(u_r^n - u^n = e_r^n + P_X^r u^n-u^n\), and apply (25) and Theorem 2.2 to conclude (19).

The analysis of method (16–18) is very similar to that of (14), and we comment on the differences next. As is well known (and a simple calculation shows), \((\mathcal{D}e_r^n,e_r^n)=\mathcal{E}_n^2 - \mathcal{E}_{n-1}^2\), where

It is easy to check that \(\left\| e_r^n \right\| \le \mathcal{E}_n\), so that one can repeat (with obvious changes) the analysis above with the implicit Euler method with \(\left\| e_r^n\right\| \) replaced by \(\mathcal{E}_n\) to reach

instead of (24), where we have used that \(\mathcal{E}_1=0\) due to (18), and where

For the last term above we have (see e.g., [6, Lemma 4])

Consequently, from (26) and noticing that \(\bigl \Vert \mathcal{D}e_r^n\bigr \Vert \le (3/2)\bigl \Vert De_r^n\bigr \Vert + (1/2)\bigl \Vert De_r^{n-1}\bigr \Vert \), we have

so that applying (25) and Theorem 2.3 it follows that

and, for the error \(u_r^n - u^n = e_r^n - (I-P_X^r) u^n\), applying Theorem 2.2 we get (19). \(\square \)

Remark 2.7

In view of Remark 2.5, in the above estimates we may replace the Poincaré constant \(C_P\) by 1, \(\sigma _k^2\) by \(\sigma _k^2\bigl \Vert \varphi _k\bigr \Vert ^2\) and the norm \(\left\| \cdot \right\| _{H^{m-1}(0,T,H^1_0)}\) by \(\left\| \cdot \right\| _{H^{m-1}(0,T,L^2)}\). Also in view of Remark 2.4, when u is periodic in t with period T, the constant \(c_m\) can be replaced by 1 and \(\bigl \Vert (I-P_X^r)\partial _t u\bigr \Vert _{H^{m-1}(0,T,H^1_0)}\) by \(\bigl \Vert (I-P_X^r)\partial _t^m u\bigr \Vert _{L^2(0,T,H^1_0)}\) (or by \(\bigl \Vert (I-P_X^r)\partial _t^m u\bigr \Vert _{L^2(0,T,L^2)}\) if we use the estimates in Remark 2.5).

Remark 2.8

If, for the method (16), \(u_r^1\) is computed from \(u_r^0\) with one step of the implicit Euler method, instead of setting \(u_r^1=P_X^r u^1\), then one can use a general stability result for the BDF2 like [6, Lemma 3] and then use standard estimates to bound the term \(Du^1 - \partial _t u(\cdot , t_1)\) in \(\tau ^1\) as \( \bigl \Vert Du^1- \partial _t u(\cdot ,t_1)\bigr \Vert \le {\Delta t} \bigl \Vert \partial ^2_t u\bigr \Vert _{L^\infty (0,t_1,L^2)}. \) Thus, one gets estimates similar to (19) but with different constants multiplying the different terms and \(\bigl \Vert \partial _t^3u\bigr \Vert _{L^2(0,T,L^2)}\) replaced by \(\bigl \Vert \partial _t^3u\bigr \Vert _{L^2(0,T,L^2)} + \bigl \Vert \partial ^2_t u\bigr \Vert _{L^\infty (0,t_1,L^2)}\).

Remark 2.9

If \(X=L^2\), that is, if the POD basis is computed with respect to the \(L^2\) inner product, then, instead of \((\tau ^n,\varphi )\) in (20), one has \((\tau ^n,\varphi ) + \nu (\nabla (P_X^r -I)u^n,\nabla \varphi )\) and, thus, arguing as above, one has to estimate

which can be bounded in terms of the stiffness matrix of the POD basis or, according to [12, Lemma 2.2] can be bounded in terms of

Although in many practical problems one can check that the sum above is only a moderate factor larger than \(\sum _{k>r} \sigma _k^2\), at present, the only rigorous bound available is proportional to \(C_Ph^{-2}\sum _{k>r} \sigma _k^2\), where h is the mesh diameter of the FE mesh. The same can be said of the stiffness matrix of the POD basis. Consequently, at present, it is not possible to prove optimal bounds if the POD basis is computed with respect to the \(L^2\) inner product.

3 Numerical Experiments

3.1 Test 1: The Brusselator with Diffusion

We now check some of the estimates of previous sections. For the snapshots, we consider the semi-discrete piecewise quadratic FE approximation over a uniform \(80\times 80\) triangulation (with diagonals running southwest-northeast) of the unit square of the stable periodic orbit of the following system, which, in the absence of diffusion, is known as the Brusselator (see e.g., [14, p. 115]):

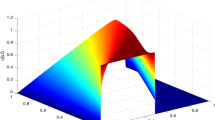

where \(\nu =0.002\), \(\Omega =[0,1]\times [0,1]\), \(\Gamma _1\subset \partial \Omega \) is the union of sides \(\{x=1\}\bigcup \{ y=1\}\), and \(\Gamma _2\) is the rest of \(\partial \Omega \) (see [7] for details). This system was chosen for numerical tests in [7] because it has a stable limit cycle, and, hence, nontrivial asymptotic dynamics, as opposed to many reaction-diffusion systems where solutions tend to a steady state as time tends to infinity. The period of this limit cycle is \(T=7.090636\) (up to 7 significant digits). This system is particularly challenging because of the size of its time derivatives, which, as can be seen in Fig. 1, vary considerably over one period.

\(L^2\) norms of the time derivatives of the finite element approximation \({\varvec{u}}_h\) to the periodic orbit solution of (28)

In what follows, \(M=256\). We took values \({\varvec{u}}(\cdot , t_n)=[u(\cdot ,t_n),v(\cdot , t_n)]^T\), \(n=0,\ldots ,M\), and, in order to have snapshots taking value zero on \(\Gamma _1\), we subtracted their mean so that \({\varvec{u}}^n = {\varvec{u}}(\cdot ,t_n) - m_0\), where \(m_0=({\varvec{u}}(\cdot ,t_1)+\cdots +{\varvec{u}}(\cdot ,t_M))/M\) (notice that, \({\varvec{u}}\) being periodic with period T, there is no need to include \({\varvec{u}}(\cdot ,0)\) in the data set to obtain the POD basis, since \({\varvec{u}}(\cdot ,0)={\varvec{u}}(\cdot ,T)\)).
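A minimal sketch of this preprocessing step is given below. It assumes that the function values are stored as the columns of an array, with the first column corresponding to \(t_0\); the helper name `centered_periodic_snapshots` and the synthetic periodic data are illustrative and unrelated to the Brusselator computation.

```python
import numpy as np

def centered_periodic_snapshots(values):
    """Subtract the mean over t_1..t_M and drop the redundant column t_0.

    values: N x (M+1) array with columns u(., t_0), ..., u(., t_M),
    where u(., t_0) = u(., t_M) by periodicity.
    """
    m0 = values[:, 1:].mean(axis=1, keepdims=True)   # mean over t_1, ..., t_M
    return values[:, 1:] - m0, m0

# Synthetic periodic data whose value at x = 0 does not depend on time.
M = 256
x = np.linspace(0.0, 1.0, 50)
t = np.linspace(0.0, 2.0 * np.pi, M + 1)
values = 1.0 + np.outer(np.sin(np.pi * x), np.cos(t)) + np.outer(x, np.sin(2 * t))
snapshots, m0 = centered_periodic_snapshots(values)
print(snapshots.shape)   # (50, 256): M snapshots, each with zero value at x = 0
```

In the synthetic data the value at \(x=0\) is constant in time, so subtracting the mean makes the snapshots vanish there, which mimics the effect sought on \(\Gamma _1\).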

We first check estimate (8) for \(X=L^2\) and two values of r, \(r=24\) and \(r=31\). The value of \(r=24\) was chosen because it was the first value of r for which \(\gamma _r\) in (30) was below \(5.35\times 10^{-5}\), which, as explained in [7], is the maximum error of the snapshots with respect to the true solution of (28) (recall that the snapshots are FE approximations, not the true solution). The value \(r=31\) was chosen for comparison. We have the following values for the left-hand side and tail of the singular values

and Table 2 shows the right-hand side of (8). We notice that there is an overestimation by a factor of 20.

We now check estimates (9) and (12) when \(W=L^2(\Omega )\) and \(\left\| \cdot \right\| \) is its norm, which have been used in (27) in the previous section to obtain estimate (19) and in Remark 2.7. We have the following values

Notice that \(X=H^1_0\) but we are measuring \( (I-P_X^r)D{\varvec{u}}^n\) in the \(L^2\) norm. Applying the Poincaré inequality (25) and (9), the quantity above can be estimated by

On the other hand according to Remark 2.7, and taking into account that we are in the periodic case, it can also be estimated by

Table 3 shows the corresponding values. Notice that \(C_P=\lambda ^{-1/2}\), where \(\lambda \) is the smallest eigenvalue of the \(-\Delta \) operator subject to homogeneous Dirichlet boundary condition on \(\Gamma _1\) and homogeneous Neumann boundary condition on \(\Gamma _2\), and, thus, \(C_P=\sqrt{2}/\pi \). By comparing the values in Table 3 with those in (31), we can see that while \(\rho _m\) is not a good estimate, \(\mu _m\) slightly overestimates the quantities in (31) by a factor that does not reach 3.1.
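The value of \(C_P\) quoted above can be checked by hand. The sketch below assumes, consistently with the description of \(\Gamma _1\) and \(\Gamma _2\) in this section, that the first eigenfunction on the unit square is \(\cos (\pi x/2)\cos (\pi y/2)\), which is positive in the interior, vanishes on \(\{x=1\}\cup \{y=1\}\) and has zero normal derivative on the remaining sides; the function name `phi` is illustrative.

```python
import math

def phi(x, y):
    """Candidate first eigenfunction: zero on x = 1 and y = 1,
    zero normal derivative on x = 0 and y = 0."""
    return math.cos(math.pi * x / 2) * math.cos(math.pi * y / 2)

# Each cosine factor contributes (pi/2)^2 to the eigenvalue of -Laplace.
lam = 2 * (math.pi / 2) ** 2          # lam = pi^2 / 2
C_P = lam ** -0.5
print(C_P, math.sqrt(2) / math.pi)    # both approximately 0.4502
```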

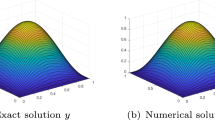

The fact that \(\mu _m\) is a much better estimate than \(\rho _m\) is explained in part by the size of the values \(\bigl \Vert \varphi _k\bigr \Vert \) which, as shown in Fig. 2, are small and well below the constant \(C_P\) (marked with a red dotted line in the plot).

\(L^2\) norms of the elements \(\varphi ^1,\ldots ,\varphi ^J\) of the POD basis for \(X=H^1_0\) and constant \(C_P\) in (25)

3.2 Test 2: Flow Around a Cylinder

We now consider the well-known benchmark problem defined in [18] associated to the Navier–Stokes equations,

where, as is well known, \({\varvec{u}}\) is the velocity field of a fluid moving in the domain \(\Omega \), p the kinematic pressure, \(\nu >0\) the kinematic viscosity coefficient, and \({\varvec{f}}\) represents the accelerations due to external body forces acting on the fluid. The domain is given by

On the inflow boundary, \(x=0\), the velocity is prescribed by

and on the outflow boundary \(x=2.2\) we set the so-called “do nothing” boundary condition. On the rest of the boundary the velocity is set to \({\varvec{u}}=\textbf{0}\). It is well known that for \({\varvec{f}}=\textbf{0}\) and \(\nu =0.001\) there is a stable periodic orbit.

We take the snapshots from the FE approximation to the velocity field \({\varvec{u}}\) computed in [5], which uses 27168 degrees of freedom for the velocity and 3480 for the pressure. It was checked in [5] that the relative errors in the velocity of this approximation are below 0.003 and 0.03 in the \(L^2\) and \(H^1\) norms respectively, and the period of the periodic orbit of this approximation is \(T=0.331761\). As in the previous section we set \(M=256\), and the mean was subtracted from the function values \({\varvec{u}}(\cdot , t_n)\) in order to have snapshots with zero value on the inflow boundary.

This is also a challenging problem because of the size of the derivatives, which are shown in Table 4.

As in the previous section, we first check estimate (8) for \(X=L^2\) and the values of r given by \(r=12\) and \(r=20\), which were chosen with the same criteria as in Sect. 3.1. The values of the left-hand side of (8) and the tail of the singular values are

whereas those of the right-hand side of (8) are given in Table 5, where we observe an overestimation by a factor that does not reach the value of 20. We also checked all values of r between \(r=11\) and \(r=70\), and found that the factor of the overestimation was between 5.6 and 27.4, and the average value was 16.33.

In view of the large overestimation of (9) in Sect. 3.1, we only check estimate (12) in the present section. For the left-hand side, we have the following values

and in Table 6 we have those of the right-hand side. We see that the values of \(\mu _m\) overestimate (37) by a factor that does not reach 23. Indeed, repeating these computations for r ranging from \(r=11\) to \(r=80\), we found that the values of \(\mu _m\) for \(m=2,3,4,5\) were at least three times larger than those of the left-hand side of (12), but the overestimation was always by a factor below 26, the average factor being 10.3.

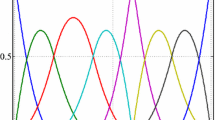

We now test the non-periodic case. For this, we consider equally spaced snapshots over half a period and take \(M=128\). We first check that we are in the general case by computing the value \(\Vert {\varvec{u}}^M - {\varvec{u}}^0\Vert /\max ( \Vert {\varvec{u}}^0\Vert ,\Vert {\varvec{u}}^M\Vert )\), which turned out to be 1.66. With respect to estimates (11) and (12) we check if the values of the constants \(c_m\) in Table 7 are sharp or if they are a consequence of the techniques used in the proofs. We can see this by checking if the more benign estimates of the periodic case (where \(c_m=1\)) also hold for non-periodic functions. This seems to be the case, as can be seen in Fig. 3, where, for both plots, the blue lines, which correspond to the left-hand sides of estimates (11) and (12), are below the rest of the values, which correspond to the right-hand sides of the estimates (8) and (9) of the periodic case. We checked that the ratio of \(\max \Vert (I-P_X) u_n\Vert \) over the right-hand side of (8) in the left plot never exceeded 18, and that of the left-hand side of (12) over \(\mu _m\), \(m=2,3,4,5\) in the right plot never exceeded 22. This suggests that, perhaps with different techniques for the proofs, the estimates for the general case may be improved. However, this will be the subject of future work.

4 Abstract Results

In this section we state and prove a series of results which are needed to prove those in Sect. 2. We do this in a more general setting.

Let X be a Hilbert space with inner product \((\cdot ,\cdot )\) and associated norm \(\bigl \Vert \cdot \bigr \Vert \). In order to have shorter subindices in this section for \(T>0\) and positive integer M we denote

instead of \(\Delta t\) as in previous sections. Thus, we write, \(t_n=n\tau \), \(n=0,\ldots ,M\). For a sequence \(f_\tau =(f_n)_{n=0}^M\) in X we denote

and, for \(k=2,\ldots \,J\),

(Notice the backward notation). Also, for simplicity we will use

We define

The proof of the following lemma is the discrete counterpart of the following identity and estimate

Lemma 4.1

Let \(f_\tau =(f_n)_{n=0}^M\) be a sequence in X satisfying that \(f_0+\cdots +f_M=0\). Then

where

Proof

For \(0\le m<n\), we write

Now applying Hölder’s inequality and noticing that \(\bigl \Vert a+b\bigr \Vert ^2 \le 2\bigl \Vert a\bigr \Vert ^2 + 2\bigl \Vert b\bigr \Vert ^2\), we have

and, hence,

A similar argument also shows (42) for \(n<m\le M\).

Also, for \(0\le l\le m\), we have \(f_m =f_l + \tau (Df_{l+1} + \cdots + Df_m)\), so that applying Hölder’s inequality it follows that

A similar argument shows that (43) also holds for \(m<l \le M\). Now, since we are assuming that \(f_\tau \) is of zero mean, we can write

and, thus, taking norms, applying (43)

Now, a simple calculation shows that

and it is not difficult to prove that the last expression does not exceed \((2/3)\sqrt{M}\). Thus, it follows that

We now take m such that

We have that

From this inequality and (44) it follows that for \(f_m\) satisfying (45) we have

This, together with (42) finishes the proof. \(\square \)

Remark 4.2

Notice that (41) implies (42) also in the case where \(\left\| f_\tau \right\| \) is defined as

and that (46) also holds in this case. Thus, Lemma 4.1 is valid if \(\left\| f_\tau \right\| \) is defined as in (47).

Remark 4.3

If the Hilbert space X is either \({\mathbb {R}^d}\) for some \(d\ge 0\), or \(L^2(\Omega )\), for some \(\Omega \subset {\mathbb {R}}^d\) or (a subspace of) the Sobolev space \(H^s(\Omega )\) for some integer \(s>0\), then the constant \(c_A\) in (40) can be set to

We now show that this is the case for \(X=L^2(\Omega )\) since the argument is easily adapted to the other cases. We first notice that since the sequence \(f_\tau \) has zero mean, then, for every \(x\in \Omega \) (except maybe in a set of zero measure) not all values \((f_n(x))_{n=0}^M\) can have the same sign. Consequently, for every \(x\in \Omega \) there is an integer \(m_x\in [1,M]\) and a value \(\lambda _x\in [0,1]\) for which \((1-\lambda _x) f_{m_x}(x) +\lambda _x f_{m_x-1}(x) =0\). We notice then that

Thus, for \(x\in \Omega \) such that \(m_x \le n\), arguing as in the proof of Lemma 4.1 we may write

This argument is easily adapted to the case where \(m_x>n\). Observe that the last inequality implies

and a similar argument shows this inequality to be valid also when \(m_x>n\). Thus, integrating in \(\Omega \) it easily follows that \(\left\| f_n\right\| ^2 \le 2\left\| f_\tau \right\| _0 \left\| D f_\tau \right\| _0\).
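The final inequality of this remark can be tested numerically in the simplest setting of scalar sequences (\(X={\mathbb {R}}\)). The sketch below assumes that \(\left\| f_\tau \right\| _0^2=\tau \sum _{n=0}^M f_n^2\) and \(\left\| Df_\tau \right\| _0^2=\tau \sum _{n=1}^M (Df_n)^2\), with \(Df_n=(f_n-f_{n-1})/\tau \); these definitions, the helper name `agmon_check` and the test sequences are assumptions made for illustration.

```python
import math
import random

def agmon_check(f, T=1.0):
    """Return (max_n f_n^2, 2 * ||f||_0 * ||Df||_0) for a scalar sequence f_0..f_M."""
    M = len(f) - 1
    tau = T / M
    df = [(f[n] - f[n - 1]) / tau for n in range(1, M + 1)]   # backward DQs
    norm_f = math.sqrt(tau * sum(v * v for v in f))           # assumed ||f_tau||_0
    norm_df = math.sqrt(tau * sum(v * v for v in df))         # assumed ||D f_tau||_0
    return max(v * v for v in f), 2.0 * norm_f * norm_df

def zero_mean(values):
    """Subtract the mean so that f_0 + ... + f_M = 0."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

random.seed(0)
f_rough = zero_mean([random.uniform(-1.0, 1.0) for _ in range(33)])
f_smooth = zero_mean([math.sin(2 * math.pi * n / 64) for n in range(65)])
for f in (f_rough, f_smooth):
    lhs, rhs = agmon_check(f)
    print(lhs <= rhs)
```

Since the inequality contains no factor depending on M, the same check can be repeated on finer grids without changing the constant 2.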

We now treat first the periodic case, since it is less technical than the general one and the main ideas can be presented more easily.

4.1 The Periodic Case

In this subsection we assume that \(f_M=f_0\) and we extend the sequence \(f_\tau \) periodically, that is, \(f_{m} = f_{M+m}\) for \(m=\pm 1,\pm 2,\ldots \,\). Also, we will consider \(\left\| f_\tau \right\| _0\) as defined in (47). We define \(\bigl \Vert D^{k} f_\tau \bigr \Vert _{0}\) as

Notice that for \(k=1\) the expression above coincides with \(\left\| D f_\tau \right\| \) defined in (39). Also, since \(f_0=f_M\), the expression in (49) for \(k=0\) coincides with the alternative definition of \(\left\| f_\tau \right\| \) given in (47), for which, as observed in Remark 4.2, Lemma 4.1 is also valid.

The proof of the following lemma is the discrete counterpart of integration by parts for a periodic function.

Lemma 4.4

Let \(f_\tau =(f_n)_{n\in {\mathbb {Z}}}\) be a periodic sequence in X with period M. Then, for \(k=1,\ldots ,M-1\),

Proof

Since \(\tau \bigl \Vert D^k f_n\bigr \Vert ^2=\tau (D^k f_n, D^k f_n)= (D^k f_n,D^{k-1} f_n - D^{k-1} f_{n-1})\), we have

Now we notice that for the second term on the right-hand side above we have \((D^kf_1,D^{k-1} f_{0})=(D^kf_{M+1},D^{k-1} f_{M})\), and thus

and the proof is finished by applying Hölder’s inequality. \(\square \)
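A quick numerical illustration of the summation-by-parts argument: for a periodic sequence, the identity in the proof followed by the Cauchy-Schwarz (Hölder) inequality gives \(\bigl \Vert D^{k} f_\tau \bigr \Vert _0^2 \le \bigl \Vert D^{k-1} f_\tau \bigr \Vert _0 \bigl \Vert D^{k+1} f_\tau \bigr \Vert _0\). The Python sketch below checks this form for scalar data, assuming \(\bigl \Vert D^{k} f_\tau \bigr \Vert _0^2=\tau \sum _{n=1}^M (D^k f_n)^2\) over one period; the norm definition, the choice \(T=1\), the random data and the helper names periodic_dq and dq_norms are illustrative assumptions.

```python
import math
import random


def periodic_dq(g, tau):
    """Backward difference quotient over one period; g[-1] wraps to the last entry."""
    return [(g[n] - g[n - 1]) / tau for n in range(len(g))]


def dq_norms(f, tau, kmax):
    """Return [||D^k f||_0 for k = 0..kmax], summing over one period."""
    norms, g = [], list(f)
    for _ in range(kmax + 1):
        norms.append(math.sqrt(tau * sum(v * v for v in g)))
        g = periodic_dq(g, tau)
    return norms


random.seed(1)
M = 32
tau = 1.0 / M                           # T = 1 is an arbitrary choice
f = [random.uniform(-1.0, 1.0) for _ in range(M)]   # one period f_1, ..., f_M
norms = dq_norms(f, tau, 4)
for k in range(1, 4):
    # Small relative slack guards against rounding in near-equality cases
    ok = norms[k] ** 2 <= norms[k - 1] * norms[k + 1] * (1.0 + 1e-12)
    print(k, ok)
```

Equality is approached when the sequence is a single discrete Fourier mode, which is why a small relative tolerance is used in the comparison.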

The proof of the following result follows the ideas in the proof of [1, Lemma 4.12], adapted to the discrete case.

Lemma 4.5

Let \(f_\tau =(f_n)_{n\in {\mathbb {Z}}}\) be a periodic sequence in X with period M. Then, for \(m=2,\ldots ,M\),

Proof

We will prove below that (50) is a consequence of the following inequality

which we will now prove by induction. Notice that (51) holds for \(k=1\) as a direct application of Lemma 4.4. Now assume that (51) holds for \(k=1,\ldots ,m-1\), and let us check that it also holds for \(k=m\). Applying Lemma 4.4 we have

Applying the induction hypothesis we have

so that dividing by \(\bigl \Vert D^{m} f_\tau \bigr \Vert _0^{\frac{m-1}{2m}}\) we obtain

from which (51) follows.

Now, to prove (50), we start with the case \(m=2\), which holds as a direct application of Lemma 4.4 for \(k=1\). Then we apply (51) repeatedly to the factor with the highest-order difference, that is

and the proof is finished by noticing that

\(\square \)
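The induction above yields an interpolation inequality between difference quotients of different orders. As a sanity check, the Python sketch below tests the form \(\bigl \Vert D^{k} f_\tau \bigr \Vert _0 \le \left\| f_\tau \right\| _0^{1-k/m} \bigl \Vert D^{m} f_\tau \bigr \Vert _0^{k/m}\), \(1\le k\le m-1\), on samples of a smooth 1-periodic function. This form of (50), the norm \(\bigl \Vert D^{k} f_\tau \bigr \Vert _0^2=\tau \sum _{n=1}^M (D^k f_n)^2\), the sample function and the helper names are assumptions made for illustration.

```python
import math


def periodic_dq(g, tau):
    """Backward difference quotient over one period; g[-1] wraps to the last entry."""
    return [(g[n] - g[n - 1]) / tau for n in range(len(g))]


def norm0(g, tau):
    """Discrete L2-in-time norm over one period (assumed definition)."""
    return math.sqrt(tau * sum(v * v for v in g))


def interpolation_sides(f, tau, k, m):
    """Return (||D^k f||_0, ||f||_0^(1-k/m) * ||D^m f||_0^(k/m))."""
    g = list(f)
    norms = [norm0(g, tau)]
    for _ in range(m):
        g = periodic_dq(g, tau)
        norms.append(norm0(g, tau))
    return norms[k], norms[0] ** (1.0 - k / m) * norms[m] ** (k / m)


M = 64
tau = 1.0 / M                           # period T = 1 (arbitrary choice)
# Samples f_1, ..., f_M of a hypothetical smooth 1-periodic function
f = [math.sin(2.0 * math.pi * n * tau) + 0.3 * math.cos(6.0 * math.pi * n * tau)
     for n in range(1, M + 1)]
m = 4
for k in range(1, m):
    lhs, rhs = interpolation_sides(f, tau, k, m)
    print(k, lhs <= rhs * (1.0 + 1e-12))
```

The sample function mixes two frequencies on purpose: for a single Fourier mode both sides coincide up to rounding, so the comparison would only exercise the tolerance.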

From Lemmas 4.1 and 4.5 the following result follows.

Lemma 4.6

Let \(f_\tau =(f_n)_{n\in {\mathbb {Z}}}\) be a periodic sequence in X with period M and zero mean. Then, for \(k=1,\ldots ,M\), the following estimate holds:

where \(c_A\) is the constant in (40).
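Combining the Agmon-type bound of Lemma 4.1 with the interpolation inequality of Lemma 4.5 suggests a pointwise estimate of the form \(\max _n \left\| f_n\right\| \le c_A \left\| f_\tau \right\| _0^{1-\frac{1}{2k}} \bigl \Vert D^{k} f_\tau \bigr \Vert _0^{\frac{1}{2k}}\). The Python sketch below tests this form for scalar periodic data with zero mean, taking \(c_A=\sqrt{2}\) as in Remark 4.3. The exact form of the estimate, the norm definitions over one period, the random data and the helper names are assumptions made for illustration.

```python
import math
import random


def periodic_dq(g, tau):
    """Backward difference quotient over one period; g[-1] wraps to the last entry."""
    return [(g[n] - g[n - 1]) / tau for n in range(len(g))]


def pointwise_sides(f, tau, k, c_A=math.sqrt(2.0)):
    """Return (max_n |f_n|, c_A ||f||_0^(1-1/(2k)) ||D^k f||_0^(1/(2k)))."""
    g = list(f)
    for _ in range(k):
        g = periodic_dq(g, tau)         # g becomes D^k f over one period
    norm_f = math.sqrt(tau * sum(v * v for v in f))
    norm_dk = math.sqrt(tau * sum(v * v for v in g))
    e = 1.0 / (2.0 * k)
    return max(abs(v) for v in f), c_A * norm_f ** (1.0 - e) * norm_dk ** e


random.seed(2)
M = 50
tau = 1.0 / M                           # period T = 1 (arbitrary choice)
raw = [random.uniform(-1.0, 1.0) for _ in range(M)]
mean = sum(raw) / M
f = [v - mean for v in raw]             # zero mean over one period
for k in range(1, 5):
    lhs, rhs = pointwise_sides(f, tau, k)
    print(k, lhs <= rhs)
```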

4.2 The General Case

In this section, and unless stated otherwise, \(f_\tau =(f_n)_{n=0}^M\) is a sequence in X with zero mean. We define

and, in order to obtain scale invariant constants, for each \(k=0,\ldots ,M\),

where, here and in the sequel, \(T_0=T\), and

We also denote

Observe that by applying Hölder’s inequality one gets

On the other hand, it is easy to check that \(m_k = \min _{\alpha \in {\mathbb {R}}} \left\| D^kf_\tau - \alpha \right\| _0\), where by \(D^k f_\tau -\alpha \) we denote the sequence \( (D^k f_n -\alpha )_{n=k}^M\). Consequently, for the sequence \(D^k f_\tau -m_k = (D^k f_n -m_k)_{n=k}^M\), we have

Our first result extends Lemma 4.1 to the sequences \((D^kf_n)_{n=k}^M\), whose means are not 0.

Lemma 4.7

The following bound holds for \(k=1,\ldots ,M\),

where

and \(c_A\) is the constant in (40).

Proof

Applying Lemma 4.1 we have

where in the last inequality we have applied (57). Thus, by writing \(D^k f_n = D^k f_n -m_k + m_k\) and in view of (56), we have

Now applying Hölder’s inequality, \(ab + cd \le (a^p+c^p)^{\frac{1}{p}} (b^q+d^q)^{\frac{1}{q}}\), to the second factor above, with \(p=4/3\) and \(q=4\), the proof is finished. \(\square \)
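The two-term Hölder inequality used in this last step is easy to test numerically. The Python sketch below evaluates both sides of \(ab + cd \le (a^p+c^p)^{\frac{1}{p}} (b^q+d^q)^{\frac{1}{q}}\) for nonnegative numbers, computing q as the conjugate exponent of p; the function name holder_two_term and the sample values are illustrative assumptions.

```python
def holder_two_term(a, b, c, d, p):
    """Return (a*b + c*d, (a^p + c^p)^(1/p) * (b^q + d^q)^(1/q)) with 1/p + 1/q = 1."""
    q = p / (p - 1.0)                   # conjugate exponent; p = 4/3 gives q = 4
    lhs = a * b + c * d
    rhs = (a ** p + c ** p) ** (1.0 / p) * (b ** q + d ** q) ** (1.0 / q)
    return lhs, rhs


# Exponents used in the proof of Lemma 4.7; the inputs are arbitrary nonnegative values
lhs, rhs = holder_two_term(1.0, 2.0, 3.0, 0.5, p=4.0 / 3.0)
print(lhs <= rhs)
```

Equality holds when \((b^q,d^q)\) is proportional to \((a^p,c^p)\), so the inequality cannot be improved without further information on the four numbers.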

Next, we extend Lemma 4.4 to the general case.

Lemma 4.8

For \(k=1,\ldots ,M-1\),

where

Proof

We notice that \(D^k f_\tau = D(D^{k-1}f_\tau - m_{k-1})\), and we denote

Thus, arguing as in the proof of Lemma 4.4 we have

We apply (59) and Lemma 4.7 to the first two terms on the right-hand side above, and we apply (57) to the last one, to get

Thus,

and the proof is finished for \(k\ge 2\). \(\square \)

Lemma 4.9

For \(m=2,\ldots ,M\),

where

and the constants \(\hat{c}_j\) are given in (66) below.

Proof

The result will be a consequence of the following inequality that will be proven below by induction:

If (63) holds for \(j=0,\ldots ,m-2\), then, applying it successively we have

where

so that (61) follows. We now prove (63). Lemma 4.8 shows it holds for \(j=0\) with \(\hat{c}_0 = c_{B,1}\). Let us assume that it holds for \(j=0,\ldots ,m-2\), and let us show that it holds for \(m-1\). We do this for \(k=1\) since the argument is the same for \(k>1\). We notice

and apply the induction hypothesis to the second term on the right-hand side above to get

Now, we use that, as argued above, the induction hypothesis implies that (61) holds, so that we can write

so that, dividing by \(\bigl \Vert D ^1f_\tau \bigr \Vert _{m-1}^{\frac{1}{m^2}}\) we have

which implies

Now we apply Hölder’s inequality \(ab+cd \le (a^p+c^p)^{1/p}(b^q+d^q)^{1/q}\) to B, with \(p=m\) and \(q=m/(m-1)\),

which, together with (65), implies

This is (63) for \(j=m-1\) with

where \(c_{B,1}\) is the constant given in (60). \(\square \)

Table 7 shows the first 5 values of \(c_m\) for \(c_A\) given by (48). Computation of further values suggests that \(c_m\approx \gamma ^{m-1}\) with \(\gamma =5.9416\).

From Lemmas 4.1 and 4.9 the following result follows.

Lemma 4.10

Let \(f_\tau =(f_n)_{n=0}^M\) be a sequence in X with zero mean. Then, for \(m=1,\ldots ,M-1\), the following estimate holds:

where \(c_A\) is the constant in (40) (or (48) for X being a space of functions or \({\mathbb {R}}^N\)) and \(c_m\) is \(c_m=1\) if \(m=1\) and the constant in (62) for \(m\ge 2\).

4.3 Application to Sequences of Function Values

We consider now the case where X is either \(L^2(\Omega )\) or some Sobolev space \(H^s(\Omega )\) for some domain \(\Omega \subset {\mathbb {R}}^d\), \(\left\| \cdot \right\| \) its norm, and \(f_n=f(\cdot , t_n)\) for some function \(f:\Omega \times [0,T] \rightarrow {\mathbb {R}}\) such that \(f\in H^m(0,T,X)\). We first notice that since for \(x\in \Omega \) we have \(D^{k-1} f(x,t_n) =\partial ^{k-1} f(x,\xi )\) for some \(\xi \in (t_{n-k+1},t_n)\), then it follows that

Consequently,

We have the following result.

Theorem 4.11

Let \(f:X\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) be a function which is T-periodic in its last variable and such that \(f\in H^m(0,T,X)\). Then, the sequence \(f_\tau =(f_n)_{n=1}^M\) satisfies the following bounds for \(1\le m\le M-1\):

where \(c_m\) is the constant in Lemma 4.10. If \(f_1+\cdots +f_M=0\), the last term on the right-hand side above can be omitted.

Proof

Let us denote \(m_0=(f_1+\cdots +f_M)/M\). Applying Hölder’s inequality, it is easy to check that \(\left| m_0\right| \le T^{-1/2}\bigl \Vert f_\tau \bigr \Vert _0\) and, consequently, \(\left\| f_\tau -m_0\right\| _0 \le 2\left\| f_\tau \right\| _0\). Thus, by expressing \(f_n=(f_n-m_0)+m_0\), applying Lemmas 4.5 and 4.6 to \(f_\tau - m_0\) and using (69), the result follows for \(m\ge 2\). For \(m=1\) the result follows from Lemma 4.6 and (69). \(\square \)

For the general case, in view of the definition of \(\bigl \Vert D^k f_\tau \bigr \Vert _m\) in (53) and (69) we have
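The two elementary bounds on the mean used in this proof can be illustrated numerically. The Python sketch below works with scalar data and assumes \(\tau =T/M\) and \(\left\| f_\tau \right\| _0^2=\tau \sum _{n=1}^M f_n^2\), as in the periodic case; the function name mean_bounds and the sample data are hypothetical.

```python
import math


def mean_bounds(f, T):
    """Return (|m_0|, T^(-1/2) ||f||_0, ||f - m_0||_0, 2 ||f||_0) for f_1, ..., f_M."""
    M = len(f)
    tau = T / M                         # uniform time step (assumption)
    m0 = sum(f) / M                     # mean of the snapshots
    norm_f = math.sqrt(tau * sum(v * v for v in f))
    norm_centered = math.sqrt(tau * sum((v - m0) ** 2 for v in f))
    return abs(m0), T ** -0.5 * norm_f, norm_centered, 2.0 * norm_f


T = 2.0
M = 40
# Hypothetical samples with nonzero mean
f = [1.0 + math.sin(2.0 * math.pi * n / M) for n in range(1, M + 1)]
abs_m0, mean_bound, centered, twice_norm = mean_bounds(f, T)
print(abs_m0 <= mean_bound, centered <= twice_norm)
```

Since the mean minimizes the distance to constant sequences in this norm, the centered norm in fact does not exceed \(\left\| f_\tau \right\| _0\) itself; the factor 2 stated in the proof leaves room to spare.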

where \(\left\| \cdot \right\| _{H^{m-1}(0,T,X)}\) is defined in (1). We now estimate the maximum above. In view of the expression of \(T_k\) in (54), for \(1\le k\le m-1\) we have

Now the function \(x\mapsto x(m-x)/(m(M-x))\) is monotone increasing in the interval \([1,m-1]\), unless \(m\le M/4\), where it has a relative maximum at \((M/2)-\sqrt{(M^2/4)-Mm}\), but it is easy to check that this value is strictly larger than m. Consequently, its maximum is achieved at \(x=m-1\). Noticing that \(x^{1/x} \le e^{\frac{1}{e}}\) for \(x>0\), we conclude that, for \(2\le m\le M\)

One can check that \(e^{1+\frac{1}{e}}\le 3.93\). Thus, from (70) it follows that

Theorem 4.12

Let \(f\in H^m(0,T,X)\). Then, the sequence \(f_\tau =(f_n)_{n=0}^M\) satisfies the following bound for \(1\le m\le M\):

If \(f_0+\cdots +f_M=0\), the last term on the right-hand side above can be omitted.

Proof

Let us denote \(m_0=(f_0+\cdots +f_M)/(M+1)\). Applying Hölder’s inequality one sees that \(\left| m_0\right| \le T^{-1/2}\bigl \Vert f_\tau \bigr \Vert _0\) so that \(\left\| f_\tau -m_0\right\| _0 \le 2\left\| f_\tau \right\| _0\). Thus, by writing \(f_n=(f_n-m_0)+m_0\), applying Lemmas 4.9 (if \(m\ge 2\)) and 4.10 to \(f_\tau - m_0\) and using (71), the proof is finished. \(\square \)

5 Summary and Conclusions

Several estimates, pointwise and averaged, have been proved for POD methods whose data set does not include DQs of the snapshots. These estimates include pointwise projection errors of snapshots (Theorems 2.1 and 2.2 in the general case and estimate (8) in the case of periodic functions in time) for orthogonal projections in \(L^2\) and \(H^1_0\). They also include \(L^2\) norm of \(H^1_0\)-projection errors (estimate (11)), for which estimate (10) has been used. Our estimates also include (the square root of) the average of squared projection error of DQs (Theorem 2.3 for the general case and estimate (9) for periodic functions) again in the \(L^2\) and \(H^1_0\) cases, as well as the average of squares of \(L^2\) norms of Ritz projection errors of DQs (estimate (12)). In all cases, when projections are onto the space spanned by the first r elements of the POD basis, the rate of decay in terms of \(\sigma _{r+1}^2 + \cdots +\sigma _J^2\) is as close to the optimal value \((\sigma _{r+1}^2 +\cdots + \sigma _J^2)^{1/2}\) as the smoothness of the function from where the snapshots are taken allows.

These estimates have been used to obtain error bounds with the same rates of decay for POD methods applied to the heat equation, where both backward Euler method and the BDF2 are considered for discretization of the time variable (Theorem 2.6). The heat equation has been chosen for simplicity, but it is clear that, with techniques for nonlinear problems such as those, for example, in [5, 7, 9, 13, 16], it is possible to extend these estimates to POD methods not using DQs in the data set for nonlinear equations.

Numerical experiments are also presented in this paper, where snapshots are taken from two challenging problems due to the size of derivatives with respect to time (see Fig. 1 and Table 4), derivatives which feature in the estimates commented above. In these experiments, pointwise projection errors are overestimated by our error bounds by factors not exceeding 28, and by a factor also not exceeding 28 in the case of the (square root of) the average of squares of \(L^2\) norms of Ritz projection errors of DQs, if the estimates are those in Remark 2.7, although these factors are likely to be problem-dependent.

This research was motivated by the wish to better understand POD methods that do not include DQs of the snapshots in the data set, and in particular by an apparent contradiction: while counterexamples in [12] clearly show that pointwise projection errors do degrade with the number of snapshots, computations like those in Table 1 suggest that this may not necessarily be the case in practice. By taking into account the smoothness of the functions from which the snapshots are taken, new estimates have been obtained that explain the apparent contradiction mentioned above and give better knowledge of the decay rate of pointwise errors when DQs are omitted from the data set.

Data Availability

The authors declare that the data supporting the findings of this study are available at https://doi.org/10.12795/11441/169465 and https://hdl.handle.net/11441/169465. Source code and part of the data are also available at https://github.com/bgarchilla/pointwise/.

References

1. Adams, R.A.: Sobolev Spaces. Academic Press [A subsidiary of Harcourt Brace Jovanovich, Publishers], New York-London (1975)

2. Constantin, P., Foias, C.: Navier-Stokes Equations. Chicago Lectures on Mathematics. The University of Chicago Press, Chicago (1988)

3. Chapelle, D., Gariah, A., Sainte-Marie, J.: Galerkin approximation with proper orthogonal decomposition: new error estimates and illustrative examples. ESAIM: M2AN 46, 731–757 (2012)

4. Eskew, S.L., Singler, J.R.: A new approach to proper orthogonal decomposition with difference quotients. Adv. Comput. Math. 49(2), 13 (2023)

5. García-Archilla, B., John, V., Novo, J.: POD-ROMs for incompressible flows including snapshots of the temporal derivative of the full order solution. SIAM J. Numer. Anal. 61(3), 1340–1368 (2023)

6. García-Archilla, B., John, V., Novo, J.: Second order error bounds for POD-ROM methods based on first order divided differences. Appl. Math. Letters 143, 108836 (2023)

7. García-Archilla, B., Novo, J.: POD-ROM methods: from a finite set of snapshots to continuous-in-time approximations. SIAM J. Numer. Anal. (to appear). https://doi.org/24M1645681. arXiv:2403.06967 [math.NA]

8. Iliescu, T., Wang, Z.: Are the snapshot difference quotients needed in the proper orthogonal decomposition? SIAM J. Sci. Comput. 36, A1221–A1250 (2014). https://doi.org/10.1137/130925141

9. Iliescu, T., Wang, Z.: Variational multiscale proper orthogonal decomposition: Navier-Stokes equations. Numer. Meth. PDEs 30(2), 641–663 (2014)

10. John, V., Moreau, B., Novo, J.: Error analysis of a SUPG-stabilized POD-ROM method for convection-diffusion-reaction equations. Comput. Math. Appl. 122, 48–60 (2022). https://doi.org/10.1016/j.camwa.2022.07.017

11. Kean, K., Schneier, M.: Error analysis of supremizer pressure recovery for POD based reduced-order models of the time-dependent Navier-Stokes equations. SIAM J. Numer. Anal. 58, 2235–2264 (2020). https://doi.org/10.1137/19M128702X

12. Koc, B., Rubino, S., Schneier, M., Singler, J., Iliescu, T.: On optimal pointwise in time error bounds and difference quotients for the proper orthogonal decomposition. SIAM J. Numer. Anal. 59(4), 2163–2196 (2021)

13. Koc, B., Chacón Rebollo, T., Rubino, S.: Uniform bounds with difference quotients for proper orthogonal decomposition reduced order models of the Burgers equation. J. Sci. Comput. 95(2), 43 (2023)

14. Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I. Nonstiff Problems, 2nd edn. Springer Series in Computational Mathematics 8. Springer-Verlag, Berlin (1993)

15. Kunisch, K., Volkwein, S.: Galerkin proper orthogonal decomposition methods for parabolic problems. Numer. Math. 90(1), 117–148 (2001)

16. Kunisch, K., Volkwein, S.: Galerkin proper orthogonal decomposition methods for a general equation in fluid dynamics. SIAM J. Numer. Anal. 40, 492–515 (2002)

17. Sánchez, J., Net, M., Simó, C.: Computation of invariant tori by Newton–Krylov methods in large-scale dissipative systems. Phys. D 239, 123–133 (2010). https://doi.org/10.1016/j.physd.2009.10.012

18. Schäfer, M., Turek, S., Durst, F., Krause, E., Rannacher, R.: Benchmark computations of laminar flow around a cylinder. Vieweg+Teubner Verlag, Wiesbaden, pp. 547–566 (1996). https://doi.org/10.1007/978-3-322-89849-4_39

19. Singler, J.R.: New POD error expressions, error bounds, and asymptotic results for reduced order models of parabolic PDEs. SIAM J. Numer. Anal. 52, 852–876 (2014). https://doi.org/10.1137/120886947

Funding

Funding for open access publishing: Universidad de Sevilla/CBUA. Bosco García-Archilla: Research is supported by grants PID2021-123200NB-I00 and PID2022-136550NB-I00 funded by MCIN/AEI/10.13039/501100011033 and by "ERDF A way of making Europe", by the European Union. Julia Novo: Research is supported by grant PID2022-136550NB-I00 funded by MCIN/AEI/10.13039/501100011033 and by "ERDF A way of making Europe", by the European Union.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

García-Archilla, B., Novo, J. Pointwise Error Bounds in POD Methods Without Difference Quotients. J Sci Comput 103, 24 (2025). https://doi.org/10.1007/s10915-025-02838-9

DOI: https://doi.org/10.1007/s10915-025-02838-9