Abstract

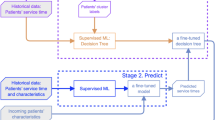

Patient no-shows and suboptimal scheduling of patient appointment lengths reduce clinical efficiency and impair the clinic’s quality of service. The main objective of this study is to improve appointment scheduling in hospital outpatient clinics. We developed generic supervised machine learning models to predict patient no-shows and patients’ length of appointment (LOA). We performed a retrospective study using more than 100,000 records of patient appointments in a hospital outpatient clinic. Several machine learning algorithms were used to develop our prediction models. We trained the models on a dataset that contained characteristics of patients, physicians, and appointments. Our feature set combines previously unstudied features with features adopted from previous studies. In addition, we identified the influential features for predicting LOA and no-show. Our LOA model achieved an MAE of 6.92, and our no-show model achieved an F-score of 92.1%. We compared our models’ performance to that of previous research models by applying their methods to our dataset; our models demonstrated better performance. We show that the main driver of these differences is the use of our novel features. To evaluate the effect of our prediction results on the quality of schedules produced by appointment systems (AS), we developed an interface layer between our prediction models and the AS, in which the prediction results serve as the AS input. Using our prediction models, there was an 80% improvement in the daily cumulative patient waiting time and a 33% reduction in the daily cumulative physician idle time.

Availability of data and materials

The dataset used in this study is available from the corresponding author upon reasonable request.

References

Merrit Hawkins, "survey of physician appointment wait times and medicare and medicaid acceptance rates," merritthawkin, 2017.

A. Baker, "Crossing the Quality Chasm: A New Health System for the 21st Century," National Academies Press, 2001.

D. Gupta and B. Denton, "Appointment scheduling in health care: Challenges and opportunities," IIE Transactions, vol. 40, pp. 800-819, 2008.

R. Lucas, H. Farley, J. Twanmoh, A. Urumov, N. Olsen, B. Evans and H. Kabiri, "Emergency department patient flow: the influence of hospital census variables on emergency department length of stay," the Society for Academic Emergency Medicine, pp. 597–602, 2009.

J. L. Wiler, C. Gentle, J. M. Halfpenny, A. Heins, A. Mehrotra, M. G. Mikhail and D. Fite, "Optimizing Emergency Department Front-End Operations," Annals of Emergency Medicine, pp. 142–160, 2010.

T. Cayirli and E. A. Veral, "Outpatient scheduling in health care: A review of literature," Production and Operations Management, vol. 12, pp. 519-549, 2009.

S. Barnes, E. Hamrock, M. Toerper, S. Siddiqui and S. Levin, "Real-time prediction of inpatient length of stay for discharge prioritization," Journal of the American Medical Informatics Association, 2015.

M. Rowan, T. Ryan, F. Hegarty and N. O’Hare, "The use of artificial neural networks to stratify the length of stay of cardiac patients based on preoperative and initial postoperative factors," Artificial Intelligence in Medicine, 2007.

D. Ben Tayeb, N. Lahrichi and L.-M. Rousseau, "Patient scheduling based on a service-time prediction model: A data-driven study for a radiotherapy center," Springer Health Care Management Science, 2018.

H. Lenzi, Â. J. Ben and A. T. Stein, "Development and validation of a patient no-show predictive model at a primary care setting in Southern Brazil," PLOS ONE, 2019.

D. Carreras-García, D. Delgado-Gómez, F. Llorente-Fernández and A. Arribas-Gil, "Patient no-show prediction: A systematic literature review," Entropy, 2020.

B. Zeng, A. Turkcan, J. Lin and M. Lawley, "Clinic scheduling models with overbooking for patients with heterogeneous no-show probabilities," Annals of Operations Research, vol. 178, pp. 121-144, 2009.

M. Heshmat, K. Nakata and A. Eltawil, "Solving the patient appointment scheduling problem in outpatient chemotherapy clinics using clustering and mathematical programming," Computers & Industrial Engineering, pp. 347–358, 2018.

K. Muthuraman and M. Lawley, "A stochastic overbooking model for outpatient clinical scheduling with no-shows," IIE Transactions, 2008.

R. M. Goffman, S. L. Harris, J. H. May, A. S. Milicevic, R. J. Monte, L. Myaskovsky, K. L. Rodriguez, Y. C. Tjader and D. V. L., "Modeling patient No-Show history and predicting future outpatient appointment behavior in the Veterans Health Administration," Military Medicine, 2017.

D. Hanauer and Y. Huang, "Patient no-show predictive model development using multiple data sources for an Effective Overbooking Approach," Applied Clinical Informatics, 2014.

L. F. Dantas, S. Hamacher, F. L. Cyrino Oliveira, S. D. Barbosa and F. Viegas, "Predicting patient no-show behavior: A study in a bariatric clinic," Obesity Surgery, 2018.

T. E. Raghunathan, J. M. Lepkowski, J. V. Hoewyk and P. Solenberger, "A Multivariate Technique for Multiply Imputing Missing Values Using a Sequence of Regression Models," Survey methodology, pp. 58–96, 2001.

S. Snowden, P. Weech, R. McClure, S. Smye and P. Dear, "A neural network to predict attendance of paediatric patients at outpatient clinics," Neural Computing & Applications, pp. 234–241, 1995.

H. B. Harvey, C. Liu, J. Ai, C. Jaworsky, E. C. Guerrier, E. Flores and O. Pianykh, "Predicting no-shows in radiology Using Regression modeling of data available in the electronic medical record," Journal of the American College of Radiology, vol. 14, pp. 1303-1309, 2017.

"Icd-9-cm chapters," 20 4 2021. [Online]. Available: https://icd.codes/icd9cm.

T. Chen and C. Guestrin, "XGBoost: A Scalable Tree Boosting System," in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, 2016.

G. Ke, Q. Meng, T. Finley, T. Wan, W. Chen, W. Ma, Q. Ye and T.-Y. Liu, "LightGBM: A Highly Efficient Gradient Boosting Decision Tree," in 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, 2017.

H. Ramchoun, M. Amine, J. Idrissi, Y. Ghanou and M. Ettaouil, "Multilayer Perceptron: Architecture Optimization and Training," International Journal of Interactive Multimedia and Artificial Intelligence, pp. 26–30, 2016.

G. Luo, "A review of automatic selection methods for machine learning algorithms and hyper-parameter values," Network Modeling Analysis in Health Informatics and Bioinformatics, 2016.

W. Li, X. Xing, F. Liu and Y. Zhang, "Application of Improved grid search algorithm on SVM for classification of Tumor Gene," International Journal of Multimedia and Ubiquitous Engineering, pp. 181–188, 2014.

H. A. A. Mohamad, "Agarwood oil QUALITY classification using support Vector classifier and grid Search cross Validation hyperparameter tuning," International Journal of Emerging Trends in Engineering Research, vol. 8, pp. 2551-2556, 2020.

M. Aladeemy, L. Adwan, A. B. Booth, M. T. Khasawneh and S. Poranki, "New feature selection methods based on opposition-based learning and self-adaptive cohort intelligence for predicting patient no-shows," Applied Soft Computing, vol. 86, p. 105866, 2020.

E. Štrumbelj and I. Kononenko, "Explaining prediction models and individual predictions with feature contributions," Knowledge and Information Systems, vol. 41, pp. 647-665, 2013.

S. Lipovetsky and M. Conklin, "Analysis of regression in game theory approach," Applied Stochastic Models in Business and Industry, vol. 17, pp. 319-330, 2001.

Batunacun, R. Wieland, T. Lakes and C. Nendel, "Using Shapley additive explanations to interpret extreme gradient boosting predictions of grassland degradation in Xilingol, China," Geoscientific Model Development, vol. 14, pp. 1493–1510, 2021.

A. Ahmadi-Javid, Z. Jalali and K. J. Klassen, "Outpatient appointment systems in healthcare: A review of optimization studies," European Journal of Operational Research, vol. 258, pp. 3-34, 2017.

W. Liang, S. Luo, G. Zhao and H. Wu, "Predicting hard ROCK Pillar Stability Using GBDT, XGBoost, and Lightgbm algorithms," Mathematics, vol. 8, p. 765, 2020.

C. Bentéjac, A. Csörgő and G. Martínez-Muñoz, "A comparative analysis of gradient boosting algorithms," Artificial Intelligence Review, vol. 54, pp. 1937-1967, 2020.

N. Schilling, M. Wistuba, L. Drumond and L. Schmidt-Thieme, "Machine Learning and Knowledge Discovery in Databases," Hyperparameter optimization with Factorized multilayer perceptrons, pp. 87–103, 2015.

H. Ramchoun, M. Amine, J. Idrissi, Y. Ghanou and M. Ettaouil, "Multilayer Perceptron: Architecture," International Journal of Interactive Multimedia and Artificial Intelligence, vol. 4, p. 26, 2016.

O. Babayoff and O. Shehory, "The role of semantics in the success of crowdfunding projects," PLOS ONE, vol. 17, p. e0263891, 2022.

S. Srinivas and H. Salah, "Consultation length and no-show prediction for improving appointment scheduling efficiency at a cardiology clinic: A data analytics approach," International Journal of Medical Informatics, vol. 145, p. 104290, 2021.

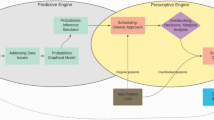

H. Salah and S. Srinivas, "Predict, then schedule: Prescriptive analytics approach for machine learning-enabled Sequential Clinical Scheduling," Computers & Industrial Engineering, vol. 169, p. 108270, 2022.

A. Kuiper and R. H. Lee, "Appointment scheduling for multiple servers," Management Science, vol. 68, pp. 7422-7440, 2022.

Funding

This research was supported in part by the Bar Ilan University DSI/VATAT under grant number 247049-900-01 500M. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

Orel Babayoff – wrote the main manuscript text, conception and design of the study, acquisition of data, manuscript review and revision, analysis of data and models. Onn Shehory – conception and design of the study, manuscript review and revision. Eli Sprecher, Ahuva Weiss-Meilik, Shitrit-Niselbaum, Shamir Geller – acquisition of data, manuscript review and revision.

Ethics declarations

Ethical approval

This study was approved by the TASM institutional review board (IRB), approval number 0174-20-TLV. This study involves data about human participants but the IRB exempted this study from participant consent. The data were fully anonymized and then used for this study.

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Highlights

• We develop generic prediction models for patient length of appointment and no-show.

• Our models were trained on original data from a public hospital.

• Our feature set includes novel physicians’, patients’, and appointments’ features.

• We empirically demonstrate superiority of our prediction in comparison to the state-of-the-art.

• Our models deliver 80% improvement in the daily cumulative patient waiting time and 33% reduction in the daily cumulative physician idle time.

Appendices

Appendix A

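F-score is the weighted harmonic mean of a model’s precision and recall. It is used to measure the performance of a model and, with equal weights on precision and recall, is calculated as follows:

$$\text{precision} = \frac{tp}{tp + fp}, \qquad \text{recall} = \frac{tp}{tp + fn}, \qquad F\text{-score} = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$

where tp is the number of true positive predictions, and fn and fp are the numbers of false negative and false positive predictions, respectively.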
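As a minimal sketch of the F-score definition, the function below computes the equally weighted (F1) form from confusion-matrix counts. The function name and the example counts are hypothetical and are not taken from the study.

```python
def f_score(tp: int, fp: int, fn: int) -> float:
    """Equally weighted harmonic mean of precision and recall (F1)."""
    if tp == 0:
        # With no true positives, precision and recall are 0 or undefined;
        # this sketch reports 0.0 by convention.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only.
example_f = f_score(tp=80, fp=10, fn=20)
```

Algebraically, the same quantity equals 2·tp / (2·tp + fp + fn), which avoids computing precision and recall separately.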

The Brier Score measures the accuracy of probabilistic predictions and is calculated as follows:

$$BS = \frac{1}{n} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)^2$$

where $y_i$ is the true value of record $i$, i.e., 1 or 0, $\hat{y}_i$ is the predicted probability, and n is the number of records in the evaluation set.
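The Brier Score can be sketched in a few lines of plain Python. The function name and the sample labels and probabilities are hypothetical, chosen only to illustrate the calculation.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


# Hypothetical no-show labels (1 = no-show) and predicted probabilities.
labels = [1, 0, 1, 0]
probs = [0.9, 0.1, 0.8, 0.3]
example_bs = brier_score(labels, probs)
```

Unlike AUC and F-score, a lower Brier Score is better: a perfect probabilistic prediction scores 0.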

A ROC curve plots the true positive rate (recall) against the false positive rate at different classification thresholds, and AUC measures the entire two-dimensional area under the curve. AUC and F-score metrics range from 0 to 1. A higher score indicates better performance and is, therefore, preferred.
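To make the threshold sweep concrete, the sketch below builds ROC points (false positive rate, true positive rate) by treating each distinct score as a threshold and then integrates them with the trapezoidal rule. The function names and the sample data are hypothetical. This is an illustrative implementation, not the tooling used in the study.

```python
def roc_points(y_true, scores):
    """Return (false positive rate, true positive rate) pairs, one per threshold."""
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    points = [(0.0, 0.0)]
    # Sweep thresholds from the highest score down to the lowest.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a piecewise-linear curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical labels and scores, for illustration only.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
example_auc = auc(roc_points(labels, scores))
```

The quadratic-time sweep keeps the sketch short; for large evaluation sets, a single pass over the sorted scores would be the more practical choice.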

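Accuracy is a metric for evaluating classification models. It is the fraction of predictions that the model classified correctly and is calculated as follows:

$$\text{Accuracy} = \frac{tp + tn}{tp + tn + fp + fn}$$

where tp and tn are the numbers of true positive and true negative predictions, and fp and fn are the numbers of false positive and false negative predictions, respectively.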

MAE measures the average absolute magnitude of the errors in a set of predictions. RMSE is the square root of the average of the squared errors.

$$MAE = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|, \qquad RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

where $y_i$ is the true value of the dependent variable in our LOA model for record $i$, $\hat{y}_i$ is the predicted value, and n is the number of records in the evaluation set. MAE and RMSE metrics range from 0 to infinity. A lower score indicates better performance and is, therefore, preferred.
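The two regression metrics can be sketched as follows. The function names and the sample appointment lengths (in minutes) are hypothetical and serve only to illustrate the calculation.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared error."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical true and predicted appointment lengths, in minutes.
actual = [20, 30, 15]
predicted = [25, 28, 15]
example_mae = mae(actual, predicted)
example_rmse = rmse(actual, predicted)
```

Because RMSE squares each error before averaging, it penalizes large errors more heavily than MAE, so RMSE is never smaller than MAE on the same predictions.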

Appendix B

We calculated the LOAM’s uncertainty as follows. First, for each record in the test set, we used the model to obtain a list of predictions, one from each tree in the LightGBM model. Then, for each record, we calculated the standard deviation (STD) of that list. Finally, we calculated the mean of the STDs over all records. Following this procedure, the derived uncertainty of the model was 1.35 min.
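The aggregation step of this procedure can be sketched as below, assuming the per-tree outputs have already been extracted into one list per test record. The function name, the input layout, and the choice of the population standard deviation are assumptions; the source does not state which STD variant was used or how the per-tree values were obtained.

```python
from statistics import mean, pstdev


def model_uncertainty(per_tree_outputs):
    """Mean over records of the STD of each record's per-tree outputs.

    per_tree_outputs[i] holds the per-tree values for test record i
    (hypothetical layout). Uses the population STD (an assumption).
    """
    return mean(pstdev(values) for values in per_tree_outputs)


# Hypothetical per-tree outputs for two test records, in minutes.
outputs = [
    [10.0, 12.0, 14.0],  # trees disagree: non-zero spread
    [20.0, 20.0, 20.0],  # trees agree: zero spread
]
example_uncertainty = model_uncertainty(outputs)
```

A record whose trees agree contributes zero to the average, so the final figure reflects how much the trees disagree on a typical test record.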

Appendix C

The outputs of running the LightGBM Internal Feature Importance Algorithm (LGFIA) on LOAM and NSM are shown in Figs. 1 and 2, respectively. The features are presented in decreasing order of importance. In Figs. 7 and 8, the number at the end of each bar indicates the split value, i.e., the number of times the feature is used in a split when the model is trained. In Figs. 9 and 10, the number at the end of each bar indicates the average gain of the feature during training, where the gain is the reduction in training loss that results from adding a split point on that feature.
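The two importance measures described here can be illustrated with a small aggregation over split records. The sketch assumes a hypothetical list of (feature, gain) pairs, one per split point in the trained trees. The feature names and gain values are invented for illustration, and the code does not call LightGBM itself.

```python
from collections import defaultdict


def feature_importance(splits):
    """Rank features by split count and by average gain.

    splits: iterable of (feature_name, gain) pairs, one per split point
    (hypothetical input format).
    """
    counts = defaultdict(int)
    total_gain = defaultdict(float)
    for feature, gain in splits:
        counts[feature] += 1
        total_gain[feature] += gain
    avg_gain = {f: total_gain[f] / counts[f] for f in counts}
    # Sort each measure in decreasing order of importance.
    by_split = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    by_gain = sorted(avg_gain.items(), key=lambda kv: kv[1], reverse=True)
    return by_split, by_gain


# Hypothetical split records, for illustration only.
records = [("age", 4.0), ("age", 2.0), ("lead_time", 9.0)]
by_split, by_gain = feature_importance(records)
```

The two rankings can disagree: a feature used in many low-gain splits ranks high by split count but low by average gain, which is why both views are reported.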

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Babayoff, O., Shehory, O., Geller, S. et al. Improving Hospital Outpatient Clinics Appointment Schedules by Prediction Models. J Med Syst 47, 5 (2023). https://doi.org/10.1007/s10916-022-01902-3

DOI: https://doi.org/10.1007/s10916-022-01902-3