Abstract

In this paper, we are concerned with the problem of scheduling n jobs on m machines. The job processing rate is interdependent and the jobs are non-preemptive. During the last several decades, a number of related models for parallel machine scheduling with interdependent processing rates (PMS-IPR) have appeared in the scheduling literature. Some of these models have been studied independently from one another. The purpose of this paper is to present two general PMS-IPR models that capture the essence of many of these existing PMS-IPR models. Several new complexity results are presented. We discuss improvements on some existing models. Furthermore, for an extension of the two related PMS-IPR models where they include many resource constraint models with controllable processing times, we propose an efficient dynamic programming procedure that solves the problem to optimality.

Similar content being viewed by others

References

Adiri, I., & Yehudai, Z. (1987). Scheduling on machines with variable service rates. Computers & Operations Research, 14(4), 289–297. https://doi.org/10.1016/0305-0548(87)90066-9.

Aicardi, M., Giglio, D., & Minciardi, R. (2008). Optimal strategies for multiclass job scheduling on a single machine with controllable processing times. IEEE Transactions on Automatic Control, 53(2), 479–495. https://doi.org/10.1109/TAC.2007.915166.

Alidaee, B., & Ahmadian, A. (1993). Two parallel machine sequencing problems involving controllable job processing times. European Journal of Operational Research, 70(3), 335–341. https://doi.org/10.1016/0377-2217(93)90245-I.

Alidaee, B., & Kochenberger, G. A. (2005). A note on a simple dynamic programming approach to the single-sink, fixed-charge transportation problem. Transportation Science, 39(1), 140–143. https://doi.org/10.1287/trsc.1030.0055.

Alidaee, B., & Li, H. (2014). Parallel machine selection and job scheduling to minimize sum of machine holding cost, total machine time costs, and total tardiness costs. IEEE Transactions on Automation Science and Engineering, 11(1), 294–301. https://doi.org/10.1109/TASE.2013.2247757.

Alidaee, B., & Womer, N. K. (1999). Scheduling with time dependent processing times: Review and extensions. Journal of the Operational Research Society, 50(7), 711–720. https://doi.org/10.1057/palgrave.jors.2600740.

Allahverdi, A., Gupta, J. N. D., & Aldowaisan, T. (1999). A review of scheduling research involving setup considerations. Omega, 27(2), 219–239. https://doi.org/10.1016/S0305-0483(98)00042-5.

Alon, N., Azar, Y., Woeginger, G. J., & Yadid, T. (1998). Approximation schemes for scheduling on parallel machines. Journal of Scheduling, 1(1), 55–66.

Amaddeo, H. F., Nawijn, W. M., & van Harten, A. (1997). One-machine job-scheduling with non-constant capacity—Minimizing weighted completion times. European Journal of Operational Research, 102(3), 502–512. https://doi.org/10.1016/S0377-2217(96)00240-8.

Baker, K. R., & Nuttle, H. L. (1980). Sequencing independent jobs with a single resource. Naval Research Logistics Quarterly, 27(3), 499–510. https://doi.org/10.1002/nav.3800270313.

Baradaran, S., Fatemi Ghomi, S. M. T., Ranjbar, M., & Hashemin, S. S. (2012). Multi-mode renewable resource-constrained allocation in PERT networks. Applied Soft Computing, 12(1), 82–90. https://doi.org/10.1016/j.asoc.2011.09.007.

Beliën, J., Demeulemeester, E., De Bruecker, P., Van den Bergh, J., & Cardoen, B. (2013). Integrated staffing and scheduling for an aircraft line maintenance problem. Computers & Operations Research, 40(4), 1023–1033. https://doi.org/10.1016/j.cor.2012.11.011.

Biskup, D. (2008). A state-of-the-art review on scheduling with learning effects. European Journal of Operational Research, 188(2), 315–329. https://doi.org/10.1016/j.ejor.2007.05.040.

Burke, E. K., Dror, M., & Orlin, J. B. (2008). Scheduling malleable tasks with interdependent processing rates: Comments and observations. Discrete Applied Mathematics, 156(5), 620–626. https://doi.org/10.1016/j.dam.2007.08.008.

Chandra, A. K., & Wong, C. K. (1975). Worst-case analysis of a placement algorithm related to storage allocation. SIAM Journal on Computing, 4(3), 249–263. https://doi.org/10.1137/0204021.

Chen, Z.-L., & Hall, N. G. (2008). Maximum profit scheduling. Manufacturing & Service Operations Management, 10(1), 84–107. https://doi.org/10.1287/msom.1060.0144.

Cheng, T. C. E., Ding, Q., & Lin, B. M. T. (2004). A concise survey of scheduling with time-dependent processing times. European Journal of Operational Research, 152(1), 1–13. https://doi.org/10.1016/S0377-2217(02)00909-8.

Cody, R. A., & Jr Coffman, E. G. (1976). Record allocation for minimizing expected retrieval costs on drum-like storage devices. Journal of the ACM, 23(1), 103–115. https://doi.org/10.1145/321921.321933.

Deuermeyer, B. L., Friesen, D. K., & Langston, M. A. (1982). Scheduling to maximize the minimum processor finish time in a multiprocessor system. SIAM Journal on Algebraic Discrete Methods, 3(2), 190–196. https://doi.org/10.1137/0603019.

Dror, M., Stern, H. I., & Lenstra, J. K. (1987). Parallel machine scheduling: Processing rates dependent on number of jobs in operation. Management Science, 33(8), 1001–1009. https://doi.org/10.1287/mnsc.33.8.1001.

Epstein, L., & Sgall, J. (2004). Approximation schemes for Schedulingon uniformly related and identical parallel machines. Algorithmica, 39(1), 43–57. https://doi.org/10.1007/s00453-003-1077-7.

Friesen, D. K., & Deuermeyer, B. L. (1981). Analysis of greedy solutions for a replacement part sequencing problem. Mathematics of Operations Research, 6(1), 74–87. https://doi.org/10.1287/moor.6.1.74.

Fündeling, C. U., & Trautmann, N. (2010). A priority-rule method for project scheduling with work-content constraints. European Journal of Operational Research, 203(3), 568–574. https://doi.org/10.1016/j.ejor.2009.09.019.

Garey, M., & Johnson, D. (1979). Computers and intractability: A guide to the theory of NP-hardness. San Fransisco: WH Freeman.

Gawiejnowicz, S. (1996). A note on scheduling on a single processor with speed dependent on a number of executed jobs. Information Processing Letters, 57(6), 297–300. https://doi.org/10.1016/0020-0190(96)00021-X.

Gokbayrak, K., & Selvi, O. (2007). Constrained optimal hybrid control of a flow shop system. IEEE Transactions on Automatic Control, 52(12), 2270–2281. https://doi.org/10.1109/TAC.2007.910668.

Gokbayrak, K., & Selvi, O. (2008). Optimization of a flow shop system of initially controllable machines. IEEE Transactions on Automatic Control, 53(11), 2665–2668. https://doi.org/10.1109/TAC.2008.2007153.

Gonzalez, T., & Sahni, S. (1978). Preemptive scheduling of uniform processor systems. Journal of the ACM, 25(1), 92–101. https://doi.org/10.1145/322047.322055.

Gorczyca, M., & Janiak, A. (2010). Resource level minimization in the discrete–continuous scheduling. European Journal of Operational Research, 203(1), 32–41. https://doi.org/10.1016/j.ejor.2009.07.021.

Gorczyca, M., Janiak, A., & Janiak, W. (2011). Neighbourhood properties in some single processor scheduling problem with variable efficiency and additional resources. Decision Making in Manufacturing and Services, 5, 5–17.

Gorczyca, M., Janiak, A., Janiak, W., & Dymański, M. (2015). Makespan optimization in a single-machine scheduling problem with dynamic job ready times—Complexity and algorithms. Discrete Applied Mathematics, 182, 162–168. https://doi.org/10.1016/j.dam.2013.10.003.

Graham, R. L. (1969). Bounds on multiprocessing timing anomalies. SIAM Journal on Applied Mathematics, 17(2), 416–429. https://doi.org/10.1137/0117039.

Hochbaum, D. S., & Shmoys, D. B. (1988). A polynomial approximation scheme for scheduling on uniform processors: using the dual approximation approach. SIAM Journal on Computing, 17(3), 539–551. https://doi.org/10.1137/0217033.

Huang, H. C. (1986). On minimizing flow time on processors with variable unit processing time. Operations Research, 34(5), 801–802. https://doi.org/10.1287/opre.34.5.801.

Janiak, A. (1978). Time-optimal control of a sequence of complexes of independent operations with concave models. Systems Science, 4(4), 325–338.

Janiak, A., Kovalyov, M. Y., & Marek, M. (2007). Soft due window assignment and scheduling on parallel machines. IEEE Transactions on Systems, Man, and Cybernetics—Part A: Systems and Humans, 37(5), 614–620. https://doi.org/10.1109/TSMCA.2007.893485.

Janiak, A., Krysiak, T., & Trela, R. (2011). Scheduling problems with learning and ageing effects: A survey. Decision Making in Manufacturing and Services, 5(1), 19–36.

Janiak, A., & Rudek, R. (2009). Experience-based approach to scheduling problems with the learning effect. IEEE Transactions on Systems, Man, and Cybernetics—Part A: Systems and Humans, 39(2), 344–357. https://doi.org/10.1109/TSMCA.2008.2010757.

Ji, M., Tang, X., Zhang, X., & Cheng, T. C. E. (2016). Machine scheduling with deteriorating jobs and DeJong’s learning effect. Computers & Industrial Engineering, 91, 42–47. https://doi.org/10.1016/j.cie.2015.10.015.

Józefowska, J., & Węglarz, J. (1998). On a methodology for discrete–continuous scheduling. European Journal of Operational Research, 107(2), 338–353. https://doi.org/10.1016/S0377-2217(97)00346-9.

Julius, S., & Ali, D. (1988). Minimizing the sum of weighted completion times of n-independent jobs when resource availability varies over time: Performance of a simple priority rule. Naval Research Logistics (NRL), 35(1), 35–47. https://doi.org/10.1002/1520-6750(198802)35:1%3c35:AID-NAV3220350104%3e3.0.CO;2-3.

Lai, P.-J., & Lee, W.-C. (2011). Single-machine scheduling with general sum-of-processing-time-based and position-based learning effects. Omega, 39(5), 467–471. https://doi.org/10.1016/j.omega.2010.10.002.

Lawler, E. L. (1983). Recent results in the theory of machine scheduling. In A. Bachem, B. Korte, & M. Grötschel (Eds.), Mathematical programming the state of the art: Bonn 1982 (pp. 202–234). Berlin, Heidelberg: Springer.

Lawler, E. L., & Labetoulle, J. (1978). On preemptive scheduling of unrelated parallel processors by linear programming. Journal of the ACM, 25(4), 612–619. https://doi.org/10.1145/322092.322101.

Lawler, E. L., Lenstra, J. K., Rinnooy Kan, A. H. G., & Shmoys, D. B. (1993). Chapter 9 Sequencing and scheduling: Algorithms and complexity. In V. Linetsky (Ed.), Handbooks in operations research and management science (Vol. 4, pp. 445–522). Amsterdam: Elsevier.

Lawler, E. L., & Martel, C. U. (1989). Preemptive scheduling of two uniform machines to minimize the number of late jobs. Operations Research, 37(2), 314–318. https://doi.org/10.1287/opre.37.2.314.

Lawler, E. L., & Sivazlian, B. D. (1978). Minimization of time-varying costs in single-machine scheduling. Operations Research, 26(4), 563–569. https://doi.org/10.1287/opre.26.4.563.

Lee, W.-C. (2011). Scheduling with general position-based learning curves. Information Sciences, 181(24), 5515–5522. https://doi.org/10.1016/j.ins.2011.07.051.

Lee, C. Y., & Leon, V. J. (2001). Machine scheduling with a rate-modifying activity1. This research was supported in part by NSF Grant DMI-9610229, and the US Army Contract DAAB07-95-C-D321.1. European Journal of Operational Research, 128(1), 119–128. https://doi.org/10.1016/S0377-2217(99)00066-1.

Liao, C.-J., & Lin, C.-H. (2003). Makespan minimization for two uniform parallel machines. International Journal of Production Economics, 84(2), 205–213. https://doi.org/10.1016/S0925-5273(02)00427-9.

Lin, J., Qian, Y., Cui, W., & Goh, T. N. (2015). An effective approach for scheduling coupled activities in development projects. European Journal of Operational Research, 243(1), 97–108. https://doi.org/10.1016/j.ejor.2014.11.019.

Lodree, E. J., Geiger, C. D., & Jiang, X. (2009). Taxonomy for integrating scheduling theory and human factors: Review and research opportunities. International Journal of Industrial Ergonomics, 39(1), 39–51. https://doi.org/10.1016/j.ergon.2008.05.001.

Meilijson, I., & Tamir, A. (1984). Minimizing flow time on parallel identical processors with variable unit processing time. Operations Research, 32(2), 440–448. https://doi.org/10.1287/opre.32.2.440.

Mosheiov, G., & Sidney, J. B. (2003). Scheduling with general job-dependent learning curves. European Journal of Operational Research, 147(3), 665–670. https://doi.org/10.1016/S0377-2217(02)00358-2.

Oron, D. (2011). Scheduling a batching machine with convex resource consumption functions. Information Processing Letters, 111(19), 962–967. https://doi.org/10.1016/j.ipl.2011.07.005.

Pan, Y., & Shi, L. On the optimal solution of the general min–max sequencing problem. In 2004 43rd IEEE conference on decision and control (CDC) (IEEE Cat. No.04CH37601), 17–17 December 2004 (Vol. 3, 3183, pp. 3189–3190). https://doi.org/10.1109/cdc.2004.1428964.

Reddy Dondeti, V., & Mohanty, B. B. (1998). Impact of learning and fatigue factors on single machine scheduling with penalties for tardy jobs. European Journal of Operational Research, 105(3), 509–524. https://doi.org/10.1016/S0377-2217(97)00070-2.

Rustogi, K., & Strusevich, V. A. (2014). Combining time and position dependent effects on a single machine subject to rate-modifying activities. Omega, 42(1), 166–178. https://doi.org/10.1016/j.omega.2013.05.005.

Senthilkumar, P., & Narayanan, S. (2010). Literature review of single machine scheduling Problem with uniform parallel machines. Intelligent Information Management, 2(08), 457.

Shabtay, D., & Kaspi, M. (2006). Parallel machine scheduling with a convex resource consumption function. European Journal of Operational Research, 173(1), 92–107. https://doi.org/10.1016/j.ejor.2004.12.008.

Shabtay, D., & Steiner, G. (2007). A survey of scheduling with controllable processing times. Discrete Applied Mathematics, 155(13), 1643–1666. https://doi.org/10.1016/j.dam.2007.02.003.

Shen, W., Wang, L., & Hao, Q. (2006). Agent-based distributed manufacturing process planning and scheduling: A state-of-the-art survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 36(4), 563–577. https://doi.org/10.1109/tsmcc.2006.874022.

Vickson, R. G. (1980a). Choosing the job sequence and processing times to minimize total processing plus flow cost on a single machine. Operations Research, 28(5), 1155–1167. https://doi.org/10.1287/opre.28.5.1155.

Vickson, R. G. (1980b). Two single machine sequencing problems involving controllable job processing times. A I I E Transactions, 12(3), 258–262. https://doi.org/10.1080/05695558008974515.

Wang, H., & Alidaee, B. (2019). Effective heuristic for large-scale unrelated parallel machines scheduling problems. Omega, 83, 261–274. https://doi.org/10.1016/j.omega.2018.07.005.

Wang, H., & Alidaee, B. (2018). Unrelated parallel machine selection and job scheduling with the objective of minimizing total workload and machine fixed costs. IEEE Transactions on Automation Science and Engineering, 15(4), 1955–1963. https://doi.org/10.1109/TASE.2018.2832440.

Węglarz, J., Józefowska, J., Mika, M., & Waligóra, G. (2011). Project scheduling with finite or infinite number of activity processing modes—A survey. European Journal of Operational Research, 208(3), 177–205. https://doi.org/10.1016/j.ejor.2010.03.037.

Woeginger, G. J. (1997). A polynomial-time approximation scheme for maximizing the minimum machine completion time. Operations Research Letters, 20(4), 149–154. https://doi.org/10.1016/S0167-6377(96)00055-7.

Yang, W.-H. (2009). Scheduling jobs on a single machine to maximize the total revenue of jobs. Computers & Operations Research, 36(2), 565–583. https://doi.org/10.1016/j.cor.2007.10.018.

Yin, Y., Liu, M., Hao, J., & Zhou, M. (2012). Single-machine scheduling with job-position-dependent learning and time-dependent deterioration. IEEE Transactions on Systems, Man, and Cybernetics—Part A: Systems and Humans, 42(1), 192–200. https://doi.org/10.1109/TSMCA.2011.2147305.

Yusriski, R., Sukoyo, S., Samadhi, T., & Halim, A. (2015). Integer batch scheduling problems for a single-machine with simultaneous effect of learning and forgetting to minimize total actual flow time. International Journal of Industrial Engineering Computations, 6(3), 365–378.

Zhang, L., & Sun, R. An improvement of resource-constrained multi-project scheduling model based on priority-rule based heuristics. In ICSSSM11, 25–27 June 2011 (pp. 1–5). https://doi.org/10.1109/icsssm.2011.5959321.

Acknowledgements

The authors would like to thank the associate editor and the anonymous referee for their constructive and helpful comments on initial version of this paper. Their careful readings have helped us produce an improved paper.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Example A.1

Consider a 3-job 3-machine scheduling problem as follows. Let the basic processing time (the processing time if a single machine is assigned to the job for processing) of each job be \( d_{1} = 2,d_{2} = 3,d_{3} = 5 \). The scheduling objective is to minimize makespan. Several cases are considered as follows.

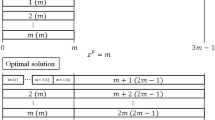

Case 1 Consider scheduling Model 1 with processing time rate equal to \( g_{i} (Y(t)) = Y(t) \) for \( Y(t) \in \{ 1,2,3\} \), f(1) = 1 and \( i \in \{ 1,2,3\} \). Note that this is exactly the model presented by Adiri and Yehudai (1987). In that, the demand rate is 1/Y(t). The optimal makespan is equal to \( \tilde{D}_{3} = 10 \) independent of schedule of jobs on machines (single- or multiple-machines scheduling). For example, assign job i to machine i as illustrated in the following scenario.

Case 2 Consider scheduling Model 1 with processing time rate equal to \( g_{i} (Y(t)) = 1/Y(t) \) for \( Y(t) \in \{ 1,2,3\} \), f(1) = 1 and \( i \in \{ 1,2,3\} \). The optimal makespan is equal to \( \tilde{D}_{3} = 19/6 \) and accomplished by assigning job i to machine i as illustrated below.

Case 3 Consider scheduling model (2) with \( g_{i} (Y(t)) = 1/Y(t) \) for \( Y(t) \in \{ 1,2,3\} \), f(1) = 1 and \( i \in \{ 1,2,3\} \). The optimal makespan is equal to \( \tilde{D}_{3} = 10 \) independent of schedule of jobs on machines (single- or multiple-machines scheduling); see a scenario as follows:

Note that here the sum of demands in the left-hand sides is a constant number equal to 10.

Case 4: Consider scheduling model (2) with \( g_{i} (Y(t)) = Y(t) \) for \( Y(t) \in \{ 1,2,3\} \), f(1) = 1 and \( i \in \{ 1,2,3\} \). The optimal makespan is equal to \( \tilde{D}_{3} = 19/6 \) calculated as follows:

Here, also the sum of demands in the left-hand side is a constant number equal to 10.

In cases 5–7, we consider malleable task scheduling, with different assumptions, where the processing time of a job is exactly inversely proportional to the number of machines assigned to that job. Thus, in each case we have the processing time of a job \( j \in \{ 1,2,3\} \) equal to \( \tilde{d}_{j} = d_{j} /Y\left( t \right) \) for \( Y(t) = 1,2,3. \)

Case 5: Assume idle processors cannot join a group of operating processors in the middle of job processing, and dropping out to a different job before the job is completed is also not allowed. An optimal makespan can be found by assigning 3 machines to each job simultaneously. The optimal makespan is (2 + 3 + 5)/3 = 10/3, and this is a unique optimal schedule. The next best schedule is to assign 2 machines to job 3 (with actual processing time 5/2), assign one machine to job 2 (with actual processing time 3) and assign two machines (previously were assigned to job 3) to job 1 (with actual processing time 1). The makespan is now 5/2 + 1=7/2.

Case 6. Assume idle processors are able to join a group of operating processors at any point in the job processing; however, dropping out to a different job before the job is completed is not allowed. Here, the optimal schedule is similar to the case 5.

Case 7. Assume idle processors are able to join a group of operating processors at any point in the job processing, and dropping out to a different job before the job is completed is also allowed. Similar to cases 5 and 6, an optimal makespan can be found by assigning 3 machines to each job simultaneously with the makespan equal to 10/3. However, here an optimal schedule is not unique as it was in cases 5 and 6. The following schedule is another optimal solution. Assign 3 machines to job 3 for 3 units of its processing time to account for 3/3 actual processing time (there are 2 more time units left on this job to be processed). Assign 3 machines to job 2 for one unit of its time to account for 1/3 actual processing time (here also there are 2 more time units left of the job to be processed). Now, each job has 2 time units to be processed. Assign one machine to each job for 2 time units to account for 2 actual processing times. The total elapsed time for finishing all jobs (i.e., makespan) is 3/3 + 1/3 + 2 = 10/3.

Obviously, this example shows that different models can produce different results. In particular, since Model 1 and 2 are inverse of each other when f(1) = 1, \( t \ne 0 \), they provided similar results (compare cases 1–4). Note that in cases 1 and 3 any schedule is optimal, while this is not the case for cases 2 and 4. The malleable task scheduling (cases 5–7) gave very different results when compared to Model 1 (cases 1, 2) and Model 2 (cases 3, 4). In particular, malleable task scheduling in cases 5 and 6 (these assumptions were made in [6]) have a unique solution in each case. However, for Model 1 (case 1) and Model 2 (case 3), any schedule is optimal. When any of the assumptions in case 5, 6, or 7 is satisfied, it is easy to prove that an optimal schedule exists by assigning all machines to all jobs irrespective to order of the job processing. It is important to realize that this is not to say ‘any schedule of jobs on machines is optimal.’ Also, note that comparison of cases 2 and 4 with cases 5, 6, and 7 show no apparent relationship between malleable task scheduling and Model 1 and 2.

Rights and permissions

About this article

Cite this article

Alidaee, B., Wang, H., Kethley, R.B. et al. A unified view of parallel machine scheduling with interdependent processing rates. J Sched 22, 499–515 (2019). https://doi.org/10.1007/s10951-019-00605-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10951-019-00605-x