Abstract

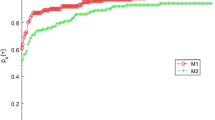

In this paper, we propose a three-term conjugate gradient method based on secant conditions for unconstrained optimization problems. Specifically, we apply the idea of Dai and Liao (in Appl. Math. Optim. 43: 87–101, 2001) to the three-term conjugate gradient method proposed by Narushima et al. (in SIAM J. Optim. 21: 212–230, 2011). Moreover, we derive a special-purpose three-term conjugate gradient method for problems whose objective function has a special structure, and apply it to nonlinear least squares problems. We prove the global convergence of the proposed methods. Finally, we report numerical results that show the performance of our methods.
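
As a rough illustration of the kind of search direction the abstract describes, the following sketch combines the Dai and Liao parameter with a three-term update in the style of Narushima et al. The formulas are recalled from the cited works rather than taken from this page. The choice `p = g_new`, the value `t = 0.1`, the sample vectors, and all function names are assumptions made for illustration only, and the method proposed in the paper may differ in its details.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def dai_liao_beta(g_new, d_prev, s, y, t=0.1):
    """Dai-Liao parameter: beta = g_new^T (y - t*s) / (d_prev^T y).

    s is the step x_new - x_old, y is the gradient change g_new - g_old,
    and t >= 0 is a user-chosen scalar (0.1 here is an arbitrary assumption).
    """
    denom = dot(d_prev, y)
    if denom == 0.0:
        return 0.0  # fall back to steepest descent (illustrative safeguard)
    z = [yi - t * si for yi, si in zip(y, s)]
    return dot(g_new, z) / denom


def three_term_direction(g_new, d_prev, beta, p):
    """Three-term direction d = -g + beta * (d_prev - (g^T d_prev / g^T p) * p).

    When g^T p is nonzero, the correction term is orthogonal to g, so
    g^T d = -||g||^2 regardless of beta (a descent direction).
    When g^T p is zero, the sketch returns the steepest descent direction.
    """
    gp = dot(g_new, p)
    if gp == 0.0:
        return [-gi for gi in g_new]
    ratio = dot(g_new, d_prev) / gp
    return [-gi + beta * (di - ratio * pi)
            for gi, di, pi in zip(g_new, d_prev, p)]


# Hypothetical data for a single iteration in two dimensions.
g_new = [1.0, -2.0]      # current gradient
d_prev = [-0.5, 1.5]     # previous search direction
s = [-0.05, 0.15]        # previous step (0.1 * d_prev)
y = [0.3, -0.4]          # gradient difference

beta = dai_liao_beta(g_new, d_prev, s, y)
d_new = three_term_direction(g_new, d_prev, beta, p=g_new)

# Residual of the descent identity g^T d + ||g||^2; zero up to rounding.
descent_gap = dot(g_new, d_new) + dot(g_new, g_new)
```

The point of the third term is that the descent identity holds by construction, independently of the line search and of how `beta` is chosen, which is why `descent_gap` vanishes up to rounding for any `beta` here.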

References

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer Series in Operations Research. Springer, New York (2006)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2, 21–42 (1992)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49, 409–436 (1952)

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (1999)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35–58 (2006)

Perry, A.: A modified conjugate gradient algorithm. Oper. Res. 26, 1073–1078 (1978)

Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43, 87–101 (2001)

Yabe, H., Takano, M.: Global convergence properties of nonlinear conjugate gradient methods with modified secant condition. Comput. Optim. Appl. 28, 203–225 (2004)

Zhou, W., Zhang, L.: A nonlinear conjugate gradient method based on the MBFGS secant condition. Optim. Methods Softw. 21, 707–714 (2006)

Ford, J.A., Narushima, Y., Yabe, H.: Multi-step nonlinear conjugate gradient methods for unconstrained minimization. Comput. Optim. Appl. 40, 191–216 (2008)

Kobayashi, M., Narushima, Y., Yabe, H.: Nonlinear conjugate gradient methods with structured secant condition for nonlinear least squares problems. J. Comput. Appl. Math. 234, 375–397 (2010)

Yabe, H., Sakaiwa, N.: A new nonlinear conjugate gradient method for unconstrained optimization. J. Oper. Res. Soc. Jpn. 48, 284–296 (2005)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Zhang, L., Zhou, W., Li, D.H.: Global convergence of a modified Fletcher-Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006)

Zhang, L., Zhou, W., Li, D.H.: A descent modified Polak-Ribière-Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26, 629–640 (2006)

Zhang, L., Zhou, W., Li, D.H.: Some descent three-term conjugate gradient methods and their global convergence. Optim. Methods Softw. 22, 697–711 (2007)

Narushima, Y., Yabe, H., Ford, J.A.: A three-term conjugate gradient method with sufficient descent property for unconstrained optimization. SIAM J. Optim. 21, 212–230 (2011)

Zhang, J.Z., Deng, N.Y., Chen, L.H.: New quasi-Newton equation and related methods for unconstrained optimization. J. Optim. Theory Appl. 102, 147–167 (1999)

Zhang, J.Z., Xu, C.X.: Properties and numerical performance of quasi-Newton methods with modified quasi-Newton equations. J. Comput. Appl. Math. 137, 269–278 (2001)

Li, D.H., Fukushima, M.: A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 129, 15–35 (2001)

Ford, J.A., Moghrabi, I.A.: Alternative parameter choices for multi-step quasi-Newton methods. Optim. Methods Softw. 2, 357–370 (1993)

Ford, J.A., Moghrabi, I.A.: Multi-step quasi-Newton methods for optimization. J. Comput. Appl. Math. 50, 305–323 (1994)

Cheng, W.: A two-term PRP-based descent method. Numer. Funct. Anal. Optim. 28, 1217–1230 (2007)

Cheng, W., Liu, Q.: Sufficient descent nonlinear conjugate gradient methods with conjugacy condition. Numer. Algorithms 53, 113–131 (2010)

Dennis, J.E. Jr., Martinez, H.J., Tapia, R.A.: Convergence theory for the structured BFGS secant method with an application to nonlinear least squares. J. Optim. Theory Appl. 61, 161–178 (1989)

Engels, J.R., Martinez, H.J.: Local and superlinear convergence for partially known quasi-Newton methods. SIAM J. Optim. 1, 42–56 (1991)

Huschens, J.: On the use of product structure in secant methods for nonlinear least squares problems. SIAM J. Optim. 4, 108–129 (1994)

Bongartz, I., Conn, A.R., Gould, N.I.M., Toint, P.L.: CUTE: constrained and unconstrained testing environment. ACM Trans. Math. Softw. 21, 123–160 (1995)

Gould, N.I.M., Orban, D., Toint, P.L.: CUTEr web site. http://www.cuter.rl.ac.uk/

Hager, W.W., Zhang, H.: CG_DESCENT Version 1.4 User’s Guide. University of Florida (2005). http://www.math.ufl.edu/~hager/

Hager, W.W., Zhang, H.: Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32, 113–137 (2006)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Moré, J.J., Garbow, B.S., Hillstrom, K.E.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7, 17–41 (1981)

Moré, J.J., Thuente, D.J.: Line search algorithms with guaranteed sufficient decrease. ACM Trans. Math. Softw. 20, 286–307 (1994)

Nocedal, J.: http://www.ece.northwestern.edu/~nocedal/software.html

Additional information

Communicated by Liqun Qi.

Cite this article

Sugiki, K., Narushima, Y. & Yabe, H. Globally Convergent Three-Term Conjugate Gradient Methods that Use Secant Conditions and Generate Descent Search Directions for Unconstrained Optimization. J Optim Theory Appl 153, 733–757 (2012). https://doi.org/10.1007/s10957-011-9960-x

DOI: https://doi.org/10.1007/s10957-011-9960-x