Abstract

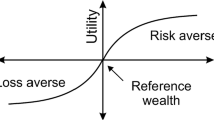

In economics and decision theory, loss aversion refers to people’s tendency to strongly prefer avoiding losses to acquiring gains. Many studies have shown that losses are psychologically more powerful than gains. For the first time, we introduce loss aversion into the decision framework of the robust newsvendor model, providing theoretical guidance and a reference decision for loss-averse decision makers when only the mean and variance of the demand distribution are known. We obtain an explicit expression for the optimal order policy that maximizes the loss-averse newsvendor’s worst-case expected utility. We find that the robust optimal order policy for the loss-averse newsvendor is quite different from that for the risk-neutral newsvendor. Furthermore, the loss aversion level has roughly the same impact on the robust optimal order quantity as on the traditional optimal order quantity.



Acknowledgments

This research was partially supported by the Fundamental Research Funds for the Central Universities of China (Grant No. CDJSK100211) and by the Scientific Research Foundation and the Project Spark of the Chongqing University of Technology.


Appendices

Appendix 1

Proof of Theorem 3.1

First, we prove the closed-form expression for U(q). The proof constructs a pair of primal-dual feasible solutions for (P) and (D) and verifies that they satisfy the complementary slackness condition; optimality then follows.

Case 1: Three-point distribution. Two of the seven bounds correspond to different three-point distributions.

(1a): Suppose that the smooth curve g(x) is tangent to the lines \(l_{0}:y=\lambda [(p-s)x-(c-s)q]\), \(l_{1}:y=(p-s)x-(c-s)q\) and \(l_{2}:y=(p-c)q\). Denote the tangent points by \(x_{0}\), \(x_{1}\) and \(x_{2}\), where \(0\le x_{0}<\frac{c-s}{p-s}q\), \(\frac{c-s}{p-s}q\le x_{1}< q\) and \(x_{2}\ge q\). By the tangency conditions, they must satisfy the following system of equations:

$$\begin{aligned}&g(x_{0})-u(\pi (q,x_{0}))=0,\quad g'(x_{0})-u'(\pi (q,x_{0}))=0,\\&g(x_{1})-u(\pi (q,x_{1}))=0,\quad g'(x_{1})-u'(\pi (q,x_{1}))=0,\\&g(x_{2})-u(\pi (q,x_{2}))=0,\quad g'(x_{2})-u'(\pi (q,x_{2}))=0. \end{aligned}$$

From the above system of equations, it is easy to get

$$\begin{aligned} x_{0}= & {} \tfrac{\lambda (c-s)-(\lambda -1)(p-c)}{\lambda (p-s)} q, \\ x_{1}= & {} \tfrac{\lambda (c-s)+(\lambda -1)(p-c)}{\lambda (p-s)} q, \\ x_{2}= & {} \tfrac{\lambda (c-s)+(\lambda +1)(p-c)}{\lambda (p-s)} q. \end{aligned}$$

It is easy to see that \(x_{0}<\frac{c-s}{p-s}q\le x_{1}<q\le x_{2}\). Moreover, \(x_{0}\ge 0\) is ensured if \(\frac{c-s}{p-s}\ge \frac{\lambda -1}{2\lambda -1}\). Note that the three-point distribution has to satisfy the mean and variance constraints. This is achieved by assigning the three points the following probabilities:

$$\begin{aligned} p_{x_{0}}= & {} \tfrac{\sigma ^{2}+(\mu -x_{1})(\mu -x_{2})}{(x_{0}-x_{1})(x_{0}-x_{2})},\\ p_{x_{2}}= & {} \tfrac{\sigma ^{2}+(\mu -x_{0})(\mu -x_{1})}{(x_{2}-x_{0})(x_{2}-x_{1})},\\ p_{x_{1}}= & {} 1-p_{x_{0}}-p_{x_{2}}. \end{aligned}$$

For the solution \(F^{*}(x)\) (the three-point distribution defined by \((x_{i},p_{x_{i}}), i=0,1,2\)) to be primal feasible, we need to ensure that the values of \(p_{x_{i}}\) are nonnegative. This is ensured if \(\max \{(x_{1}-\mu )(\mu -x_{0}),(x_{2}-\mu )(\mu -x_{1})\}\le \sigma ^{2}\le (x_{2}-\mu )(\mu -x_{0})\). With the values of \(x_{0}\), \(x_{1}\) and \(x_{2}\), it is easy to check that

$$\begin{aligned} y_{0}^{*}= & {} (p-c)q+y_{2}^{*}x_{2}^{2}=(p-c)q-\tfrac{[\lambda (p-s)+(p-c)]^{2}q}{4\lambda (p-c)},\\ y_{1}^{*}= & {} -2y_{2}^{*}x_{2}=\tfrac{(p-s)[(p-c)+\lambda (p-s)]}{2(p-c)},\\ y_{2}^{*}= & {} \tfrac{p-s}{2(x_{1}-x_{2})}=\tfrac{-\lambda (p-s)^{2}}{4(p-c)q}, \end{aligned}$$

is a dual feasible solution and satisfies the complementary slackness condition with the primal feasible solution \(F^{*}(x)\) identified above. Therefore (P) and (D) have the same optimal objective value, which is equal to

$$\begin{aligned} U(q)= & {} \lambda [(p-s)x_{0}-(c-s)q]p_{x_{0}}+[(p-s)x_{1}-(c-s)q]p_{x_{1}}\\&+\,(p-c)qp_{x_{2}}\\= & {} y_{0}^{*} +\mu y_{1}^{*}+ (\mu ^{2}+\sigma ^{2}) y_{2}^{*}\\= & {} \tfrac{(p-s)[\lambda (p-s)+(p-c)]\mu }{2(p-c)}+\big [(p-c)-\tfrac{(\lambda (p-s)+(p-c))^{2}}{4\lambda (p-c)}\big ]q\\&-\tfrac{\lambda (p-s)^{2}\big (\mu ^{2}+\sigma ^{2}\big )}{4(p-c)q}. \end{aligned}$$
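The primal-dual pair for case (1a) can be checked numerically. The sketch below is only an illustration: the function name `check_case_1a`, the parameter values and the grid are assumptions made here, and the utility is taken to be the kinked piecewise-linear function implied by the lines \(l_{0}\), \(l_{1}\) and \(l_{2}\). It evaluates the primal objective under the three-point distribution, the dual objective \(y_{0}^{*}+\mu y_{1}^{*}+(\mu ^{2}+\sigma ^{2})y_{2}^{*}\), and the smallest gap between the utility and g(x) on a grid.

```python
def check_case_1a(p, c, s, lam, mu, sigma, q):
    """Return primal value, dual value, probabilities and min slack for case (1a)."""
    # Tangent points x0 < x1 < x2 from the closed-form expressions.
    denom = lam * (p - s)
    x0 = (lam * (c - s) - (lam - 1) * (p - c)) / denom * q
    x1 = (lam * (c - s) + (lam - 1) * (p - c)) / denom * q
    x2 = (lam * (c - s) + (lam + 1) * (p - c)) / denom * q

    # Three-point probabilities that reproduce mean mu and variance sigma^2.
    var = sigma ** 2
    p0 = (var + (mu - x1) * (mu - x2)) / ((x0 - x1) * (x0 - x2))
    p2 = (var + (mu - x0) * (mu - x1)) / ((x2 - x0) * (x2 - x1))
    p1 = 1.0 - p0 - p2

    def utility(x):
        # Assumed form: profit for demand x, with losses scaled by lam.
        profit = (p - s) * min(x, q) - (c - s) * q
        return profit if profit >= 0 else lam * profit

    primal = p0 * utility(x0) + p1 * utility(x1) + p2 * utility(x2)

    # Dual solution built from the tangency conditions.
    y2 = (p - s) / (2 * (x1 - x2))
    y1 = -2 * y2 * x2
    y0 = (p - c) * q + y2 * x2 ** 2
    dual = y0 + mu * y1 + (mu ** 2 + var) * y2

    # Dual feasibility: g(x) must not exceed the utility for x >= 0.
    grid = [i * 0.5 for i in range(int(4 * q) + 1)]  # points 0, 0.5, ..., 2q
    min_slack = min(utility(x) - (y0 + y1 * x + y2 * x * x) for x in grid)
    return primal, dual, (p0, p1, p2), min_slack


# Illustrative parameters chosen to satisfy the conditions of case (1a).
primal, dual, probs, min_slack = check_case_1a(
    p=10.0, c=6.0, s=2.0, lam=2.0, mu=80.0, sigma=30.0, q=100.0)
print(primal, dual, probs, min_slack)
```

For these values both objectives come out at approximately 166, the three probabilities are nonnegative, and the slack is zero (up to rounding) only at the tangent points, which is what strong duality and complementary slackness require.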

(1b): Suppose that g(x) intersects \(l_{0}\) at the origin and is tangent to \(l_{1}\) and \(l_{2}\). The proof is similar to that of (1a).

Case 2: Two-point distribution. The remaining five bounds correspond to different two-point distributions.

(2a): Suppose that g(x) is tangent to the lines \(l_{0}\) and \(l_{1}\) only. Let the tangent points be \(\bar{x}_{0}\) and \(\bar{x}_{1}\), respectively. By the tangency conditions, we get

$$\begin{aligned} \bar{x}_{0}+\bar{x}_{1}=2\tfrac{c-s}{p-s}q. \qquad (2) \end{aligned}$$


For the solution to be primal feasible, we need to construct the two-point distribution \(F^{*}(x)\) as follows by using a positive variable \(\tau \):

Substituting (3) into the above Eq. (2), we deduce that

It is easy to see that \(\bar{x}_{0}<\frac{c-s}{p-s}q<\bar{x}_{1}\) and to prove the following results:

1. \(\frac{c-s}{p-s}\ge \frac{\lambda -1}{2\lambda -1}\) and \(\sigma ^{2}<(x_{1}-\mu )(\mu -x_{0})\Rightarrow \sigma ^{2}<(\omega -\mu )(\mu -\hat{x}_{0})\);

2. \(\frac{c-s}{p-s}< \frac{\lambda -1}{2\lambda -1}\) and \(\sigma ^{2}<(\omega -\mu )(\mu -\hat{x}_{0})\Rightarrow \sigma ^{2}<(x_{1}-\mu )(\mu -x_{0})\);

3. \(\sigma ^{2}<(\omega -\mu )(\mu -\hat{x}_{0})\Leftrightarrow \bar{x}_{0}>0\);

4. \(\sigma ^{2}<(x_{1}-\mu )(\mu -x_{0})\Leftrightarrow \sqrt{(\mu -\frac{c-s}{p-s}q)^{2}+\sigma ^{2}}<\frac{\lambda -1}{\lambda }\cdot \frac{p-c}{p-s}q\Rightarrow \bar{x}_{1}<q\).
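The two tangent points can also be recovered directly from Eq. (2) and the moment constraints, without the auxiliary variable \(\tau \). The following is a sketch under that assumption, not the authors' parametrization; the symbols \(a\) and \(d\) are introduced here for illustration only. It uses the fact that a two-point distribution on \(\bar{x}_{0}<\mu <\bar{x}_{1}\) with mean \(\mu \) has variance \((\bar{x}_{1}-\mu )(\mu -\bar{x}_{0})\).

$$\begin{aligned} a:= & {} \tfrac{c-s}{p-s}q,\qquad \bar{x}_{0}=a-d,\quad \bar{x}_{1}=a+d \quad \hbox {(by Eq. (2))},\\ \sigma ^{2}= & {} (\bar{x}_{1}-\mu )(\mu -\bar{x}_{0})=d^{2}-(\mu -a)^{2}\;\Longrightarrow \; d=\sqrt{(\mu -a)^{2}+\sigma ^{2}},\\ p_{\bar{x}_{0}}= & {} \tfrac{\bar{x}_{1}-\mu }{2d},\qquad p_{\bar{x}_{1}}=\tfrac{\mu -\bar{x}_{0}}{2d}. \end{aligned}$$

In this form, \(\bar{x}_{1}<q\) is equivalent to \(d<\frac{p-c}{p-s}q\), which follows from the bound \(d<\frac{\lambda -1}{\lambda }\cdot \frac{p-c}{p-s}q\) in result 4.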

The corresponding dual solution, which satisfies the complementary slackness condition with the primal feasible solution \(F^{*}(x)\), is

In this case, we still need to guarantee that the solution \((y_{0}^{*},y_{1}^{*},y_{2}^{*})\) also satisfies the dual feasibility condition by checking \(y_{0}^{*}+y_{1}^{*}x+y_{2}^{*}x^{2}<(p-c)q\) for all \(x\ge q\). Let \(\varDelta \) be the discriminant of the quadratic function \(y_{2}^{*}x^{2}+y_{1}^{*}x+[y_{0}^{*}-(p-c)q]\). If \(\frac{c-s}{p-s}\ge \frac{\lambda -1}{2\lambda -1}\) and \(\sigma ^{2}<(x_{1}-\mu )(\mu -x_{0})\), or if \(\frac{c-s}{p-s}<\frac{\lambda -1}{2\lambda -1}\) and \(\sigma ^{2}<(\omega -\mu )(\mu -\hat{x}_{0})\), then we have

Since \(\varDelta <0\) and \(y_{2}^{*}<0\), the dual feasibility condition is satisfied. Thus the optimality holds by this pair of solutions.

(2b): Suppose that g(x) is tangent to the lines \(l_{1}\) and \(l_{2}\). The proof is similar to that of (2a).

(2c): Suppose that g(x) is tangent to \(l_{0}\) and \(l_{2}\). The proof is similar to that of (2a).

(2d): Suppose that g(x) intersects \(l_{0}\) at the origin and is tangent to \(l_{1}\). The proof is similar to that of (2a).

(2e): Suppose that g(x) intersects \(l_{0}\) at the origin and is tangent to \(l_{2}\). The proof is similar to that of (2a).

Second, we prove the differentiability of U(q) on \([0,+\infty )\). We denote by \(U_{1a}(q)\), \(U_{1b}(q)\), \(U_{2a}(q)\), \(U_{2b}(q)\), \(U_{2c}(q)\), \(U_{2d}(q)\), \(U_{2e}(q)\), respectively, the tight lower bound U(q) in cases (1a), (1b), (2a), (2b), (2c), (2d), (2e) of Theorem 3.1.

Since U(q) is a piecewise function made up of seven differentiable pieces, we only need to show differentiability at the adjoining points. We give the proof for the adjoining point between case (1b) and case (2e) only; the other proofs are similar. From \(\sigma ^{2}=(\hat{x}_{2}(q)-\mu )(\mu -\hat{x}_{0}(q))\), we can get that the adjoining point between case (1b) and case (2e) is

It is easy to verify that

and

Finally, we prove the concavity of U(q) on \([0,+\infty )\).

(1a): For any \(q>0\), we can calculate that

$$\begin{aligned} {U}_{1a}^{''}(q)=-\tfrac{\lambda (p-s)^{2}(\mu ^{2}+\sigma ^{2})}{2(p-c)q^{3}}<0. \end{aligned}$$

So \(U_{1a}(q)\) is concave for \(q>0\).

(1b), (2a), (2b), (2c): These proofs are similar to that of (1a).

(2d): It is obvious that \(U_{2d}(q)\) is a linear function, so \(U_{2d}(q)\) is concave.

(2e): This proof is similar to that of (2d).

Since U(q) is differentiable and each of its seven pieces is concave, \(U'(q)\) is continuous and nonincreasing on every piece and hence nonincreasing on the whole domain. Therefore U(q) is concave on \([0,+\infty )\). \(\square \)

Appendix 2

Proof of Theorem 3.2

(1a): Setting

$$\begin{aligned} {U}_{1a}^{'}(q)=(p-c)-\tfrac{[\lambda (c-s)+(\lambda +1)(p-c)]^{2}}{4\lambda (p-c)}+\tfrac{\lambda (p-s)^{2}(\mu ^{2}+\sigma ^{2})}{4(p-c)q^{2}}=0, \end{aligned}$$

we can get a positive stationary point

$$\begin{aligned} q_{1a}=\lambda (p-s)\sqrt{\tfrac{\mu ^{2}+\sigma ^{2}}{(\lambda (c-s)+(\lambda +1)(p-c))^{2}-4\lambda (p-c)^{2}}}. \end{aligned}$$

Since \(U_{1a}(q)\) is concave, \(\hbox {arg}\max \limits _{q}U_{1a}(q)=q_{1a}\). Thus, if \(q_{1a}\) satisfies conditions (1a), then \(q^{*}=q_{1a}\).
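The stationary point can be sanity-checked by plugging the closed form for \(q_{1a}\) back into \(U_{1a}'(q)\). The following is a minimal sketch; the function names and the parameter values are illustrative assumptions, and the check does not test whether \(q_{1a}\) also satisfies conditions (1a).

```python
import math


def u1a_prime(q, p, c, s, lam, mu, sigma):
    """First derivative of U_1a with respect to the order quantity q."""
    k = lam * (c - s) + (lam + 1) * (p - c)
    return ((p - c)
            - k ** 2 / (4 * lam * (p - c))
            + lam * (p - s) ** 2 * (mu ** 2 + sigma ** 2) / (4 * (p - c) * q ** 2))


def q1a(p, c, s, lam, mu, sigma):
    """Closed-form stationary point of U_1a."""
    k = lam * (c - s) + (lam + 1) * (p - c)
    return lam * (p - s) * math.sqrt(
        (mu ** 2 + sigma ** 2) / (k ** 2 - 4 * lam * (p - c) ** 2))


# Illustrative parameter values (not taken from the paper).
params = dict(p=10.0, c=6.0, s=2.0, lam=2.0, mu=80.0, sigma=30.0)
q_star = q1a(**params)

# The derivative should vanish at q_star and change sign from + to - around it.
print(q_star, u1a_prime(q_star, **params))
```

Because \(U_{1a}\) is concave, the derivative is positive to the left of `q_star` and negative to the right, so the stationary point is the maximizer.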

(1b): This proof is similar to that of (1a).

(2a): For any \(q\ge 0\),

$$\begin{aligned} {U}_{2a}^{'}(q)=-\tfrac{(\lambda +1)(c-s)}{2}\Big [1-\tfrac{(\lambda -1)(\mu -\frac{c-s}{p-s}q)}{(\lambda +1)\sqrt{(\mu -\frac{c-s}{p-s}q)^{2}+\sigma ^{2}}}\Big ]<0. \end{aligned}$$

The inequality holds because the fraction inside the brackets has absolute value less than 1. Hence \(U_{2a}(q)\) is strictly decreasing in q on \([0,+\infty )\), and \(\hbox {arg}\max \limits _{q}U_{2a}(q)=0\). But \(q=0\) does not satisfy condition (2a), so \(q^{*}\) cannot be attained in this case.

(2b): Setting

$$\begin{aligned} {U}_{2b}^{'}(q)=\tfrac{(p-c)-(c-s)}{2}+\tfrac{(p-s)(\mu -q)}{2\sqrt{(\mu -q)^{2}+\sigma ^{2}}}=0, \end{aligned}$$

we can get a unique stationary point

$$\begin{aligned} q_{2b}=\mu +\tfrac{\sigma }{2}\Big (\sqrt{\tfrac{p-c}{c-s}}-\sqrt{\tfrac{c-s}{p-c}}\Big ). \end{aligned}$$

If \(\tfrac{\mu }{\sigma }<\tfrac{(c-s)-(p-c)}{2\sqrt{(p-c)(c-s)}}\), then \(q_{2b}<0\). Since \(U_{2b}(q)\) is concave, \(\hbox {arg}\max \limits _{q\ge 0}U_{2b}(q)=0\). But \(q=0\) does not satisfy condition (2b). If \(\tfrac{\mu }{\sigma }\ge \tfrac{(c-s)-(p-c)}{2\sqrt{(p-c)(c-s)}}\), then \(q_{2b}\ge 0\). Since \(U_{2b}(q)\) is concave, \(\hbox {arg}\max \limits _{q}U_{2b}(q)=q_{2b}\). To sum up, if \(q_{2b}\) satisfies conditions (2b), then \(q^{*}=q_{2b}\).
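A short numerical check of case (2b) is sketched below. The function names and parameter values are illustrative assumptions. The helper `ratio_where_q2b_is_zero` is obtained here by setting \(q_{2b}=0\) in the closed form and solving for \(\mu /\sigma \); it is a derived quantity, not a formula quoted from the text.

```python
import math


def u2b_prime(q, p, c, s, mu, sigma):
    """First derivative of U_2b with respect to q."""
    return (((p - c) - (c - s)) / 2
            + (p - s) * (mu - q) / (2 * math.sqrt((mu - q) ** 2 + sigma ** 2)))


def q2b(p, c, s, mu, sigma):
    """Closed-form stationary point of U_2b."""
    return mu + sigma / 2 * (math.sqrt((p - c) / (c - s))
                             - math.sqrt((c - s) / (p - c)))


def ratio_where_q2b_is_zero(p, c, s):
    """Value of mu/sigma at which q_2b = 0 (derived by solving q_2b = 0)."""
    return 0.5 * (math.sqrt((c - s) / (p - c)) - math.sqrt((p - c) / (c - s)))


# Illustrative low-margin product: p - c = 2, c - s = 6.
p, c, s, sigma = 10.0, 8.0, 2.0, 20.0
q_star = q2b(p, c, s, mu=100.0, sigma=sigma)
ratio = ratio_where_q2b_is_zero(p, c, s)

print(q_star, u2b_prime(q_star, p, c, s, 100.0, sigma))
print(ratio, q2b(p, c, s, mu=ratio * sigma, sigma=sigma))
```

The first line shows that the derivative vanishes at the stationary point; the second shows that \(q_{2b}\) crosses zero exactly when \(\mu /\sigma \) equals the derived ratio.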

(2c): This proof is similar to that of (2b).

(2d): It is easy to verify that \(U_{2d}(q)\) is monotone decreasing in q, so \(\hbox {arg}\max \limits _{q}U_{2d}(q)=0\). But \(q=0\) does not satisfy condition (2d), so \(q^{*}\) cannot be attained in this case.

(2e): It is obvious that

$$\begin{aligned}&U_{2e}(q)\hbox { is monotone increasing in }q,\quad \hbox {if}\; \big (\tfrac{\mu }{\sigma }\big )^{2}>\frac{\lambda (c-s)}{p-c},\\&U_{2e}(q)\hbox { is monotone decreasing in }q, \quad \hbox {if}\; \big (\tfrac{\mu }{\sigma }\big )^{2}\le \frac{\lambda (c-s)}{p-c}. \end{aligned}$$

If \(\big (\tfrac{\mu }{\sigma }\big )^{2}>\frac{\lambda (c-s)}{p-c}\), \(q^{*}\) cannot be attained in this case.

If \(\big (\tfrac{\mu }{\sigma }\big )^{2}\le \frac{\lambda (c-s)}{p-c}\), then \(\hbox {arg}\max \limits _{q\in [0,+\infty )}U_{2e}(q)=q_{2e}=0\). It is obvious that \(q_{2e}\) satisfies condition (2e), so \(q^{*}=q_{2e}\). \(\Box \)
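The monotonicity threshold in case (2e) can be illustrated with a small sketch. It rests on an assumption made here rather than a formula stated above: the worst-case distribution in (2e) is taken to be the two-point distribution on \(0\) and \((\mu ^{2}+\sigma ^{2})/\mu \), with the upper point at least q, which is the only two-point distribution with support point 0 that matches the given mean and variance. The function name `u2e` is hypothetical.

```python
def u2e(q, p, c, s, lam, mu, sigma):
    """Expected utility under the assumed two-point distribution for case (2e)."""
    second_moment = mu ** 2 + sigma ** 2
    prob_zero = sigma ** 2 / second_moment   # mass at demand 0
    prob_high = mu ** 2 / second_moment      # mass at (mu^2 + sigma^2) / mu
    loss = lam * (-(c - s) * q)              # nothing sold, all units salvaged
    gain = (p - c) * q                       # demand at least q, all units sold
    return prob_zero * loss + prob_high * gain


# Illustrative values: lam * (c - s) / (p - c) = 2.
base = dict(p=10.0, c=6.0, s=2.0, lam=2.0)

# (mu / sigma)^2 = 64 / 9 > 2: utility increases with q.
rising = u2e(2.0, mu=80.0, sigma=30.0, **base) - u2e(1.0, mu=80.0, sigma=30.0, **base)

# (mu / sigma)^2 = 1 <= 2: utility decreases with q.
falling = u2e(2.0, mu=30.0, sigma=30.0, **base) - u2e(1.0, mu=30.0, sigma=30.0, **base)

print(rising, falling)
```

Under this assumption the utility is linear in q with slope proportional to \((p-c)\mu ^{2}-\lambda (c-s)\sigma ^{2}\), so its sign flips exactly at \(\big (\tfrac{\mu }{\sigma }\big )^{2}=\frac{\lambda (c-s)}{p-c}\).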

Appendix 3

Proof of Corollary 3.1

Let \(\lambda =1\). Then \(\frac{c-s}{p-s}\ge \frac{\lambda -1}{2\lambda -1}=0\), \(x_{0}(q)=x_{1}(q)=\frac{c-s}{p-s}q\), \(x_{2}(q)=\frac{(c-s)+2(p-c)}{p-s}q\) and \(q_{2b}=q_{2c}=\mu +\tfrac{\sigma }{2}\Big (\sqrt{\tfrac{p-c}{c-s}}-\sqrt{\tfrac{c-s}{p-c}}\Big )\). Furthermore, the five cases of the robust optimal order quantity \(q^{*}\) in Theorem 3.2 reduce to the following three cases:

(1a): If \(\sigma ^{2}=(x_{2}(q_{1a})-\mu )(\mu -x_{0}(q_{1a}))\), then \(q^{*}=q_{1a}\).

(2b), (2c): If \(\sigma ^{2}<(x_{2}(q_{2b})-\mu )(\mu -x_{1}(q_{2b}))\), or if \((x_{2}(q_{2b})-\mu )(\mu -x_{0}(q_{2b}))<\sigma ^{2}\le (\nu (q_{2b})-\mu )(\mu -\hat{x}_{0}(q_{2b}))\), then \(q^{*}=q_{2b}\).

(2e): If \(\big (\tfrac{\mu }{\sigma }\big )^{2}\le \frac{c-s}{p-c}\), then \(q^{*}=q_{2e}=0\).

Moreover, it is easy to verify that

1. \(\big (\tfrac{\mu }{\sigma }\big )^{2}>\frac{c-s}{p-c}\Rightarrow \sigma ^{2}\le (\nu (q_{2b})-\mu )(\mu -\hat{x}_{0}(q_{2b}))\).

2. From result 2 in the proof of Theorem 3.1 (2b), we can get

$$\begin{aligned} \sigma ^{2}=(x_{2}(q_{2b})-\mu )(\mu -x_{1}(q_{2b}))\Leftrightarrow \mu =\tfrac{[3(p-c)+(c-s)]\sigma }{2(p-c)}\sqrt{\tfrac{c-s}{p-c}}. \end{aligned}$$

3. Substituting \(\mu =\tfrac{[3(p-c)+(c-s)]\sigma }{2(p-c)}\sqrt{\tfrac{c-s}{p-c}}\) into \(q_{1a}\) and \(q_{2b}\), we obtain

$$\begin{aligned} q_{1a}=q_{2b}=\tfrac{(p-s)^{2}\sigma }{2(p-c)\sqrt{(p-c)(c-s)}}. \end{aligned}$$
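Result 3 is easy to confirm numerically. The sketch below uses illustrative parameter values and hypothetical function names; it evaluates \(q_{1a}\) with \(\lambda =1\) and \(q_{2b}\) at the boundary value of \(\mu \) from result 2 and compares both with the stated common value.

```python
import math


def q1a(p, c, s, lam, mu, sigma):
    """Closed-form stationary point of U_1a (Appendix 2, case (1a))."""
    k = lam * (c - s) + (lam + 1) * (p - c)
    return lam * (p - s) * math.sqrt(
        (mu ** 2 + sigma ** 2) / (k ** 2 - 4 * lam * (p - c) ** 2))


def q2b(p, c, s, mu, sigma):
    """Closed-form stationary point of U_2b (Appendix 2, case (2b))."""
    return mu + sigma / 2 * (math.sqrt((p - c) / (c - s))
                             - math.sqrt((c - s) / (p - c)))


def boundary_mu(p, c, s, sigma):
    """Mean at which the (1a) and (2b) regions meet when lam = 1 (result 2)."""
    return (3 * (p - c) + (c - s)) * sigma / (2 * (p - c)) * math.sqrt((c - s) / (p - c))


def common_value(p, c, s, sigma):
    """Common order quantity claimed in result 3."""
    return (p - s) ** 2 * sigma / (2 * (p - c) * math.sqrt((p - c) * (c - s)))


# Illustrative values (not taken from the paper).
p, c, s, sigma = 10.0, 7.0, 2.0, 20.0
mu = boundary_mu(p, c, s, sigma)

print(q1a(p, c, s, 1.0, mu, sigma), q2b(p, c, s, mu, sigma), common_value(p, c, s, sigma))
```

All three printed numbers agree up to rounding, so the two candidate order quantities join continuously at the boundary between the cases.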

To sum up, from the results 1, 2, 3, we can obtain that

\(\square \)


Cite this article

Yu, H., Zhai, J. & Chen, GY. Robust Optimization for the Loss-Averse Newsvendor Problem. J Optim Theory Appl 171, 1008–1032 (2016). https://doi.org/10.1007/s10957-016-0870-9


DOI: https://doi.org/10.1007/s10957-016-0870-9