Abstract

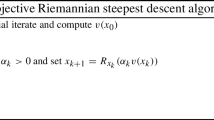

We study the convergence of an inexact steepest descent algorithm (employing general step sizes) for multiobjective optimization on general Riemannian manifolds (without curvature constraints). Under the assumption of local convexity/quasi-convexity, local/global convergence results are established. Furthermore, without the assumption of local convexity/quasi-convexity but under an error bound-like condition, local/global convergence results and convergence rate estimates are presented, which are new even in the linear space setting. Our results improve/extend the corresponding ones in (Wang et al. in SIAM J Optim 31(1):172–199, 2021) from scalar optimization problems on Riemannian manifolds to multiobjective ones. Finally, for the special case in which the inexact steepest descent algorithm employs the Armijo rule, our results improve/extend the corresponding ones in (Ferreira et al. in J Optim Theory Appl 184:507–533, 2020) by relaxing the curvature constraints.
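To make the basic iteration concrete, the following is a minimal Python sketch of a multiobjective steepest descent step combined with a multiobjective Armijo rule on one specific Riemannian manifold, the unit sphere S² in R³. It is an illustration under stated assumptions, not the authors' algorithm: it handles two objectives only, computes the steepest descent direction exactly as the negative of the minimum-norm element of the convex hull of the two Riemannian gradients (the classical Fliege–Svaiter construction), and uses the sphere's exponential map to move along geodesics. The paper's inexactness criterion and its general step-size rules are not reproduced. All function names, parameters (`beta`, `shrink`, `tol`, `t_min`) and the example objectives are hypothetical.

```python
import numpy as np


def proj_tangent(x, g):
    """Project an ambient gradient g onto the tangent space of the unit sphere at x."""
    return g - np.dot(x, g) * x


def exp_map(x, v):
    """Exponential map of the unit sphere: move along the great circle from x in direction v."""
    nv = np.linalg.norm(v)
    if nv < 1e-16:
        return x.copy()
    return np.cos(nv) * x + np.sin(nv) * (v / nv)


def descent_direction(g1, g2):
    """Two-objective steepest descent direction v = -(lam*g1 + (1 - lam)*g2),
    with lam in [0, 1] chosen so that lam*g1 + (1 - lam)*g2 is the
    minimum-norm point of the segment conv{g1, g2}."""
    d = g1 - g2
    dd = np.dot(d, d)
    lam = 0.5 if dd < 1e-30 else float(np.clip(np.dot(-d, g2) / dd, 0.0, 1.0))
    return -(lam * g1 + (1.0 - lam) * g2)


def steepest_descent_sphere(fs, grads, x0, beta=1e-4, shrink=0.5,
                            tol=1e-6, max_iter=500, t_min=1e-12):
    """Multiobjective steepest descent on the unit sphere with a multiobjective Armijo rule.
    fs, grads: lists of two objectives and their ambient (Euclidean) gradients."""
    assert len(fs) == len(grads) == 2, "this sketch handles two objectives only"
    x = x0 / np.linalg.norm(x0)
    vnorm = np.inf
    for _ in range(max_iter):
        rg = [proj_tangent(x, g(x)) for g in grads]  # Riemannian gradients
        v = descent_direction(*rg)
        vnorm = np.linalg.norm(v)
        if vnorm < tol:  # approximately Pareto critical
            break
        fx = [f(x) for f in fs]
        slopes = [np.dot(gi, v) for gi in rg]  # each slope is <= -||v||^2 < 0
        t = 1.0
        # Backtrack until every objective decreases sufficiently at the same step size.
        while t > t_min:
            y = exp_map(x, t * v)
            if all(f(y) <= f0 + beta * t * s for f, f0, s in zip(fs, fx, slopes)):
                break
            t *= shrink
        x = exp_map(x, t * v)
    return x, vnorm
```

As a usage example, take f_i(x) = ||x - p_i||² restricted to the sphere with p1 = (1, 0, 0) and p2 = (0, 1, 0), starting from (1, 1, 1)/√3; the iterates should approach the Pareto critical arc joining p1 and p2 (here, by symmetry, the point (1, 1, 0)/√2) while both objectives decrease. The exact two-objective direction is used because it guarantees that each slope is at most -||v||², so the backtracking loop terminates; an inexact direction would need an explicit condition preserving this descent property.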

References

Absil, P.A., Mahony, R., Andrews, B.: Convergence of the iterates of descent methods for analytic cost functions. SIAM J. Optim. 16, 531–547 (2005)

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization algorithms on matrix manifolds. Princeton University Press, Princeton (2008)

Bento, G.C., Ferreira, O.P., Oliveira, P.R.: Unconstrained steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 154, 88–107 (2012)

Bento, G.C., Neto, J.X.D.C., Santo, P.S.M.: An inexact steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 159, 108–124 (2013)

Bishop, R.L., Crittenden, R.J.: Geometry of Manifolds. Academic Press, New York and London (1964)

Bonnel, H., Iusem, A.N., Svaiter, B.F.: Proximal methods in vector optimization. SIAM J. Optim. 15(4), 953–970 (2005)

Chen, G.Y., Yang, X.H.X.: Vector Optimization. Springer, Berlin Heidelberg (2005)

Das, I., Dennis, J.E.: Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J. Optim. 8(3), 631–657 (1998)

do Carmo, M.P.: Riemannian Geometry. Birkhäuser Boston, Boston (1992)

Drummond, L.M.G., Iusem, A.N.: A projected gradient method for vector optimization problems. Comput. Optim. Appl. 28(1), 5–29 (2004)

Drummond, L.M.G., Maculan, N., Svaiter, B.F.: On the choice of parameters for the weighting method in vector optimization. Math. Program. 111, 201–216 (2008)

Drummond, L.M.G., Svaiter, B.F.: A steepest descent method for vector optimization. J. Comput. Appl. Math. 175(2), 395–414 (2005)

Ermol’ev, Y.M.: On the method of generalized stochastic gradients and quasi-Fejér sequences. Cybernetics 5, 208–220 (1969)

Ferreira, O.P., Lucambio Pérez, L.R., Németh, S.Z.: Singularities of monotone vector fields and an extragradient-type algorithm. J. Global Optim. 31, 133–151 (2005)

Ferreira, O.P., Louzeiro, M.S., Prudente, L.F.: Iteration-Complexity and asymptotic analysis of steepest descent method for multiobjective optimization on Riemannian manifolds. J. Optim. Theory Appl. 184, 507–533 (2020)

Fliege, J., Drummond, L.M.G., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods Oper. Res. 51(3), 479–494 (2000)

Fonseca, C.M., Fleming, P.J.: An overview of evolutionary algorithms in multiobjective optimization. Evol. Comput. 3(1), 1–16 (1995)

Fukuda, E.H., Drummond, L.M.G.: On the convergence of the projected gradient method for vector optimization. Optim. 60(8–9), 1009–10,221 (2011)

Fukuda, E.H., Drummond, L.M.G.: Inexact projected gradient method for vector optimization. Comput. Optim. Appl. 54(3), 493–493 (2013)

Geoffrion, A.M.: Proper efficiency and the theory of vector maximization. J. Math. Anal. Appl. 22(3), 618–630 (1968)

Jahn, J.: Scalarization in vector optimization. Math. Program. 29, 203–218 (1984)

Li, C., Mordukhovich, B.S., Wang, J., Yao, J.C.: Weak sharp minima on Riemannian manifolds. SIAM J. Optim. 21(4), 1523–1560 (2011)

Li, C., Yao, J.C.: Variational inequalities for set-valued vector fields on Riemannian manifolds: convexity of the solution set and the proximal point algorithm. SIAM J. Control. Optim. 50(4), 2486–2514 (2012)

Li, S., Li, C., Liou, Y., Yao, J.: Existence of solutions for variational inequalities on Riemannian manifolds. Nonlinear Anal. 71(11), 5695–5706 (2009)

Łojasiewicz, S.: Ensembles semi-analytiques. Inst. Hautes Études Sci., France (1965)

Luc, D.T.: Theory of vector optimization. Lecture Notes in Economics and Mathematical Systems, Springer Berlin Heidelberg, New York (1989)

Mahony, R.E.: The constrained Newton method on Lie group and the symmetric eigenvalue problem. Linear Algebra Appl. 248, 67–89 (1996)

Miettinen, K.M.: Nonlinear Multiobjective Optimization. Kluwer, Norwell (1999)

Miller, S.A., Malick, J.: Newton methods for nonsmooth convex minimization: connections among U-Lagrangian, Riemannian Newton and SQP methods. Math. Program. 104, 609–633 (2005)

Papa Quiroz, E.A., Quispe, E.M., Oliveira, P.R.: Steepest descent method with a generalized Armijo search for quasiconvex functions on Riemannian manifolds. J. Math. Anal. Appl. 341(1), 467–477 (2008)

Polyak, B.T.: Introduction to Optimization. Moscow (1983)

Ryu, J.H., Kim, S.: A derivative-free trust-region method for biobjective optimization. SIAM J. Optim. 24, 334–362 (2014)

Sakai, T.: Riemannian Geometry. Transl. Math. Monogr., AMS, Providence (1996)

Smith, S.T.: Geometric optimization methods for adaptive filtering. Harvard University, Cambridge, Massachusetts (1994)

Udriste, C.: Convex functions and optimization methods on Riemannian manifolds. Math. Appl., Kluwer, Dordrecht (1994)

Wang, J.H., Hu, Y., Yu, C.K.W., Li, C., Yang, X.: Extended Newton methods for multiobjective optimization: majorizing function technique and convergence analysis. SIAM J. Optim. 29, 2388–2421 (2019)

Wang, J.H., López, G., Martín-Márquez, V., Li, C.: Monotone and accretive vector fields on Riemannian manifolds. J. Optim. Theory Appl. 146, 691–708 (2010)

Wang, J.H., Wang, X.M., Li, C., Yao, J.C.: Convergence analysis of gradient algorithms on Riemannian manifolds without curvature constraints and application to Riemannian mass. SIAM J. Optim. 31(1), 172–199 (2021)

Wang, X.M.: Subgradient algorithms on Riemannian manifolds of lower bounded curvatures. Optim. 67(1), 179–194 (2018)

Wang, X.M.: An inexact descent algorithm for multicriteria optimizations on general Riemannian manifolds. J. Nonlin. Convex Anal. 21(10), 2367–2377 (2020)

Yang, Y.: Globally convergent optimization algorithms on Riemannian manifolds: uniform framework for unconstrained and constrained optimization. J. Optim. Theory Appl. 132(2), 245–265 (2007)

Tanabe, H., Fukuda, E.H., Yamashita, N.: Convergence rates analysis of a multiobjective proximal gradient method. Optim. Lett. 17(2), 333–350 (2022)

Yamaguchi, T.: Locally geodesically quasiconvex functions on complete Riemannian manifolds. Trans. Am. Math. Soc. 298(1), 307–330 (1986)

Acknowledgements

The authors are indebted to the handling editor and two anonymous referees for their very helpful remarks and comments, which allowed us to improve the original presentation. Research of the first author was supported in part by the National Natural Science Foundation of China (Grant 12161017) and the Guizhou Provincial Natural Science Foundation of China (Grant ZK[2022]110). Research of the second author was supported in part by the National Natural Science Foundation of China (Grant 12171131). Research of the third author was supported in part by the National Natural Science Foundation of China (Grant 11971429).

Additional information

Communicated by Sándor Zoltán Németh.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, X.M., Wang, J.H. & Li, C. Convergence of Inexact Steepest Descent Algorithm for Multiobjective Optimizations on Riemannian Manifolds Without Curvature Constraints. J Optim Theory Appl 198, 187–214 (2023). https://doi.org/10.1007/s10957-023-02235-y

DOI: https://doi.org/10.1007/s10957-023-02235-y

Keywords

- Riemannian manifold

- Sectional curvature

- Multiobjective optimization

- Inexact descent algorithm

- Full convergence

- Convergence rate