Abstract

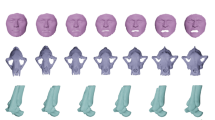

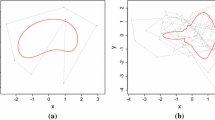

This work introduces a new Riemannian optimization method for registering open parameterized surfaces using a constrained global optimization approach. The proposed formulation rests on a rigorous theoretical foundation and guarantees the existence and uniqueness of a global solution. We also propose a new Bayesian clustering approach in which local distributions of surfaces are modeled with spherical Gaussian processes. The posterior density is maximized using Hamiltonian dynamics, which yield a natural and computationally efficient spherical Hamiltonian Monte Carlo sampler. Experimental results demonstrate the efficiency of the proposed method.

Data Availability

Datasets and MATLAB code generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Adouani, I., Samir, C.: A constructive approximation of interpolating Bézier curves on Riemannian symmetric spaces. J. Optim. Theory Appl. 187, 158–180 (2020)

Bernal, J., Dogan, G., Hagwood, C.R.: Fast dynamic programming for elastic registration of curves. In: Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 1066–1073. IEEE, Las Vegas, NV, USA (2016)

Beyhaghi, P., Alimo, R., Bewley, T.: A derivative-free optimization algorithm for the efficient minimization of functions obtained via statistical averaging. Comput. Optim. Appl. 71, 1–31 (2020)

Biasotti, S., Marini, S., Spagnuolo, M., Falcidieno, B.: Sub-part correspondence by structural descriptors of 3D shapes. Comput.-Aid. Des. 38, 1002–1019 (2006)

Botev, Z., Grotowski, J., Kroese, D.: Kernel density estimation via diffusion. Ann. Stat. 38, 2916–2957 (2010)

Bouix, S., Pruessner, J.C., Collins, D.L., Siddiqi, K.: Hippocampal shape analysis using medial surfaces. Neuroimage 25, 1077–1089 (2005)

Bruni, C., Bruni, R., De Santis, A., Iacoviello, D., Koch, G.: Global optimal image reconstruction from blurred noisy data by a Bayesian approach. J. Optim. Theory Appl. 115, 67–96 (2002)

Cencov, N.: Evaluation of an unknown distribution density from observations. Doklady 3, 1559–1562 (1962)

Collignon, A.M.F., Vandermeulen, D., Suetens, P., Marchal, G.: Surface-based registration of 3D medical images. In: Medical Imaging 1993: Image Processing, pp. 32–42. International Society for Optics and Photonics, SPIE (1993)

Croquet, B., Christiaens, D., Weinberg, S.M., Bronstein, M., Vandermeulen, D., Claes, P.: Unsupervised diffeomorphic surface registration and non-linear modelling. In: Medical Image Computing and Computer Assisted Intervention–MICCAI, pp. 118–128. Springer International Publishing, Strasbourg, France (2021)

Davies, R.H., Twining, C.J., Cootes, T.F., Taylor, C.J.: Building 3-D statistical shape models by direct optimization. IEEE Trans. Med. Imaging 29, 961–981 (2010)

De Lara, L., González-Sanz, A., Loubes, J.M.: Diffeomorphic registration using Sinkhorn divergences. SIAM J. Imag. Sci. 16, 250–279 (2023)

Dogan, G., Bernal, J., Hagwood, C.R.: A fast algorithm for elastic shape distances between closed planar curves. In: Conference on Computer Vision and Pattern Recognition CVPR, pp. 4222–4230. IEEE Computer Society, Boston, MA, USA (2015)

Feydy, J., Charlier, B., Vialard, F.X., Peyré, G.: Optimal transport for diffeomorphic registration. In: Medical Image Computing and Computer Assisted Intervention–MICCAI, pp. 291–299. Springer International Publishing, Quebec City, QC, Canada (2017)

Fradi, A., Samir, C., Braga, J., Joshi, S.H., Loubes, J.M.: Nonparametric Bayesian regression and classification on manifolds, with applications to 3D cochlear shapes. IEEE Trans. Image Process. 31, 2598–2607 (2022)

Garnett, R.: Bayesian Optimization. Cambridge University Press, Cambridge (2023)

Gilks, W.R., Wild, P.: Adaptive rejection sampling for Gibbs sampling. J. Royal Stat. Soc. 41, 337–348 (1992)

Gomez, J.: Stochastic global optimization algorithms: a systematic formal approach. Inf. Sci. 472, 53–76 (2019)

Gorczowski, K., Styner, M., Jeong, J.Y., Marron, J., Piven, J., Hazlett, H.C., Pizer, S.M., Gerig, G.: Multi-object analysis of volume, pose, and shape using statistical discrimination. IEEE Trans. Pattern Anal. Mach. Intell. 32, 652–661 (2009)

Han, A., Mishra, B., Jawanpuria, P., Gao, J.: Riemannian accelerated gradient methods via extrapolation. In: Proceedings of The 26th International Conference on Artificial Intelligence and Statistics, Proceedings of Machine Learning Research, pp. 1554–1585. PMLR (2023)

Hernández-Lobato, J.M., Gelbart, M., Adams, R., Hoffman, M., Ghahramani, Z.: A general framework for constrained Bayesian optimization using information-based search. J. Mach. Learn. Res. 17, 1–53 (2016)

Holbrook, A., Lan, S., Streets, J., Shahbaba, B.: Nonparametric Fisher geometry with application to density estimation. In: Proceedings of the 36th Conference on Uncertainty in Artificial Intelligence (UAI), Proceedings of Machine Learning Research, vol. 124, pp. 101–110. PMLR (2020)

Huang, Q.X., Adams, B., Wicke, M., Guibas, L.: Nonrigid registration under isometric deformations. Eurograph. Symp. Geomet. Process. 8, 1449–1457 (2008)

Huang, W., Wei, K.: Riemannian proximal gradient methods. Math. Program. 194, 371–413 (2022)

Huber, D., Kapuria, A., Donamukkala, R., Hebert, M.: Parts-based 3D object classification. In: Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 82–89. IEEE Computer Society, Washington, D.C., USA (2004)

Jermyn, I.H., Kurtek, S., Klassen, E., Srivastava, A.: Elastic shape matching of parameterized surfaces using square root normal fields. In: ECCV, Lecture Notes in Computer Science, pp. 804–817. Springer, Heidelberg (2012)

Kilian, M., Mitra, N.J., Pottmann, H.: Geometric modeling in shape space. ACM Trans. Graph. 26, 1–8 (2007)

Kurtek, S., Klassen, E., Ding, Z., Avison, M.J., Srivastava, A.: Parameterization-invariant shape statistics and probabilistic classification of anatomical surfaces. In: Proceedings of the 22nd International Conference on Information Processing in Medical Imaging, pp. 147–158. Springer-Verlag, Berlin, Heidelberg (2011)

Lan, S., Zhou, B., Shahbaba, B.: Spherical Hamiltonian Monte Carlo for constrained target distributions. In: Proceedings of the 31st International Conference on Machine Learning, pp. 629–637. PMLR, Beijing, China (2014)

Lei, N., Gu, X.: FFT-OT: A fast algorithm for optimal transportation. In: International Conference on Computer Vision (ICCV), pp. 6260–6269. IEEE, Montreal, QC, Canada (2021)

Micheli, M., Michor, P.W., Mumford, D.: Sobolev metrics on diffeomorphism groups and the derived geometry of spaces of submanifolds. Izvestiya: Math. 77, 541–570 (2013)

Niroomand, M.P., Dicks, L., Pyzer-Knapp, E.O., Wales, D.J.: Physics inspired approaches to understanding Gaussian processes. CoRR abs/2305.10748 (2023)

Ovsjanikov, M., Mérigot, Q., Mémoli, F., Guibas, L.: One point isometric matching with the heat kernel. Comput. Graph. Forum 29, 1555–1564 (2010)

Pauly, M., Mitra, N.J., Giesen, J., Gross, M., Guibas, L.J.: Example-based 3D scan completion. In: Proceedings of the Third Eurographics Symposium on Geometry Processing, SGP 05, Eurographics Association, Vienna, Austria (2005)

Pennec, X.: Intrinsic statistics on Riemannian manifolds: basic tools for geometric measurements. J. Math. Imag. Vis. 25, 127–154 (2006)

Bergström, P.: Reliable updates of the transformation in the iterative closest point algorithm. Comput. Optim. Appl. 63, 543–557 (2016)

Bergström, P., Edlund, O.: Robust registration of point sets using iteratively reweighted least squares. Comput. Optim. Appl. 58, 543–561 (2014)

Raposo, C., Barreto, J.P.: 3D registration of curves and surfaces using local differential information. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9300–9308. IEEE, Salt Lake City (2018)

Sergeyev, Y.D., Mukhametzhanov, M.S., Kvasov, D.E., Lera, D.: Derivative-free local tuning and local improvement techniques embedded in the univariate global optimization. J. Optim. Theory Appl. 171, 186–208 (2016)

Srivastava, A., Klassen, E.: Functional and shape data analysis. Springer, New York (2016)

Srivastava, A., Klassen, E., Joshi, S.H., Jermyn, I.H.: Shape analysis of elastic curves in Euclidean spaces. IEEE Trans. Pattern Anal. Mach. Intell. 33, 1415–1428 (2011)

Žilinskas, A., Calvin, J.: Bi-objective decision making in global optimization based on statistical models. J. Global Optim. 74, 599–609 (2019)

Wang, Y., Gupta, M., Zhang, S., Wang, S., Gu, X., Samaras, D., Huang, P.: High resolution tracking of non-rigid motion of densely sampled 3D data using harmonic maps. Int. J. Comput. Vis. 76, 283–300 (2008)

Yang, X., Deng, C., Zheng, F., Yan, J., Liu, W.: Deep spectral clustering using dual autoencoder network. arXiv preprint arXiv:1904.13113 (2019)

Zheng, X., Wen, C., Lei, N., Ma, M., Gu, X.: Surface registration via foliation. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 938–947. IEEE, Venice, Italy (2017)

Zhigljavsky, A., Žilinskas, A.: Selection of a covariance function for a Gaussian random field aimed for modeling global optimization problems. Optim. Lett. 13, 249–259 (2019)

Additional information

Communicated by Panos M. Pardalos.

Appendices

Appendix

More results

Proofs

Proof of Theorem 3.1

Let \(\gamma \in \varGamma \) be defined as follows

where \(\gamma _{1}(\xi )=\xi _{1}\) and \(\gamma _{2}(\lambda , \xi _{2})=\alpha ^{\lambda }(\xi _{2})\) for \(\lambda \in [0,1]\). Hence, the determinant of the Jacobian matrix of \(\gamma \) is

Now let \(q^{\lambda }(\xi _{2})\) and \(p^{\lambda }(\xi _{2})\) denote \(Q_{1}(\lambda , \xi _{2})\) and \(Q_{2}(\lambda , \xi _{2})\) respectively. We have

which completes the proof for \(h^{\lambda }\). The proof for \(k^{\lambda }\) is analogous. \(\square \)
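The determinant step can be written out explicitly under an assumption that is implied by the notation but not stated in this excerpt: that \(\xi =(\xi _1,\xi _2)\) and the first coordinate plays the role of \(\lambda \), so that \(\gamma (\xi )=(\xi _1,\alpha ^{\xi _1}(\xi _2))\). The Jacobian matrix is then lower triangular, so only its diagonal entries contribute:

$$\begin{aligned} J_{\gamma }(\xi )=\begin{pmatrix} 1 & 0\\ \partial _{\lambda }\alpha ^{\lambda }(\xi _{2}) & \partial _{\xi _{2}}\alpha ^{\lambda }(\xi _{2}) \end{pmatrix}\Bigg |_{\lambda =\xi _{1}}, \qquad \det J_{\gamma }(\xi )=\partial _{\xi _{2}}\alpha ^{\lambda }(\xi _{2})\big |_{\lambda =\xi _{1}}. \end{aligned}$$

In particular, the determinant is positive wherever each 1D reparametrization \(\alpha ^{\lambda }\) has a positive derivative.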

Proof of Theorem 3.2

Substituting \(\gamma \) with its truncated version \(\gamma _{m}\) obtained from the K–L expansion of \(\psi _j\), we can rewrite (4) as

If \(B \in \mathcal {S}^{m^2-1}\), then \(\alpha ^{\lambda }_{2,m}\) is a 1D reparametrization, and solving (A1) over this sphere gives the optimal minimizer \(\hat{B}\); see [15] for further details on solving the optimization problem (A1). The same argument applied to (5) gives the optimal minimizer \(\hat{A}\). \(\square \)
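Why constraining the coefficients to a sphere yields a valid reparametrization can be illustrated in one dimension. The sketch below rests on two assumptions that are not taken from the paper: an orthonormal cosine basis of \(\mathbb {L}^2([0,1])\) stands in for the K–L eigenfunctions, and the warping is built from the square-root representation \(\psi =\sqrt{\dot{\gamma }}\), i.e. \(\gamma (t)=\int _0^t \psi (s)^2\,ds\). By Parseval's identity, a unit-norm coefficient vector gives a unit-norm \(\psi \), hence \(\gamma (1)=1\), and \(\psi ^2\ge 0\) makes \(\gamma \) nondecreasing. The function names are hypothetical.

```python
import numpy as np

def cosine_basis(m, t):
    """Hypothetical orthonormal basis of L2([0,1]): phi_0 = 1, phi_k = sqrt(2) cos(k pi t)."""
    k = np.arange(m)[:, None]
    phi = np.sqrt(2.0) * np.cos(np.pi * k * t[None, :])
    phi[0] = 1.0                              # constant function, unit L2 norm on [0, 1]
    return phi                                # shape (m, N)

def warp_from_coefficients(b, t):
    """Map coefficients b on the unit sphere S^{m-1} to a warping gamma on the grid t.

    psi = sum_k b_k phi_k has ||psi||_{L2} = ||b|| = 1 (Parseval), so
    gamma(t) = int_0^t psi(s)^2 ds has gamma(0) = 0, gamma(1) = 1 and is nondecreasing.
    """
    psi = b @ cosine_basis(len(b), t)
    y = psi ** 2
    increments = 0.5 * (y[1:] + y[:-1]) * np.diff(t)   # cumulative trapezoid rule
    return np.concatenate([[0.0], np.cumsum(increments)])

rng = np.random.default_rng(0)
b = rng.standard_normal(6)
b /= np.linalg.norm(b)                        # project the coefficients onto S^5
t = np.linspace(0.0, 1.0, 4001)
gamma = warp_from_coefficients(b, t)
print(gamma[0], gamma[-1], np.all(np.diff(gamma) >= 0))
```

Without the normalization of `b`, the endpoint \(\gamma (1)\) equals \(\Vert b\Vert ^2\) instead of 1, so the sphere constraint is exactly what keeps the endpoints fixed.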

Proof of Theorem 4.1

From (9), the complete log-likelihood term is

From (10), we can write the constrained log-prior as

under the constraint that \(A^1,\dots , A^K, B^1,\dots , B^K\) belong to \(\mathcal {S}^{m^2-1}\). The desired result (11) follows by substituting (A2) and (A3) into the log-posterior. \(\square \)
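Because the parameters \(A^k, B^k\) live on unit spheres, a sampler that moves along the sphere is a natural fit. The sketch below is a minimal HMC on \(\mathcal {S}^{d-1}\) with a geodesic splitting integrator (a half kick with the tangent-projected gradient, the exact great-circle flow, another half kick), in the spirit of the spherical HMC of Lan et al. listed in the references; it is not the authors' implementation. A von Mises–Fisher density \(\pi (x)\propto \exp (\kappa \mu ^{\top }x)\) stands in for the posterior (11), which is not reproduced here, and `spherical_hmc` with its parameters is hypothetical.

```python
import numpy as np

def tangent(x, v):
    """Project v onto the tangent space of the unit sphere at x (assumes ||x|| = 1)."""
    return v - (x @ v) * x

def spherical_hmc(log_target, grad_log_target, x0, n_samples, eps=0.1, n_leap=10, seed=0):
    """Minimal HMC on the unit sphere S^{d-1} with a geodesic splitting integrator.

    Each leapfrog step: half kick with the tangent-projected gradient of log_target,
    exact great-circle flow of (position, velocity), second half kick.
    The Metropolis test uses H(x, v) = -log_target(x) + |v|^2 / 2.
    """
    rng = np.random.default_rng(seed)
    x = x0 / np.linalg.norm(x0)
    samples, accepted = [], 0
    for _ in range(n_samples):
        v = tangent(x, rng.standard_normal(x.size))          # fresh tangent velocity
        h_old = -log_target(x) + 0.5 * v @ v
        x_new, v_new = x.copy(), v.copy()
        for _ in range(n_leap):
            v_new = v_new + 0.5 * eps * tangent(x_new, grad_log_target(x_new))
            speed = np.linalg.norm(v_new)
            if speed > 0.0:                                     # geodesic flow for time eps
                c, s = np.cos(speed * eps), np.sin(speed * eps)
                x_new, v_new = c * x_new + (s / speed) * v_new, -speed * s * x_new + c * v_new
            x_new = x_new / np.linalg.norm(x_new)               # remove round-off drift
            v_new = tangent(x_new, v_new)
            v_new = v_new + 0.5 * eps * tangent(x_new, grad_log_target(x_new))
        h_new = -log_target(x_new) + 0.5 * v_new @ v_new
        if np.log(rng.uniform()) < h_old - h_new:               # Metropolis correction
            x, accepted = x_new, accepted + 1
        samples.append(x)
    return np.array(samples), accepted / n_samples

# Toy target: von Mises-Fisher density on S^2 with mean direction mu and concentration kappa.
kappa, mu = 10.0, np.array([1.0, 0.0, 0.0])
samples, acc_rate = spherical_hmc(lambda x: kappa * (mu @ x), lambda x: kappa * mu,
                                  x0=np.array([0.0, 0.0, 1.0]), n_samples=2000)
print(acc_rate, np.mean(samples[200:] @ mu))  # mean should be near coth(10) - 1/10, about 0.9
```

The great-circle step keeps every proposal on the sphere, so no rejection or reprojection is needed to enforce the constraint; the explicit renormalization only removes floating-point drift.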

Optimal Reparametrization Between Two Distinct Curves

Let \(I=[0,1]\) be the parametrization interval for open curves, and let \(\mathbb {L}^2(I, \mathbb {R}^{n})\) be the set of square-integrable functions from \(I\) to \(\mathbb {R}^{n}\). Let \(\beta : I \rightarrow \mathbb {R}^{n}\) denote a parametrized curve in \(\mathbb {L}^2(I, \mathbb {R}^{n})\). We restrict to curves \(\beta \) satisfying: (i) \(\beta \) is absolutely continuous, and (ii) \(\dot{\beta } \in \mathbb {L}^2(I, \mathbb {R}^{n})\). Recall that \(\beta \) is absolutely continuous if and only if \(\dot{\beta }(t)\) exists for almost every \(t \in I\), \(\dot{\beta }\) is integrable, and \(\beta (t) =\beta (0)+\int _{0}^{t} \dot{\beta }(s)\, ds\). In [41], Srivastava et al. proposed the Square Root Velocity Function (SRVF) for comparing absolutely continuous curves in \(\mathbb {R}^{n}\). The SRVF representation \(q: I \rightarrow \mathbb {R}^{n}\) of \(\beta \) is defined as follows

$$\begin{aligned} q(t)=\begin{cases} \dot{\beta }(t)/\sqrt{||\dot{\beta }(t)||_{2}} & \text {if } \dot{\beta }(t)\ne 0,\\ 0 & \text {otherwise}, \end{cases} \end{aligned}$$

where \(||\cdot ||_{2}\) denotes the Euclidean 2-norm in \(\mathbb {R}^{n}\). Conversely, given \(q \in \mathbb {L}^2(I, \mathbb {R}^n)\), the original curve can be recovered as \(\beta (t) = \beta _{0} + \int _{0}^{t} q(s)||q(s)||_2\, ds\), where \(\beta _{0}\in \mathbb {R}^{n}\) is the starting point of \(\beta \). Let \(\bar{M}_I\) denote the set of all SRVFs. For open curves, \(\bar{M}_I= \mathbb {L}^2(I,\mathbb {R}^n)\). The reparameterization group for open curves in \(\mathbb {R}^n\) is

$$\begin{aligned} \varGamma _{I}=\big \lbrace \gamma : I \rightarrow I \;\big |\; \gamma (0)=0,\ \gamma (1)=1,\ \gamma \text { is a diffeomorphism}\big \rbrace , \end{aligned}$$

and its action on a curve, \((\beta , \gamma )= \beta \circ \gamma \), corresponds to the action \((q,\gamma )= \sqrt{\dot{\gamma }}\,(q \circ \gamma )\) on its SRVF. The shape space of open curves is the quotient \(\mathcal {M}_{I}= \bar{M}_I/\varGamma _I = \lbrace [q] \,|\, q \in \bar{M}_I \rbrace \), where \([q]= \lbrace (q,\gamma ) \,|\, \gamma \in \varGamma _{I} \rbrace \) is the orbit of \(q\) under \(\varGamma _I\). It has been shown in [41] that this group action is isometric with respect to the \(\mathbb {L}^2\) metric: if two curves are reparameterized by the same function \(\gamma \), the \(\mathbb {L}^2\) distance between their SRVFs, i.e. the elastic distance between the curves, does not change. Hence, the \(\mathbb {L}^2\) metric induces a well-defined distance on \(\mathcal {M}_{I}\). Thus, the optimal reparametrization between two distinct curves with SRVFs \(q_1\) and \(q_2\) minimizes the cost function

$$\begin{aligned} \hat{\gamma }=\mathop {\mathrm {arg\,min}}_{\gamma \in \varGamma _{I}} \big |\big | q_1-\sqrt{\dot{\gamma }}\,(q_2\circ \gamma )\big |\big |^{2}_{\mathbb {L}^2}. \end{aligned}$$
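A discretized version of these objects fits in a few lines of NumPy. The sketch assumes curves sampled on a uniform grid of \(I=[0,1]\), derivatives by finite differences, \(q\circ \gamma \) by linear interpolation, and \(\mathbb {L}^2\) norms by the trapezoid rule. It checks three things: the inverse formula recovers \(\beta \); warping both SRVFs by the same \(\gamma \) leaves their \(\mathbb {L}^2\) distance unchanged (the isometry from [41]); and minimizing the cost over a hypothetical one-parameter family \(\gamma _c(t)=(e^{ct}-1)/(e^{c}-1)\) recovers a known warp. All function names are illustrative.

```python
import numpy as np

def trapezoid(y, t):
    """Trapezoid-rule integral of samples y over the grid t."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

def l2_dist(f, g, t):
    """L2 distance between two sampled R^n-valued functions of shape (N, n)."""
    return np.sqrt(trapezoid(np.sum((f - g) ** 2, axis=1), t))

def srvf(beta, t):
    """SRVF q = beta_dot / sqrt(||beta_dot||_2); q = 0 where beta_dot vanishes."""
    beta_dot = np.gradient(beta, t, axis=0)          # finite-difference derivative
    speed = np.linalg.norm(beta_dot, axis=1)
    q = np.zeros_like(beta_dot)
    nz = speed > 1e-12
    q[nz] = beta_dot[nz] / np.sqrt(speed[nz])[:, None]
    return q

def curve_from_srvf(q, t, beta0):
    """beta(t) = beta0 + int_0^t q(s) ||q(s)||_2 ds, by a cumulative trapezoid rule."""
    y = q * np.linalg.norm(q, axis=1)[:, None]
    steps = 0.5 * (y[1:] + y[:-1]) * np.diff(t)[:, None]
    return beta0 + np.vstack([np.zeros((1, q.shape[1])), np.cumsum(steps, axis=0)])

def warp(c, t):
    """Hypothetical family gamma_c(t) = (e^{ct} - 1)/(e^c - 1) in Gamma_I and its derivative."""
    if c == 0.0:
        return t.copy(), np.ones_like(t)             # c = 0 is the identity
    d = np.expm1(c)
    return np.expm1(c * t) / d, c * np.exp(c * t) / d

def act(q, gamma, gamma_dot, t):
    """Group action (q, gamma) = sqrt(gamma_dot) * (q o gamma), q o gamma by linear interpolation."""
    q_of_gamma = np.stack([np.interp(gamma, t, q[:, i]) for i in range(q.shape[1])], axis=1)
    return np.sqrt(gamma_dot)[:, None] * q_of_gamma

t = np.linspace(0.0, 1.0, 4001)
beta1 = np.stack([np.cos(np.pi * t), np.sin(np.pi * t), t ** 2], axis=1)
beta2 = np.stack([t, np.sin(2 * np.pi * t), 0.5 * t], axis=1)
q1, q2 = srvf(beta1, t), srvf(beta2, t)
recon_err = np.max(np.abs(curve_from_srvf(q1, t, beta1[0]) - beta1))

# Isometry: warping both SRVFs by the same gamma keeps their L2 distance.
g, g_dot = warp(2.0, t)
d_before = l2_dist(q1, q2, t)
d_after = l2_dist(act(q1, g, g_dot, t), act(q2, g, g_dot, t), t)

# Toy registration: q_target is q2 warped by gamma_{1.5}; a grid search over c
# minimizes ||q_target - (q2, gamma_c)||^2 and should land on c = 1.5.
q_target = act(q2, *warp(1.5, t), t)
cs = np.linspace(-3.0, 3.0, 61)
costs = [l2_dist(q_target, act(q2, *warp(c, t), t), t) ** 2 for c in cs]
c_hat = cs[int(np.argmin(costs))]
print(recon_err, d_before, d_after, c_hat)
```

The grid search over \(c\) is only a toy; practical solvers minimize the cost over the whole group \(\varGamma _I\), for instance by dynamic programming.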

The Hilbert Sphere

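We list some analytical expressions that are useful for analyzing elements of the Hilbert sphere \(\mathcal {H}\), i.e. the unit sphere of \(\mathbb {L}^2\), whose tangent space at \(\psi \) is \(T_{\psi }(\mathcal {H})=\lbrace g \in \mathbb {L}^2 : \big <g,\psi \big >_{\mathbb {L}^2}=0\rbrace \).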

- Geodesic path. Given \(\psi \in \mathcal {H}\) and a tangent vector \(g \in T_{\psi }(\mathcal {H})\), the geodesic path starting at \(\psi \) with initial velocity \(g\) is parameterized by \(t\) as

$$\begin{aligned} \psi (t)=\cos \big (t|| g||_{\mathbb {L}^2} \big ) \psi + \sin \big (t|| g||_{\mathbb {L}^2}\big ) \frac{g}{|| g||_{\mathbb {L}^2}}. \end{aligned}$$

- Geodesic distance. The arc length of the geodesic path in \(\mathcal {H}\) between two functions \(\psi _1\) and \(\psi _2\), called the geodesic distance, is given by

$$\begin{aligned} \text {dist}\big (\psi _1,\psi _2\big )_{\mathcal {H}}=\arccos \big (\big <\psi _1,\psi _2\big >_{\mathbb {L}^2}\big ). \end{aligned}$$

- Exponential map. For \(\psi \in \mathcal {H}\) and \(g \in T_{\psi }(\mathcal {H})\), the exponential map \(\exp _{\psi }: T_{\psi }(\mathcal {H}) \rightarrow \mathcal {H}\) is given by

$$\begin{aligned} \exp _{\psi }(g)=\cos \big (|| g||_{\mathbb {L}^2}\big ) \psi + \sin \big (|| g||_{\mathbb {L}^2}\big ) \frac{g}{|| g||_{\mathbb {L}^2}}. \end{aligned}$$

Restricted to tangent vectors with \(|| g||_{\mathbb {L}^2} < \pi \), the exponential map is a bijection onto \(\mathcal {H}\setminus \lbrace -\psi \rbrace \).

- Log map. For \(\psi _1,\psi _2 \in \mathcal {H}\) with \(\psi _2 \ne -\psi _1\), the inverse exponential (log) map of \(\psi _2\) is the tangent vector \(\zeta \in T_{\psi _1}(\mathcal {H})\) such that \(\exp _{\psi _1}(\zeta )=\psi _2\). We write \(\zeta =\log _{\psi _1}(\psi _2)\), where (with \(\zeta =0\) when \(\psi _2=\psi _1\))

$$\begin{aligned} \zeta =\frac{\alpha }{||\alpha ||_{\mathbb {L}^2}}\, \text {dist}\big (\psi _1,\psi _2\big )_{\mathcal {H}} \quad \text {and} \quad \alpha =\psi _2-\big <\psi _2,\psi _1\big >_{\mathbb {L}^2}\, \psi _1. \end{aligned}$$
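The formulas above translate directly into code once functions are sampled on a grid. This is a hypothetical sketch: it assumes functions on \([0,1]\) sampled at uniform points and approximates the \(\mathbb {L}^2\) inner product by the trapezoid rule. It checks that the log map returns a tangent vector whose norm equals the geodesic distance, and that the exponential map inverts it.

```python
import numpy as np

def inner(f, g, t):
    """L2 inner product <f, g> on the grid t (trapezoid rule)."""
    y = f * g
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

def norm(f, t):
    return np.sqrt(inner(f, f, t))

def dist(psi1, psi2, t):
    """Geodesic distance arccos(<psi1, psi2>); clip guards against round-off outside [-1, 1]."""
    return float(np.arccos(np.clip(inner(psi1, psi2, t), -1.0, 1.0)))

def exp_map(psi, g, t):
    """exp_psi(g) = cos(||g||) psi + sin(||g||) g / ||g||, for g tangent at psi."""
    ng = norm(g, t)
    if ng < 1e-12:
        return psi.copy()                        # exp_psi(0) = psi
    return np.cos(ng) * psi + np.sin(ng) * g / ng

def log_map(psi1, psi2, t):
    """log_{psi1}(psi2) = dist(psi1, psi2) * alpha / ||alpha||, alpha = psi2 - <psi2, psi1> psi1."""
    alpha = psi2 - inner(psi2, psi1, t) * psi1
    na = norm(alpha, t)
    if na < 1e-12:                               # psi2 == psi1 (log undefined at -psi1)
        return np.zeros_like(psi1)
    return dist(psi1, psi2, t) * alpha / na

t = np.linspace(0.0, 1.0, 2001)
psi1 = np.ones_like(t)                           # constant 1 has unit L2 norm on [0, 1]
psi2 = 1.0 + 0.5 * np.cos(2 * np.pi * t)
psi2 /= norm(psi2, t)                            # project onto the unit sphere
zeta = log_map(psi1, psi2, t)
print(dist(psi1, psi2, t), norm(zeta, t))        # the two values agree
print(np.max(np.abs(exp_map(psi1, zeta, t) - psi2)))  # round-off level
```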

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fradi, A., Samir, C. & Adouani, I. A New Bayesian Approach to Global Optimization on Parametrized Surfaces in \(\mathbb {R}^{3}\). J Optim Theory Appl 202, 1077–1100 (2024). https://doi.org/10.1007/s10957-024-02473-8

DOI: https://doi.org/10.1007/s10957-024-02473-8

Keywords

- Riemannian optimization

- Bayesian optimization

- Spherical HMC

- Parametrized surfaces

- Spherical Gaussian processes