Abstract

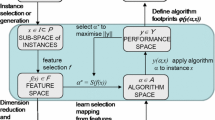

Machine Learning studies often involve a series of computational experiments in which the predictive performance of multiple models is compared across one or more datasets. The results are usually summarized through average statistics, either in numeric tables or simple plots. Such approaches fail to reveal interesting subtleties about algorithmic performance, including which observations an algorithm may find easy or hard to classify, and which observations within a dataset may present unique challenges. Recently, a methodology known as Instance Space Analysis (ISA) was proposed for visualizing algorithm performance across different datasets. This methodology relates predictive performance to estimated instance hardness measures extracted from the datasets. However, that analysis considered an instance to be an entire classification dataset, and algorithm performance was reported for each dataset as an average error across all observations in the dataset. In this paper, we develop a more fine-grained analysis by adapting the ISA methodology. The adapted version of ISA allows the analysis of an individual classification dataset through a 2-D hardness embedding, which visualizes the data according to the difficulty level of its individual observations. This enables deeper analyses of the relationships between instance hardness and the predictive performance of classifiers. We also provide an open-access Python package named PyHard, which encapsulates the adapted ISA and provides an interactive visualization interface. We illustrate through case studies how our tool can provide insights about data quality and algorithm performance in the presence of challenges such as noisy and biased data.

Availability of data and material

The benchmark datasets and the outputs of their analysis are available in our open repository, under the folder ‘experiments’ (https://gitlab.com/ita-ml/pyhard). The feature names of the real-data COVID dataset were masked for anonymization.

Notes

Available in the Python rankaggregation package.

Since IH is the average likelihood of misclassification of an instance \({\mathbf {x}}_i\) by the multiple algorithms in the pool \({\mathcal {A}}\), the threshold on IH cannot be based on Proposition 1, which concerns the log-loss of a single prediction.

The benchmark datasets and the outputs of their analysis are available at https://gitlab.com/ita-ml/pyhard. The feature names of the real-data COVID dataset in the repository were masked for anonymization.

Online tool for Instance Space Analysis, available at https://www.matilda.unimelb.edu.au.
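The note on IH above describes it as an average over the algorithm pool. A minimal Python sketch of that averaging follows, assuming (as in the instance hardness definition of Smith, Martinez and Giraud-Carrier, 2014) that the likelihood of misclassification of \({\mathbf {x}}_i\) by an algorithm is 1 minus the probability it assigns to the true class. The helper `instance_hardness` and its input layout are hypothetical and are not PyHard's API.

```python
from typing import Sequence


def instance_hardness(true_class_probs: Sequence[float]) -> float:
    """Hypothetical helper: IH(x_i) = 1 - (1/|A|) * sum_a p_a(y_i | x_i).

    true_class_probs[a] is the probability that algorithm a in the pool A
    assigns to the true class y_i of instance x_i (e.g. from cross-validation).
    """
    if not true_class_probs:
        raise ValueError("the algorithm pool must not be empty")
    return 1.0 - sum(true_class_probs) / len(true_class_probs)


# Pool of three algorithms: two give the true class high probability, one does not.
ih = instance_hardness([0.9, 0.8, 0.1])
print(round(ih, 2))  # → 0.4
```

Because the probabilities are averaged over the pool, this single value of 0.4 mixes two confident predictions of the true class with one that gives it only 0.1, so it does not describe any single prediction, which is why the per-prediction bounds of Proposition 1 do not transfer directly to IH.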

Acknowledgements

The authors are thankful to the São José dos Campos municipal health secretariat and IPPLAN (Instituto de Pesquisa e Planejamento) for providing data on COVID cases.

Funding

This work was partially supported by the Brazilian research agencies Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001 (main grant 88887.507037/2020-00), CNPq (grant 307892/2020-4) and FAPESP (grant 2021/06870-3) and by the Australian Research Council (grant FL140100012).

Author information

Contributions

P. Y. A. Paiva implemented PyHard and PyISpace, along with all the parts of the framework dedicated to the ISA analysis of a single dataset, and ran the experiments with the label noise datasets. C. C. Moreno ran and analyzed the experiments on the COMPAS dataset. K. Smith-Miles contributed to the organization of the paper and to the validation of the experiments, and is the proponent of the original ISA framework. M. G. Valeriano built the COVID dataset, and ran and performed its analysis. A. C. Lorena proposed framing the ISA for the analysis of a single dataset and supervised all the work. All authors contributed to writing and organizing the paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Code availability

The source code of the cited Python packages used in the experiments section is available at the public repositories PyHard (https://gitlab.com/ita-ml/pyhard) and PyISpace (https://gitlab.com/ita-ml/pyispace).

Ethical approval

The anonymized COVID dataset was obtained through a partnership between the project “Data science for fighting outbreaks, epidemics and pandemics in hospitals”, coordinated by researcher A. C. Lorena, and the São José dos Campos health department.

Consent to participate

Not Applicable.

Consent for publication

Not Applicable.

Additional information

Editor: Salvador Garcia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Proof of proposition

Proposition 1

(cross-entropy bounds). For any classification problem with \(C \ge 2\) classes, there is a lower bound \(L_{\mathrm {lower}}\) and an upper bound \(L_{\mathrm {upper}}\) for the cross-entropy loss (also known as log-loss) such that: if \(\mathrm {logloss}({\mathbf {x}}_i) < L_{\mathrm {lower}}\), the prediction was correct; if \(\mathrm {logloss}({\mathbf {x}}_i) > L_{\mathrm {upper}}\), the prediction was incorrect; and if \(L_{\mathrm {lower}} \le \mathrm {logloss}({\mathbf {x}}_i) \le L_{\mathrm {upper}}\), the prediction can be either correct or incorrect, where \(\mathrm {logloss}({\mathbf {x}}_i)\) is the log-loss of instance \({\mathbf {x}}_i\). Specifically, these bounds can be set as \(L_{\mathrm {lower}}=-\log \big (\frac{1}{2}\big ) = \log 2\) and \(L_{\mathrm {upper}}=-\log \big (\frac{1}{C}\big ) = \log C\).
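The bounds amount to a three-way rule on the log-loss of a single prediction. The Python sketch below is a minimal illustration of that rule, not code from PyHard: the names `logloss` and `verdict` are hypothetical, the true class is given as a 0-based index, and natural logarithms are assumed (any base works as long as the bounds use the same one).

```python
import math
from typing import Sequence


def logloss(probs: Sequence[float], true_class: int) -> float:
    """Log-loss of one prediction: -log p_c, where c is the (0-based) true class."""
    p_true = probs[true_class]
    return math.inf if p_true == 0.0 else -math.log(p_true)


def verdict(loss: float, num_classes: int) -> str:
    """Read a prediction's outcome off its log-loss using the bounds of Proposition 1."""
    lower, upper = math.log(2), math.log(num_classes)  # L_lower, L_upper
    if loss < lower:
        return "correct"
    if loss > upper:
        return "incorrect"
    return "undetermined"  # L_lower <= loss <= L_upper: either outcome is possible


probs = [0.40, 0.55, 0.05]  # a three-class prediction; class 1 has the highest probability
for c in range(3):
    print(c, verdict(logloss(probs, c), num_classes=3))
# → 0 undetermined
# → 1 correct
# → 2 incorrect
```

When the true class is 0, the prediction is wrong (class 1 gets 0.55), yet its log-loss of about 0.916 lies between \(\log 2\) and \(\log 3\), so the rule can only report it as undetermined.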

Proof

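Given a multiclass setting with \(C\) classes, the output of a classifier for instance \({\mathbf {x}}_i\) is the predicted probability vector \([p_1, p_2, \dots , p_{C}]\), and the predicted class is \(\mathop {\hbox {arg max}}\limits _j p_j\). Let \(y_{j, c} :=I_{j=c}\) indicate the true class \(c\), i.e. \(y_{j,c} = 1\) if \(j = c\) and 0 otherwise. The log-loss of \({\mathbf {x}}_i\) is then

\[
{\mathrm {logloss}}({\mathbf {x}}_i) = -\sum _{j=1}^{C} y_{j,c} \log p_j = -\log p_c .
\]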

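We first prove that if the classifier correctly predicts the class of instance \({\mathbf {x}}_i\), then \({\mathrm {logloss}}({\mathbf {x}}_i) \le L_{\mathrm {upper}}\), with \(L_{\mathrm {upper}} = -\log (\frac{1}{C})\). Without loss of generality, suppose \(c=1\) is the correct class, since the classes can always be reordered so that any one of them becomes the first in the set. In that case, \(\mathop {\hbox {arg max}}\limits _j p_j = 1\), which implies that:

\[
p_1 \ge p_j, \quad j = 1, 2, \dots , C.
\]

Summing all those inequalities results in

\[
C\, p_1 \ge \sum _{j=1}^{C} p_j .
\]

On the other hand, \(\sum _j p_j = 1\). Therefore,

\[
p_1 \ge \frac{1}{C} \;\Rightarrow\; {\mathrm {logloss}}({\mathbf {x}}_i) = -\log p_1 \le -\log \Big (\frac{1}{C}\Big ) = L_{\mathrm {upper}} .
\]

Hence, if \({\mathrm {logloss}}({\mathbf {x}}_i) > L_{\mathrm {upper}}\), the prediction must be incorrect.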

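However, \({\mathrm {logloss}}({\mathbf {x}}_i) < L_{\mathrm {upper}}\) does not necessarily imply that the instance \({\mathbf {x}}_i\) was correctly classified. We show this by counterexample: take the predicted probability vector \(\big [\frac{1}{2} - \varepsilon , \frac{1}{2} + \varepsilon , 0, \dots , 0\big ]\) and let the true class be \(c=1\). For this vector, the classifier predicts class 2, since it has the highest probability. The log-loss value is

\[
{\mathrm {logloss}}({\mathbf {x}}_i) = -\log \Big (\frac{1}{2} - \varepsilon \Big ).
\]

If we choose \(0< \varepsilon < \frac{1}{2} - \frac{1}{C}\), which is always possible for \(C \ge 3\), then \(\frac{1}{2} - \varepsilon > \frac{1}{C}\) and

\[
\log 2 = -\log \frac{1}{2} < {\mathrm {logloss}}({\mathbf {x}}_i) = -\log \Big (\frac{1}{2} - \varepsilon \Big ) < -\log \frac{1}{C} = L_{\mathrm {upper}} ,
\]

so a misclassified instance can have a log-loss below \(L_{\mathrm {upper}}\) (and above \(\log 2\)).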
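To make the counterexample concrete, the short Python sketch below evaluates it for \(C = 4\) and \(\varepsilon = 0.1\), arbitrary illustrative values satisfying \(0 < \varepsilon < \frac{1}{2} - \frac{1}{C} = 0.25\). It assumes natural logarithms and 0-based indices, so class 1 in the text is index 0; the helper name `counterexample` is hypothetical.

```python
import math


def counterexample(num_classes: int, eps: float) -> tuple[list[float], float]:
    """Return the vector [1/2 - eps, 1/2 + eps, 0, ..., 0] and its log-loss
    when the true class is the first one (index 0)."""
    probs = [0.5 - eps, 0.5 + eps] + [0.0] * (num_classes - 2)
    return probs, -math.log(probs[0])


C, eps = 4, 0.1  # 0 < eps < 1/2 - 1/C = 0.25
probs, loss = counterexample(C, eps)
predicted = max(range(C), key=probs.__getitem__)  # index 1, i.e. class 2
print(predicted + 1, round(math.log(2), 3), round(loss, 3), round(math.log(C), 3))
# → 2 0.693 0.916 1.386
```

The prediction is wrong (class 2 instead of class 1), yet its log-loss of about 0.916 lies strictly between \(\log 2\) and \(\log 4\).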

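Specifically, for binary problems with only two classes, if \({\mathrm {logloss}}({\mathbf {x}}_i) < - \log \frac{1}{2}\), then

\[
-\log p_1 < -\log \frac{1}{2} \;\Rightarrow\; p_1 > \frac{1}{2} > 1 - p_1 = p_2 ,
\]

i.e. the instance is correctly classified (again taking \(c = 1\) as the true class). So, in the particular case of binary classification problems, \(L_{\mathrm {lower}} = L_{\mathrm {upper}}\). Thus,

\[
L_{\mathrm {lower}} = L_{\mathrm {upper}} = -\log \frac{1}{2} = \log 2 \qquad (C = 2).
\]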

To find \(L_{\mathrm {lower}}\), we first show that a multiclass problem can be reduced to a binary classification problem as far as the log-loss is concerned. With the true class \(c=1\), the vector \([p_1, p_2, \dots , p_C]\) is equivalent to \([p_1, p_2']\), with \(p_2' = \sum _{j=2}^{C} p_j\): in both cases, the log-loss value is \(-\log p_1\). We therefore conjecture that \(L_{\mathrm {lower}} = -\log \frac{1}{2}\) and prove it by contradiction.

Assume that \({\mathrm {logloss}}({\mathbf {x}}_i) < -\log \frac{1}{2}\), that the correct class is \(c=1\), and that \(\exists p_k : p_k > p_1\) (classification error). Then,

\[
-\log p_1 < -\log \frac{1}{2} \;\Rightarrow\; p_1 > \frac{1}{2} \;\Rightarrow\; p_k \le \sum _{j=2}^{C} p_j = 1 - p_1 < \frac{1}{2} < p_1 ,
\]

which contradicts \(p_k > p_1\). Therefore, \(L_{\mathrm {lower}} = -\log \frac{1}{2} = \log 2\). \(\square\)
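As an informal numerical sanity check of Proposition 1 (not a substitute for the proof above), the Python sketch below samples random probability vectors uniformly from the simplex, draws a random true class, and counts predictions whose outcome contradicts the bounds \(L_{\mathrm {lower}} = \log 2\) and \(L_{\mathrm {upper}} = \log C\). The sampling scheme, trial count, seed and the name `count_violations` are arbitrary illustrative choices.

```python
import math
import random


def count_violations(num_classes: int, trials: int = 20_000, seed: int = 0) -> int:
    """Count random predictions that contradict Proposition 1.

    A violation is a correct prediction with log-loss above L_upper = log C,
    or an incorrect prediction with log-loss below L_lower = log 2.
    """
    rng = random.Random(seed)
    lower, upper = math.log(2), math.log(num_classes)
    violations = 0
    for _ in range(trials):
        # Normalized Gamma(1, 1) draws give a Dirichlet(1, ..., 1) vector,
        # i.e. a uniform sample from the probability simplex.
        weights = [rng.gammavariate(1.0, 1.0) for _ in range(num_classes)]
        total = sum(weights)
        probs = [w / total for w in weights]
        true_class = rng.randrange(num_classes)
        p_true = probs[true_class]
        loss = math.inf if p_true == 0.0 else -math.log(p_true)
        predicted = max(range(num_classes), key=probs.__getitem__)
        correct = predicted == true_class
        if (correct and loss > upper) or (not correct and loss < lower):
            violations += 1
    return violations


for C in (2, 3, 5, 10):
    print(C, count_violations(C))
```

Every run should report zero violations. Random sampling cannot prove the bounds, but a nonzero count would point to an error in the statement or in its implementation.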

Appendix B Additional figures

Additional figures from the analysis of the COMPAS dataset are presented here. They show the Lasso selections of easy and hard instances (Fig. 14) and distributions of some other attributes besides race, namely number of priors (Fig. 15a), age (Fig. 15b) and sex (Fig. 15c). Median values are represented by vertical dashed lines. A summary of these results is presented and discussed in Sect. 4.2 using Table 2.

About this article

Cite this article

Paiva, P.Y.A., Moreno, C.C., Smith-Miles, K. et al. Relating instance hardness to classification performance in a dataset: a visual approach. Mach Learn 111, 3085–3123 (2022). https://doi.org/10.1007/s10994-022-06205-9

DOI: https://doi.org/10.1007/s10994-022-06205-9