Abstract

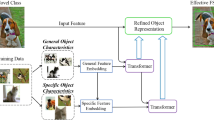

Traditional object detection methods rely on manually annotated data, which is costly and time-consuming to produce, particularly for objects that occur rarely or are neglected in existing datasets. When a model must generalize from the training datasets to the target datasets, limited annotations in some categories lead to false positive detections, and performance drops on unseen categories. In this paper, we find that these problems stem from the model’s overfitting to foreground objects during training and from insufficiently robust feature representations. To improve the generalization of deep learning networks, we propose a task-decoupled interactive embedding network. We decouple the sub-tasks of the detection pipeline into parallel convolution branches with independent gradient propagation, and generate anchor boxes from coarse to fine. We also introduce an embedding-interactive self-supervised decoder into the detector, so that weaker object representations are enhanced and representations of the same object are closely aggregated, providing multi-scale semantic information for detection. Our method achieves strong results on two visual tasks: few-shot object detection and open world object detection. It improves generalization on novel classes without hurting the detection of base classes, and it generalizes well to the detection of unknown categories. Our code is available at: https://github.com/hommelibrelm/DINet.

Code/Data availability

Our code is available at https://github.com/hommelibrelm/DINet.

Notes

N-way K-shot: N determines the number of novel classes and K denotes the number of annotated instances per novel class.
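
To make the N-way K-shot definition concrete, the following minimal sketch builds a support set by drawing K annotated instances for each of N novel classes. The annotation format `(image_id, class_name, box)`, the function name `sample_n_way_k_shot`, and the toy data are assumptions for illustration only; they are not taken from the paper or its repository.

```python
import random
from collections import defaultdict


def sample_n_way_k_shot(annotations, novel_classes, k, seed=0):
    """Draw K annotated instances for each of the N novel classes.

    annotations: list of (image_id, class_name, box) tuples (hypothetical format).
    Returns a dict mapping each novel class to its K sampled instances.
    """
    rng = random.Random(seed)

    # Group instances by class, keeping only the novel classes.
    by_class = defaultdict(list)
    for ann in annotations:
        if ann[1] in novel_classes:
            by_class[ann[1]].append(ann)

    support = {}
    for cls in novel_classes:
        instances = by_class[cls]
        if len(instances) < k:
            raise ValueError(
                f"class {cls!r} has only {len(instances)} instances, need {k}"
            )
        # Sample without replacement so the K shots are distinct instances.
        support[cls] = rng.sample(instances, k)
    return support


# Toy data: 4 classes with 5 instances each (values are made up).
toy = [
    (i, cls, (0, 0, 10, 10))
    for cls in ("bird", "bus", "cow", "dog")
    for i in range(5)
]

# 3-way 3-shot: N = 3 novel classes, K = 3 instances per class.
support = sample_n_way_k_shot(toy, novel_classes=["bird", "bus", "cow"], k=3)
```

Here N is simply `len(novel_classes)` and K is the per-class sample size, so the support set holds N × K annotated instances in total.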

To enable our detector to perform better on open world object detection, which is class-agnostic, we replace the classification branch with a localization quality box regression branch. This follows the approach of OLN-Box (Kim et al., 2022).
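
As a rough illustration of what a class-agnostic localization quality target can look like, the sketch below scores each proposal by its best IoU with any ground-truth box, ignoring class labels. Using IoU as the quality measure is an assumption here (the note does not say which measure the branch regresses), and the helper names `iou` and `quality_targets` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def quality_targets(proposals, gt_boxes):
    """Regression target per proposal: its best IoU with any ground-truth box.

    No class labels are involved, so the target is class-agnostic.
    A proposal gets 0.0 when there are no ground-truth boxes.
    """
    return [
        max((iou(p, g) for g in gt_boxes), default=0.0)
        for p in proposals
    ]


# Toy example: one proposal matches the ground truth exactly, one misses it.
targets = quality_targets(
    proposals=[(0, 0, 10, 10), (20, 20, 30, 30)],
    gt_boxes=[(0, 0, 10, 10)],
)
```

A branch trained against targets like these learns how well a box is localized rather than which category it belongs to, which is what allows it to score objects from categories never seen in training.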

References

Ba, J. L., Kiros, J. R., & Hinton, G. E. (2016). Layer normalization. arXiv:1607.06450

Deng, J., Dong, W., Socher, R., et al. (2009). Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248–255). IEEE.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., et al. (2020). An image is worth 16x16 words: Transformers for image recognition at scale. arXiv:2010.11929

Everingham, M., Eslami, S. A., Van Gool, L., et al. (2015). The pascal visual object classes challenge: A retrospective. International Journal of Computer Vision, 111, 98–136.

Everingham, M., Van Gool, L., Williams, C. K., et al. (2010). The pascal visual object classes (voc) challenge. International Journal of Computer Vision, 88, 303–338.

Fan, Z., Ma, Y., Li, Z., et al. (2021). Generalized few-shot object detection without forgetting. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4527–4536).

Guirguis, K., Hendawy, A., Eskandar, G., et al. (2022). Cfa: Constraint-based finetuning approach for generalized few-shot object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4039–4049).

Gupta, A., Narayan, S., Joseph, K., et al. (2022). Ow-detr: Open-world detection transformer. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9235–9244).

He, K., Chen, X., Xie, S., et al. (2021). Masked autoencoders are scalable vision learners. arXiv:2111.06377

He, K., Gkioxari, G., Dollár, P., et al. (2017). Mask r-cnn. In Proceedings of the IEEE international conference on computer vision (pp. 2961–2969).

Jiang, B., Luo, R., Mao, J., et al. (2018). Acquisition of localization confidence for accurate object detection. In Proceedings of the European conference on computer vision (ECCV) (pp. 784–799).

Joseph, K., Khan, S., Khan, F. S., et al. (2021). Towards open world object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5830–5840).

Kang, B., Liu, Z., Wang, X., et al. (2019). Few-shot object detection via feature reweighting. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 8420–8429).

Kim, D., Lin, T. Y., Angelova, A., et al. (2022). Learning open-world object proposals without learning to classify. IEEE Robotics and Automation Letters, 7(2), 5453–5460.

Li, Y., Mao, H., Girshick, R. B., et al. (2022). Exploring plain vision transformer backbones for object detection. arXiv:2203.16527

Lin, T. Y., Goyal, P., Girshick, R., et al. (2017). Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision (pp. 2980–2988).

Lin, T. Y., Maire, M., Belongie, S., et al. (2014). Microsoft coco: Common objects in context. In Computer vision–ECCV 2014: 13th European conference, Zurich, Switzerland, September 6–12, 2014, proceedings, Part V 13 (pp. 740–755). Springer.

Liu, Y., Sangineto, E., Bi, W., et al. (2021a). Efficient training of visual transformers with small datasets. In Conference on neural information processing systems (NeurIPS)

Liu, Z., Lin, Y., Cao, Y., et al. (2021b). Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision (ICCV)

Lu, Z., Xie, H., Liu, C., et al. (2022). Bridging the gap between vision transformers and convolutional neural networks on small datasets. In Oh, A. H., Agarwal, A., Belgrave, D., et al. (Eds.), Advances in neural information processing systems. https://openreview.net/forum?id=bfz-jhJ8wn

Ma, J., Niu, Y., Xu, J., et al. (2023). Digeo: Discriminative geometry-aware learning for generalized few-shot object detection. arXiv:2303.09674

Pinheiro, P. O., Collobert, R., & Dollár, P. (2015). Learning to segment object candidates. Advances in Neural Information Processing Systems, 28.

Pont-Tuset, J., Arbelaez, P., Barron, J. T., et al. (2016). Multiscale combinatorial grouping for image segmentation and object proposal generation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(1), 128–140.

Ren, S., He, K., Girshick, R., et al. (2015). Faster r-cnn: Towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems, 28.

Saito, K., Hu, P., Darrell, T., et al. (2022). Learning to detect every thing in an open world. In Computer vision–ECCV 2022: 17th European conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXIV (pp. 268–284). Springer.

Shao, S., Li, Z., Zhang, T., et al. (2019). Objects365: A large-scale, high-quality dataset for object detection. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 8430–8439).

Tian, K., Jiang, Y., Diao, Q., et al. (2023). Designing bert for convolutional networks: Sparse and hierarchical masked modeling. arXiv:2301.03580

Tian, Z., Shen, C., Chen, H., et al. (2019). Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 9627–9636).

Vu, T., Jang, H., Pham, T. X., et al. (2019). Cascade rpn: Delving into high-quality region proposal network with adaptive convolution. Advances in Neural Information Processing Systems, 32.

Wang, W., Feiszli, M., Wang, H., et al. (2022). Open-world instance segmentation: Exploiting pseudo ground truth from learned pairwise affinity. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4422–4432).

Wang, X., Huang, T. E., Darrell, T., et al. (2020). Frustratingly simple few-shot object detection. arXiv:2003.06957

Wu, J., Liu, S., Huang, D., et al. (2020). Multi-scale positive sample refinement for few-shot object detection. In Computer vision–ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XVI 16 (pp. 456–472). Springer.

Xiao, Y., & Marlet, R. (2020). Few-shot object detection and viewpoint estimation for objects in the wild. In European conference on computer vision (ECCV).

Xie, Z., Zhang, Z., Cao, Y., et al. (2022). Simmim: A simple framework for masked image modeling. In International conference on computer vision and pattern recognition (CVPR).

Yan, X., Chen, Z., Xu, A., et al. (2019). Meta r-cnn: Towards general solver for instance-level low-shot learning. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 9577–9586).

Zhang, G., Luo, Z., Cui, K., et al. (2022). Meta-detr: Image-level few-shot detection with inter-class correlation exploitation. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Zitnick, C. L., & Dollár, P. (2014). Edge boxes: Locating object proposals from edges. In Computer vision–ECCV 2014: 13th European conference, Zurich, Switzerland, September 6–12, 2014, Proceedings, Part V 13 (pp. 391–405). Springer.

Funding

Not applicable

Author information

Contributions

ML contributed to the idea, algorithm, theoretical analysis, writing, and experiments. JJ contributed to the idea and writing. NL and MP contributed to the writing.

Ethics declarations

Conflict of interest

We declare that we have no conflict of interest.

Additional information

Editors: Vu Nguyen, Dani Yogatama.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, M., Jiao, J., Li, N. et al. Task-decoupled interactive embedding network for object detection. Mach Learn 113, 3829–3848 (2024). https://doi.org/10.1007/s10994-023-06430-w

DOI: https://doi.org/10.1007/s10994-023-06430-w