Abstract

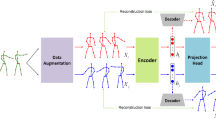

Traditional contrastive learning frameworks for skeleton-based action recognition rely on data augmentation and memory banks to obtain the positive and negative samples required for training. However, this instance-level pseudo-label generation mechanism does not fully exploit the rich cluster-level semantic information contained in human skeleton sequences. In this paper, we propose a Progressive Semantic Learning method (ProSL), which gradually refines the pseudo-label generation mechanism of self-supervised contrastive learning through an iterative framework, so that representation learning can effectively capture action semantics. Specifically, an existing contrastive learning method first provides an initial skeleton encoder. On top of this encoder, a clustering method is applied to generate a Codebook that encodes the semantic information of human actions, and this Codebook is in turn used to improve pseudo-label generation. By iterating these two steps, we achieve progressive semantic learning and obtain a skeleton encoder that better captures action semantics. Extensive experiments on four datasets demonstrate that the proposed method achieves state-of-the-art (SOTA) performance on multiple downstream tasks.
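To make the second step more concrete, here is a minimal sketch of how a clustering-derived codebook can turn into cluster-level pseudo-labels. It assumes plain k-means (MacQueen et al., 1967) as the clustering method and treats samples that share a codebook entry as positives. The helper names `kmeans_codebook` and `cluster_positive_mask` are hypothetical, and this is an illustration of the idea rather than the ProSL implementation.

```python
import numpy as np

def kmeans_codebook(features, k, n_iter=50, seed=0):
    """Hypothetical helper: Lloyd's k-means over encoder features.

    Returns (codebook, labels). k-means stands in for the clustering
    method, which the abstract does not specify.
    """
    rng = np.random.default_rng(seed)
    # Initialize the codebook with k distinct samples.
    codebook = features[rng.choice(len(features), size=k, replace=False)].astype(float)

    def assign(cb):
        # Index of the nearest codebook entry (squared Euclidean distance).
        d = ((features[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        return d.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(codebook)
        for j in range(k):
            members = features[labels == j]
            if len(members) > 0:  # keep the old entry if its cluster empties
                codebook[j] = members.mean(axis=0)
    return codebook, assign(codebook)


def cluster_positive_mask(labels):
    """Hypothetical helper: mask[i, j] is True when samples i and j share a
    codebook entry (cluster-level positives). Self-pairs are excluded."""
    mask = labels[:, None] == labels[None, :]
    np.fill_diagonal(mask, False)
    return mask


# Toy features: two well-separated groups of "actions".
features = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])
codebook, labels = kmeans_codebook(features, k=2)
mask = cluster_positive_mask(labels)
```

An instance-level scheme treats only augmented views of the same sample as positives. With this mask, samples 0 and 1 (and samples 2 and 3) also become positives because they fall into the same codebook entry.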

Data availability

All data used in this work are publicly available.

Code availability

The source code is provided in the Supplementary Materials and will be released upon publication.

References

Bardes, A., Ponce, J., LeCun, Y.: VICReg: Variance-invariance-covariance regularization for self-supervised learning. arXiv preprint arXiv:2105.04906 (2021)

Caron, M., Touvron, H., Misra, I., Jégou, H., Mairal, J., Bojanowski, P., Joulin, A.: Emerging properties in self-supervised vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 9650–9660 (2021)

Caron, M., Misra, I., Mairal, J., Goyal, P., Bojanowski, P., Joulin, A.: Unsupervised learning of visual features by contrasting cluster assignments. Advances in Neural Information Processing Systems 33, 9912–9924 (2020)

Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. In: International Conference on Machine Learning, pp. 1597–1607 (2020). PMLR

Chen, Y., Zhao, L., Yuan, J., Tian, Y., Xia, Z., Geng, S., Han, L., Metaxas, D.N.: Hierarchically self-supervised transformer for human skeleton representation learning. In: European Conference on Computer Vision, pp. 185–202 (2022). Springer

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological) 39(1), 1–22 (1977)

Dong, J., Sun, S., Liu, Z., Chen, S., Liu, B., Wang, X.: Hierarchical contrast for unsupervised skeleton-based action representation learning. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, pp. 525–533 (2023)

Grill, J.-B., Strub, F., Altché, F., Tallec, C., Richemond, P., Buchatskaya, E., Doersch, C., Avila Pires, B., Guo, Z., Gheshlaghi Azar, M., et al.: Bootstrap your own latent: A new approach to self-supervised learning. Advances in Neural Information Processing Systems 33, 21271–21284 (2020)

Guo, T., Liu, H., Chen, Z., Liu, M., Wang, T., Ding, R.: Contrastive learning from extremely augmented skeleton sequences for self-supervised action recognition. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, pp. 762–770 (2022)

Guo, Y., Xu, M., Li, J., Ni, B., Zhu, X., Sun, Z., Xu, Y.: HCSC: Hierarchical contrastive selective coding. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9706–9715 (2022)

He, K., Chen, X., Xie, S., Li, Y., Dollár, P., Girshick, R.: Masked autoencoders are scalable vision learners. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 16000–16009 (2022)

He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9729–9738 (2020)

Hershey, J.R., Olsen, P.A.: Approximating the Kullback Leibler divergence between Gaussian mixture models. In: 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, vol. 4, p. 317 (2007). IEEE

Hua, Y., Wu, W., Zheng, C., Lu, A., Liu, M., Chen, C., Wu, S.: Part aware contrastive learning for self-supervised action recognition. arXiv preprint arXiv:2305.00666 (2023)

Kim, B., Chang, H.J., Kim, J., Choi, J.Y.: Global-local motion transformer for unsupervised skeleton-based action learning. In: European Conference on Computer Vision, pp. 209–225 (2022). Springer

Li, L., Wang, M., Ni, B., Wang, H., Yang, J., Zhang, W.: 3D human action representation learning via cross-view consistency pursuit. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4741–4750 (2021)

Li, J., Zhou, P., Xiong, C., Hoi, S.C.: Prototypical contrastive learning of unsupervised representations. arXiv preprint arXiv:2005.04966 (2020)

Lin, L., Song, S., Yang, W., Liu, J.: MS2L: Multi-task self-supervised learning for skeleton based action recognition. In: Proceedings of the 28th ACM International Conference on Multimedia, pp. 2490–2498 (2020)

Lin, L., Zhang, J., Liu, J.: Actionlet-dependent contrastive learning for unsupervised skeleton-based action recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2363–2372 (2023)

Liu, J., Song, S., Liu, C., Li, Y., Hu, Y.: A benchmark dataset and comparison study for multi-modal human action analytics. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 16(2), 1–24 (2020)

Liu, J., Shahroudy, A., Perez, M., Wang, G., Duan, L.-Y., Kot, A.C.: NTU RGB+D 120: A large-scale benchmark for 3D human activity understanding. IEEE Transactions on Pattern Analysis and Machine Intelligence 42(10), 2684–2701 (2019)

Maaten, L., Hinton, G.: Visualizing data using t-SNE. Journal of Machine Learning Research 9(11) (2008)

MacQueen, J., et al.: Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, vol. 1, pp. 281–297 (1967). Oakland, CA, USA

Mao, Y., Zhou, W., Lu, Z., Deng, J., Li, H.: CMD: Self-supervised 3D action representation learning with cross-modal mutual distillation. In: European Conference on Computer Vision, pp. 734–752 (2022). Springer

Noroozi, M., Vinjimoor, A., Favaro, P., Pirsiavash, H.: Boosting self-supervised learning via knowledge transfer. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 9359–9367 (2018)

Oord, A.v.d., Li, Y., Vinyals, O.: Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748 (2018)

Peng, W., Hong, X., Chen, H., Zhao, G.: Learning graph convolutional network for skeleton-based human action recognition by neural searching. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 2669–2676 (2020)

Rao, H., Xu, S., Hu, X., Cheng, J., Hu, B.: Augmented skeleton based contrastive action learning with momentum LSTM for unsupervised action recognition. Information Sciences 569, 90–109 (2021)

Shah, A., Roy, A., Shah, K., Mishra, S., Jacobs, D., Cherian, A., Chellappa, R.: HaLP: Hallucinating latent positives for skeleton-based self-supervised learning of actions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 18846–18856 (2023)

Shahroudy, A., Liu, J., Ng, T.-T., Wang, G.: NTU RGB+D: A large scale dataset for 3D human activity analysis. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1010–1019 (2016)

Su, Y., Lin, G., Wu, Q.: Self-supervised 3D skeleton action representation learning with motion consistency and continuity. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 13328–13338 (2021)

Su, K., Liu, X., Shlizerman, E.: Predict & cluster: Unsupervised skeleton based action recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9631–9640 (2020)

Thoker, F.M., Doughty, H., Snoek, C.G.: Skeleton-contrastive 3D action representation learning. In: Proceedings of the 29th ACM International Conference on Multimedia, pp. 1655–1663 (2021)

Wang, T., Isola, P.: Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In: International Conference on Machine Learning, pp. 9929–9939 (2020). PMLR

Wang, F., Liu, H.: Understanding the behaviour of contrastive loss. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2495–2504 (2021)

Wu, Z., Xiong, Y., Yu, S.X., Lin, D.: Unsupervised feature learning via non-parametric instance discrimination. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3733–3742 (2018)

Wu, X., Kumar, V., Ross Quinlan, J., Ghosh, J., Yang, Q., Motoda, H., McLachlan, G.J., Ng, A., Liu, B., Yu, P.S., et al.: Top 10 algorithms in data mining. Knowledge and Information Systems 14, 1–37 (2008)

Yang, S., Liu, J., Lu, S., Er, M.H., Kot, A.C.: Skeleton cloud colorization for unsupervised 3D action representation learning. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 13423–13433 (2021)

Zhang, H., Hou, Y., Zhang, W., Li, W.: Contrastive positive mining for unsupervised 3D action representation learning. In: European Conference on Computer Vision, pp. 36–51 (2022). Springer

Zhang, J., Lin, L., Liu, J.: Hierarchical consistent contrastive learning for skeleton-based action recognition with growing augmentations. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, pp. 3427–3435 (2023)

Zheng, N., Wen, J., Liu, R., Long, L., Dai, J., Gong, Z.: Unsupervised representation learning with long-term dynamics for skeleton based action recognition. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32 (2018)

Zhou, Y., Duan, H., Rao, A., Su, B., Wang, J.: Self-supervised action representation learning from partial spatio-temporal skeleton sequences. arXiv preprint arXiv:2302.09018 (2023)

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 42394060 and 42394064.

Funding

This work was supported by the National Natural Science Foundation of China under Grants 42394060 and 42394064.

Author information

Contributions

Hao Qin and Luyuan Chen conceived the ideas in this paper, conducted the experiments, and wrote the manuscript. Ming Kong and Zhuoran Zhao assisted with the theoretical analysis. Xianzhou Zeng and Mengxu Lu revised the manuscript. Qiang Zhu was responsible for the overall direction and planning. All authors discussed the results and contributed to the final manuscript.

Ethics declarations

Conflict of interest

None.

Ethics approval

Not applicable.

Consent for publication

Not applicable.

Additional information

Editors: Kee-Eung Kim, Shou-De Lin.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Rationality of the training process

Iterative algorithms have proven effective in many fields (Dempster et al., 1977; MacQueen et al., 1967; Wu et al., 2008). Here, we analyze ProSL from the perspective of the Expectation-Maximization (EM) algorithm. Suppose that in the t-th iteration the network parameters are denoted by \(\theta ^t\) and the SCoBo parameters by \(\varphi ^t\). Given n samples, the objective of self-supervised skeleton sequence representation learning is to maximize the log-likelihood of the data.
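Here is a sketch of this objective in standard maximum-likelihood form. It assumes the semicolon notation \(p(\cdot\,;\theta)\) that the appendix uses for parameterized distributions, and it is our rendering of the usual form rather than a copy of the paper's equation:

```latex
\theta^{t+1} = \arg\max_{\theta} \sum_{i=1}^{n} \log p(x_i; \theta)
```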

After introducing SCoBo, we maximize the log-likelihood of the model distribution (Eq. 14).
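We assume here that each \(\varphi_i^t\) is a discrete latent variable ranging over SCoBo entries. Under that assumption, introducing SCoBo turns the per-sample likelihood into a marginal over the latent variable. This is why the objective has no closed-form maximizer:

```latex
\theta^{t+1} = \arg\max_{\theta} \sum_{i=1}^{n} \log \sum_{\varphi_i^t} p(x_i, \varphi_i^t; \theta)
```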

Because Eq. 14 is expressed through the marginal probability of \(x_i\), \(\theta ^{t+1}\) cannot be calculated directly. To address this, we introduce an unknown distribution \(Q_i(\varphi _i^t)\) and bound Eq. 14 from below using Jensen’s inequality (Eq. 15).
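Here is a sketch of the resulting bound in the standard EM form. It assumes that \(Q_i\) is a probability distribution over \(\varphi_i^t\), i.e. \(Q_i \ge 0\) and \(\sum_{\varphi_i^t} Q_i(\varphi_i^t) = 1\). The inequality holds because \(\log\) is concave, so the log of an expectation under \(Q_i\) is at least the expectation of the log:

```latex
\sum_{i=1}^{n} \log \sum_{\varphi_i^t} Q_i(\varphi_i^t)\,
  \frac{p(x_i, \varphi_i^t; \theta)}{Q_i(\varphi_i^t)}
\;\ge\;
\sum_{i=1}^{n} \sum_{\varphi_i^t} Q_i(\varphi_i^t)\,
  \log \frac{p(x_i, \varphi_i^t; \theta)}{Q_i(\varphi_i^t)}
```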

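Equality in Jensen’s inequality holds when \(Q_i(\varphi _i^t)\) equals the posterior distribution of \(\varphi _i^t\) given \(x_i\) and the current network parameters.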
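The equality condition can be checked numerically with toy numbers. The sketch below uses hypothetical helper names and a single sample with K = 5 codebook entries. The gap between \(\log p(x_i;\theta)\) and the bound equals the KL divergence from \(Q_i\) to the posterior. It is therefore positive for an arbitrary \(Q_i\) and vanishes when \(Q_i(\varphi) = p(x_i,\varphi;\theta) / \sum_{\varphi'} p(x_i,\varphi';\theta)\).

```python
import numpy as np

def log_marginal(joint):
    """log p(x_i; theta) = log sum_phi p(x_i, phi; theta) for a single sample."""
    return float(np.log(joint.sum()))


def jensen_bound(joint, q):
    """Lower bound: sum_phi q(phi) * log(p(x_i, phi; theta) / q(phi))."""
    return float(np.sum(q * (np.log(joint) - np.log(q))))


rng = np.random.default_rng(0)
joint = 0.1 * rng.random(5) + 1e-3   # toy p(x_i, phi; theta) over K = 5 entries
q_arbitrary = rng.random(5) + 1e-3
q_arbitrary /= q_arbitrary.sum()     # any valid distribution over phi
posterior = joint / joint.sum()      # p(phi; x_i, theta)

# Gap = KL(q || posterior): non-negative, and zero at the posterior.
gap_arbitrary = log_marginal(joint) - jensen_bound(joint, q_arbitrary)
gap_posterior = log_marginal(joint) - jensen_bound(joint, posterior)
```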

Equation 15 gives a lower bound on the log-likelihood. Therefore, in the Maximization step, i.e., the representation learning process, our objective is to maximize this lower bound with respect to the network parameters \(\theta\).
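Under the same assumptions, hold \(Q_i\) fixed from the preceding estimate. The entropy-like term \(-\sum_{\varphi_i^t} Q_i(\varphi_i^t)\log Q_i(\varphi_i^t)\) in the bound then does not depend on \(\theta\), so the Maximization-step objective can be sketched as:

```latex
\theta^{t+1} = \arg\max_{\theta} \sum_{i=1}^{n} \sum_{\varphi_i^t}
  Q_i(\varphi_i^t)\, \log p(x_i, \varphi_i^t; \theta)
```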

In the Expectation step, which corresponds to the action semantic learning process in ProSL, we estimate \(p(\varphi _i^{t+1};x_i, \theta ^{t+1})\) to obtain \(SCoBo^{t+1}\).
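Here is one plausible way to realize this estimate, sketched under our own assumptions. First, embed each sample with the current encoder. Next, compute a soft assignment \(q_{ik}\) to every codebook entry with a temperature-scaled softmax over cosine similarity; this is common in prototype-based methods but not necessarily ProSL's choice. Finally, re-estimate each entry as the responsibility-weighted mean of the embeddings. The functions `e_step` and `update_codebook` and the `temperature` value are hypothetical.

```python
import numpy as np

def e_step(embeddings, codebook, temperature=0.1):
    """Soft assignment q[i, k], an assumed stand-in for p(phi_i = k; x_i, theta):
    softmax of cosine similarity divided by a temperature."""
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    c = codebook / np.linalg.norm(codebook, axis=1, keepdims=True)
    logits = z @ c.T / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    q = np.exp(logits)
    return q / q.sum(axis=1, keepdims=True)      # each row sums to 1


def update_codebook(embeddings, q):
    """Re-estimate each codebook entry as the q-weighted mean of the embeddings."""
    weights = q / q.sum(axis=0, keepdims=True)   # normalize per codebook entry
    return weights.T @ embeddings                # shape (K, D)


embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
codebook = np.array([[1.0, 0.0], [0.0, 1.0]])
q = e_step(embeddings, codebook)
new_codebook = update_codebook(embeddings, q)
```

A lower temperature makes the assignments closer to hard k-means labels. A higher one spreads each sample over several entries.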

Each EM iteration does not decrease the likelihood, and the iterative process has been proven to converge (Dempster et al., 1977). However, the final result depends strongly on the initialization, and the model parameters may become trapped in local optima. In ProSL, to ensure the quality of \(\varphi _{i}^0\), we first perform several rounds of representation learning before starting the iterative training. Figure 4b in the main text illustrates the necessity of this strategy.
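Sensitivity to initialization already appears in plain k-means (MacQueen et al., 1967), an alternating procedure of the same family. This toy example uses hypothetical data and a hypothetical helper `lloyd`; it is not ProSL code. Four points sit at the corners of a 4 × 1 rectangle. A sensible initialization reaches the left/right split. A poor one stays stuck in the bottom/top split, with a much larger within-cluster error.

```python
import numpy as np

def lloyd(points, init_centroids, n_iter=20):
    """Lloyd's k-means from given initial centroids; returns (centroids, sse)."""
    centroids = np.asarray(init_centroids, dtype=float).copy()
    for _ in range(n_iter):
        d = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        assign = d.argmin(axis=1)
        for j in range(len(centroids)):
            if np.any(assign == j):  # leave empty clusters where they are
                centroids[j] = points[assign == j].mean(axis=0)
    d = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    sse = float(d.min(axis=1).sum())  # within-cluster sum of squared errors
    return centroids, sse


# Corners of a wide rectangle: the better split is left vs. right.
pts = np.array([[0.0, 0.0], [0.0, 1.0], [4.0, 0.0], [4.0, 1.0]])
_, sse_good = lloyd(pts, [[0.0, 0.5], [4.0, 0.5]])  # sse_good == 1.0
_, sse_bad = lloyd(pts, [[2.0, 0.0], [2.0, 1.0]])   # stuck at bottom/top, sse_bad == 16.0
```

Running representation learning before building the first codebook is intended to avoid this kind of poor starting point.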

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qin, H., Chen, L., Kong, M. et al. Progressive semantic learning for unsupervised skeleton-based action recognition. Mach Learn 114, 64 (2025). https://doi.org/10.1007/s10994-024-06667-z

DOI: https://doi.org/10.1007/s10994-024-06667-z