Abstract

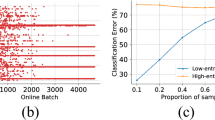

Test-time adaptation is the task of updating a pre-trained source model during inference using test data from target domains whose distributions differ from the source. However, updating the model frequently over a long period without resetting it causes two main problems: error accumulation and catastrophic forgetting. Although some recent methods alleviate these problems by designing new loss functions or update strategies, they remain sensitive to hyperparameters or impose a storage burden. Moreover, most methods treat every target domain equally, neglecting both the characteristics of each target domain and the state of the current model, which can mislead the update direction. To address these issues, we first use the mean cosine similarity, computed per test batch between the features output by the source and updated models, to measure the change of target domains. We then summarize the elasticity of the mean cosine similarity to guide the model to update and restore adaptively. Motivated by this, we propose a frustratingly simple yet efficient method called Elastic-Test-time ENTropy Minimization (E-TENT), which dynamically adjusts the mean cosine similarity based on the relationship established between it and the momentum coefficient. Combined with three additional minimal improvements, E-TENT exhibits significant performance gains and strong robustness on CIFAR10-C, CIFAR100-C and ImageNet-C across various practical scenarios.
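The quantities named in the abstract can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the function names are hypothetical, features and weights are represented as plain lists of floats, and the restoration step is assumed to be a standard momentum-style interpolation towards the source weights. The abstract does not state how E-TENT maps the mean cosine similarity to the momentum coefficient, so `momentum` is left as an input here.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors (lists of floats)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Small constant guards against division by zero for all-zero features.
    return dot / (norm_u * norm_v + 1e-12)


def mean_cosine_similarity(source_feats, updated_feats):
    """Mean cosine similarity over one test batch.

    source_feats[i] and updated_feats[i] are the features that the source
    model and the updated model produce for the same test sample i.
    """
    sims = [cosine_similarity(s, t) for s, t in zip(source_feats, updated_feats)]
    return sum(sims) / len(sims)


def mean_entropy(probs):
    """Mean Shannon entropy of a batch of predicted class distributions.

    This is the kind of objective minimized in entropy-based adaptation.
    """
    entropies = [-sum(p * math.log(p) for p in row if p > 0.0) for row in probs]
    return sum(entropies) / len(entropies)


def restore_towards_source(updated_w, source_w, momentum):
    """Assumed momentum-style restoration (hypothetical helper).

    momentum = 1.0 keeps the updated weights unchanged;
    momentum = 0.0 resets them fully to the source weights.
    """
    return [momentum * w + (1.0 - momentum) * s for w, s in zip(updated_w, source_w)]
```

In this sketch, a batch whose updated features have drifted far from the source features yields a low mean cosine similarity, which is the signal the abstract describes using to decide how strongly the model should update or restore.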

Data availability

The datasets are all publicly available online.

CIFAR10-C: https://zenodo.org/records/2535967#.ZBiI7NDMKUk

CIFAR100-C: https://zenodo.org/records/3555552#.ZBiJA9DMKUk

ImageNet-C: https://zenodo.org/records/2235448#.Yj2RO_co_mF

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Grant 2022YFB3304602, the Beijing Natural Science Foundation under Grant L243009, the National Natural Science Foundation of China under Grant 62473367, and the Science and Technology Service Network Initiative (STS) Project of the Chinese Academy of Sciences under Grant STS-HP-202308.

Author information

Contributions

Conceptualization and methodology: JL. Formal analysis: JL and XB. Software: JL, JC and YW. Writing—original draft preparation: JL. Writing—review and editing: All authors. Funding acquisition: CL and JT. Supervision: All authors.

Ethics declarations

Conflict of interest

Not applicable

Ethical approval

Not applicable

Consent to participate

Not applicable

Consent for publication

Not applicable

Additional information

Editor: Joao Gama.

About this article

Cite this article

Li, J., Liu, C., Bai, X. et al. Compression and restoration: exploring elasticity in continual test-time adaptation. Mach Learn 114, 104 (2025). https://doi.org/10.1007/s10994-025-06739-8

DOI: https://doi.org/10.1007/s10994-025-06739-8