Abstract

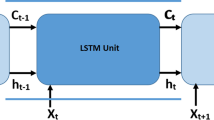

Resource allocation (RA) is a challenging task in many fields and applications, including communications and computer networks. Conventional solutions to such problems usually come at a cost in time and memory, especially for massive networks such as Internet-of-Things (IoT) networks. In this paper, two deep network models for RA are proposed to enable a clustered underlay IoT deployment, in which a group of IoT nodes uploads information to a centralized gateway in its vicinity by reusing the communication channels of conventional cellular users. The RA problem is formulated as a two-dimensional matching problem, which can be expressed as a traditional linear sum assignment problem (LSAP). Both proposed models are based on recurrent neural networks (RNNs); specifically, we investigate the performance of two long short-term memory (LSTM) based architectures. The results show that the proposed techniques could replace the well-known Hungarian algorithm for solving LSAPs, owing to their ability to handle problems of different sizes, their high accuracy, and their very fast execution time. Additionally, the results show that the obtained accuracy exceeds that of state-of-the-art deep network techniques.
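To make the LSAP formulation concrete, the following minimal sketch assigns each row of a square cost matrix (for instance, an IoT node) to a distinct column (for instance, a cellular channel) so that the total cost is minimal. It is an illustration only: it uses exhaustive search rather than the Hungarian algorithm or the LSTM models discussed in the paper, and the function name `solve_lsap_bruteforce` and the cost values are hypothetical, not taken from the article.

```python
from itertools import permutations


def solve_lsap_bruteforce(cost):
    """Return (assignment, total) minimising sum(cost[i][assignment[i]]).

    cost is a square list of lists; row i could stand for an IoT node and
    column j for a channel. Exhaustive search is O(n!), so this is only
    meant for tiny illustrative instances.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        # perm[i] is the column assigned to row i; each column is used once.
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


# Hypothetical 3x3 cost matrix (made-up values, not from the paper).
cost = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]
assignment, total = solve_lsap_bruteforce(cost)
print(assignment, total)  # [1, 0, 2] 5
```

Because the search space grows factorially with the problem size, exact polynomial-time methods such as the Hungarian algorithm, or learned approximations like those the abstract describes, are what one would use in practice.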

Funding

This research was supported in part by the China NSFC Grant 61872248, Guangdong NSF 2017A030312008, Fok Ying-Tong Education Foundation for Young Teachers in the Higher Education Institutions of China (Grant No. 161064), GDUPS (2015), Shenzhen Science and Technology Foundation (No. ZDSYS20190902092853047), Scientific Research Fund of Benha University (Project 2/1/14).

About this article

Cite this article

ElHalawany, B.M., Wu, K. & Zaky, A.B. Deep Learning Based Resources Allocation for Internet-of-Things Deployment Underlaying Cellular Networks. Mobile Netw Appl 25, 1833–1841 (2020). https://doi.org/10.1007/s11036-020-01566-8

DOI: https://doi.org/10.1007/s11036-020-01566-8