Abstract

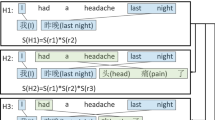

Machine translation has become an indispensable application on mobile phones. However, current mainstream neural machine translation (NMT) models rely on continually increasing the number of parameters to achieve better performance, which is impractical on mobile phones. In this paper, we improve the performance of NMT using shallow syntax of the target language (e.g., part-of-speech (POS) tags), which offers better accuracy and lower latency than deep syntax such as dependency parsing. In particular, our models require fewer parameters and less runtime than other complex machine translation models, making mobile applications possible. Specifically, we present three RNN-based NMT decoding models (an independent decoder, a gates shared decoder, and a fully shared decoder) that jointly predict the target word and POS tag sequences. Experiments on Chinese-English and German-English translation tasks show that the fully shared decoder achieves the best performance, improving the BLEU score by 1.4 and 2.25 points, respectively, over the attention-based NMT model. In addition, we extend the idea to Transformer-based models, and the experimental results show that the BLEU score improves further.
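The decoders described above predict a target word and its POS tag at every step. As an illustration only (not the authors' implementation), the sketch below shows the core idea behind a fully shared decoder, under the assumption that one decoder hidden state feeds two separate softmax output layers, one over the word vocabulary and one over the POS tag set. It also assumes that training minimizes the sum of the two negative log-likelihoods, weighted by a hypothetical factor `lam`. All names (`joint_step_loss`, `joint_sequence_loss`, `w_word`, `w_pos`, `lam`) are hypothetical, and plain Python lists stand in for tensors.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def project(weights: Matrix, h: Sequence[float]) -> List[float]:
    """Bias-free linear output layer: one weight row per output class."""
    return [sum(w * x for w, x in zip(row, h)) for row in weights]


def joint_step_loss(h: Sequence[float], w_word: Matrix, w_pos: Matrix,
                    word_id: int, pos_id: int, lam: float = 1.0) -> float:
    """Loss for one decoding step in which a single shared hidden state `h`
    predicts both the target word and its POS tag (assumed formulation)."""
    p_word = softmax(project(w_word, h))  # distribution over target words
    p_pos = softmax(project(w_pos, h))    # distribution over POS tags
    return -math.log(p_word[word_id]) - lam * math.log(p_pos[pos_id])


def joint_sequence_loss(states, w_word, w_pos, word_ids, pos_ids, lam=1.0):
    """Sum the per-step joint losses over one target sentence."""
    return sum(joint_step_loss(h, w_word, w_pos, w, p, lam)
               for h, w, p in zip(states, word_ids, pos_ids))


# Usage: with an all-zero hidden state both distributions are uniform,
# so a 4-word vocabulary and 2 POS tags give log(4) + log(2) = log(8).
h0 = [0.0, 0.0]
w_word = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
w_pos = [[1.0, 0.0], [0.0, 1.0]]
print(round(joint_step_loss(h0, w_word, w_pos, word_id=2, pos_id=1), 4))  # prints 2.0794
```

Sharing `h` between the two output layers is what allows the POS prediction task to shape the word decoder's representation. The weight `lam` is an illustrative knob, not a value reported in the paper.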

Notes

Six combinations (shared gates / independent gates): {[input / forget, output], [input, forget / output], [input, output / forget], [forget / input, output], [output / input, forget], [forget, output / input]}.
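These six combinations are exactly the ways to split the three LSTM gates (input, forget, output) into a non-empty shared group and a non-empty independent group, which gives 2^3 - 2 = 6 splits. The short sketch below enumerates them. The helper name `gate_sharing_splits` is hypothetical and is not code from the paper.

```python
from itertools import combinations
from typing import List, Tuple

GATES = ("input", "forget", "output")


def gate_sharing_splits(gates=GATES) -> List[Tuple[Tuple[str, ...], Tuple[str, ...]]]:
    """Return every (shared, independent) split with both groups non-empty."""
    splits = []
    for k in range(1, len(gates)):  # size of the shared group: 1 .. n-1
        for shared in combinations(gates, k):
            independent = tuple(g for g in gates if g not in shared)
            splits.append((shared, independent))
    return splits


# Print the splits in the same "[shared / independent]" notation as the note.
for shared, independent in gate_sharing_splits():
    print(f"[{', '.join(shared)} / {', '.join(independent)}]")
```

The printed order differs from the list in the note, but the two sets are identical.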

The code is implemented in PyTorch, and we plan to release it to the community.

The corpora include LDC2002E18, LDC2003E07, LDC2003E14, the Hansards portion of LDC2004T08, and LDC2005T06.

The kappa value is 0.65 for ratings on a 1–5 scale along two evaluation dimensions.
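The note does not say which kappa statistic was used. Assuming Cohen's kappa for two annotators, agreement is κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed agreement, and p_e is the chance agreement implied by each annotator's label frequencies. Below is a minimal sketch. The function name `cohens_kappa` and the example ratings are hypothetical and do not come from the paper's evaluation.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equally long, non-empty label sequences")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    ca, cb = Counter(a), Counter(b)
    # Chance agreement: product of the two annotators' marginal label rates.
    p_e = sum(ca[k] * cb[k] for k in ca.keys() | cb.keys()) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Usage: two annotators scoring six translations on a 1-5 scale.
print(round(cohens_kappa([5, 4, 4, 3, 2, 5], [5, 4, 3, 3, 2, 4]), 3))  # prints 0.556
```

A 1–5 scale is ordinal, so a weighted kappa that penalizes near-misses less than distant disagreements is also common. The plain version above treats every disagreement equally.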

About this article

Cite this article

Feng, X., Feng, Z., Zhao, W. et al. Enhanced Neural Machine Translation by Joint Decoding with Word and POS-tagging Sequences. Mobile Netw Appl 25, 1722–1728 (2020). https://doi.org/10.1007/s11036-020-01582-8
