Abstract

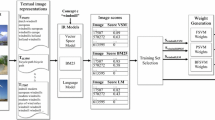

Visual concept detection consists in assigning labels to an image or keyframe based on its semantic content. Visual concepts are usually learned from an annotated image or video database with a machine learning algorithm, which poses the problem as a multiclass supervised learning task. Practical issues arise when the number of concepts grows, in particular in terms of available memory and computing time, both for learning and for testing. To cope with these issues, we propose to use a multiclass boosting algorithm with feature sharing and to reduce its computational complexity with a set of efficient improvements. For this purpose, we explore only a limited part of the possible parameter space, by adequately injecting randomness into the crucial steps of our algorithm. This allows our algorithm to handle classification problems with many classes in a reasonable time, thanks to a complexity that is linear with regard to the number of concepts considered, as well as to the number of features and their size. Our algorithm is evaluated in the context of information retrieval, on the benchmark proposed in the ImageCLEF international evaluation campaign, where it shows competitive results.
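The abstract does not detail the algorithm, so the following is only a minimal Python/NumPy sketch of the general idea, built on our own assumptions: multiclass boosting with regression stumps shared across classes in the style of JointBoost (weighted least-squares fits, with a class-specific constant for the classes that do not share the stump), where randomness is injected by sampling a few feature dimensions, a few thresholds and a few class subsets per round instead of searching them greedily or exhaustively. The function names `fit_shared_stump`, `train_random_shared_boost` and `predict_scores`, and the parameters `n_feats`, `n_thresholds` and `n_subsets`, are hypothetical and do not come from the paper.

```python
import numpy as np


def fit_shared_stump(x, Z, W, S, theta):
    """Weighted least-squares fit of one regression stump shared by the classes in S.

    x: (N,) values of one feature; Z: (N, C) labels in {-1, +1};
    W: (N, C) boosting weights; S: (C,) boolean mask of sharing classes.
    Returns (a, b, k, weighted squared error, (N, C) weak-learner outputs).
    """
    eps = 1e-12
    above = x > theta
    Ws, Zs = W[:, S], Z[:, S]
    # Shared outputs above / below the threshold, pooled over the classes in S.
    a = (Ws[above] * Zs[above]).sum() / (Ws[above].sum() + eps)
    b = (Ws[~above] * Zs[~above]).sum() / (Ws[~above].sum() + eps)
    # Per-class constants, used only by the classes outside S.
    k = (W * Z).sum(axis=0) / (W.sum(axis=0) + eps)
    H = np.where(S[None, :], np.where(above[:, None], a, b), k[None, :])
    err = (W * (Z - H) ** 2).sum()
    return a, b, k, err, H


def train_random_shared_boost(X, y, n_classes, n_rounds=40, n_feats=2,
                              n_thresholds=5, n_subsets=5, seed=0):
    """Boosting with shared stumps; features, thresholds and class subsets are sampled."""
    rng = np.random.default_rng(seed)
    N, D = X.shape
    # One-vs-all labels: Z[i, c] = +1 if sample i belongs to class c, else -1.
    Z = np.where(y[:, None] == np.arange(n_classes)[None, :], 1.0, -1.0)
    F = np.zeros((N, n_classes))  # additive scores of the strong classifier
    model = []
    for _ in range(n_rounds):
        W = np.exp(-Z * F)  # exponential-loss weights for every (sample, class)
        best = None
        # Random subset of feature dimensions (random subspace idea).
        for f in rng.choice(D, size=min(n_feats, D), replace=False):
            # Thresholds drawn among the observed values of feature f.
            for theta in rng.choice(X[:, f], size=n_thresholds):
                for _ in range(n_subsets):
                    # Random class subset instead of a greedy or exhaustive search.
                    S = rng.random(n_classes) < 0.5
                    if not S.any():
                        S[rng.integers(n_classes)] = True
                    a, b, k, err, H = fit_shared_stump(X[:, f], Z, W, S, theta)
                    if best is None or err < best[0]:
                        best = (err, f, theta, S, a, b, k, H)
        _, f, theta, S, a, b, k, H = best
        F += H
        model.append((f, theta, S, a, b, k))
    return model


def predict_scores(model, X, n_classes):
    """Sum the shared stumps; the predicted concept is the argmax over classes."""
    F = np.zeros((X.shape[0], n_classes))
    for f, theta, S, a, b, k in model:
        above = X[:, f] > theta
        F += np.where(S[None, :], np.where(above[:, None], a, b), k[None, :])
    return F


# Toy usage: 3 synthetic "concepts" in 4-D, only two informative dimensions.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
y = np.repeat(np.arange(3), 50)
X = np.hstack([centers[y] + rng.normal(size=(150, 2)), rng.normal(size=(150, 2))])
model = train_random_shared_boost(X, y, n_classes=3)
acc = (predict_scores(model, X, 3).argmax(axis=1) == y).mean()
print(f"training accuracy: {acc:.2f}")
```

In this sketch each round evaluates `n_feats × n_thresholds × n_subsets` candidate stumps, each at a cost proportional to N·C, so the training cost grows linearly with the number of classes C instead of with the number of possible class subsets. The uniform sampling scheme is an illustrative choice and not necessarily the one used by the authors.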



Acknowledgements

We would like to thank the two reviewers for their fruitful comments. We acknowledge support from the ANR (project Yoji) and from the DGCIS, which funded us through the regional business cluster Cap Digital (projects Géorama and Roméo).


About this article

Cite this article

Le Borgne, H., Honnorat, N. Fast shared boosting for large-scale concept detection. Multimed Tools Appl 60, 389–402 (2012). https://doi.org/10.1007/s11042-010-0607-y
