Abstract

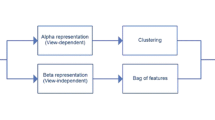

Existing geometry-based action matching methods typically assume collinearity or coplanarity to achieve view invariance. These assumptions limit their application to uncontrolled action patterns. In this paper, we use a new projective invariant, the characteristic number (CN), which can describe 3D non-coplanar points. For the motion trajectories of actions, we propose the temporal CN (TCN) for an individual joint point of the human body over a temporal series. This view-invariant feature can characterize an action well with a limited number of joints (a single joint in our experiments). In addition to TCN, we define the spatial characteristic number (SCN) on several joint points (five in this paper) in the spatial domain of a single frame. SCN complements the temporal features when only a few snapshots of an action are available. We validate both SCN and TCN on the widely used CMU Motion Capture Database (Mocap), the KTH Multiview Football Dataset II, and the IXMAS dataset. Compared with the state of the art, the promising recognition results indicate invariance to varying viewpoints. Results on noise-corrupted versions of the CMU and KTH databases demonstrate robustness to noise.
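To make the invariance property concrete, the NumPy sketch below computes a characteristic number for a closed loop of 3D points in homogeneous coordinates. It assumes the usual definition in which each auxiliary point \(Q_i^{(j)}\) on the line \(P_iP_{i+1}\) is written as \(a_i^{(j)}P_i + b_i^{(j)}P_{i+1}\) and CN is the product of all ratios \(a_i^{(j)}/b_i^{(j)}\). It then checks numerically that the value is unchanged by a random 4 × 4 projective transformation with arbitrary per-point scale factors. The helper names (`characteristic_number`, `make_loop`, `apply_projective`) are hypothetical, and the sketch does not reproduce how joints are selected for TCN or SCN.

```python
import numpy as np


def line_coeffs(p, q, x):
    """Solve x = a*p + b*q for (a, b) by least squares.

    p, q, x are homogeneous coordinates (length-4 arrays for 3D points);
    x is assumed to lie on the projective line through p and q.
    """
    M = np.column_stack([p, q])              # 4 x 2 linear system
    coeffs, *_ = np.linalg.lstsq(M, x, rcond=None)
    return coeffs[0], coeffs[1]


def characteristic_number(P, Q):
    """CN of a closed loop P_1..P_r (P_{r+1} wraps to P_1).

    P: array (r, 4) of homogeneous loop vertices.
    Q: array (r, n, 4); Q[i, j] lies on the line through P[i] and P[i+1].
    Returns the product over i, j of a_i^(j) / b_i^(j) (assumed definition).
    """
    r, n = Q.shape[0], Q.shape[1]
    cn = 1.0
    for i in range(r):
        p, q = P[i], P[(i + 1) % r]
        for j in range(n):
            a, b = line_coeffs(p, q, Q[i, j])
            cn *= a / b
    return cn


def make_loop(rng, r=4, n=2):
    """Hypothetical helper: random (almost surely non-coplanar) loop P
    plus n points Q on each edge with positive coefficients a, b."""
    P = np.hstack([rng.normal(size=(r, 3)), np.ones((r, 1))])
    a = rng.uniform(0.5, 2.0, size=(r, n))
    b = rng.uniform(0.5, 2.0, size=(r, n))
    Q = np.empty((r, n, 4))
    for i in range(r):
        for j in range(n):
            Q[i, j] = a[i, j] * P[i] + b[i, j] * P[(i + 1) % r]
    return P, Q


def apply_projective(rng, A, X):
    """Hypothetical helper: map homogeneous points X (..., 4) by A, then apply
    arbitrary nonzero per-point scale factors k, i.e. X' = k * A X."""
    Y = X @ A.T
    k = rng.uniform(0.5, 2.0, size=Y.shape[:-1] + (1,))
    return k * Y


rng = np.random.default_rng(0)
P, Q = make_loop(rng)
A = rng.normal(size=(4, 4))                  # random 4 x 4 matrix, invertible with probability 1
cn_before = characteristic_number(P, Q)
cn_after = characteristic_number(apply_projective(rng, A, P),
                                 apply_projective(rng, A, Q))
print(cn_before, cn_after)                   # equal up to floating-point error
```

The per-point scale factors play the role of the unknown homogeneous scales of projected points; because they cancel around the closed loop, no dehomogenization is needed to compare the two values.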

Acknowledgments

This work is partially supported by the Natural Science Foundation of China under grant Nos. 61402077, 61033012, 11171052, 61272371, 61003177 and 61328206, the Program for New Century Excellent Talents (NCET-11-0048), the Civil Aviation Administration of China (No. U1233110), and the Fundamental Research Funds for the Central Universities.

Appendix: Proof of Theorem 1

Assume \(A\) is the matrix of a given projective transformation \(\Phi\). Let \(\mathcal{P}^{\prime}=\{P_{i}^{\prime}\}_{i=1}^{r}\) denote the images of the points \(\mathcal{P}=\{P_{i}\}_{i=1}^{r}\) under \(\Phi\), where \(P_{i}^{\prime}=\Phi(P_{i})=k_{i}AP_{i}\), \(P_{r+1}=P_{1}\) and \(k_{r+1}=k_{1}\). If the points \(\mathcal{Q}=\left\{Q_{i}^{(j)}\right\}_{i=1,2,\ldots,r}^{j=1,2,\ldots,n}\) are projected to \(\mathcal{Q}^{\prime}=\left\{Q_{i}^{(j)\prime}\right\}_{i=1,2,\ldots,r}^{j=1,2,\ldots,n}\), then

Thus, the characteristic number of the transformed points is given by

which indicates that the characteristic number is invariant under projective transformations.
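The computation behind this argument can be sketched as follows, assuming the usual definition of the characteristic number, \(c(\mathcal{P},\mathcal{Q})=\prod_{i=1}^{r}\prod_{j=1}^{n} a_i^{(j)}/b_i^{(j)}\) with \(Q_i^{(j)}=a_i^{(j)}P_i+b_i^{(j)}P_{i+1}\). The coefficient symbols \(a_i^{(j)}, b_i^{(j)}\) and the scale factors \(k_i^{(j)}\) in \(Q_i^{(j)\prime}=k_i^{(j)}AQ_i^{(j)}\) are notation assumed here. Using \(AP_i=P_i^{\prime}/k_i\):

\[
\begin{aligned}
Q_i^{(j)\prime} &= k_i^{(j)}AQ_i^{(j)} = k_i^{(j)}\left(a_i^{(j)}AP_i + b_i^{(j)}AP_{i+1}\right)
= \frac{k_i^{(j)}a_i^{(j)}}{k_i}P_i^{\prime} + \frac{k_i^{(j)}b_i^{(j)}}{k_{i+1}}P_{i+1}^{\prime},\\
c(\mathcal{P}^{\prime},\mathcal{Q}^{\prime}) &= \prod_{i=1}^{r}\prod_{j=1}^{n}\frac{k_i^{(j)}a_i^{(j)}/k_i}{k_i^{(j)}b_i^{(j)}/k_{i+1}}
= \left(\prod_{i=1}^{r}\frac{k_{i+1}}{k_i}\right)^{n}\prod_{i=1}^{r}\prod_{j=1}^{n}\frac{a_i^{(j)}}{b_i^{(j)}}
= c(\mathcal{P},\mathcal{Q}),
\end{aligned}
\]

where the last step uses the telescoping product \(\prod_{i=1}^{r}k_{i+1}/k_i = k_{r+1}/k_1 = 1\).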

Cite this article

Jia, Q., Fan, X., Luo, Z. et al. Cross-view action matching using a novel projective invariant on non-coplanar space-time points. Multimed Tools Appl 75, 11661–11682 (2016). https://doi.org/10.1007/s11042-015-2704-4