Abstract

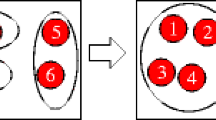

The Bag of Words (BoW) model is one of the most popular and effective image representation methods and has drawn increasing interest in the computer vision field. However, it has received little attention in visual tracking. In this paper, a visual tracking method based on Bag of Superpixels (BoS) is proposed. In BoS, the training samples are oversegmented to generate a sufficient number of superpixel patches. The K-means algorithm is then applied to the collected patches to form visual words of the target, from which a superpixel codebook is constructed. Finally, tracking is accomplished by searching, within a Bayesian inference framework, for the candidate that has the highest likelihood with respect to the codebook. In this process, an effective updating scheme is adopted to help the tracker resist occlusions and deformations. Experimental results demonstrate that the proposed method outperforms several state-of-the-art trackers.
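The codebook step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that superpixels have already been extracted by some oversegmentation method and that each one is summarized by a mean-color vector, and it substitutes a Bhattacharyya coefficient between word histograms for the likelihood, which the abstract does not specify. The names `kmeans`, `bag_histogram`, and `similarity`, along with the toy data, are hypothetical, and the updating scheme is omitted.

```python
import math

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(feature, centroids):
    """Index of the centroid (visual word) closest to a feature."""
    return min(range(len(centroids)), key=lambda j: sq_dist(feature, centroids[j]))

def kmeans(features, k, iters=20):
    """Plain Lloyd's k-means. Centroids start at evenly spaced samples,
    which is an assumption made here to keep the sketch deterministic."""
    if k < 1 or k > len(features):
        raise ValueError("k must be between 1 and the number of features")
    step = len(features) // k
    centroids = [list(features[i * step]) for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for f in features:
            clusters[nearest(f, centroids)].append(f)
        for j, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def bag_histogram(features, codebook):
    """Normalized histogram of visual-word assignments (the 'bag')."""
    hist = [0.0] * len(codebook)
    for f in features:
        hist[nearest(f, codebook)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist

def similarity(h1, h2):
    """Bhattacharyya coefficient, used here as a stand-in likelihood."""
    return sum(math.sqrt(a * b) for a, b in zip(h1, h2))

# Toy target: two reddish and two bluish superpixels (mean RGB, hypothetical).
target_feats = [(0.9, 0.1, 0.1), (0.8, 0.2, 0.1), (0.1, 0.1, 0.9), (0.2, 0.1, 0.8)]
codebook = kmeans(target_feats, k=2)
target_hist = bag_histogram(target_feats, codebook)

# Two candidate regions: "A" keeps both colors, "B" shows only the red part.
candidates = {
    "A": [(0.85, 0.1, 0.1), (0.15, 0.15, 0.85)],
    "B": [(0.9, 0.2, 0.1), (0.8, 0.1, 0.2)],
}
scores = {name: similarity(target_hist, bag_histogram(feats, codebook))
          for name, feats in candidates.items()}
best = max(scores, key=scores.get)
```

In this toy run the candidate whose word histogram matches the target's is selected. A real tracker would draw candidates from a motion model and combine this score with a prior, as the Bayesian framework mentioned in the abstract suggests.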

Acknowledgment

Jinhai Xiang is supported by the Fundamental Research Funds for the Central Universities (Program No. 2014BQ083). Liang Zhao is supported by the Fundamental Research Funds for the Central Universities (Program No. 2014QC008).

Cite this article

Fan, H., Xiang, J. & Zhao, L. Robust visual tracking via bag of superpixels. Multimed Tools Appl 75, 8781–8798 (2016). https://doi.org/10.1007/s11042-015-2790-3
