Abstract

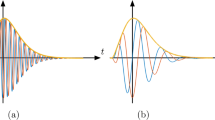

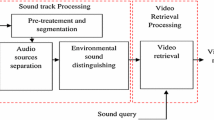

This paper introduces an audio-driven algorithm for detecting speech and music events in multimedia content. The proposed approach is based on the hypothesis that short-time, frame-level discrimination performance can be enhanced by identifying transition points between longer, semantically homogeneous segments of audio. Accordingly, a two-step approach is employed: transition points between the homogeneous regions are identified first, and the derived segments are then classified using a supervised binary classifier. The transition point detection mechanism is based on the analysis and composition of multiple self-similarity matrices, generated using different audio feature sets. The implemented technique aims to discriminate events by detecting transition points with high temporal resolution, a goal that is also reflected in the adopted assessment methodology. Multimedia indexing can then be deployed efficiently for both audio and video sequences, incorporating high-resolution temporal segmentation and semantic annotation extraction. The system is evaluated on three publicly available datasets, and experimental results are compared with existing implementations. The proposed algorithm is provided as an open-source software package to support reproducible research and encourage collaboration in the field.
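As an illustration of the first step only, the following minimal sketch shows one common way to locate transition points from self-similarity matrices: a checkerboard kernel is correlated along the main diagonal of the matrix (in the spirit of Foote's audio novelty measure) and peaks of the resulting curve are taken as candidate transitions. It is not the authors' released implementation. The function names, the use of cosine similarity, the plain averaging used to combine matrices from different feature sets, and the peak threshold are all assumptions made for the example, and the subsequent segment classification step is omitted.

```python
import numpy as np

def self_similarity(features):
    """Cosine self-similarity matrix of a (n_frames, n_dims) feature array."""
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    return unit @ unit.T

def checkerboard_kernel(half_width):
    """+1 in the two within-segment quadrants, -1 in the two cross quadrants."""
    signs = np.r_[-np.ones(half_width), np.ones(half_width)]
    return np.outer(signs, signs)

def novelty_curve(ssm, half_width):
    """Correlate the checkerboard kernel along the main diagonal of the SSM."""
    kernel = checkerboard_kernel(half_width)
    # Edge padding keeps the curve near zero at the start and end of the signal.
    padded = np.pad(ssm, half_width, mode="edge")
    n = ssm.shape[0]
    novelty = np.empty(n)
    for i in range(n):
        window = padded[i:i + 2 * half_width, i:i + 2 * half_width]
        novelty[i] = np.sum(window * kernel)
    return novelty

def pick_peaks(curve, threshold_ratio=0.5):
    """Indices of local maxima above a fraction of the global maximum (assumed rule)."""
    threshold = threshold_ratio * curve.max()
    return [i for i in range(1, len(curve) - 1)
            if curve[i] > threshold
            and curve[i] >= curve[i - 1]
            and curve[i] > curve[i + 1]]

def detect_transitions(feature_sets, half_width=8):
    """Average the SSMs of several feature sets (assumed composition), then pick peaks."""
    combined = np.mean([self_similarity(f) for f in feature_sets], axis=0)
    return pick_peaks(novelty_curve(combined, half_width))

# Synthetic demo: two hypothetical feature sets, each with a change at frame 40.
rng = np.random.default_rng(0)
set_a = np.vstack([np.tile([1.0, 0.0, 0.0], (40, 1)),
                   np.tile([0.0, 1.0, 0.0], (40, 1))])
set_a += rng.normal(0.0, 0.01, set_a.shape)
set_b = np.vstack([np.tile([1.0, 0.0, 0.0, 0.0, 0.0], (40, 1)),
                   np.tile([0.0, 0.0, 0.0, 1.0, 0.0], (40, 1))])
set_b += rng.normal(0.0, 0.01, set_b.shape)
transitions = detect_transitions([set_a, set_b], half_width=8)
print(transitions)
```

In this sketch a returned index `i` marks a boundary between frames `i - 1` and `i`. The kernel half-width trades temporal resolution against robustness: a smaller kernel localizes transitions more sharply but reacts more readily to short-lived changes within a segment.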

Cite this article

Tsipas, N., Vrysis, L., Dimoulas, C. et al. Efficient audio-driven multimedia indexing through similarity-based speech / music discrimination. Multimed Tools Appl 76, 25603–25621 (2017). https://doi.org/10.1007/s11042-016-4315-0

DOI: https://doi.org/10.1007/s11042-016-4315-0