Abstract

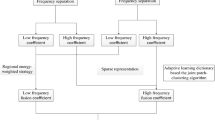

Image fusion aims to produce a single synthetic result by exploiting the complementary information available in the source data. Sparse Representation (SR) is a powerful signal processing theory used in a wide variety of applications such as image denoising, compression and fusion. Constructing a suitable dictionary at low computational cost is a major challenge in these applications. To address this, we propose a supervised dictionary learning approach for the fusion algorithm. First, gradient information is obtained for each patch of the training data set. Then, the edge strength and information content of the gradient patches are measured. Finally, a selection rule picks the patches with better focus features for training the overcomplete dictionary. This process considerably reduces the number of input patches used for dictionary training. At the fusion step, the globally learned dictionary is used to represent the given set of source image patches. Experimental results on various source image pairs demonstrate that the proposed fusion framework gives better visual quality and is quantitatively competitive with existing methods.

Appendix-1 (Sparse coefficients fusion)

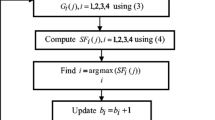

In image fusion, the sparse coefficients capture the local features of the source images through an optimal selection of dictionary atoms. To select the best sparse coefficients \( {\alpha}_F^t \) at the \( t \)th location, the following steps are carried out.

- Step 1: Activity level measurement. The absolute values of the sparse coefficients \( {\alpha}_A^t \) and \( {\alpha}_B^t \) are computed at each coefficient location.

- Step 2: Fusion of coefficients. The absolute values are summed to give the L1-norm of each of \( {\alpha}_A^t \) and \( {\alpha}_B^t \). The fused sparse coefficients \( {\alpha}_F^t \) are then obtained by selecting whichever of \( {\alpha}_A^t \) or \( {\alpha}_B^t \) has the larger L1-norm.
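
The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `l1_norm` and `fuse_coefficients`, the list-of-lists layout (one sparse vector per patch location \( t \)), and the tie-breaking choice in favour of source A are all assumptions made for the example.

```python
from typing import List, Sequence


def l1_norm(coeffs: Sequence[float]) -> float:
    """Activity level of one sparse vector: the sum of absolute coefficient values."""
    return sum(abs(c) for c in coeffs)


def fuse_coefficients(
    alpha_a: Sequence[Sequence[float]],
    alpha_b: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Max-L1 rule: at each location t, keep the vector with the larger L1-norm.

    alpha_a[t] and alpha_b[t] are the sparse vectors of patch t from sources
    A and B. Ties are resolved in favour of A (an assumption of this sketch).
    """
    if len(alpha_a) != len(alpha_b):
        raise ValueError("both sources must provide the same number of patches")
    fused = []
    for a_t, b_t in zip(alpha_a, alpha_b):
        # Step 1: activity level; Step 2: select the more active vector.
        chosen = a_t if l1_norm(a_t) >= l1_norm(b_t) else b_t
        fused.append(list(chosen))
    return fused


# Two patch locations, three dictionary atoms (hypothetical values).
alpha_a = [[0.0, 2.0, -1.0], [0.5, 0.0, 0.0]]
alpha_b = [[1.0, 0.0, 0.0], [0.0, -3.0, 0.0]]
fused = fuse_coefficients(alpha_a, alpha_b)
print(fused)
```

In this example the first location takes its vector from A (L1-norm 3.0 against 1.0) and the second from B (3.0 against 0.5). A fused patch would then be reconstructed by multiplying the learned dictionary with each selected vector.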

Cite this article

Aishwarya, N., Bennila Thangammal, C. An image fusion framework using novel dictionary based sparse representation. Multimed Tools Appl 76, 21869–21888 (2017). https://doi.org/10.1007/s11042-017-4583-3

DOI: https://doi.org/10.1007/s11042-017-4583-3