Abstract

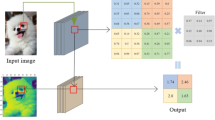

In this paper, we propose a more flexible alternative to the commonly used 2 × 2 non-overlapping max pooling in Convolutional Neural Networks, which we call Bi-linearly Weighted Fractional Max-Pooling. The proposed method enables max pooling with a stride below 2, and each output is computed from four bi-linearly weighted neighboring input activations. In a 2 × 2 non-overlapping max pooling operation, the spatial size is halved in both the x and y directions, so three-quarters of the activations in the feature maps are discarded. Because this reduction is so abrupt, the number of such pooling operations within a Convolutional Neural Network is very limited: adding more pooling operations leaves too few activations for subsequent operations. With our proposed pooling method, the spatial size reduction can be more gradual and can be adjusted flexibly. We applied several combinations of the proposed pooling method to a 50-layer ResNet and a 19-layer VGGNet, both with a reduced number of filters, and ran experiments on the FGVC-Aircraft, Oxford-IIIT Pet, STL-10 and CIFAR-100 datasets. Even with reduced memory usage, the proposed method showed a reasonable improvement in classification accuracy with the 50-layer ResNet. Additionally, exploiting the flexibility of the proposed pooling method, we changed the reduction rate dynamically at every training iteration, and our evaluation results indicated a potential regularization effect.
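The arithmetic behind the "three-quarters discarded" claim, and the limit it places on the number of pooling operations, can be illustrated with a short sketch. It is not the authors' implementation: the function names `retained_fraction` and `pooled_sizes`, the floor rounding of the output size, and the example ratio of √2 are all assumptions made here for illustration only.

```python
import math


def retained_fraction(ratio):
    """Fraction of activations kept when each spatial side shrinks by `ratio`."""
    if ratio <= 1.0:
        raise ValueError("ratio must be greater than 1")
    return 1.0 / (ratio * ratio)


def pooled_sizes(size, ratio):
    """Side lengths obtained by pooling repeatedly until the map would vanish.

    Assumption (not from the paper): each pooling step maps a side of
    length n to floor(n / ratio).
    """
    if ratio <= 1.0:
        raise ValueError("ratio must be greater than 1")
    sizes = [size]
    while int(sizes[-1] / ratio) >= 1:
        sizes.append(int(sizes[-1] / ratio))
    return sizes


if __name__ == "__main__":
    # ratio 2.0 corresponds to 2 x 2 non-overlapping pooling;
    # sqrt(2) is a hypothetical fractional ratio chosen for comparison.
    for ratio in (2.0, math.sqrt(2.0)):
        sizes = pooled_sizes(32, ratio)
        kept = retained_fraction(ratio)
        print(f"ratio={ratio:.3f} kept={kept:.2f} "
              f"steps={len(sizes) - 1} sizes={sizes}")
```

Under these assumptions, a feature map with a side of 32 admits five pooling steps at ratio 2 (keeping one quarter of the activations each time) but seven at ratio √2 (keeping one half each time), which is the kind of more gradual reduction the abstract describes.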

Acknowledgements

We would like to thank MEXT KAKENHI, Grant-in-Aid for Challenging Exploratory Research, Grant Number 15K12027 for partial support of our work.

Cite this article

Hang, S.T., Aono, M. Bi-linearly weighted fractional max pooling. Multimed Tools Appl 76, 22095–22117 (2017). https://doi.org/10.1007/s11042-017-4840-5

DOI: https://doi.org/10.1007/s11042-017-4840-5