Abstract

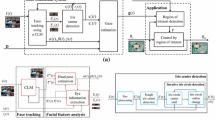

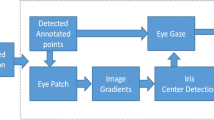

With the development of eye gaze tracking technology, much research has been devoted to adapting it so that severely disabled and wheelchair-bound users can interface with home appliances. For this purpose, two cameras are usually required: one for calculating the gaze position of the user, and the other for detecting and recognizing the home appliance. To accurately calculate the gaze position on the home appliance that the user is looking at, the Z-distance and direction of the home appliance from the user must be correctly measured. Stereo cameras or depth-measuring devices such as Kinect are therefore necessary, but they have limitations such as the need for additional camera calibration, the low acquisition speed of a two-camera setup, and the large size of the Kinect device. To overcome these problems, we propose a new method for estimating the continuous Z-distances and discrete directions of home appliances using one small near-infrared (NIR) web camera and a fuzzy system. Experimental results show that the proposed method can accurately estimate the Z-distances and directions to home appliances.

Acknowledgments

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2015R1D1A1A01056761), and in part by the Bio & Medical Technology Development Program of the NRF funded by the Korean government, MSIP (NRF-2016M3A9E1915855).

Cite this article

Jang, J.W., Heo, H., Bang, J.W. et al. Fuzzy-based estimation of continuous Z-distances and discrete directions of home appliances for NIR camera-based gaze tracking system. Multimed Tools Appl 77, 11925–11955 (2018). https://doi.org/10.1007/s11042-017-4842-3

DOI: https://doi.org/10.1007/s11042-017-4842-3