Abstract

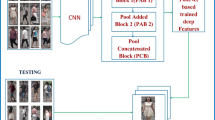

In this paper, we introduce a deep multi-instance learning framework to boost instance-level person re-identification performance. Motivated by the dramatic and complex variations in visual appearance observed in many current person re-identification datasets, we explicitly present a deep feature representation learning method for the final person re-identification task. However, most public datasets for person re-identification are small, which makes deep learning models prone to serious over-fitting. To alleviate this problem, we formulate person re-identification as a deep multi-instance learning (DMIL) task. More specifically, we build a novel end-to-end person re-identification system by unifying DMIL with convolutional feature learning. To capture the intra-class diversities and inter-class ambiguities of input visual samples across cameras, we propose a multi-scale convolutional feature learning method that is trained by optimizing the Contrastive Loss function. Comprehensive evaluations on three public benchmark datasets (VIPeR, ETHZ, and CUHK01) demonstrate the encouraging performance of the proposed person re-identification framework on small datasets.

Notes

In our method, we address only single-shot person re-identification; that is, we assume that there is only one image of each person under each camera.

The VIPeR dataset is available from https://vision.soe.ucsc.edu/?q=node/178

The ETHZ dataset is available from http://homepages.dcc.ufmg.br/~william/dataset.html

The CUHK01 dataset is available from http://www.ee.cuhk.edu.hk/xgwang/CUHK_identification.html

Acknowledgment

This work is supported by the Natural Science Foundation of China (Grants 61572214, 61602244 and U1536203), the Independent Innovation Research Fund sponsored by Huazhong University of Science and Technology (Project No. 2016YXMS089), and partially sponsored by the CCF-Tencent Open Research Fund. In addition, we would like to extend our sincere thanks to Gwangmin Choe, who gave us some good suggestions at the early stage of our work.

Appendix

The Contrastive Loss function on one sample pair is defined in (3). During one backpropagation pass of the SGD algorithm, the overall loss \(L_{W}\) is

in which \(N\) is the batch size of SGD. In (3), \(y\) is 1 if \((x_{1},x_{2})\) is a genuine pair and 0 otherwise. When the \(i\)th pair is a genuine pair, the loss it generates is

and the loss generated by a false pair is

Specifically,

The objective of SGD is to minimize the overall loss \(L_{W}\). The partial derivative of the loss is

The computation of \(\frac{\partial l_{W}(x_{1},x_{2},y)^{i}}{\partial W}\) relies on the values of \(y\) and \(D_{W}(x_{i},y_{i})\) for the training pair. In the feedforward pass, the distance between the image pair is calculated first, and the loss is then computed from (12), (13) or (14). The corresponding partial derivatives are then computed for the selected equation. Based on (12), (13) and (14), the partial derivatives in (5) are easy to obtain.
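The case analysis in this appendix can be illustrated with a minimal sketch. It does not reproduce the paper's equations (3), (12), (13) or (14), which are not shown in this text. It assumes the commonly used margin-based form of the contrastive loss: a genuine pair (\(y=1\)) contributes \(\frac{1}{2}D_{W}^{2}\), and a false pair (\(y=0\)) contributes \(\frac{1}{2}\max(0, m - D_{W})^{2}\). The margin \(m\), the factor \(\frac{1}{2}\), the averaging over the \(N\) pairs of a batch, and all function names below are assumptions made for illustration.

```python
import math


def euclidean_distance(a, b):
    """Assumed form of D_W: Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def pair_loss(d, y, margin=1.0):
    """Loss of one pair given its distance d (y = 1 genuine, y = 0 false)."""
    if y == 1:
        # Genuine pair: any non-zero distance is penalized.
        return 0.5 * d * d
    # False pair: penalized only while it lies inside the (assumed) margin.
    return 0.5 * max(0.0, margin - d) ** 2


def pair_loss_grad(d, y, margin=1.0):
    """Derivative of pair_loss with respect to the distance d."""
    if y == 1:
        return d
    # Inside the margin the slope is -(margin - d); outside it is zero.
    return -(margin - d) if d < margin else 0.0


def batch_loss(distances, labels, margin=1.0):
    """Mean pair loss over a mini-batch of N pairs (averaging is an assumption)."""
    n = len(distances)
    return sum(pair_loss(d, y, margin) for d, y in zip(distances, labels)) / n


if __name__ == "__main__":
    distances = [0.2, 0.5, 1.5]  # one genuine pair, two false pairs
    labels = [1, 0, 0]
    print("batch loss:", batch_loss(distances, labels))
    for d, y in zip(distances, labels):
        print("d =", d, "y =", y, "dl/dd =", pair_loss_grad(d, y))
```

As in the appendix, the branch is selected by the label and the distance of each pair: a genuine pair always yields a gradient, while a false pair yields one only when its distance is smaller than the margin. The gradient with respect to the network weights \(W\) would then follow from the chain rule through \(D_{W}\), which this sketch leaves out.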

Cite this article

Yuan, C., Xu, C., Wang, T. et al. Deep multi-instance learning for end-to-end person re-identification. Multimed Tools Appl 77, 12437–12467 (2018). https://doi.org/10.1007/s11042-017-4896-2

DOI: https://doi.org/10.1007/s11042-017-4896-2