Abstract

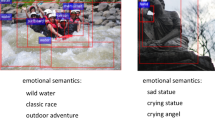

Affective image analysis, which estimates human emotional responses to images, has attracted increasing attention. Most existing methods focus on developing efficient visual features based on theoretical and empirical concepts, and extract these features from the image as a whole. However, analyzing emotion from an entire image can only recover the dominant emotion it conveys, and ignores the affective differences among regions within the image. This may reduce the performance of emotion recognition and limit the range of possible applications. In this paper, we are the first to propose the concept of an affective map, by which image emotion can be represented at the region level. In an affective map, the value of each pixel represents the probability that the pixel belongs to a certain emotion category. Two popular applications, affective image classification and visual saliency computing, are explored to demonstrate the effectiveness of the proposed affective map. By analyzing image emotion in detail at the region level, the accuracy of affective image classification improves by 5.1% on average, and the Area Under the Curve (AUC) of visual saliency detection improves by 15% on average.
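
The affective-map representation described above can be illustrated with a minimal sketch. It assumes the image has already been segmented into regions and that some classifier has assigned each region a probability distribution over emotion categories; neither step is specified in the abstract. The category names, the `affective_maps` and `dominant_emotion` helpers, and the pixel-averaging rule are hypothetical and are not taken from the paper.

```python
from typing import Dict, List

# Illustrative emotion categories (an assumption, not the paper's label set).
EMOTIONS = ["amusement", "fear", "sadness"]


def affective_maps(
    regions: List[List[int]],
    region_probs: Dict[int, List[float]],
) -> Dict[str, List[List[float]]]:
    """Build one per-pixel probability map per emotion category.

    regions[y][x] is the id of the region that pixel (x, y) belongs to;
    region_probs[id] is that region's distribution over EMOTIONS.
    """
    maps = {}
    for idx, emotion in enumerate(EMOTIONS):
        # Every pixel inherits the probability assigned to its region.
        maps[emotion] = [[region_probs[r][idx] for r in row] for row in regions]
    return maps


def dominant_emotion(maps: Dict[str, List[List[float]]]) -> str:
    """Pick the category whose map has the highest mean over all pixels
    (a simple, assumed aggregation rule for image-level classification)."""
    def mean(grid: List[List[float]]) -> float:
        values = [v for row in grid for v in row]
        return sum(values) / len(values)

    return max(maps, key=lambda e: mean(maps[e]))


# A toy 2x3 "image" with two regions: region 0 on the left, region 1 on the right.
regions = [
    [0, 0, 1],
    [0, 0, 1],
]
region_probs = {
    0: [0.7, 0.2, 0.1],  # mostly "amusement"
    1: [0.1, 0.6, 0.3],  # mostly "fear"
}

maps = affective_maps(regions, region_probs)
label = dominant_emotion(maps)
```

In this toy case the right-hand column of the "fear" map holds a high probability even though the image-level label is driven by the larger left-hand region, which is the kind of regional difference a single whole-image label would hide.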

Cite this article

Rao, T., Xu, M. & Liu, H. Generating affective maps for images. Multimed Tools Appl 77, 17247–17267 (2018). https://doi.org/10.1007/s11042-017-5289-2

DOI: https://doi.org/10.1007/s11042-017-5289-2