Abstract

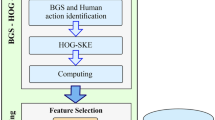

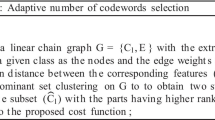

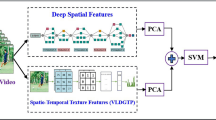

Bag-of-features (BoF) statistical representations built on local space-time features are very popular for human action recognition because of their simplicity. However, large quantization error and weak semantic representation reduce the discriminative ability of the traditional BoF model when it is applied to human action recognition in realistic scenes. To address these problems, we investigate the generalization ability of the BoF framework for action representation, as well as more effective feature encoding of high-level semantics. To this end, we present a two-layer hierarchical codebook learning framework for human action classification in realistic scenes. In the first layer of action modelling, a superpixel GMM model is developed to filter out noisy features in STIP extraction that result from cluttered backgrounds, and a class-specific learning strategy is employed on the refined STIP feature space to construct compact and descriptive in-class action codebooks. In the second layer of action representation, an LDA-Km learning algorithm is proposed for feature dimensionality reduction and for acquiring a more discriminative inter-class action codebook for classification. We take advantage of the hierarchical framework’s representational power and the efficiency of the BoF model to boost recognition performance in realistic scenes. In experiments, the performance of the proposed method is evaluated on four benchmark datasets: KTH, YouTube (UCF11), UCF Sports and Hollywood2. Experimental results show that the proposed approach achieves higher recognition accuracy than the baseline method. Comparisons with state-of-the-art works demonstrate that it is competitive in both recognition performance and time complexity.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable and insightful comments on this manuscript. This work is supported by the National Natural Science Foundation of China (Grant no. 61502182), the Natural Science Foundation of Fujian Province, China (Grant nos. 2017J01110, 2015J01253, 2015J01256, 2015J01258), the Science and Technology Plan Projects in Fujian Province, China (Grant no. 2015H0025), and the Scientific Research Funds of Huaqiao University, China (16BS812).

Cite this article

Lei, Q., Zhang, H., Xin, M. et al. A hierarchical representation for human action recognition in realistic scenes. Multimed Tools Appl 77, 11403–11423 (2018). https://doi.org/10.1007/s11042-018-5626-0

DOI: https://doi.org/10.1007/s11042-018-5626-0