Abstract

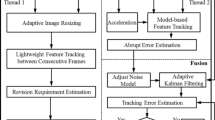

Pose tracking is an important task in Augmented Reality (AR), interactive systems, and robotic systems. Frame-by-frame pose tracking is effective in many cases, but it still struggles in complex environments with occlusions, illumination changes, and flipping. Building on the optimization model of Ye et al. (J Vis Commun Image Represent 44:72–81, 2017), this paper proposes three improvements. First, a feature adjustment strategy based on a group of neighbors is introduced to alleviate sharp drops in the number of features. Second, because the features may no longer represent the scene of interest well, a score model based on a weighted histogram is presented to evaluate the results and realize an adaptive interval. Third, a forward-backward algorithm improves accuracy by replacing the detection method with the tracking method. Experimental results demonstrate the effectiveness of the proposed algorithms.
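The abstract does not spell out the forward-backward algorithm. As a hedged illustration only, the sketch below shows the generic forward-backward error check used in the TLD family of trackers (Kalal et al. 2012): each point is tracked from frame t to t+1 and then back to t, and points whose round trip ends far from where they started are treated as unreliable. This is not presented as the authors' method. The names `forward_backward_filter`, `track_forward` and `track_backward` are hypothetical; the trackers are passed in as plain functions (in practice they could wrap pyramidal Lucas–Kanade optical flow), and the median threshold is an assumption borrowed from Median Flow rather than a detail from the paper.

```python
import math
from statistics import median
from typing import Callable, List, Tuple

Point = Tuple[float, float]
# Hypothetical tracker signature: maps point positions in one frame to the
# estimated positions of the same points in another frame (e.g. via LK flow).
Tracker = Callable[[List[Point]], List[Point]]


def forward_backward_filter(
    points: List[Point], track_forward: Tracker, track_backward: Tracker
) -> Tuple[List[int], List[float]]:
    """Return indices of points passing the forward-backward check, plus all errors.

    Each point is tracked t -> t+1, and the result is tracked back t+1 -> t.
    The forward-backward error is the Euclidean distance between the original
    point and its round-trip position. Points whose error exceeds the median
    are rejected (the median threshold is an assumption, as in Median Flow).
    """
    forward = track_forward(points)
    backward = track_backward(forward)
    errors = [math.hypot(p[0] - b[0], p[1] - b[1]) for p, b in zip(points, backward)]
    if not errors:
        return [], errors
    threshold = median(errors)
    kept = [i for i, e in enumerate(errors) if e <= threshold]
    return kept, errors


if __name__ == "__main__":
    pts = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]

    def shift_right(ps: List[Point]) -> List[Point]:
        # Toy forward tracker: every point moves 2 px to the right.
        return [(x + 2.0, y) for x, y in ps]

    def shift_left_with_drift(ps: List[Point]) -> List[Point]:
        # Toy backward tracker: undoes the motion but drifts 5 px on the last point.
        out = [(x - 2.0, y) for x, y in ps]
        out[-1] = (out[-1][0] + 5.0, out[-1][1])
        return out

    kept, errors = forward_backward_filter(pts, shift_right, shift_left_with_drift)
    print(kept)  # prints [0, 1, 2]
```

Returning indices rather than points lets the caller drop the matching entries from the forward-tracked set before estimating the pose from the surviving correspondences.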

References

Alldieck T, Kassubeck M, Wandt B, Rosenhahn B, Magnor M (2017) Optical flow-based 3D human motion estimation from monocular video. In: German Conference on Pattern Recognition. Springer, pp 347–360

Aly M, Munich M, Perona P Bag of words for large scale object recognition, computational vision lab, Caltech, Pasadena

Bay H, Ess A, Tuytelaars T, Van Gool L (2008) Speeded-up robust features (SURF). Comput Vis Image Underst 110(3):346–359

Bosch A, Zisserman A, Munoz X (2007) Image classification using random forests and ferns. In: 2007 IEEE 11th International Conference on Computer Vision. IEEE, pp 1–8

Brachmann E, Krull A, Michel F, Gumhold S, Shotton J, Rother C (2014) Learning 6D object pose estimation using 3D object coordinates. In: Computer Vision–ECCV 2014. Springer, pp 536–551

Chen W-C, Xiong Y, Gao J, Gelfand N, Grzeszczuk R (2007) Efficient extraction of robust image features on mobile devices. In: Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality. IEEE Computer Society, pp 1–2

Doumanoglou A, Kouskouridas R, Malassiotis S, Kim T-K (2016) Recovering 6D object pose and predicting next-best-view in the crowd. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 3583–3592

Gammeter S, Gassmann A, Bossard L, Quack T, Van Gool L (2010) Server-side object recognition and client-side object tracking for mobile augmented reality. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). IEEE, pp 1–8

Hinterstoisser S, Benhimane S, Navab N, Fua P, Lepetit V (2008) Online learning of patch perspective rectification for efficient object detection. In: 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2008). IEEE, pp 1–8

Hong Z, Chen Z, Wang C, Mei X, Prokhorov D, Tao D (2015) Multi-store tracker (MUSTer): A cognitive psychology inspired approach to object tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 749–758

Kalal Z, Mikolajczyk K, Matas J (2012) Tracking-learning-detection. IEEE Trans Pattern Anal Mach Intell 34(7):1409–1422

Kalman RE, Bucy RS (1961) New results in linear filtering and prediction theory. J Basic Eng 83(1):95–108

Kalman RE (1960) A new approach to linear filtering and prediction problems. J Basic Eng 82(1):35–45

Ke Y, Sukthankar R (2004) PCA-SIFT: A more distinctive representation for local image descriptors. In: Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), vol 2. IEEE, pp II–506

Koyama J, Makar M, Araujo AF, Girod B (2014) Interframe compression with selective update framework of local features for mobile augmented reality. In: 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW). IEEE, pp 1–6

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Lucas BD, Kanade T (1981) An iterative image registration technique with an application to stereo vision. In: IJCAI, vol 81, pp 674–679

Makar M, Tsai SS, Chandrasekhar V, Chen D, Girod B (2013) Interframe coding of canonical patches for low bit-rate mobile augmented reality. Int J Semantic Comput 7(01):5–24

Mikolajczyk K, Schmid C (2005) A performance evaluation of local descriptors. IEEE Trans Pattern Anal Mach Intell 27(10):1615–1630. http://lear.inrialpes.fr/pubs/2005/MS05

Mooser J, You S, Neumann U (2007) Real-time object tracking for augmented reality combining graph cuts and optical flow. In: Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality. IEEE Computer Society, pp 1–8

Mooser J, Wang Q, You S, Neumann U (2008) Fast simultaneous tracking and recognition using incremental keypoint matching. In: International Symposium on 3D Data Processing, Visualization and Transmission

Nair BM, Kendricks KD, Asari VK, Tuttle RF (2014) Optical flow based Kalman filter for body joint prediction and tracking using HOG-LBP matching. In: Video Surveillance and Transportation Imaging Applications 2014, vol 9026. International Society for Optics and Photonics, p 90260H

Özuysal M, Calonder M, Lepetit V, Fua P (2010) Fast keypoint recognition using random ferns. IEEE Trans Pattern Anal Mach Intell 32(3):448–461

Pauwels K, Rubio L, Diaz J, Ros E (2013) Real-time model-based rigid object pose estimation and tracking combining dense and sparse visual cues. In: 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, pp 2347–2354

Pauwels K, Rubio L, Ros E (2014) Real-time model-based articulated object pose detection and tracking with variable rigidity constraints. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, pp 3994–4001

Qu X, Zhao F, Zhou M, Huo H (2014) A novel fast and robust binary affine invariant descriptor for image matching. Mathematical Problems in Engineering

Rublee E, Rabaud V, Konolige K, Bradski G (2011) ORB: An efficient alternative to SIFT or SURF. In: 2011 IEEE International Conference on Computer Vision (ICCV). IEEE, pp 2564–2571

Simon G, Fitzgibbon AW, Zisserman A (2002) Markerless tracking using planar structures in the scene. In: IEEE and ACM International Symposium on Augmented Reality, pp 120–128

Skrypnyk I, Lowe DG (2004) Scene modelling, recognition and tracking with invariant image features. In: Third IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR 2004). IEEE, pp 110–119

Ta D-N, Chen W-C, Gelfand N, Pulli K (2009) SURFTrac: Efficient tracking and continuous object recognition using local feature descriptors. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009). IEEE, pp 2937–2944

Takacs G, Chandrasekhar V, Tsai S, Chen D, Grzeszczuk R, Girod B (2010) Unified real-time tracking and recognition with rotation-invariant fast features. In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, pp 934–941

Tejani A, Tang D, Kouskouridas R, Kim T-K (2014) Latent-class Hough forests for 3D object detection and pose estimation. In: Computer Vision–ECCV 2014. Springer, pp 462–477

Thachasongtham D, Yoshida T, de Sorbier F, Saito H (2013) 3D object pose estimation using viewpoint generative learning. In: Image Analysis. Springer, pp 512–521

Ufkes A, Fiala M (2013) A markerless augmented reality system for mobile devices. In: 2013 International Conference on Computer and Robot Vision (CRV). IEEE, pp 226–233

Wagner D, Reitmayr G, Mulloni A, Drummond T, Schmalstieg D (2008) Pose tracking from natural features on mobile phones. In: Proceedings of the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality. IEEE Computer Society, pp 125–134

Wohlhart P, Lepetit V (2015) Learning descriptors for object recognition and 3D pose estimation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 3109–3118

Ye S, Liu C, Li Z, Al-Ahmari A (2017) Iterative optimization for frame-by-frame object pose tracking. J Vis Commun Image Represent 44:72–81

Zach C, Penate-Sanchez A, Pham M-T (2015) A dynamic programming approach for fast and robust object pose recognition from range images. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 196–203

Acknowledgements

This work was supported by the National Natural Science Foundation of China [grant numbers 61373063, 61373062, 61473155]; the project of the Ministry of Industry and Information Technology of China [grant number E0310/1112/02-1]; the Research Award Fund for Young Teachers of the Education Department of Fujian Province [grant number JAT170037]; the Natural Science Foundation Project of Fujian Province [grant number 2017J01110]; the Science and Technology Planning Project of Fujian Province [grant number 2018H01010060]; and the Science and Technology Planning Project of Quanzhou City, Fujian Province [grant number 2017T003].

About this article

Cite this article

Ye, S., Liu, C., Li, Z. et al. Improved frame-by-frame object pose tracking in complex environments. Multimed Tools Appl 77, 24983–25004 (2018). https://doi.org/10.1007/s11042-018-5736-8

DOI: https://doi.org/10.1007/s11042-018-5736-8