Abstract

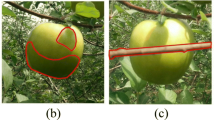

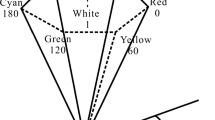

Accurate segmentation of apple fruit under natural illumination helps growers plan the application of nutrients and pesticides, and it plays an important role in monitoring the growth status of the fruit. However, segmentation of apples across growth stages has achieved only limited success so far, owing to the color changes of the fruit as it matures, occlusion, and the non-uniform background of images acquired in an orchard environment. To segment apples of different colors under various illumination conditions throughout the whole growth period, a color-independent segmentation method was investigated. The algorithm combines two image features, saliency and contour, to remove the background and extract apples. The saliency using natural statistics (SUN) visual attention model was used for background removal and was combined with a threshold segmentation algorithm to extract the salient binary regions of apple images. The centroids of these regions were then extracted as initial seed points. Image sharpening, the globalized probability of boundary-oriented watershed transform-ultrametric contour map (gPb-OWT-UCM) algorithm and the Otsu algorithm were applied to detect the saliency contours of the images. With the seed points and the extracted saliency contours, a region growing algorithm was performed to segment apples accurately, retaining as many fruit pixels and removing as many background pixels as possible. A total of 556 apple images captured under natural conditions were used to evaluate the effectiveness of the proposed method. An average segmentation error (SE), false positive rate (FPR), false negative rate (FNR) and overlap index (OI) of 8.4%, 0.8%, 7.5% and 90.5%, respectively, were achieved, and the proposed method outperformed the six other methods used for comparison.
The method developed in this study provides a more effective way to segment green, red, and partially red apples without changing any features or parameters, and it is therefore also applicable to monitoring the growth status of apples.
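The seed-and-grow stage described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: it does not compute SUN saliency or gPb-OWT-UCM contours, and instead assumes a precomputed saliency map and a precomputed boolean contour mask. The function names (`connected_components`, `seed_points`, `region_grow`), the fixed `threshold` argument, and the choice of 4-connectivity are all hypothetical choices made for illustration.

```python
from collections import deque

import numpy as np

# 4-connected neighbourhood (an assumption; the abstract does not specify connectivity)
NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def connected_components(mask):
    """Label the 4-connected foreground regions of a boolean mask using BFS."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # pixel already belongs to a labelled region
        count += 1
        labels[start] = count
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < mask.shape[0] and 0 <= nc < mask.shape[1]
                if inside and mask[nr, nc] and not labels[nr, nc]:
                    labels[nr, nc] = count
                    queue.append((nr, nc))
    return labels, count


def seed_points(saliency, threshold):
    """Threshold a saliency map and return one centroid per salient region."""
    labels, count = connected_components(saliency > threshold)
    seeds = []
    for k in range(1, count + 1):
        rows, cols = np.nonzero(labels == k)
        seeds.append((int(round(rows.mean())), int(round(cols.mean()))))
    return seeds


def region_grow(seeds, contour):
    """Grow outward from the seeds, never stepping onto a contour pixel."""
    grown = np.zeros(contour.shape, dtype=bool)
    queue = deque()
    for seed in seeds:
        if not contour[seed] and not grown[seed]:
            grown[seed] = True
            queue.append(seed)
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < contour.shape[0] and 0 <= nc < contour.shape[1]
            if inside and not contour[nr, nc] and not grown[nr, nc]:
                grown[nr, nc] = True
                queue.append((nr, nc))
    return grown
```

Calling `region_grow(seed_points(saliency, threshold), contour)` returns a boolean mask of the pixels reached from the seeds. In this simplified form the growth criterion is purely geometric, so a gap in the contour lets the region leak into the background, and the centroid of a non-convex salient region may fall outside that region.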

Acknowledgments

We would like to thank all the anonymous reviewers for their kind and helpful comments and suggestions on the original manuscript. We are also grateful to Changying Li for his assistance with manuscript revisions and proofreading.

Funding

This work was supported by the National High Technology Research and Development Program of China (863 Program) (SS2013AA100304).

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Wang, D., He, D., Song, H. et al. Combining SUN-based visual attention model and saliency contour detection algorithm for apple image segmentation. Multimed Tools Appl 78, 17391–17411 (2019). https://doi.org/10.1007/s11042-018-7106-y

DOI: https://doi.org/10.1007/s11042-018-7106-y