Abstract

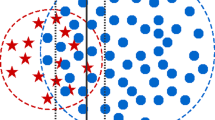

Imbalanced learning has become a research focus in recent years because of the growing number of class-imbalanced classification problems in real applications. It is particularly challenging when the imbalance ratio is very high. Sampling, including under-sampling and over-sampling, is an intuitive and popular way of dealing with class imbalance: it resamples the original dataset and has proved effective. Its main deficiency is that under-sampling methods usually discard many majority-class examples, while over-sampling methods can easily cause over-fitting. In this paper, we propose a new algorithm, dubbed KA-Ensemble, that combines under-sampling and over-sampling to overcome this issue. KA-Ensemble builds on the EasyEnsemble framework: it randomly under-samples the majority class and, at the same time, over-samples the minority class via kernel-based adaptive synthetic sampling (Kernel-ADASYN), yielding a group of balanced datasets. A separate classifier is trained on each balanced dataset, and the final result is decided by a vote among all trained classifiers. By combining under-sampling and over-sampling in this way, KA-Ensemble is well suited to class-imbalance problems with a large imbalance ratio. We evaluated the proposed method against state-of-the-art sampling methods on 9 image classification datasets with imbalance ratios ranging from less than 2 to more than 15, and the experimental results show that KA-Ensemble performs better in terms of accuracy (ACC), F-Measure, G-Mean, and area under the curve (AUC). Moreover, it can be used for both binary and multi-class classification, on image classification as well as other class-imbalance problems.
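The pipeline summarized above (random under-sampling of the majority class, adaptive kernel-based over-sampling of the minority class, one classifier per balanced subset, then a vote) can be illustrated with a minimal sketch. This is not the authors' implementation. The over-sampler is a simplified stand-in for Kernel-ADASYN that draws Gaussian-perturbed copies of minority points, weighted by how many majority points lie among their nearest neighbours. The nearest-centroid base classifier, the function names, and the parameters `n_models`, `target`, `bandwidth` and `k` are all assumptions made for illustration.

```python
import math
import random
from collections import Counter


def adaptive_weights(minority, majority, k=5):
    """ADASYN-style weights: share of majority points among each
    minority point's k nearest neighbours, normalised to sum to 1."""
    weights = []
    for x in minority:
        others = [(math.dist(x, m), 0) for m in minority if m is not x]
        others += [(math.dist(x, m), 1) for m in majority]
        nearest = sorted(others)[:k]
        weights.append(sum(flag for _, flag in nearest) / max(len(nearest), 1))
    total = sum(weights)
    if total == 0:  # no minority point borders the majority: sample uniformly
        return [1 / len(minority)] * len(minority)
    return [w / total for w in weights]


def oversample(minority, majority, n_new, bandwidth, rng, k=5):
    """Simplified stand-in for Kernel-ADASYN (an assumption, not the
    published method): pick seed points by adaptive weight and add
    Gaussian noise of standard deviation `bandwidth` to each feature."""
    if n_new <= 0:
        return []
    weights = adaptive_weights(minority, majority, k)
    seeds = rng.choices(minority, weights=weights, k=n_new)
    return [[rng.gauss(v, bandwidth) for v in s] for s in seeds]


def centroid(points):
    return [sum(col) / len(points) for col in zip(*points)]


def train_ensemble(minority, majority, n_models=5, target=None,
                   bandwidth=0.3, seed=0):
    """Build n_models balanced subsets and fit one nearest-centroid
    model (hypothetical base learner) on each."""
    rng = random.Random(seed)
    # Assumed subset size: twice the minority size, capped by the majority size.
    target = min(target or 2 * len(minority), len(majority))
    models = []
    for _ in range(n_models):
        maj_subset = rng.sample(majority, target)  # random under-sampling
        synthetic = oversample(minority, majority,
                               target - len(minority), bandwidth, rng)
        models.append((centroid(minority + synthetic), centroid(maj_subset)))
    return models


def predict(models, x):
    """Majority vote over all models; 1 = minority class, 0 = majority."""
    votes = Counter(
        1 if math.dist(x, c_min) < math.dist(x, c_maj) else 0
        for c_min, c_maj in models
    )
    return votes.most_common(1)[0][0]


# Toy data with an imbalance ratio of 20 (200 majority vs 10 minority points).
demo_rng = random.Random(1)
majority = [[demo_rng.gauss(0, 1), demo_rng.gauss(0, 1)] for _ in range(200)]
minority = [[demo_rng.gauss(4, 1), demo_rng.gauss(4, 1)] for _ in range(10)]
models = train_ensemble(minority, majority)
print(predict(models, [4.0, 4.0]), predict(models, [0.0, 0.0]))
```

Using an odd `n_models` avoids tied votes in the binary case. Each subset sees a different random slice of the majority class, so the ensemble as a whole uses more majority examples than any single under-sampled classifier would.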

Additional information

This work was supported in part by the National Natural Science Foundation of China under Grants 61771440, 41776113 and 41674037, in part by the Qingdao Municipal Science and Technology Program under Grant 17-1-1-5-jch, in part by the China Scholarship Council under Grant 201806335022, and in part by the Foundation of Shandong provincial Key Laboratory of Digital Medicine and Computer Assisted Surgery under Grant SDKL-DMCAS-2018-07.

Cite this article

Ding, H., Wei, B., Gu, Z. et al. KA-Ensemble: towards imbalanced image classification ensembling under-sampling and over-sampling. Multimed Tools Appl 79, 14871–14888 (2020). https://doi.org/10.1007/s11042-019-07856-y

DOI: https://doi.org/10.1007/s11042-019-07856-y