Abstract

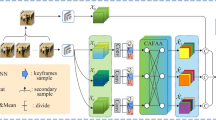

With the large number of micro-videos available in social network applications, the venue category of a micro-video provides valuable venue information that supports location-oriented applications, personalized services, and similar uses. In this paper, we formulate micro-video venue classification as a multi-modal sequential modeling problem. Unlike existing approaches that use long short-term memory (LSTM) models to capture temporal patterns in micro-videos, we propose a multi-modal sequence model with gated fully convolutional blocks. Specifically, we first adopt three parallel gated fully convolutional blocks to extract spatiotemporal features from the visual, acoustic, and textual modalities of micro-videos. Then, an additional gated fully convolutional block fuses the spatiotemporal features of the three modalities. Finally, corresponding prototypes are learned simultaneously to improve robustness relative to the softmax classification function. Extensive experiments on a real-world benchmark dataset demonstrate the effectiveness of our model in terms of both Micro-F and Macro-F scores.
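The pipeline described in the abstract can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the abstract does not specify the gating form, kernel size, fusion input, pooling, or prototype distance. The sketch assumes GLU-style gating (a convolution multiplied elementwise by the sigmoid of a second convolution), a kernel of width 3 with zero padding, channel-wise concatenation before the fusion block, mean pooling over time, and nearest-prototype classification by squared Euclidean distance. All names (`conv1d`, `gated_conv_block`, `init_params`, `classify`) and all dimensions are hypothetical, and the weights are random rather than trained.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def conv1d(seq, weights, bias):
    """Zero-padded 1-D convolution over time (odd kernel width assumed).

    seq: T x C_in nested list; weights: K x C_in x C_out; bias: C_out.
    Returns a T x C_out nested list.
    """
    k, c_in, c_out = len(weights), len(seq[0]), len(bias)
    pad = k // 2
    zeros = [0.0] * c_in
    padded = [zeros] * pad + seq + [zeros] * pad
    out = []
    for t in range(len(seq)):
        row = list(bias)
        for dk in range(k):
            frame = padded[t + dk]
            for i in range(c_in):
                for o in range(c_out):
                    row[o] += frame[i] * weights[dk][i][o]
        out.append(row)
    return out


def gated_conv_block(seq, params):
    """Assumed GLU-style gate: conv(seq) * sigmoid(conv_gate(seq))."""
    linear = conv1d(seq, params["w"], params["b"])
    gate = conv1d(seq, params["v"], params["c"])
    return [[a * sigmoid(g) for a, g in zip(row_a, row_g)]
            for row_a, row_g in zip(linear, gate)]


def init_params(rng, k, c_in, c_out):
    """Random (untrained) weights, for illustration only."""
    def tensor():
        return [[[rng.uniform(-0.5, 0.5) for _ in range(c_out)]
                 for _ in range(c_in)] for _ in range(k)]
    return {"w": tensor(), "b": [0.0] * c_out,
            "v": tensor(), "c": [0.0] * c_out}


def classify(visual, acoustic, textual, branch_params, fusion_params, prototypes):
    # One gated convolutional block per modality, applied in parallel.
    feats = [gated_conv_block(x, p)
             for x, p in zip((visual, acoustic, textual), branch_params)]
    # Assumed fusion input: concatenate the three feature vectors per time step.
    fused_in = [v + a + t for v, a, t in zip(*feats)]
    fused = gated_conv_block(fused_in, fusion_params)
    # Mean-pool over time to obtain one embedding per micro-video.
    steps = len(fused)
    embedding = [sum(col) / steps for col in zip(*fused)]
    # Nearest prototype (squared Euclidean distance) gives the venue label.
    dists = [sum((e - p) ** 2 for e, p in zip(embedding, proto))
             for proto in prototypes]
    return dists.index(min(dists)), embedding


rng = random.Random(0)
T, dims, hidden, n_classes = 6, (4, 3, 2), 5, 3  # hypothetical sizes
inputs = [[[rng.uniform(-1, 1) for _ in range(d)] for _ in range(T)]
          for d in dims]
branch_params = [init_params(rng, 3, d, hidden) for d in dims]
fusion_params = init_params(rng, 3, 3 * hidden, hidden)
prototypes = [[rng.uniform(-1, 1) for _ in range(hidden)]
              for _ in range(n_classes)]
label, embedding = classify(*inputs, branch_params, fusion_params, prototypes)
```

In a trained model the block weights and the prototypes would be optimized jointly; here they only demonstrate how data flows from the three modality sequences to a single venue label.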

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61401408, 61772539), and the Fundamental Research Funds for the Central Universities (CUC2019B021).

Cite this article

Liu, W., Huang, X., Cao, G. et al. Multi-modal sequence model with gated fully convolutional blocks for micro-video venue classification. Multimed Tools Appl 79, 6709–6726 (2020). https://doi.org/10.1007/s11042-019-08147-2

DOI: https://doi.org/10.1007/s11042-019-08147-2