Abstract

Speaker verification recognizes a speaker from his or her voice characteristics by extracting features from the speech signal. Text-independent speaker verification verifies the speaker's identity without restricting the speech content. Long utterances are normally used for verification, but they contain many silences, which adds complexity and disruption. We therefore propose a speaker verification method based on short-utterance data. The main objective of this work is to extract, characterize, and recognize information about speaker identity. The proposed method has four stages: 1) utterance partitioning, 2) feature extraction, 3) feature selection, and 4) classification. Utterance partitioning splits the full-length speech into numerous short utterances before the pre-processing stage. In the feature extraction phase, a pre-emphasis filter removes noise during pre-processing, and the Mel Advanced Hilbert-Huang Cepstral Coefficients (MAHCC) technique extracts features from the input speech signal. Feature selection uses a Crow Search Algorithm (CSA) to rank the feature set and obtain the optimal features for classification. In the classification stage, a Deep Hidden Markov Model (DHMM) with discriminative pre-training classifies the features for speaker verification. The implementation results show that the proposed method classifies accurately and performs better than existing methods.
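The first two steps named in the abstract, utterance partitioning and pre-emphasis, can be illustrated with a minimal sketch. The abstract does not give the segment length, the overlap between segments, or the filter coefficient, so `segment_len`, `hop`, and `alpha = 0.97` below are assumptions chosen for illustration, and the function names are hypothetical. The filter shown is the common first-order form y[n] = x[n] - alpha * x[n-1]; the paper's exact pre-processing may differ.

```python
from typing import List, Sequence


def partition_utterance(samples: Sequence[float], segment_len: int, hop: int) -> List[List[float]]:
    """Split one long utterance into fixed-length short segments.

    segment_len and hop are hypothetical parameters measured in samples.
    A trailing remainder shorter than segment_len is dropped.
    """
    if segment_len <= 0 or hop <= 0:
        raise ValueError("segment_len and hop must be positive")
    return [
        list(samples[start:start + segment_len])
        for start in range(0, len(samples) - segment_len + 1, hop)
    ]


def pre_emphasis(samples: Sequence[float], alpha: float = 0.97) -> List[float]:
    """First-order pre-emphasis filter: y[n] = x[n] - alpha * x[n-1].

    alpha = 0.97 is a commonly used value, assumed here; the first sample
    is passed through unchanged.
    """
    if not samples:
        return []
    out = [samples[0]]
    for n in range(1, len(samples)):
        out.append(samples[n] - alpha * samples[n - 1])
    return out


if __name__ == "__main__":
    # Synthetic stand-in for a speech signal (40 samples).
    signal = [float(i % 8) for i in range(40)]
    segments = partition_utterance(signal, segment_len=16, hop=8)
    filtered = [pre_emphasis(seg) for seg in segments]
    print(len(segments), len(filtered[0]))
```

Following the order stated in the abstract, partitioning runs first and each short segment is then filtered on its own. In a real system the segment length would be set in seconds and converted to samples using the sampling rate.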

Similar content being viewed by others

Explore related subjects

Discover the latest articles and news from researchers in related subjects, suggested using machine learning.References

Al-Ali AKH, Dean D, Senadji B, Chandran V, Naik G (2017) Enhanced forensic speaker verification using a combination of DWT and MFCC feature warping in the presence of noise and reverberation conditions. IEEE Access

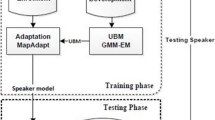

Chowdhury MFR, Selouani SA, O'Shaughnessy D (2010) Text-independent distributed speaker identification and verification using GMM-UBM speaker models for mobile communications. In Information sciences signal processing and their applications (ISSPA), 2010 10th international conference on (pp. 57–60). IEEE

Dehak KN, Dehak PJ, Dumouchel R, Ouellet P (2011) Front-end factor analysis for speaker verification. IEEE Trans Audio Speech Lang Process 19(4):788–798

Deng S, Huang L, Taheri J, Yin J, Zhou M, Zomaya AY (2017) Mobility-aware service composition in mobile communities. IEEE Transactions on Systems, Man, and Cybernetics: Systems 47(3):555–568

Furui S (1981) Comparison of speaker recognition methods using statistical features and dynamic features. IEEE Trans Acoust Speech Signal Process 29(3):342–350

Furui S (1986) Speaker-independent isolated word recognition using dynamic features of speech spectrum. IEEE Trans Acoust Speech Signal Process 34(1):52–59

Hong C, Yu J, Tao D, Wang M (2014) Image-based three-dimensional human pose recovery by multiview locality-sensitive sparse retrieval. IEEE Trans Ind Electron 62(6):3742–3751

Hong C, Yu J, Wan J, Tao D, Wang M (2015) Multimodal deep autoencoder for human pose recovery. IEEE Trans Image Process 24(12):5659–5670

Hori T, Chen Z, Erdogan H, Hershey JR, Le Roux J, Mitra V, Watanabe S (2017) Multi-microphone speech recognition integrating beamforming, robust feature extraction, and advanced DNN/RNN backend. Comput Speech Lang 46:401–418

Khodabakhsh A, Mohammadi A, Demiroglu C (2017) Spoofing voice verification systems with statistical speech synthesis using limited adaptation data. Comput Speech Lang 42:20–37

Kounoudes A, Kekatos V, Mavromoustakos S (2006) Voice biometric authentication for enhancing internet service security. In Information and communication technologies, 2006. ICTTA'06. 2nd (Vol. 1, pp. 1020–1025). IEEE

Krothapalli SR, Koolagudi SG (2013) Characterization and recognition of emotions from speech using excitation source information. International journal of speech technology 16(2):181–201

Kua JMK, Epps J, Ambikairajah E (2013) I-vector with sparse representation classification for speaker verification. Speech Comm 55(5):707–720

Larcher A, Lee KA, Ma B, Li H (2014) Text-dependent speaker verification: classifiers, databases and RSR2015. Speech Comm 60:56–77

Lei Y, Scheffer N, Ferrer L, McLaren M (2014) A novel scheme for speaker recognition using a phonetically-aware deep neural network. In 2014 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 1695–1699). IEEE

Li L, Zhao Y, Jiang D, Zhang Y, Wang F, Gonzalez I, Sahli H (2013) Hybrid Deep Neural Network--Hidden Markov Model (DNN-HMM) Based Speech Emotion Recognition. In 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction (pp. 312–317). IEEE

Liu Z, Wu Z, Li T, Li J, Shen C (2018) GMM and CNN hybrid method for short utterance speaker recognition. IEEE Transactions on Industrial Informatics 14(7):3244–3252

Ma J, Sethu V, Ambikairajah E, Lee KA (2018) Generalized variability model for speaker verification. IEEE Signal Processing Letters 25(12):1775–1779

Misra A, Hansen JH (2018) Maximum-likelihood linear transformation for unsupervised domain adaptation in speaker verification. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP) 26(9):1549–1558

Narendra NP, Airaksinen M, Story B, Alku P (2019) Estimation of the glottal source from coded telephone speech using deep neural networks. Speech Comm 106:95–104

Ozaydin S (2017) Design of a text independent speaker recognition system. In 2017 international conference on electrical and computing technologies and applications (ICECTA) (pp. 1–5). IEEE

Rahulamathavan Y, Sutharsini KR, Ray IG, Lu R, Rajarajan M (2019) Privacy-preserving iVector-based speaker verification. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 27(3), 496–506

Raitio T, Suni A, Vainio M, Alku P (2014) Synthesis and perception of breathy, normal, and lombard speech in the presence of noise. Comput Speech Lang 28(2):648–664

Sarkar S, Rao KS (2014) Stochastic feature compensation methods for speaker verification in noisy environments. Appl Soft Comput 19:198–214

Shankar S, Udupi VR (2016) Recognition of faces–an optimized algorithmic chain. Procedia Computer Science 89:597–606

Shifani HJM, Kannan P (2017) Design and analysis of sub-band coding of speech signal under Noisy condition using. Multi rate Signal Processing 4(4):1046–1065

Sigtia S, Stark AM, Krstulović S, Plumbley MD (2016) Automatic environmental sound recognition: performance versus computational cost. IEEE/ACM Transactions on Audio, Speech, and Language Processing 24(11):2096–2107

Soong FK, Rosenberg AE, Juang BH, Rabiner LR (1987) Report: a vector quantization approach to speaker recognition. AT&T technical journal 66(2):14–26

Sreekumar KT, George KK, Arunraj K, Kumar CS (2014). Spectral matching based voice activity detector for improved speaker recognition. In international conference on power signals control and computations (EPSCICON). IEEE (pp. 1–4).

Sun L, Xie K, Gu T, Chen J, Yang Z (2019) Joint dictionary learning using a new optimization method for single-channel blind source separation. Speech Comm 106:85–94

Tan Z, Mak MW, Mak BKW (2018) DNN-based score calibration with multitask learning for noise robust speaker verification. IEEE/ACM Transactions on Audio, Speech, and Language Processing 26(4):700–712

Tan Z, Mak MW, Mak BKW, Zhu Y (2018) Denoised senone i-vectors for robust speaker verification. IEEE/ACM Transactions on Audio, Speech, and Language Processing 26(4):820–830

Yao Q, Mak MW (2018) SNR-invariant multitask deep neural networks for robust speaker verification. IEEE Signal Processing Letters 25(11):1670–1674

Yao S, Zhou R, Zhang P, Yan Y (2018) Discriminatively learned network for i-vector based speaker recognition. Electron Lett 54(22):1302–1304

Yu J, Rui Y, Tao D (2014) Click prediction for web image reranking using multimodal sparse coding. IEEE Trans Image Process 23(5):2019–2032

Yu J, Tao D, Wang M, Rui Y (2014) Learning to rank using user clicks and visual features for image retrieval. IEEE transactions on cybernetics 45(4):767–779

Yu J, Yang X, Gao F, Tao D (2016) Deep multimodal distance metric learning using click constraints for image ranking. IEEE transactions on cybernetics 47(12):4014–4024

Yu H, Tan ZH, Ma Z, Martin R, Guo J (2017) Spoofing detection in automatic speaker verification systems using DNN classifiers and dynamic acoustic features. IEEE transactions on neural networks and learning systems:99), 1–99),12

Zhang C, Koishida K, Hansen JH (2018) Text-independent speaker verification based on triplet convolutional neural network embeddings. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP) 26(9):1633–1644

Cite this article

Arora, S.V., Vig, R. An efficient text-independent speaker verification for short utterance data from Mobile devices. Multimed Tools Appl 79, 3049–3074 (2020). https://doi.org/10.1007/s11042-019-08196-7

DOI: https://doi.org/10.1007/s11042-019-08196-7