Abstract

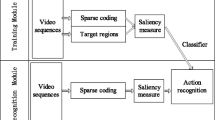

Dense tracking has proven successful in action recognition, but it can produce a large number of features in the background that are largely irrelevant to the action and may hurt recognition performance. To obtain action-relevant features, this paper proposes a three-stage saliency detection technique that recovers action-relevant regions. In the first stage, low-rank matrix recovery is used to decompose the overall motion of each sub-video (a temporal segment of the video) into a low-rank part and a sparse part; the sparse part is used to compute an initial saliency that separates candidate foreground from definite background. In the second stage, a dictionary is formed from the patches in the definite background, and the sparse representation of each candidate-foreground patch over this dictionary is computed from motion and appearance information to obtain a refined saliency, so that action-relevant regions are distinguished more clearly from the background. In the third stage, the saliency is spatially updated based on motion and appearance similarity, which increases the spatial coherence of the saliency and better highlights action-relevant regions. Finally, the updated saliency is compared with a given threshold to create a binary saliency map indicating the action-relevant regions; this map is fused into dense tracking to extract action-relevant trajectory features from a video for action recognition. Experimental results on four benchmark datasets show that the proposed method outperforms conventional dense tracking and is competitive with its improved versions.
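The first stage and the final thresholding step can be illustrated with a minimal sketch. It rests on several assumptions that the abstract does not confirm: the motion matrix holds one column per patch (stacked per-frame displacements), the decomposition is solved with an inexact augmented Lagrange multiplier scheme, the initial saliency is the column-wise norm of the sparse part, and the threshold of 0.3 is arbitrary. All function names and the toy data are hypothetical, and the second and third stages are not shown.

```python
import numpy as np

def soft_threshold(X, tau):
    """Elementwise shrinkage: proximal operator of tau * ||.||_1."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

def rpca_inexact_alm(M, lam=None, tol=1e-7, max_iter=500):
    """Split M into low-rank L and sparse S by minimising
    ||L||_* + lam * ||S||_1 subject to L + S = M."""
    m, n = M.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))      # common default weight
    norm_fro = np.linalg.norm(M, "fro")
    norm_two = np.linalg.norm(M, 2)
    Y = M / max(norm_two, np.abs(M).max() / lam)   # dual variable
    mu, rho = 1.25 / norm_two, 1.5
    mu_max = mu * 1e7
    L = np.zeros_like(M)
    S = np.zeros_like(M)
    for _ in range(max_iter):
        # Low-rank update: singular value thresholding.
        U, s, Vt = np.linalg.svd(M - S + Y / mu, full_matrices=False)
        L = (U * soft_threshold(s, 1.0 / mu)) @ Vt
        # Sparse update: elementwise shrinkage.
        S = soft_threshold(M - L + Y / mu, lam / mu)
        residual = M - L - S
        Y = Y + mu * residual
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(residual, "fro") / norm_fro < tol:
            break
    return L, S

def initial_saliency(S):
    """Assumed saliency: column-wise L2 norm of S, scaled to [0, 1]."""
    energy = np.linalg.norm(S, axis=0)
    peak = energy.max()
    return energy / peak if peak > 0 else energy

def make_toy_motion(n_frames=10, n_patches=100,
                    fg_idx=(7, 42, 63, 88), seed=0):
    """Toy sub-video: a shared camera pan plus a few moving patches."""
    rng = np.random.default_rng(seed)
    cam = np.tile([1.0, 0.5], n_frames)           # (dx, dy) per frame
    scale = rng.uniform(0.8, 1.2, n_patches)      # per-patch pan strength
    M = np.outer(cam, scale)                      # rank-1 background motion
    M[:, list(fg_idx)] += rng.choice([-3.0, 3.0],
                                     size=(2 * n_frames, len(fg_idx)))
    return M

M = make_toy_motion()
L, S = rpca_inexact_alm(M)
saliency = initial_saliency(S)
mask = saliency > 0.3          # hypothetical threshold, not from the paper
print(np.flatnonzero(mask))    # indices of patches flagged as foreground
```

In this toy setting the shared camera pan ends up in the low-rank part, while the independently moving patches carry most of the energy in the sparse part, which is what lets a simple threshold separate candidate foreground from background.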

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (Grant No. 61572395) and the Project of Shandong Province Higher Educational Science and Technology Program (Grant No. J18KA345).

Cite this article

Wang, X., Qi, C. Detecting action-relevant regions for action recognition using a three-stage saliency detection technique. Multimed Tools Appl 79, 7413–7433 (2020). https://doi.org/10.1007/s11042-019-08535-8
