Abstract

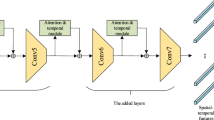

Human action recognition, an important problem in video classification, has become an active topic in computer vision. A central challenge is how to represent both the static spatial content and the temporal dynamics of a video effectively. This paper proposes an attention-mechanism-based convolutional LSTM algorithm that improves recognition accuracy by effectively extracting the salient regions of actions in videos. First, GoogleNet is used to extract features from the video frames. Then, the resulting feature maps are processed by a spatial transformer network, which provides the attention. Finally, the sequential information in the features is modeled by the convolutional LSTM to classify the action in the original video. To speed up training, we use temporal coherence analysis to reduce the redundant features extracted by GoogleNet, with trivial loss of accuracy. Compared with state-of-the-art algorithms for video action recognition, the method achieves competitive results on three widely used datasets: UCF-11, HMDB-51 and UCF-101. Moreover, with temporal coherence analysis, desirable results are obtained while the training time is reduced.
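The idea of pruning redundant frame features through temporal coherence can be illustrated with a minimal sketch. The abstract does not say how coherence is measured, so everything below is an assumption made for illustration: cosine similarity as the coherence measure, the 0.95 threshold, the rule of comparing each frame with the most recently kept frame, and the hypothetical helper names `cosine_similarity` and `select_non_redundant`. It is not the authors' procedure.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_non_redundant(features: List[Sequence[float]],
                         threshold: float = 0.95) -> List[int]:
    """Return the indices of the frames to keep.

    Assumed rule (not taken from the paper): a frame is kept when its
    similarity to the most recently kept frame is below `threshold`.
    The first frame is always kept.
    """
    if not features:
        return []
    kept = [0]
    for i in range(1, len(features)):
        if cosine_similarity(features[kept[-1]], features[i]) < threshold:
            kept.append(i)
    return kept


# Toy per-frame feature vectors, hypothetical stand-ins for CNN features.
frames = [
    [1.0, 0.0, 0.0],
    [0.99, 0.01, 0.0],  # nearly identical to frame 0, so it is dropped
    [0.0, 1.0, 0.0],    # new content, kept
    [0.0, 0.98, 0.02],  # nearly identical to frame 2, so it is dropped
    [0.0, 0.0, 1.0],    # new content, kept
]
kept_indices = select_non_redundant(frames, threshold=0.95)
print(kept_indices)  # [0, 2, 4]
```

In this toy case only three of the five frames would be passed on to the later stages, which is the kind of saving in training cost the abstract refers to. A lower threshold drops more frames and trades accuracy for speed.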

Acknowledgements

The authors are grateful for the support of the National Natural Science Foundation of China (61572104, 61103146, 61402076) and the Fundamental Research Funds for the Central Universities (DUT17JC04).

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Ge, H., Yan, Z., Yu, W. et al. An attention mechanism based convolutional LSTM network for video action recognition. Multimed Tools Appl 78, 20533–20556 (2019). https://doi.org/10.1007/s11042-019-7404-z

DOI: https://doi.org/10.1007/s11042-019-7404-z