Abstract

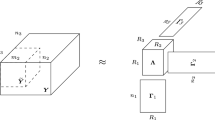

Tensor decomposition methods have been widely applied to big data analysis because they bring multiple modes and aspects of data into a unified framework, which makes it possible to discover complex internal structures and correlations in the data. Unfortunately, most existing approaches are not designed to meet the challenges posed by big data. This paper attempts to improve the scalability of tensor decompositions and makes two contributions: (1) a flexible and fast algorithm for the CP decomposition (FFCP) of tensors based on their Tucker compression; and (2) a distributed randomized Tucker decomposition approach for arbitrarily big tensors with relatively low multilinear rank. Both algorithms can handle huge tensors, even dense ones. Extensive simulations provide empirical evidence of the validity and efficiency of the proposed algorithms.

Notes

We have assumed that each sub-tensor can be handled efficiently by its associated worker; otherwise, we increase the number of workers and decrease the size of each sub-tensor accordingly.

The performance index SIR is defined as \(\text{SIR}(\mathbf{a}_{r}^{(n)},\hat{\mathbf{a}}_{r}^{(n)})=20\log \left(\left\|\mathbf{a}_{r}^{(n)}-\hat{\mathbf{a}}_{r}^{(n)}\right\| / \left\|\mathbf{a}_{r}^{(n)}\right\|\right)\), where \(\hat{\mathbf{a}}_{r}^{(n)}\) is an estimate of \(\mathbf{a}_{r}^{(n)}\), and both \(\mathbf{a}_{r}^{(n)}\) and \(\hat{\mathbf{a}}_{r}^{(n)}\) are normalized to be zero-mean and unit-variance.
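As a rough illustration, the SIR index in this note can be computed with a few lines of NumPy. This is a minimal sketch rather than the authors' code: the function names `standardize` and `sir_db` are hypothetical, the logarithm is assumed to be base 10 (so the result reads in dB), and "unit-variance" is assumed to mean the population variance.

```python
import numpy as np


def standardize(v):
    """Return v shifted to zero mean and scaled to unit (population) variance."""
    v = np.asarray(v, dtype=float)
    return (v - v.mean()) / v.std()


def sir_db(a, a_hat):
    """SIR as written in the note: 20*log10(||a - a_hat|| / ||a||).

    Both vectors are standardized first. The base-10 logarithm is an
    assumption; the note only writes "log".
    """
    a = standardize(a)
    a_hat = standardize(a_hat)
    return 20.0 * np.log10(np.linalg.norm(a - a_hat) / np.linalg.norm(a))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.standard_normal(100)                  # reference component
    a_hat = a + 0.01 * rng.standard_normal(100)   # slightly perturbed estimate
    print(sir_db(a, a_hat))
```

With the formula taken exactly as written, a closer estimate gives a smaller (more negative) value, because the ratio inside the logarithm shrinks toward zero.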

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (NSFC) Grant 61673124, Grant 61973090, and Grant 61727810.

Cite this article

Qiu, Y., Zhou, G., Zhang, Y. et al. Canonical polyadic decomposition (CPD) of big tensors with low multilinear rank. Multimed Tools Appl 80, 22987–23007 (2021). https://doi.org/10.1007/s11042-020-08711-1

DOI: https://doi.org/10.1007/s11042-020-08711-1