Abstract

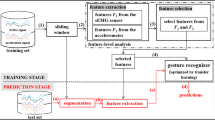

New interaction paradigms combined with emerging technologies have brought a diverse range of Natural User Interface (NUI) devices to the market. These devices recognize body gestures, allowing users to interact with applications in a more direct, expressive, and intuitive way. In particular, the Leap Motion Controller (LMC) has received considerable attention from NUI application developers because it allows them to address limitations on gestures made with the hands. Although the device can recognize the position of several parts of the hands, developers are still left with the difficult task of recognizing gestures. For this reason, several authors have approached the problem using machine learning techniques. We propose a classifier based on Approximate String Matching (ASM). In short, we encode the trajectories of the hand joints as character sequences using the K-means algorithm and then analyze these sequences with ASM. When applying K-means, we select the number of clusters for each part of the hands by considering the Silhouette Coefficient. We also define other important factors to take into account to improve recognition accuracy. For the experiments, we generated a balanced dataset containing different types of gestures and then evaluated the approach with a cross-validation scheme. The experimental results showed the robustness of the approach in terms of recognizing different types of gestures, time spent, and allocated memory. In addition, our approach achieved higher performance rates than well-known algorithms from the current state of the art in gesture recognition.
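The encode-then-match idea summarized in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the cluster centroids have already been obtained (for example with K-means), it handles a single hand joint, and it uses plain Levenshtein edit distance as a stand-in for the ASM step, whose exact variant the abstract does not specify. The names `encode`, `edit_distance`, `classify`, the centroid coordinates, and the gesture templates are all hypothetical.

```python
from math import dist


def encode(trajectory, centroids):
    """Map each 3D point to the letter of its nearest centroid."""
    letters = []
    for point in trajectory:
        nearest = min(range(len(centroids)),
                      key=lambda i: dist(point, centroids[i]))
        letters.append(chr(ord("a") + nearest))
    return "".join(letters)


def edit_distance(s, t):
    """Levenshtein distance, computed row by row."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        curr = [i]
        for j, ct in enumerate(t, start=1):
            cost = 0 if cs == ct else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def classify(sequence, templates):
    """Return the label whose template string is closest to the sequence."""
    return min(templates,
               key=lambda label: edit_distance(sequence, templates[label]))


# Assumed, hand-picked centroids; in practice they would come from clustering.
centroids = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]

# Hypothetical gesture templates, already encoded as character sequences.
templates = {"swipe_right": "aabb", "corner": "abcc"}

# A short, made-up trajectory of one joint.
trajectory = [(0.1, 0.0, 0.0), (0.8, 0.1, 0.0),
              (0.9, 0.2, 0.0), (1.0, 0.9, 0.0)]

sequence = encode(trajectory, centroids)
label = classify(sequence, templates)
print(sequence, label)
```

Because matching operates on short strings rather than raw coordinates, a sequence that differs from a template by a few insertions, deletions, or substitutions can still be assigned to it, which is the tolerance that approximate matching provides.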

Acknowledgements

We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan Xp GPU used for this research.

About this article

Cite this article

Alonso, D.G., Teyseyre, A., Soria, A. et al. Hand gesture recognition in real world scenarios using approximate string matching. Multimed Tools Appl 79, 20773–20794 (2020). https://doi.org/10.1007/s11042-020-08913-7

DOI: https://doi.org/10.1007/s11042-020-08913-7